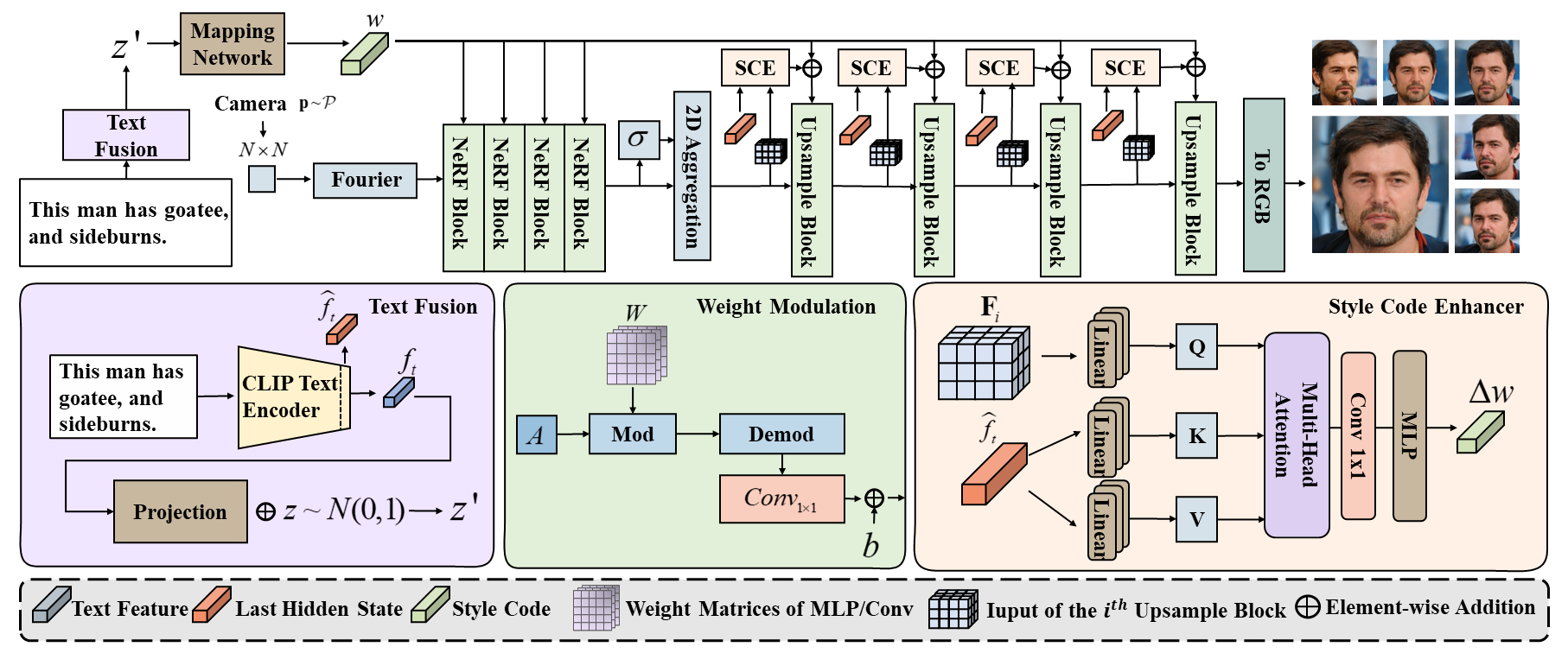

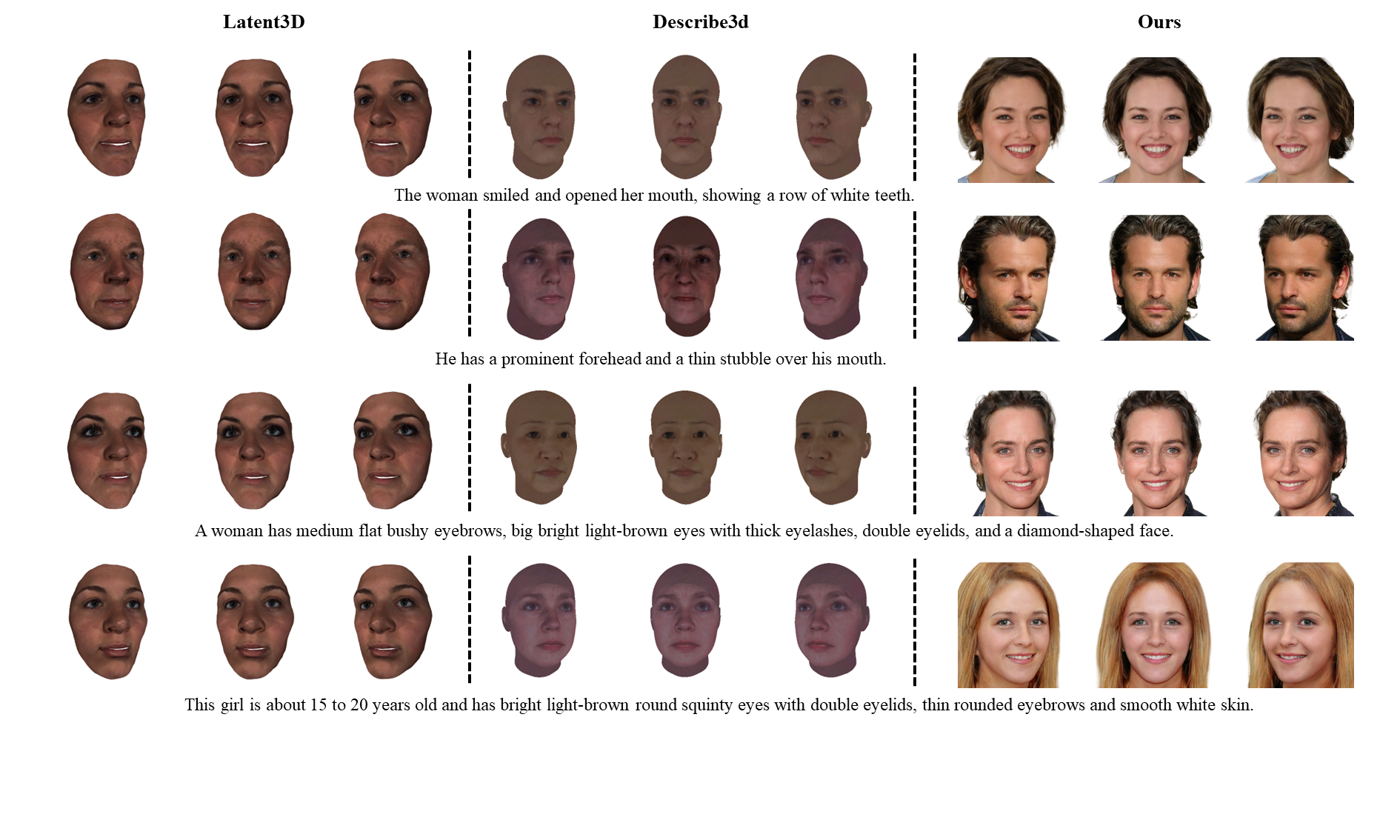

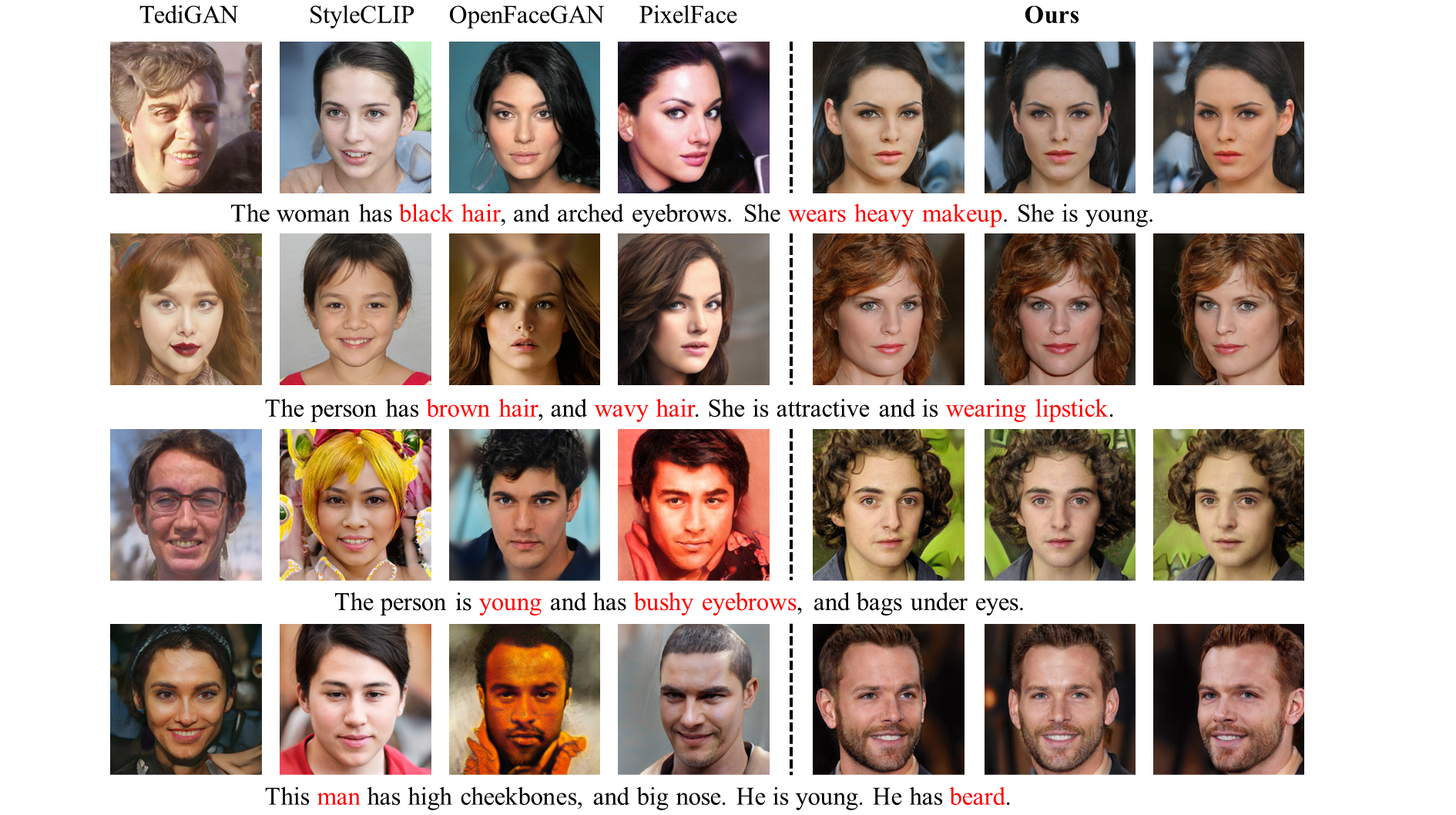

This is the official implementation of the ICML 2024 paper "Fast Text-to-3D-Aware Face Generation and Manipulation via Direct Cross-modal Mapping and Geometric Regularization". The proposed

The codebase is tested on:

- Python 3.7

- PyTorch 1.7.1
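To confirm that an environment matches the tested versions above, a small check like the following may help. It is a minimal sketch: the helper names (`parse_version`, `matches_tested`, `python_matches_tested`) are illustrative and not part of this repository, and a mismatch does not necessarily mean the code will fail.

```python
import sys

# Versions listed in this README as tested.
TESTED_PYTHON = (3, 7)
TESTED_TORCH = "1.7.1"


def parse_version(text):
    """Turn a string such as '1.7.1+cu110' into the tuple (1, 7, 1)."""
    core = text.split("+")[0]  # drop a local build suffix like '+cu110'
    parts = []
    for piece in core.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def matches_tested(installed, tested):
    """True when the installed release number equals the tested one."""
    return parse_version(installed) == parse_version(tested)


def python_matches_tested():
    """True when the running interpreter is the tested major.minor version."""
    return tuple(sys.version_info[:2]) == TESTED_PYTHON


# Example usage (requires PyTorch to be installed):
# import torch
# print(python_matches_tested(), matches_tested(torch.__version__, TESTED_TORCH))
```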

Install the additional Python libraries with:

pip install -r requirements.txt

Please refer to https://github.com/NVlabs/stylegan2-ada-pytorch for additional software/hardware requirements.

Tip

The clip package has been modified to extract text features and token embeddings simultaneously. Please replace the existing model.py file in your clip installation path with the modified version.
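One way to find and replace the installed file is sketched below. It assumes the package is importable under the name `clip`, and the path to the modified `model.py` is a placeholder; both helper functions are illustrative and not part of this repository. The original file is kept as a `.bak` copy so the change can be undone.

```python
import importlib.util
import shutil
from pathlib import Path


def find_package_file(package_name, filename):
    """Return the path of `filename` inside an installed package, or None."""
    spec = importlib.util.find_spec(package_name)
    if spec is None or not spec.submodule_search_locations:
        return None
    for location in spec.submodule_search_locations:
        candidate = Path(location) / filename
        if candidate.is_file():
            return candidate
    return None


def replace_with_backup(target, replacement):
    """Copy `replacement` over `target`, keeping the original as '<name>.bak'."""
    target = Path(target)
    backup = target.with_name(target.name + ".bak")
    if not backup.exists():  # never overwrite an earlier backup
        shutil.copy2(str(target), str(backup))
    shutil.copy2(str(replacement), str(target))
    return backup


# Example usage (the package name and source path are assumptions):
# installed = find_package_file("clip", "model.py")
# if installed is not None:
#     replace_with_backup(installed, "path/to/modified/model.py")
```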

We train our

Before training, please download dataset2.json and place it in the MMceleba dataset directory.
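Before launching a long training run, it can be worth confirming that the file is where it is expected and parses as JSON. The sketch below does only that; the function name and the example directory are hypothetical, and nothing is assumed about the structure of the file's contents.

```python
import json
from pathlib import Path


def check_dataset_json(dataset_dir, filename="dataset2.json"):
    """Verify that the JSON file exists in `dataset_dir` and can be parsed.

    Returns the file path and the name of the top-level JSON type.
    """
    path = Path(dataset_dir) / filename
    if not path.is_file():
        raise FileNotFoundError("expected {} in {}".format(filename, dataset_dir))
    with path.open("r", encoding="utf-8") as handle:
        data = json.load(handle)  # raises json.JSONDecodeError on a corrupt file
    return path, type(data).__name__


# Example usage (the directory is a placeholder):
# print(check_dataset_json("path/to/MMceleba"))
```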

The model weights can be downloaded here.

To train the model, use the shell script:

bash train_train_4_E3_Face.sh

Please check the configuration files in conf/model and conf/spec. You can always add your own model config. For more details on using Hydra configuration, see https://hydra.cc/docs/intro/.

To evaluate the model, use the shell script:

bash run_eval_4_E3_Face.sh

To generate samples, use the shell script:

bash sample.sh

If you find this work useful, please cite:

@InProceedings{zhang2024fast,

title = {Fast Text-to-3{D}-Aware Face Generation and Manipulation via Direct Cross-modal Mapping and Geometric Regularization},

author = {Zhang, Jinlu and Zhou, Yiyi and Zheng, Qiancheng and Du, Xiaoxiong and Luo, Gen and Peng, Jun and Sun, Xiaoshuai and Ji, Rongrong},

booktitle = {Proceedings of the 41st International Conference on Machine Learning},

pages = {60605--60625},

year = {2024},

editor = {Salakhutdinov, Ruslan and Kolter, Zico and Heller, Katherine and Weller, Adrian and Oliver, Nuria and Scarlett, Jonathan and Berkenkamp, Felix},

volume = {235},

series = {Proceedings of Machine Learning Research},

month = {21--27 Jul},

publisher = {PMLR},

pdf = {https://raw.githubusercontent.com/mlresearch/v235/main/assets/zhang24cp/zhang24cp.pdf},

url = {https://proceedings.mlr.press/v235/zhang24cp.html},

}

This project builds largely on StyleNeRF. Thanks to its authors for their amazing work!