Fork of nanoGPT for experimenting with curriculum learning.

pip install torch numpy transformers datasets tiktoken wandb tqdm

Dependencies:

- pytorch <3

- numpy <3

- transformers for huggingface transformers <3

- datasets for huggingface datasets <3 (if you want to download + preprocess Wikipedia dataset)

- tiktoken for OpenAI's fast BPE code <3

- wandb for optional logging <3

- tqdm for progress bars <3

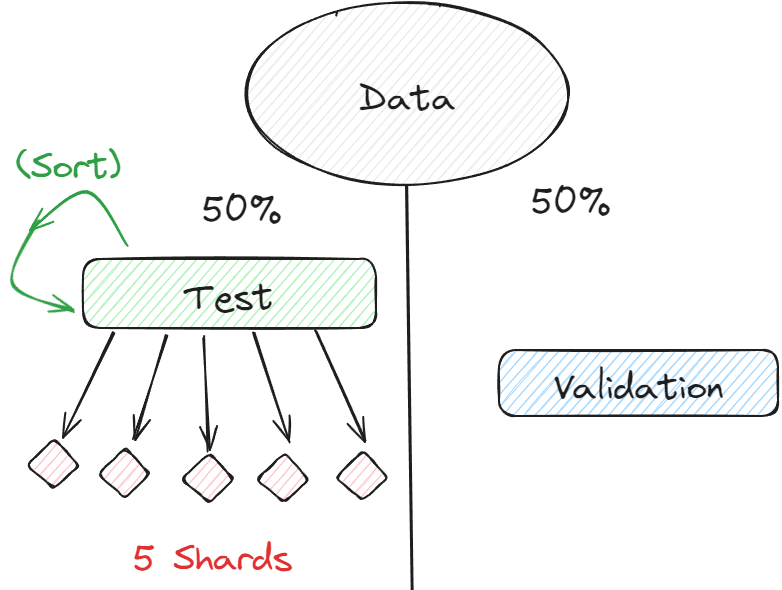

$ python data/sorted_wikipedia_dataset/prepare.py

This creates five shards and val.bin in that data directory.
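To check what the prepare step produced, a small script along these lines can list the token count of each .bin file. This is only a sketch: it assumes the files are flat arrays of uint16 token ids, as in upstream nanoGPT, which this README does not confirm for the fork. The helper names `count_tokens` and `summarize` are made up for illustration.

```python
from pathlib import Path

import numpy as np


def count_tokens(bin_path, dtype=np.uint16):
    """Return the number of tokens in a flat binary token file.

    Assumption: the file is a raw array of `dtype` values with no header
    (upstream nanoGPT writes uint16; this fork may differ).
    """
    # memmap reads the file lazily, so large shards are not loaded into RAM
    tokens = np.memmap(bin_path, dtype=dtype, mode="r")
    return len(tokens)


def summarize(data_dir):
    """Map each .bin file name in data_dir to its token count."""
    return {p.name: count_tokens(p) for p in sorted(Path(data_dir).glob("*.bin"))}


if __name__ == "__main__":
    for name, n in summarize("data/sorted_wikipedia_dataset").items():
        print(f"{name}: {n:,} tokens")
```

Knowing the token count per shard may help when deciding how many iterations to train on each one.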

Now rename the shard you want to train on to train.bin, and remember to change num_ters, or else training will not run.
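The shard selection step could be scripted roughly as follows. This sketch copies the chosen shard to train.bin instead of renaming it, so the original shard file stays in place for later runs; that is a deliberate deviation from the rename described above. The function name `select_shard` and the shard file name in the usage line are hypothetical, since this README does not state what the shards are called.

```python
import shutil
from pathlib import Path


def select_shard(data_dir, shard_name, target_name="train.bin"):
    """Copy one shard to train.bin inside data_dir and return the new path.

    Copying (rather than renaming) keeps the original shard untouched.
    """
    data_dir = Path(data_dir)
    src = data_dir / shard_name
    if not src.is_file():
        raise FileNotFoundError(f"shard not found: {src}")
    dst = data_dir / target_name
    # Overwrites any train.bin left over from a previous shard
    shutil.copyfile(src, dst)
    return dst


if __name__ == "__main__":
    # "shard_0.bin" is a placeholder; use the name prepare.py actually wrote
    select_shard("data/sorted_wikipedia_dataset", "shard_0.bin")
```

The iteration setting in the config still has to be updated by hand for each shard.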

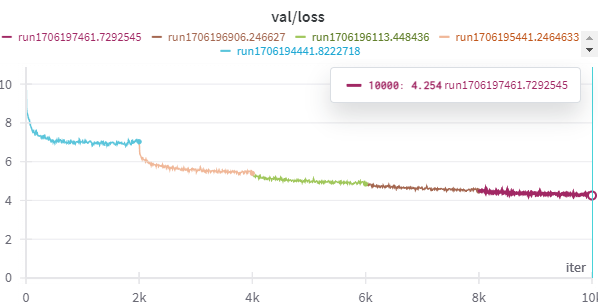

$ python train.py config/train_wikipedia_shards.py