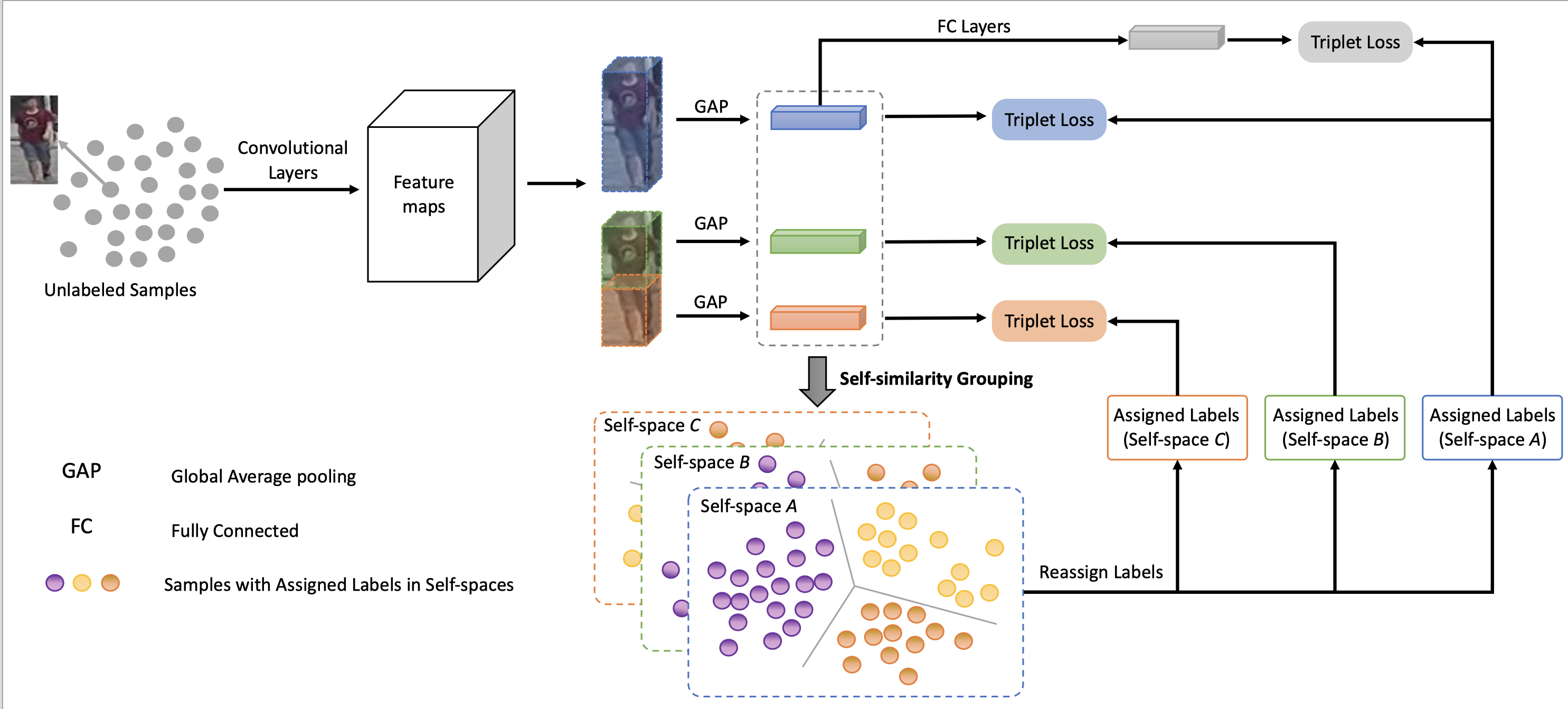

Self-similarity Grouping: A Simple Unsupervised Cross Domain Adaptation Approach for Person Re-identification (SSG)

Implementation of the paper Self-similarity Grouping: A Simple Unsupervised Cross Domain Adaptation Approach for Person Re-identification, ICCV 2019 (Oral)

The SSG approach proposed in the paper is simple yet effective and achieves state-of-the-art results on three re-ID datasets: Market1501, DukeMTMC and MSMT17.

Run source_train.py via

    python source_train.py \
      --dataset <name_of_source_dataset> \
      --resume <dir_of_source_trained_model> \
      --data_dir <dir_of_source_data> \
      --logs_dir <dir_to_save_source_trained_model>

To replicate the results in the paper, you can download the pre-trained models on Market1501, DukeMTMC and MSMT17 from GoogleDrive. There may be some bugs in source_train.py; please refer to DomainAdaptiveReID to obtain the pretrained model, or just use the pretrained model provided by us. You can also find all models after adaptation on GoogleDrive. Our models can be trained with PyTorch 0.4.1 or PyTorch 1.0.

Run selftraining.py via

    python selftraining.py \
      --src_dataset <name_of_source_dataset> \
      --tgt_dataset <name_of_target_dataset> \
      --resume <dir_of_source_trained_model> \
      --iteration <number of iteration> \
      --data_dir <dir_of_source_target_data> \
      --logs_dir <dir_to_save_model_after_adaptation> \
      --gpu-devices <gpu ids> \
      --num-split <number of split>

Or simply run

    ./run.sh

Run semitraining.py via

    python semitraining.py \
      --src_dataset <name_of_source_dataset> \
      --tgt_dataset <name_of_target_dataset> \
      --resume <dir_of_source_trained_model> \
      --iteration <number of iteration> \
      --data_dir <dir_of_source_target_data> \
      --logs_dir <dir_to_save_model_after_adaptation> \
      --gpu-devices <gpu ids> \
      --num-split <number of split> \
      --sample <sample method>

| Source Dataset | Rank-1 | mAP |
|---|---|---|
| DukeMTMC | 82.6 | 70.5 |
| Market1501 | 92.5 | 80.8 |
| MSMT17 | 73.6 | 48.6 |

| SRC --> TGT | Before Adaptation Rank-1 | Before Adaptation mAP | SSG Rank-1 | SSG mAP | SSG++ Rank-1 | SSG++ mAP |
|---|---|---|---|---|---|---|
| Market1501 --> DukeMTMC | 30.5 | 16.1 | 73.0 | 53.4 | 76.0 | 60.3 |
| DukeMTMC --> Market1501 | 54.6 | 26.6 | 80.0 | 58.3 | 86.2 | 68.7 |
| Market1501 --> MSMT17 | 8.6 | 2.7 | 31.6 | 13.2 | 37.6 | 16.6 |
| DukeMTMC --> MSMT17 | 12.38 | 3.82 | 32.2 | 13.3 | 41.6 | 18.3 |
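The tables above report Rank-1 and mAP in percent. For readers unfamiliar with these metrics, here is a minimal sketch of how they are commonly computed from a query-to-gallery distance matrix. The function name `rank1_and_map` and its inputs are hypothetical and not taken from this repository, and the sketch is simplified: it omits the same-camera filtering that standard re-ID evaluation protocols usually apply, so it will not reproduce the numbers above exactly.

```python
from typing import List, Sequence, Tuple


def rank1_and_map(
    dist: Sequence[Sequence[float]],
    query_ids: Sequence[int],
    gallery_ids: Sequence[int],
) -> Tuple[float, float]:
    """Return (Rank-1 accuracy, mAP) as fractions in [0, 1].

    dist[q][g] is the distance between query q and gallery image g.
    Hypothetical helper for illustration; no camera-based filtering.
    """
    rank1_hits = 0
    average_precisions: List[float] = []
    for q, row in enumerate(dist):
        # Gallery indices ordered from smallest to largest distance.
        order = sorted(range(len(row)), key=lambda g: row[g])
        matches = [gallery_ids[g] == query_ids[q] for g in order]
        if not any(matches):
            continue  # identity not present in the gallery: skip this query
        rank1_hits += int(matches[0])
        hits = 0
        precision_sum = 0.0
        for rank, is_match in enumerate(matches, start=1):
            if is_match:
                hits += 1
                precision_sum += hits / rank  # precision at this recall point
        average_precisions.append(precision_sum / hits)
    n = len(average_precisions)
    if n == 0:
        raise ValueError("no query has a matching gallery identity")
    return rank1_hits / n, sum(average_precisions) / n


if __name__ == "__main__":
    toy_dist = [[0.1, 0.9, 0.5], [0.8, 0.2, 0.3]]
    r1, mean_ap = rank1_and_map(toy_dist, query_ids=[1, 1], gallery_ids=[1, 2, 1])
    print(f"Rank-1: {100 * r1:.1f}  mAP: {100 * mean_ap:.1f}")
```

In the toy example, the first query retrieves a correct match at the top rank while the second does not, so Rank-1 is 50%; mAP additionally rewards the second query for ranking both of its correct matches directly after the single wrong one.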

- The pre-trained model was trained with PyTorch 0.4.1, so errors may occur when loading it with a newer PyTorch version. This link should be helpful.

- source_train.py may have some bugs; I suggest directly using our pretrained baseline model. I will fix the bugs soon.

- To reproduce the results listed in the paper, I recommend using two GPUs with a batch size of 32. In general, the experimental results may differ slightly from those listed in the paper (+/-1%).

Our code is based on open-reid and DomainAdaptiveReID.