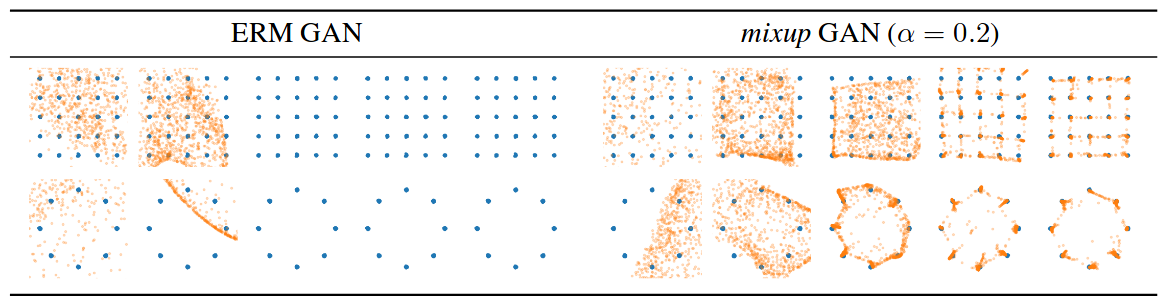

This repository contains demo PyTorch reimplementations of the CIFAR-10 training code and the GAN experiment from the following paper:

Hongyi Zhang, Moustapha Cisse, Yann N. Dauphin and David Lopez-Paz. mixup: Beyond Empirical Risk Minimization. https://arxiv.org/abs/1710.09412
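The paper trains on convex combinations of example pairs: x̃ = λ·x_i + (1 − λ)·x_j and ỹ = λ·y_i + (1 − λ)·y_j, with λ drawn from Beta(α, α). The snippet below is a minimal NumPy sketch of that batch-level augmentation. It is not the code in this repository. The function name `mixup_batch`, the one-hot label layout and the default `alpha=1.0` are illustrative assumptions.

```python
import numpy as np

def mixup_batch(x, y_onehot, alpha=1.0, rng=None):
    """Return a mixup-augmented copy of one mini-batch (illustrative sketch).

    x:        array of shape (batch, ...) holding the inputs
    y_onehot: array of shape (batch, num_classes) holding one-hot labels
    alpha:    Beta(alpha, alpha) concentration (assumed default); alpha <= 0 disables mixing
    """
    rng = np.random.default_rng() if rng is None else rng
    # Draw a single mixing coefficient lambda for the whole batch.
    lam = float(rng.beta(alpha, alpha)) if alpha > 0 else 1.0
    # Pair every example with a randomly chosen partner from the same batch.
    perm = rng.permutation(x.shape[0])
    x_mix = lam * x + (1.0 - lam) * x[perm]
    y_mix = lam * y_onehot + (1.0 - lam) * y_onehot[perm]
    return x_mix, y_mix, lam

# Usage on a random CIFAR-sized batch (8 images, 3x32x32, 10 classes).
rng = np.random.default_rng(0)
x = rng.normal(size=(8, 3, 32, 32)).astype(np.float32)
labels = rng.integers(0, 10, size=8)
y = np.eye(10, dtype=np.float32)[labels]
x_mix, y_mix, lam = mixup_batch(x, y, alpha=1.0, rng=rng)
```

Because cross-entropy is linear in the target distribution, training on the soft labels `y_mix` gives the same loss as `lam * CE(pred, y_i) + (1 - lam) * CE(pred, y_j)`. That is why implementations often keep integer labels and mix the two loss terms instead.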

The following table shows the median test error over the last 10 epochs of a 200-epoch training run. (Please refer to Section 3.2 of the paper for details.)

| Model | weight decay = 1e-4 | weight decay = 5e-4 |
|---|---|---|
| ERM | 5.53% | 5.18% |
| mixup | 4.24% | 4.68% |
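The reported metric can be reproduced from a per-epoch log of test errors as sketched below. The helper `final_test_error` and the log values are hypothetical and exist only to illustrate the "median of the last 10 epochs" rule. They are not results from this repository.

```python
from statistics import median

def final_test_error(per_epoch_errors, last_k=10):
    """Median test error (in %) over the final `last_k` epochs of a run."""
    if len(per_epoch_errors) < last_k:
        raise ValueError("need at least last_k epochs of results")
    return median(per_epoch_errors[-last_k:])

# Hypothetical log: one test-error value (%) per epoch of a 200-epoch run.
errors = [10.0] * 190 + [4.3, 4.2, 4.25, 4.1, 4.3, 4.2, 4.15, 4.4, 4.2, 4.22]
print(final_test_error(errors))
```

Using the median rather than the final epoch makes the reported number less sensitive to epoch-to-epoch fluctuations at the end of training.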

- A TensorFlow implementation of mixup that reproduces our results in tensorpack
- Official Facebook implementation of the CIFAR-10 experiments

The CIFAR-10 reimplementation of mixup is adapted from the pytorch-cifar repository by kuangliu.