Quantviz is a tool that runs different quantization simulations and writes the results to a CSV file, which can be viewed with a visualization tool.

- FP8 (Ideas taken from FP8 simulation - Qualcomm)

- VS-Quant (Ideas taken from Nvidia)

- The tool requires that the tensors to be run through quantization simulation are saved in “ot” files. If you have “pt” files, you can run the executables “pt_to_npz” and “npz_to_ot” to convert them to “ot” files.

dune exec pt_to_npz

dune exec npz_to_ot

- In the example below:

- Weights are saved as “layer_variables.ot” tensors

- Inputs are saved as “inputs.ot” tensors

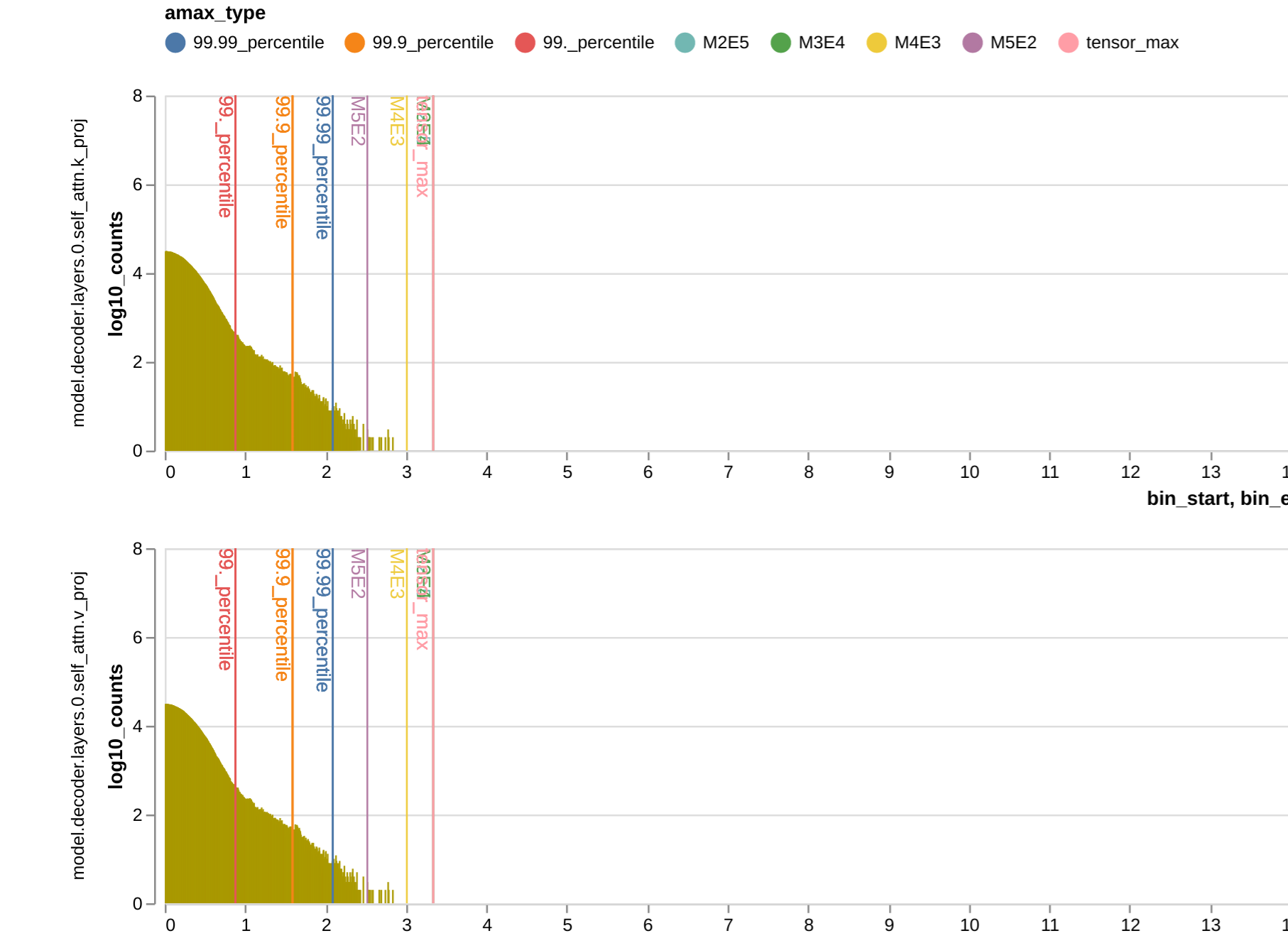

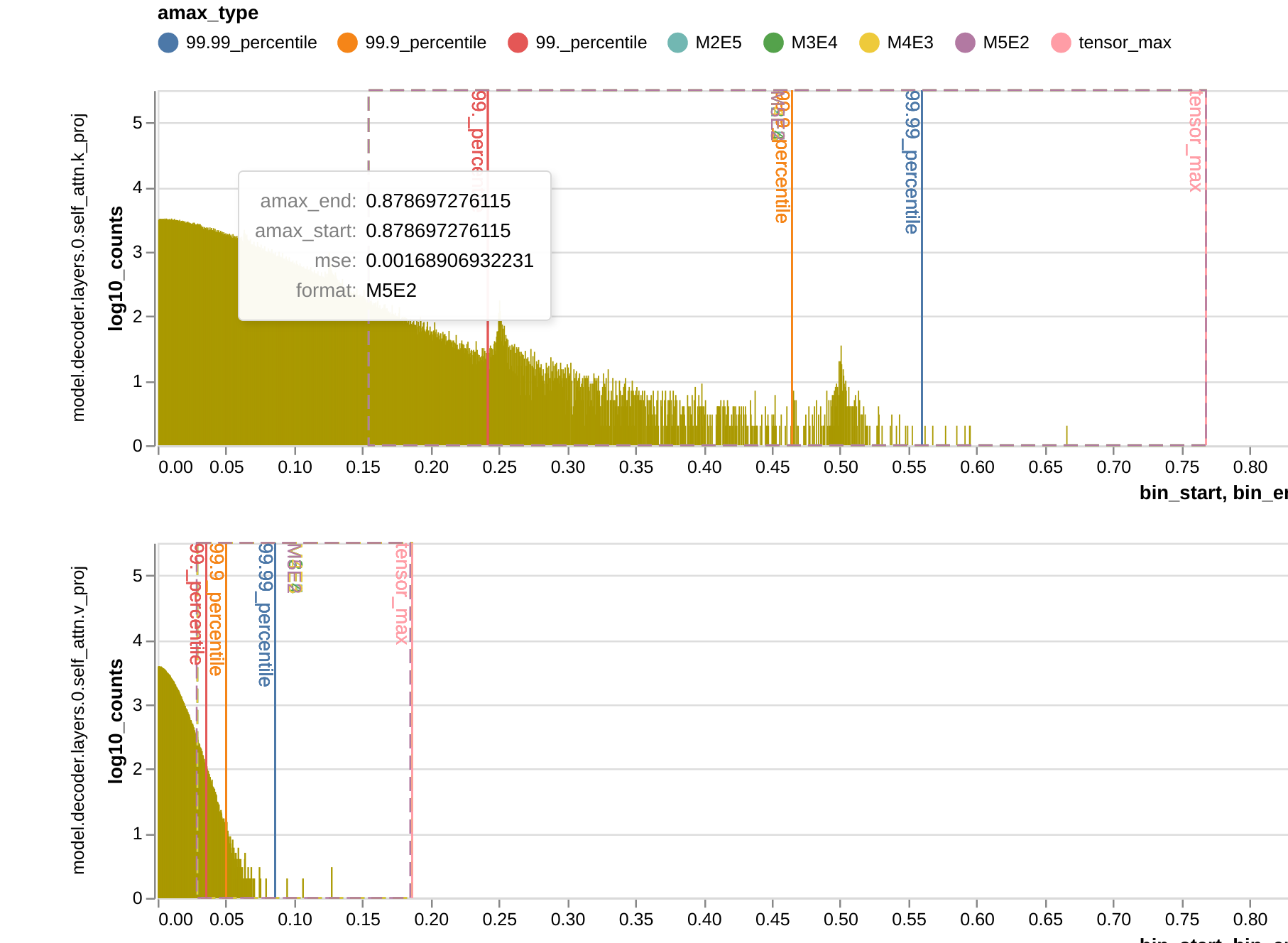

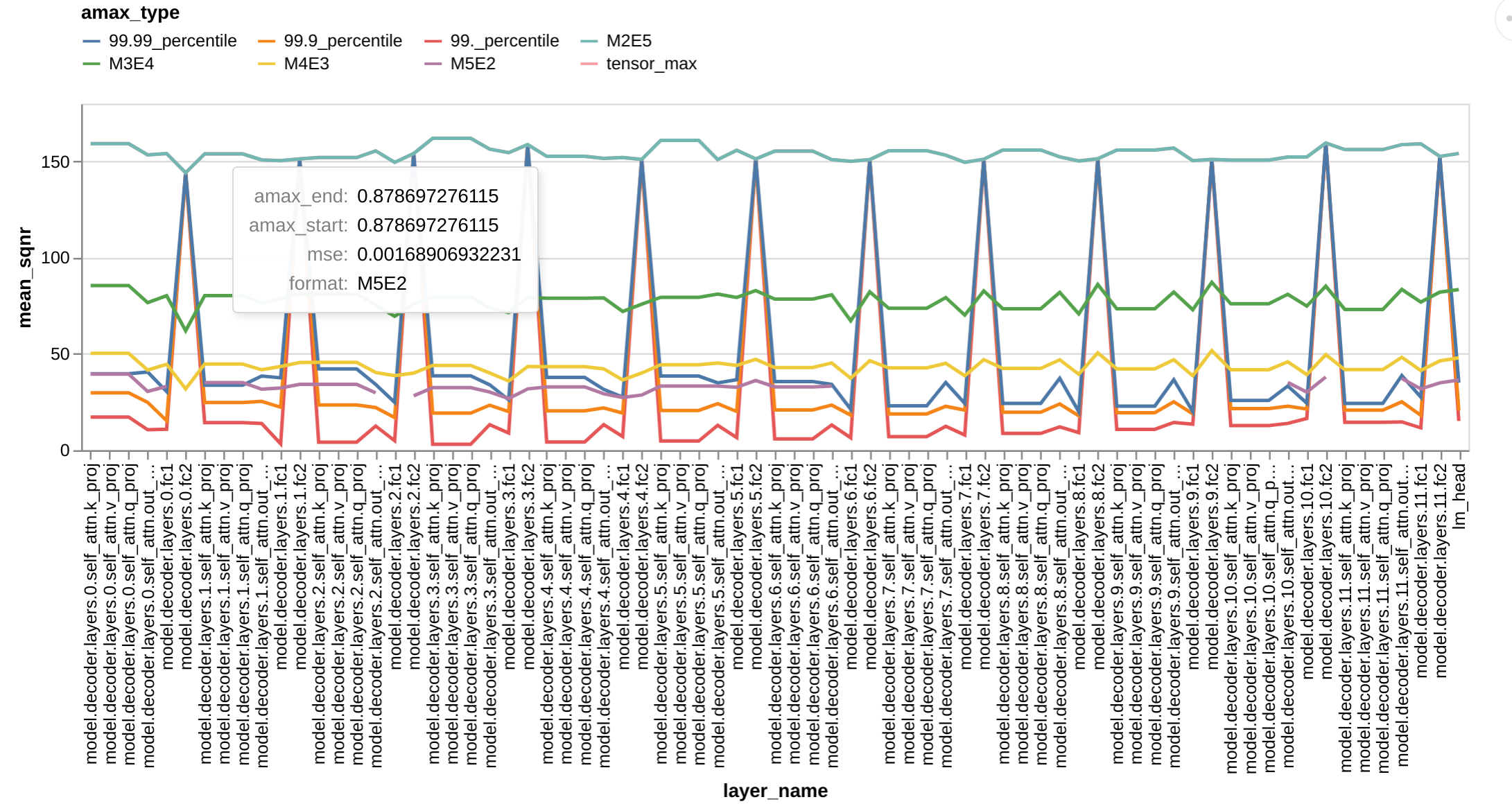

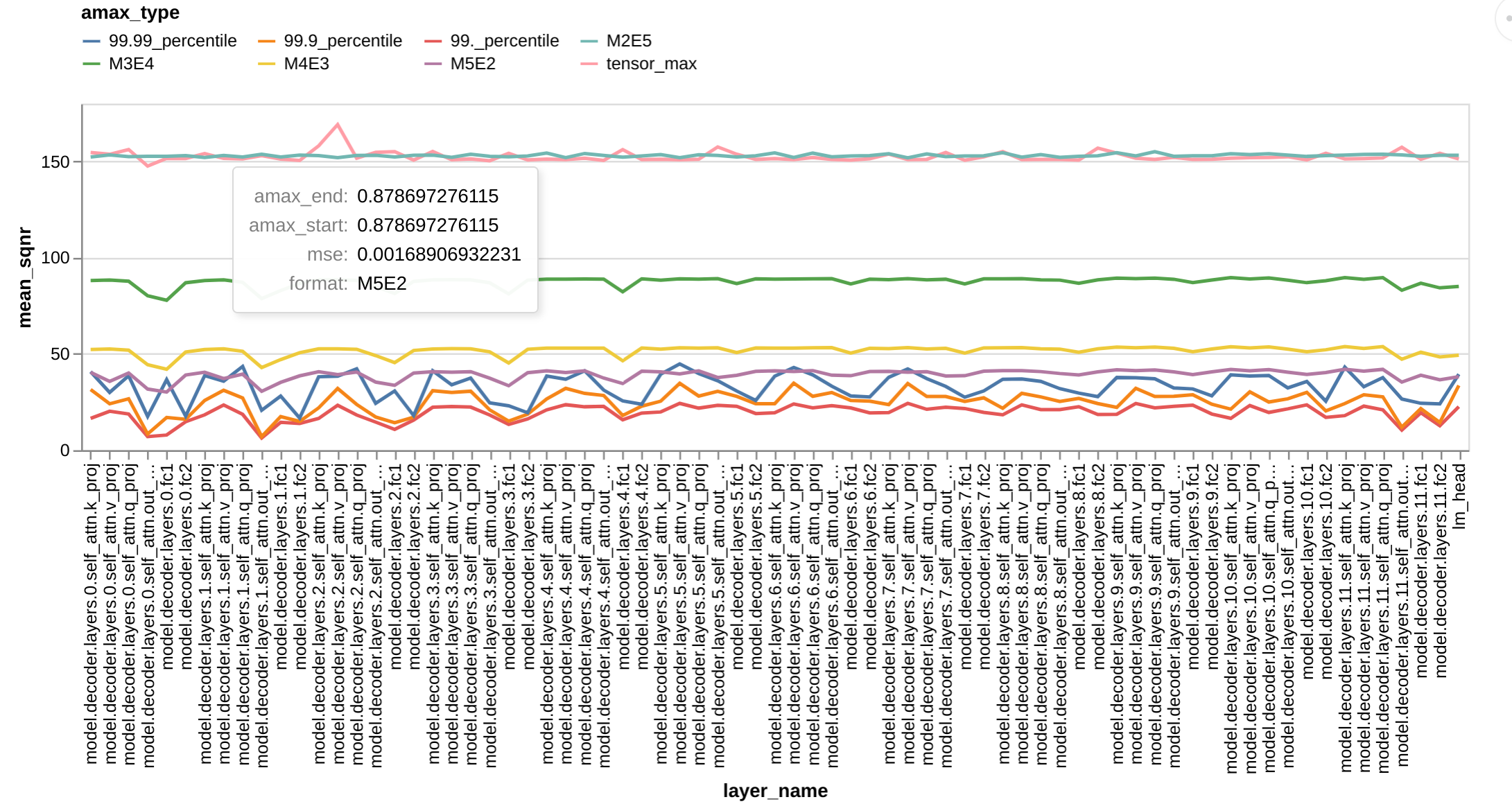

- Runs calibration using tensor_max, the 99.9th percentile, the 99.99th percentile, and an optimal calibration point found by minimizing MSE (Mean Squared Error)

- Finds the calibration point that gives the lowest MSE

- In per-channel quantization, the same steps listed above are done, but each channel gets its own calibration point for quantization
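The calibration steps above can be sketched roughly as follows. This is a minimal illustration, not the tool's actual implementation: the symmetric integer quantizer is only a stand-in for whichever format is being simulated, and the names `fake_quant`, `calibrate`, `calibrate_per_channel` and the `sweep_steps` grid are assumptions made for this example.

```python
import math

def fake_quant(x, clip, bits=8):
    """Quantize-dequantize with a symmetric integer grid (stand-in quantizer)."""
    qmax = 2 ** (bits - 1) - 1
    scale = clip / qmax
    q = max(-qmax, min(qmax, round(x / scale)))  # values beyond clip saturate
    return q * scale

def mse(values, clip, bits=8):
    """Mean squared error between the values and their quantized versions."""
    return sum((v - fake_quant(v, clip, bits)) ** 2 for v in values) / len(values)

def percentile_abs(values, pct):
    """Nearest-rank percentile of the absolute values."""
    mags = sorted(abs(v) for v in values)
    rank = max(1, math.ceil(pct / 100.0 * len(mags)))
    return mags[rank - 1]

def calibrate(values, bits=8, sweep_steps=20):
    """Return (name, clip, error) for the candidate with the lowest MSE."""
    tmax = max(abs(v) for v in values)
    if tmax == 0:
        return "tensor_max", 0.0, 0.0
    candidates = {
        "tensor_max": tmax,
        "p99.9": percentile_abs(values, 99.9),
        "p99.99": percentile_abs(values, 99.99),
    }
    # Hypothetical search grid: evenly spaced fractions of the tensor max.
    for i in range(1, sweep_steps + 1):
        candidates[f"sweep_{i}"] = tmax * i / sweep_steps
    errors = {n: mse(values, c, bits) for n, c in candidates.items() if c > 0}
    best = min(errors, key=errors.get)
    return best, candidates[best], errors[best]

def calibrate_per_channel(channels, bits=8):
    """Per-channel variant: each channel gets its own calibration point."""
    return [calibrate(ch, bits) for ch in channels]
```

With an outlier-heavy tensor, a clipping point below the tensor maximum will often give a lower MSE, which is the reason for comparing several candidates instead of always using the maximum.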

- You can change the defaults by passing command line arguments. You can see the available command line arguments by using “--help” on the executable.

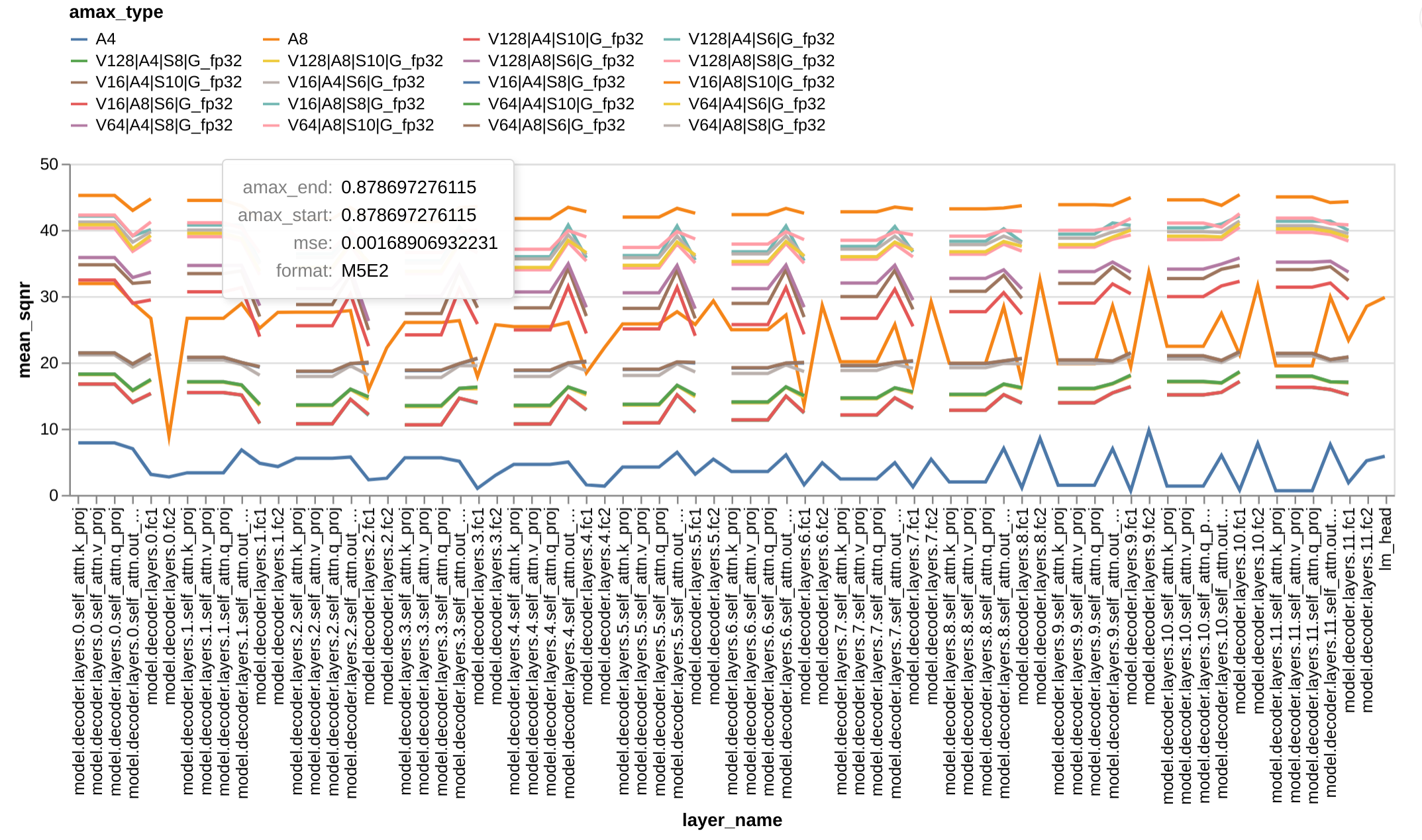

- FP8 formats investigated: M5E2, M4E3, M3E4, M2E5
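To make the format names concrete, here is a rough sketch of rounding a value to an 8-bit float with a given number of mantissa (M) and exponent (E) bits, plus one sign bit. It is an illustration under stated assumptions, not the tool's code: it assumes an IEEE-style bias of 2^(E-1) - 1, subnormals, and no exponent codes reserved for infinity or NaN. The function names `fp_round` and `fp8_fake_quant` are made up for this example.

```python
import math

def fp_max(m_bits, e_bits):
    """Largest representable magnitude under the assumptions above."""
    bias = 2 ** (e_bits - 1) - 1
    max_exp = (2 ** e_bits - 1) - bias  # assumes no codes reserved for inf/NaN
    return (2 - 2.0 ** -m_bits) * 2.0 ** max_exp

def fp_round(x, m_bits, e_bits):
    """Round x to the nearest value representable with m_bits/e_bits."""
    if x == 0:
        return 0.0
    bias = 2 ** (e_bits - 1) - 1
    min_exp = 1 - bias                   # smallest normal exponent
    a = min(abs(x), fp_max(m_bits, e_bits))  # saturate instead of overflowing
    exp = math.frexp(a)[1] - 1           # floor(log2(a))
    exp = max(exp, min_exp)              # below min_exp the grid is subnormal
    step = math.ldexp(1.0, exp - m_bits) # spacing between neighbors
    q = round(a / step) * step
    return math.copysign(q, x)

def fp8_fake_quant(x, clip, m_bits, e_bits):
    """Map the calibration point `clip` onto the format maximum, round, map back."""
    scale = fp_max(m_bits, e_bits) / clip
    return fp_round(x * scale, m_bits, e_bits) / scale

for name, (m, e) in {"M5E2": (5, 2), "M4E3": (4, 3), "M3E4": (3, 4), "M2E5": (2, 5)}.items():
    print(name, "max:", fp_max(m, e), "0.3 ->", fp_round(0.3, m, e))
```

More mantissa bits give finer spacing between neighboring values, while more exponent bits give a wider dynamic range; sweeping the four formats explores that trade-off.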

Use per-channel quantization for weight tensors:

dune exec quantviz -- fp8 data/artifacts/opt125m/fp32/layers layer_variables -c 1

Use per-tensor quantization for input tensors:

dune exec quantviz -- fp8 data/arul/data/artifacts/opt125m/fp32/layers inputs

- There is a two-level scaling implementation, just as the VS-Quant paper describes.

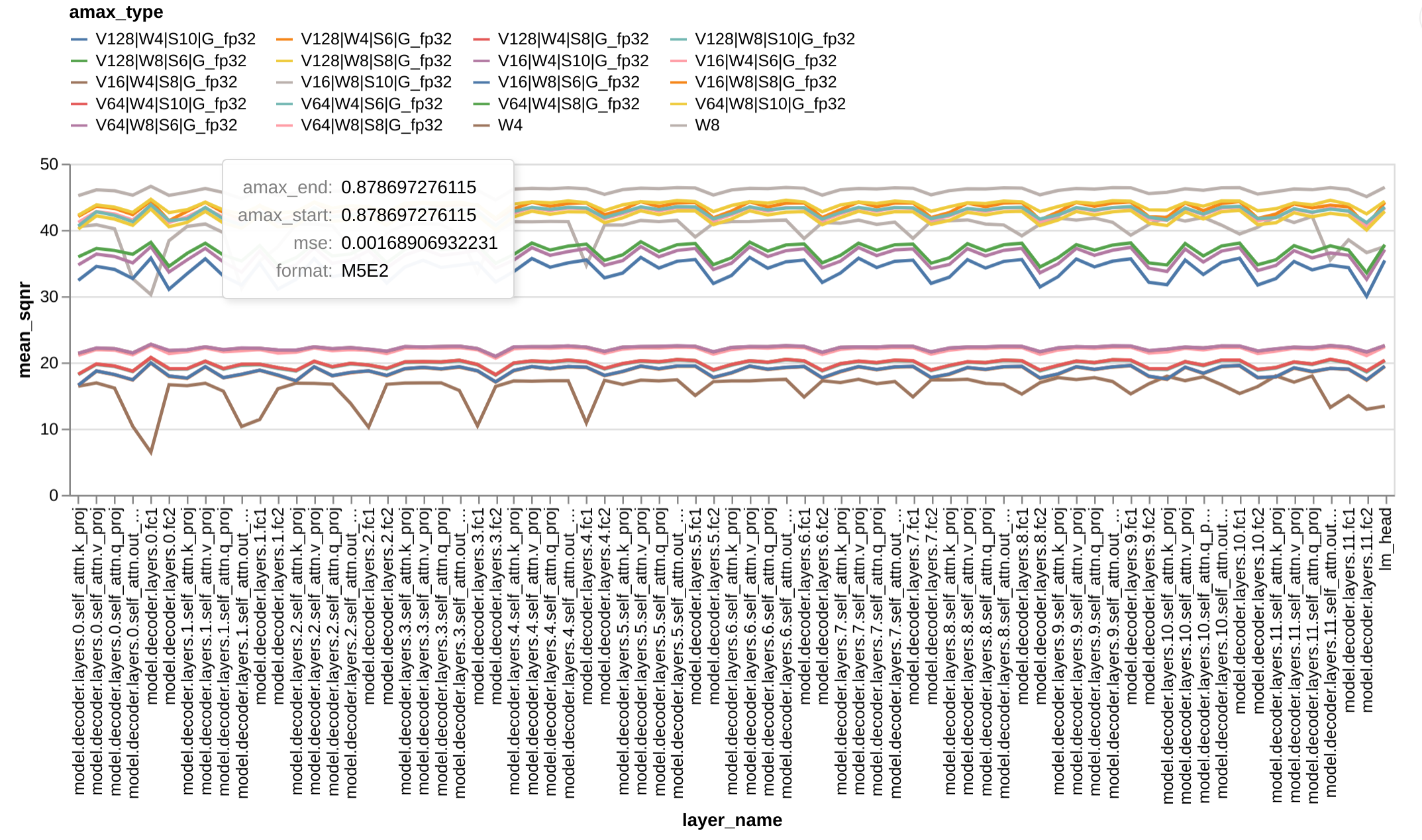

- Vector sizes swept by default are: 16, 64, 128

- Level 1 quantization bitwidths swept are: 4, 8

- Level 2 quantization bitwidths swept are: 6, 10

- For weight tensors, you can enable per-channel quantization by passing the channel dimension with “-c <cdim>”
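As a rough illustration of two-level scaling, the sketch below quantizes a flat list of values in fixed-size vectors. Level 1 gives each vector its own scale for the element bitwidth; level 2 quantizes those per-vector scales to unsigned integers that share one coarse floating-point scale. This is a simplified reading of the idea, not the tool's implementation: the function name `vsq_fake_quant`, the use of round-to-nearest for the scales, and the flat-list layout are all assumptions.

```python
def vsq_fake_quant(values, vec_size=16, elem_bits=4, scale_bits=6):
    """Quantize-dequantize `values` with per-vector, two-level scaling."""
    qmax = 2 ** (elem_bits - 1) - 1   # signed range for elements (level 1)
    smax = 2 ** scale_bits - 1        # unsigned range for scales (level 2)
    vectors = [values[i:i + vec_size] for i in range(0, len(values), vec_size)]

    # Level 1: one floating-point scale per vector.
    fp_scales = [max(abs(v) for v in vec) / qmax for vec in vectors]
    top = max(fp_scales)
    if top == 0:
        return [0.0] * len(values)

    # Level 2: integer per-vector scales sharing one coarse scale gamma.
    gamma = top / smax
    out = []
    for vec, s in zip(vectors, fp_scales):
        s_int = min(smax, max(1, round(s / gamma)))  # keep at least 1 to avoid a zero scale
        eff = s_int * gamma                          # effective per-vector scale
        for v in vec:
            q = max(-qmax, min(qmax, round(v / eff)))
            out.append(q * eff)
    return out

# Sweep the default settings listed above on a small synthetic tensor.
data = [((i * 37) % 101 - 50) / 50.0 for i in range(256)]
for vec_size in (16, 64, 128):
    for elem_bits in (4, 8):
        for scale_bits in (6, 10):
            deq = vsq_fake_quant(data, vec_size, elem_bits, scale_bits)
            err = sum((a - b) ** 2 for a, b in zip(data, deq)) / len(data)
            print(vec_size, elem_bits, scale_bits, err)
```

Smaller vectors track local ranges more closely but need more scale factors, which is why the scale bitwidth is swept alongside the vector size.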

For an input tensor:

dune exec quantviz -- vsq data/arul/data/artifacts/opt125m/fp32/layers inputs

For a weight tensor:

dune exec quantviz -- vsq /nfs/nomster/data/arul/data/artifacts/opt125m/fp32/layers layer_variables -c 1

You can place the tensor on CUDA and run the tool. The CUDA device to place the tensor on can be passed using the “-d” option. If no argument is passed, the tensor is kept on the CPU for the simulations.

I like using vega-lite, so I have made the vega-lite recipes available. You can use the `vega-export` command line tool to generate a visualization of the generated CSV file.

These were generated on OPT-125m. Input tensors used per-tensor calibration. Weight tensors used per-channel calibration.