Operationalizing Machine Learning (AzureML Nanodegree)

In this project we are provided with the Bank Marketing dataset. We use AutoML to determine the best-performing model, which can be used to predict whether or not a client ends up subscribing to the product advertised by the bank. We deploy this model so that others can use it to make predictions on this dataset. We also enable logging so that we can track what goes on with the deployed model for debugging purposes.

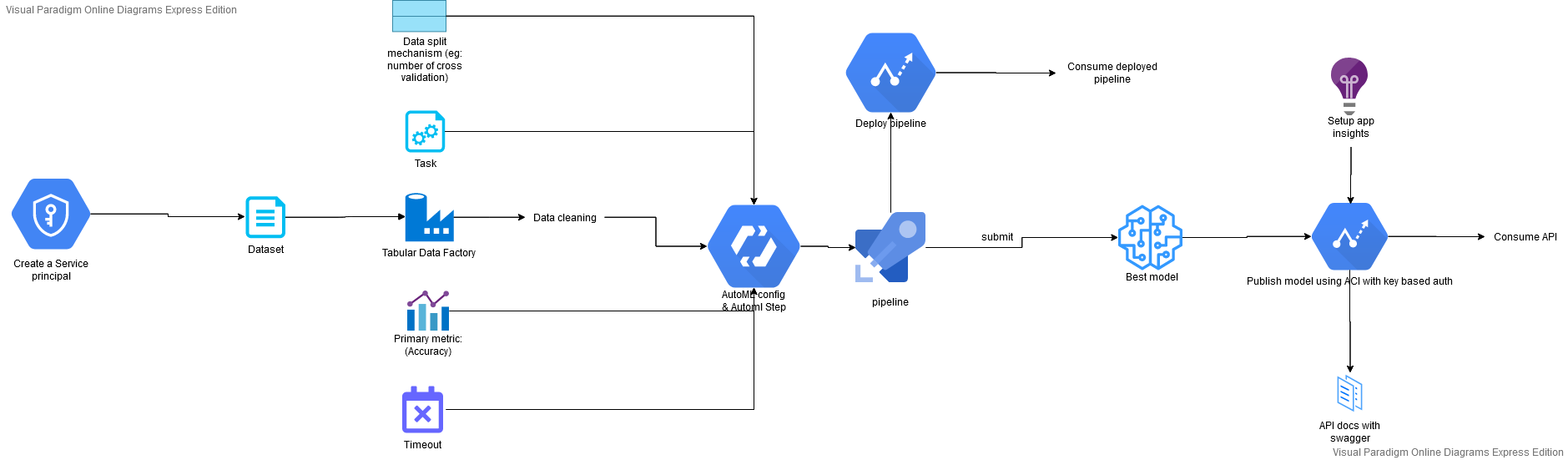

Architectural Diagram

Here is an architectural view of the project:

Key Steps

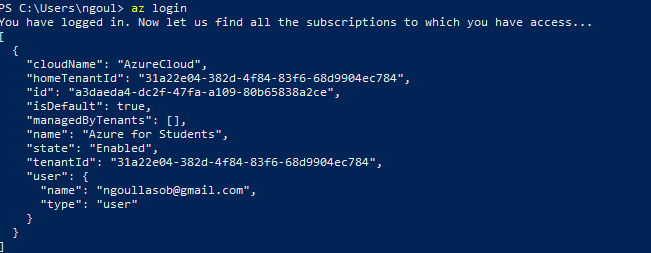

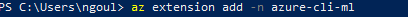

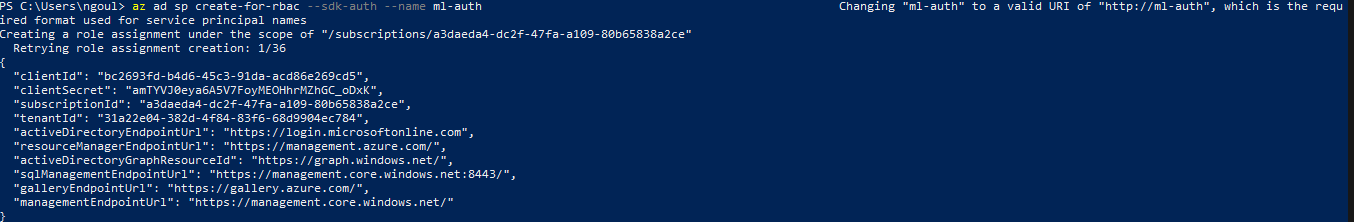

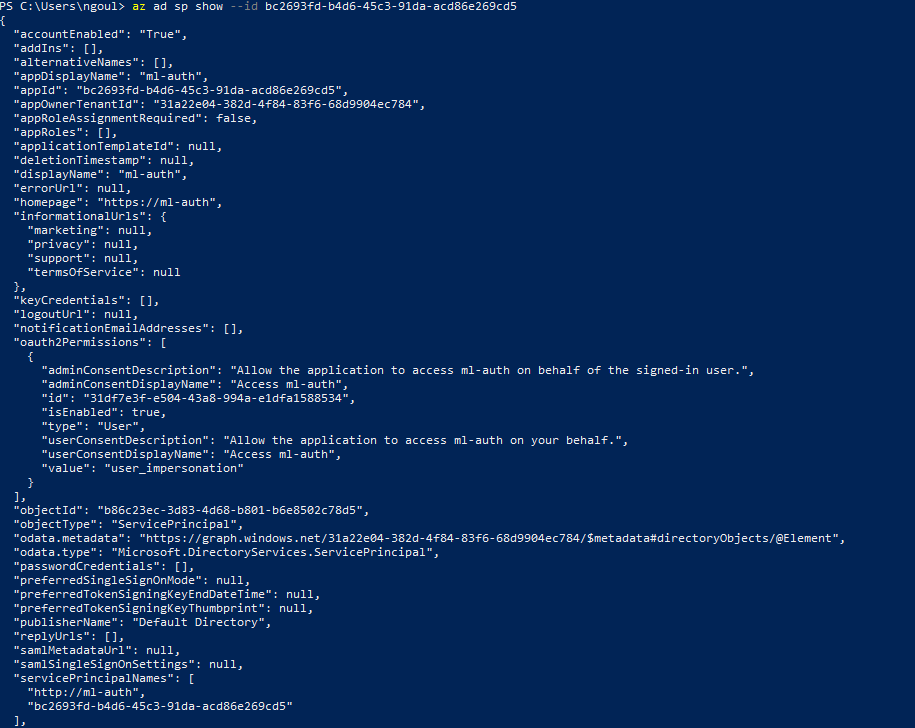

Creating a service principal

We start by creating a service principal. We first install the az command-line tool and its azure-cli-ml extension. We then log in and create a service principal:

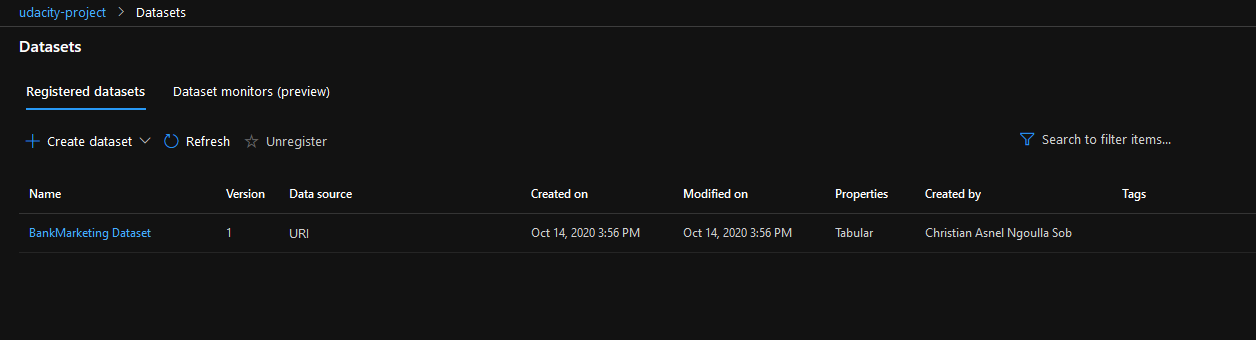

Getting the data

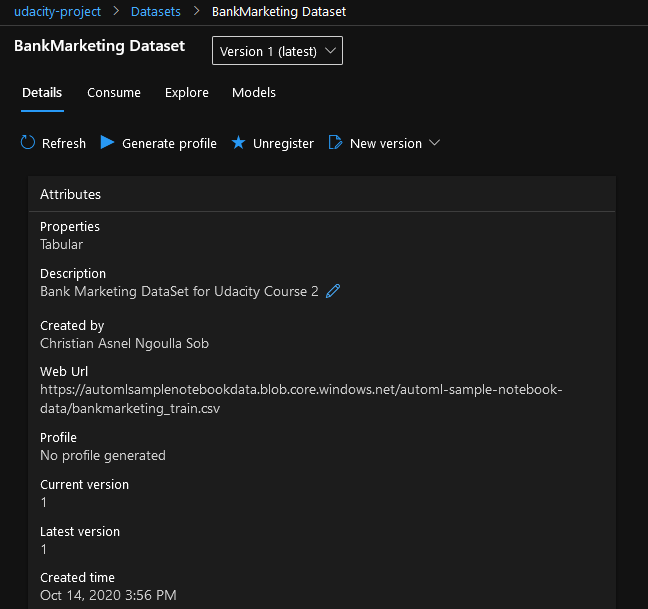

We get the data to be used from this link, and save it under the name BankMarketing Dataset.

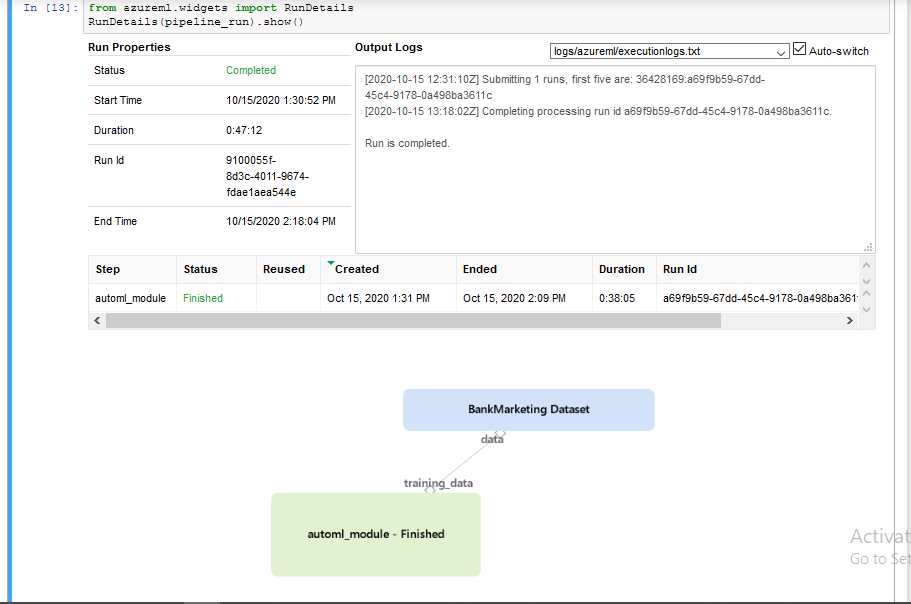

Setting up the pipeline and Running the experiment

We set up a new compute cluster and a pipeline with an AutoML step configured to run classification on our dataset, with AUC_weighted as the primary metric.
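As a rough sketch of this setup, the snippet below provisions a cluster and wraps the AutoML settings named above (classification, `AUC_weighted`) in an `AutoMLStep`. It assumes the v1 Azure ML SDK packages (`azureml-core`, `azureml-train-automl`, `azureml-pipeline-steps`) are installed. The cluster name, VM size, node count, label column `y`, step name, timeout, and concurrency value are illustrative assumptions, not values taken from the project.

```python
def automl_settings() -> dict:
    """AutoML settings; only task and primary_metric come from the write-up."""
    return {
        "task": "classification",
        "primary_metric": "AUC_weighted",
        # Assumed values, chosen only to keep the experiment short.
        "experiment_timeout_hours": 0.5,
        "max_concurrent_iterations": 4,
    }


def get_or_create_cluster(ws, name="automl-cluster"):
    """Reuse the named cluster if it exists, otherwise provision it."""
    # Imported lazily so this module loads without the Azure ML SDK.
    from azureml.core.compute import AmlCompute, ComputeTarget
    from azureml.core.compute_target import ComputeTargetException

    try:
        return ComputeTarget(workspace=ws, name=name)
    except ComputeTargetException:
        # VM size and node count are assumptions.
        config = AmlCompute.provisioning_configuration(
            vm_size="STANDARD_DS12_V2", max_nodes=4
        )
        cluster = ComputeTarget.create(ws, name, config)
        cluster.wait_for_completion(show_output=True)
        return cluster


def build_automl_step(dataset, compute_target, label_column="y"):
    """Wrap the settings in an AutoMLStep that can be added to a Pipeline."""
    from azureml.pipeline.steps import AutoMLStep
    from azureml.train.automl import AutoMLConfig

    config = AutoMLConfig(
        training_data=dataset,
        label_column_name=label_column,  # assumed label column name
        compute_target=compute_target,
        **automl_settings(),
    )
    return AutoMLStep(name="automl_module", automl_config=config, allow_reuse=True)
```

Keeping the settings in a plain dictionary makes it easy to review or change the metric without touching the SDK calls.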

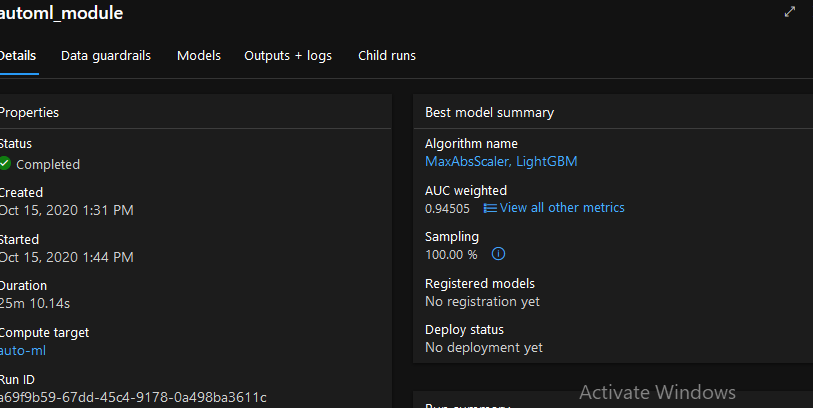

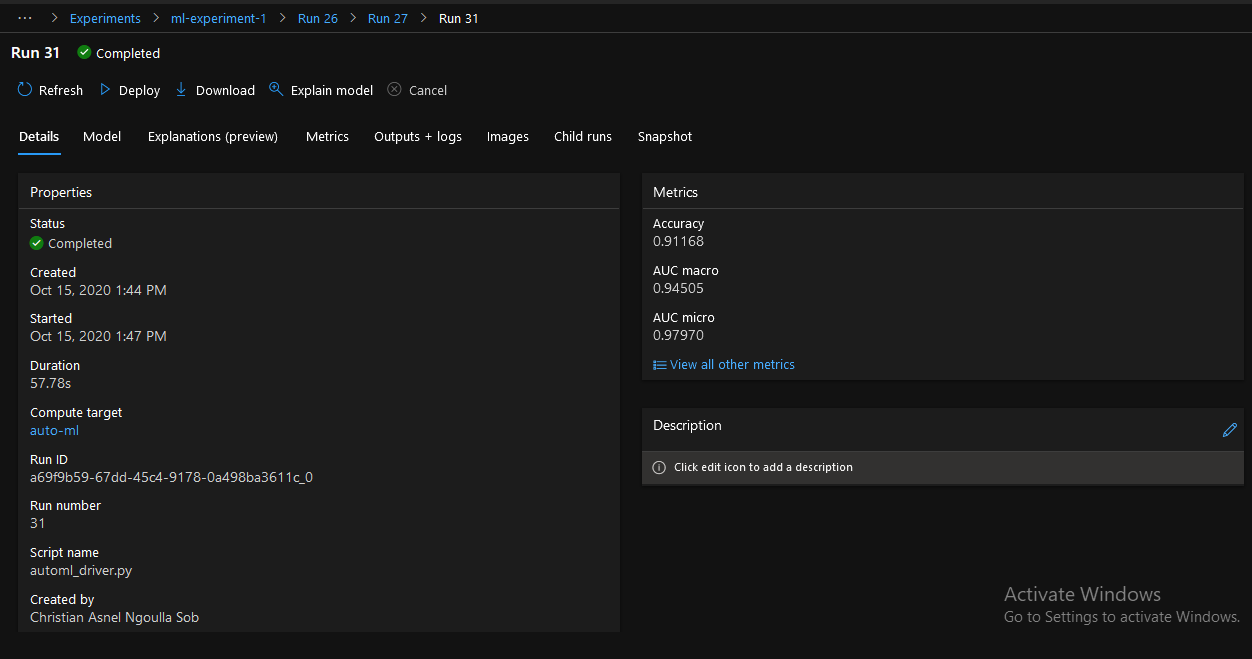

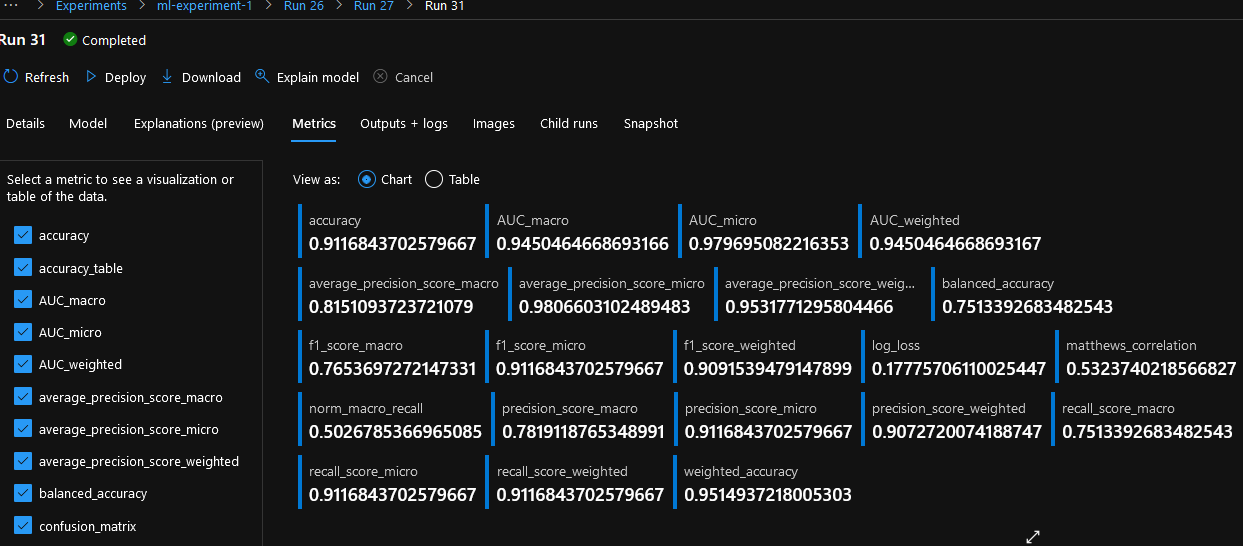

Selecting the best performing model

Once our automated ML step is completed, we select the best performing model (MaxAbsScaler, LightGBM).

Here are some metrics of the best performing model:

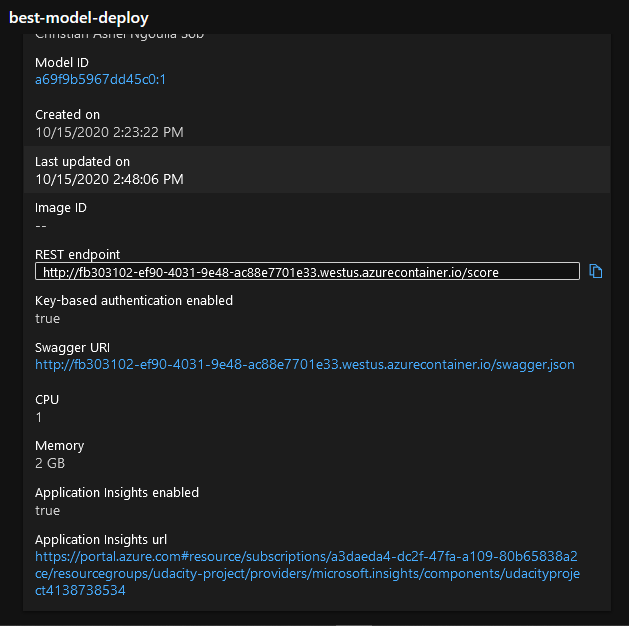

Deploying the best performing model

We now proceed with deploying the best-performing model. We use an Azure Container Instance (ACI) with authentication enabled for the deployment.
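The deployment may well have been done through the Azure ML studio UI. For reference, a minimal sketch of an equivalent deployment with the v1 SDK could look like the following. The service name, CPU and memory sizes are assumptions, and `inference_config` is expected to be an `InferenceConfig` prepared elsewhere (entry script plus environment).

```python
def aci_deployment_kwargs() -> dict:
    """Deployment options; only auth_enabled=True comes from the write-up."""
    return {
        "cpu_cores": 1,        # assumed size
        "memory_gb": 1,        # assumed size
        "auth_enabled": True,  # key-based authentication, as described above
    }


def deploy_to_aci(ws, model, inference_config, service_name="bankmarketing-aci"):
    """Deploy a registered model to ACI and wait until the service is ready."""
    # Imported lazily so this module loads without the Azure ML SDK.
    from azureml.core.model import Model
    from azureml.core.webservice import AciWebservice

    deployment_config = AciWebservice.deploy_configuration(**aci_deployment_kwargs())
    service = Model.deploy(
        workspace=ws,
        name=service_name,  # hypothetical service name
        models=[model],
        inference_config=inference_config,
        deployment_config=deployment_config,
    )
    service.wait_for_deployment(show_output=True)
    return service
```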

Set up logging

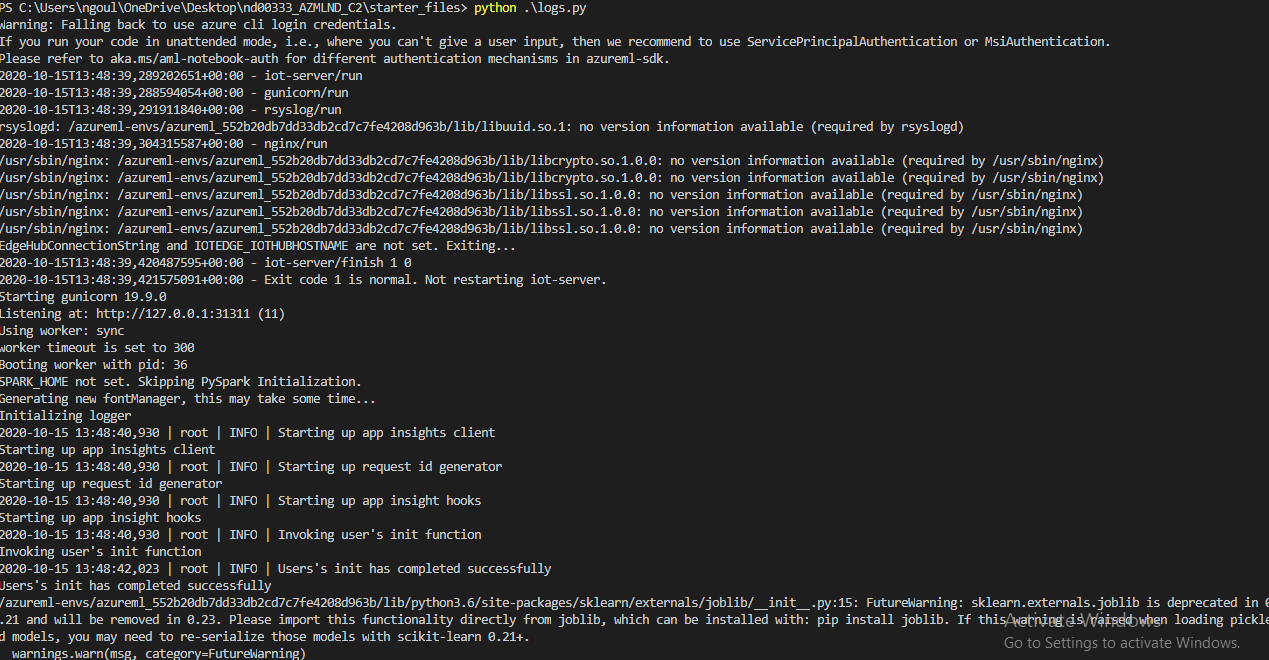

We set up logging so that we can track activity on our deployed endpoint. This is particularly useful because it helps us debug errors that may arise (for example, a 503, which is usually the result of an unhandled exception).

We run the script found in logs.py to enable logging:
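The contents of logs.py are not reproduced in this write-up. A minimal sketch of what such a script could look like with the v1 `azureml-core` SDK is shown below. It assumes a workspace `config.json` is available locally; the service name passed to `main` is a placeholder.

```python
def enable_logging(service):
    """Turn on Application Insights for a deployed service and return its logs."""
    service.update(enable_app_insights=True)
    logs = service.get_logs() or ""
    return logs.splitlines()


def main(service_name):
    # Imported lazily so the helper above can be used without the SDK.
    from azureml.core import Workspace
    from azureml.core.webservice import Webservice

    ws = Workspace.from_config()  # reads the local config.json
    service = Webservice(workspace=ws, name=service_name)
    for line in enable_logging(service):
        print(line)


# Example call (placeholder name, replace with the deployed service name):
# main("bankmarketing-aci")
```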

Here we can clearly see that logging has been enabled on our endpoint:

Application Insights enabled

true

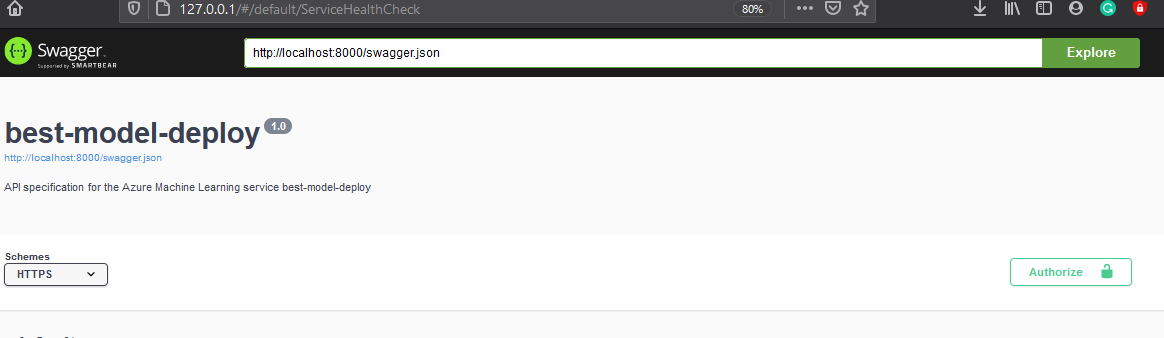

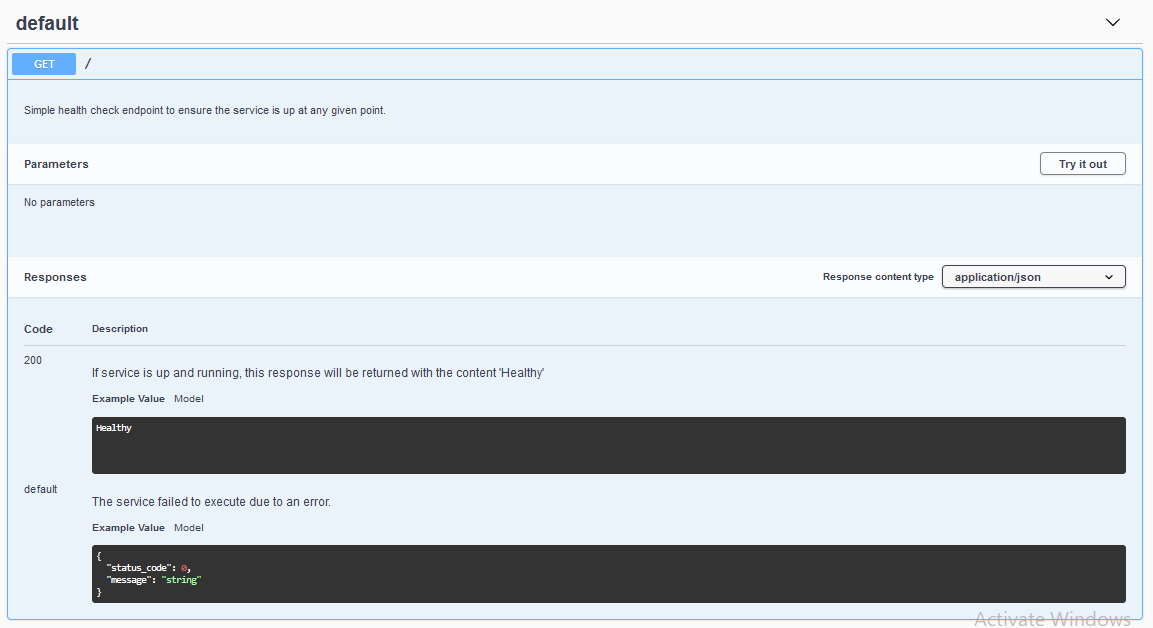

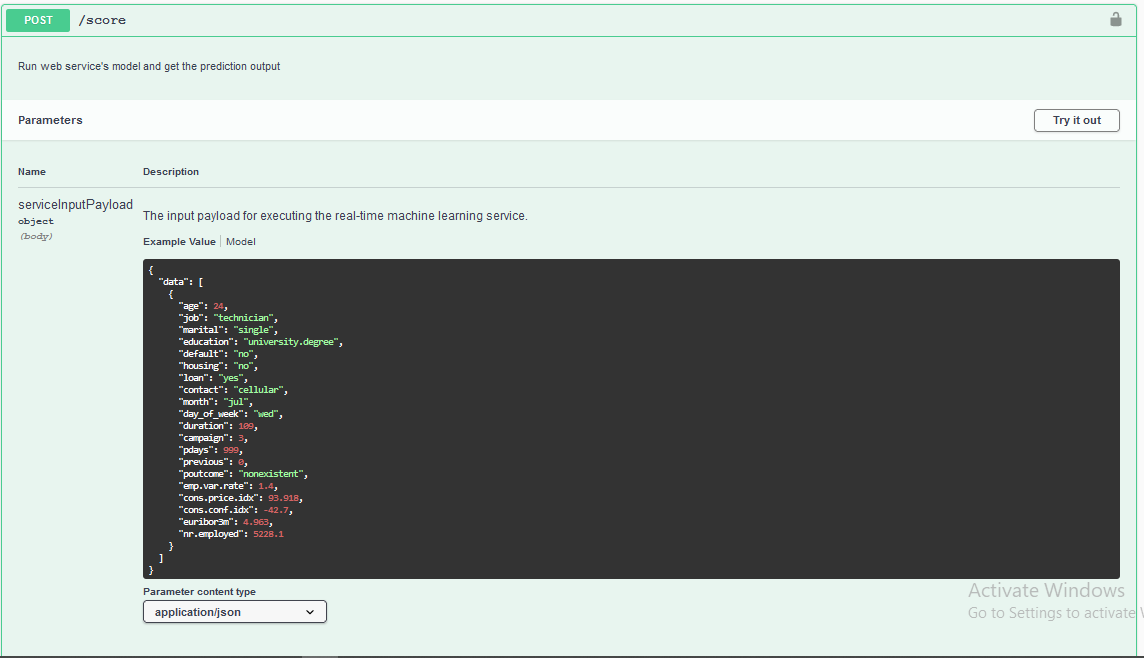

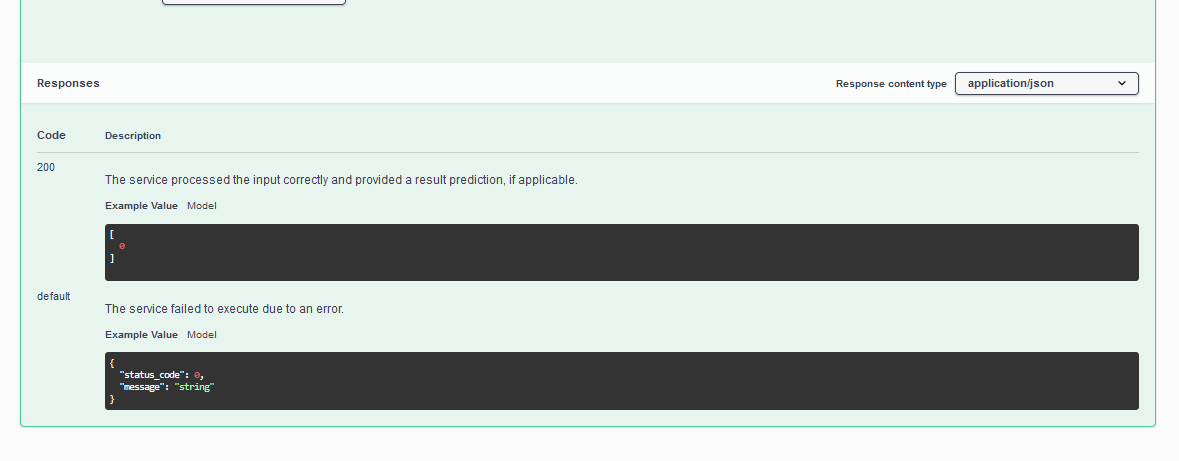

Endpoint documentation: SWAGGER

Documenting our endpoint is very important. Documentation provides information on the types of requests that can be made to the endpoints available for consumption and the type of input data expected, along with a sample.

We get the Swagger document from our endpoint; it is available at this link.
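To get a quick overview of what the Swagger document describes, a small helper like the one below can list the operations it contains. This is only a sketch: it assumes the document has been saved locally as `swagger.json` (a hypothetical file name) and follows the usual Swagger/OpenAPI layout with a top-level `paths` object.

```python
import json

HTTP_METHODS = {"get", "post", "put", "delete", "patch", "head", "options"}


def summarize_swagger(spec: dict) -> list:
    """Return sorted (METHOD, path, summary) tuples for every operation."""
    operations = []
    for path, item in spec.get("paths", {}).items():
        for method, details in item.items():
            # Skip non-operation keys such as path-level "parameters".
            if method.lower() not in HTTP_METHODS:
                continue
            operations.append((method.upper(), path, details.get("summary", "")))
    return sorted(operations)


def load_swagger(path="swagger.json") -> dict:
    """Load a Swagger document that was downloaded from the endpoint."""
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)


# Example usage, once swagger.json has been downloaded:
# for method, path, summary in summarize_swagger(load_swagger()):
#     print(method, path, summary)
```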

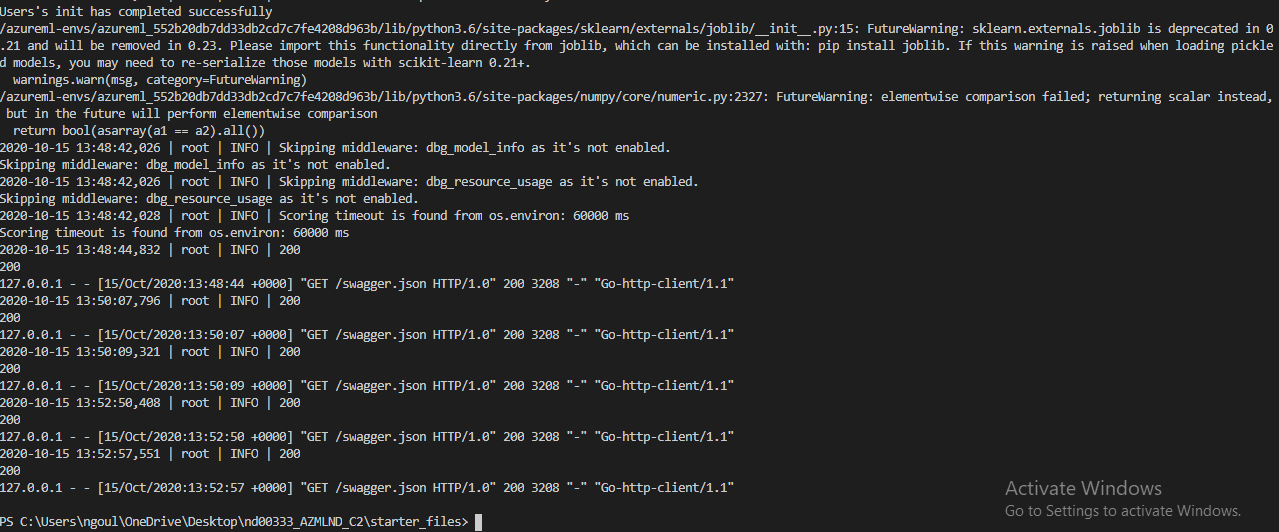

Consume the endpoint

We modify the script found in ./endpoint.py to consume the endpoint and make predictions. We make POST requests to our endpoint with some data and get predictions back.

We provide the necessary key for the request in the header (we have set up our endpoint to require a key for security).
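The request described above can be sketched as follows. This is not the project's endpoint.py: it uses only the standard library (`urllib`) to stay self-contained, and the scoring URI, key, and sample record are placeholders. The `{"data": [...]}` payload shape is the one commonly used by AutoML-generated scoring scripts and is an assumption here.

```python
import json
import urllib.request


def build_scoring_request(scoring_uri, key, records):
    """Build an authenticated POST request carrying the input records."""
    body = json.dumps({"data": records}).encode("utf-8")
    headers = {
        "Content-Type": "application/json",
        # The endpoint was deployed with key-based authentication.
        "Authorization": f"Bearer {key}",
    }
    return urllib.request.Request(scoring_uri, data=body, headers=headers, method="POST")


def score(request):
    """Send the request and decode the JSON response."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


# Example usage with placeholder values. The record is truncated for
# illustration; a real request needs every feature column the model expects.
# request = build_scoring_request(
#     "http://<your-endpoint>/score", "<your-key>",
#     [{"age": 17, "job": "blue-collar", "marital": "married"}],
# )
# print(score(request))
```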

Define and run an ML pipeline

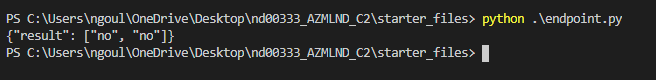

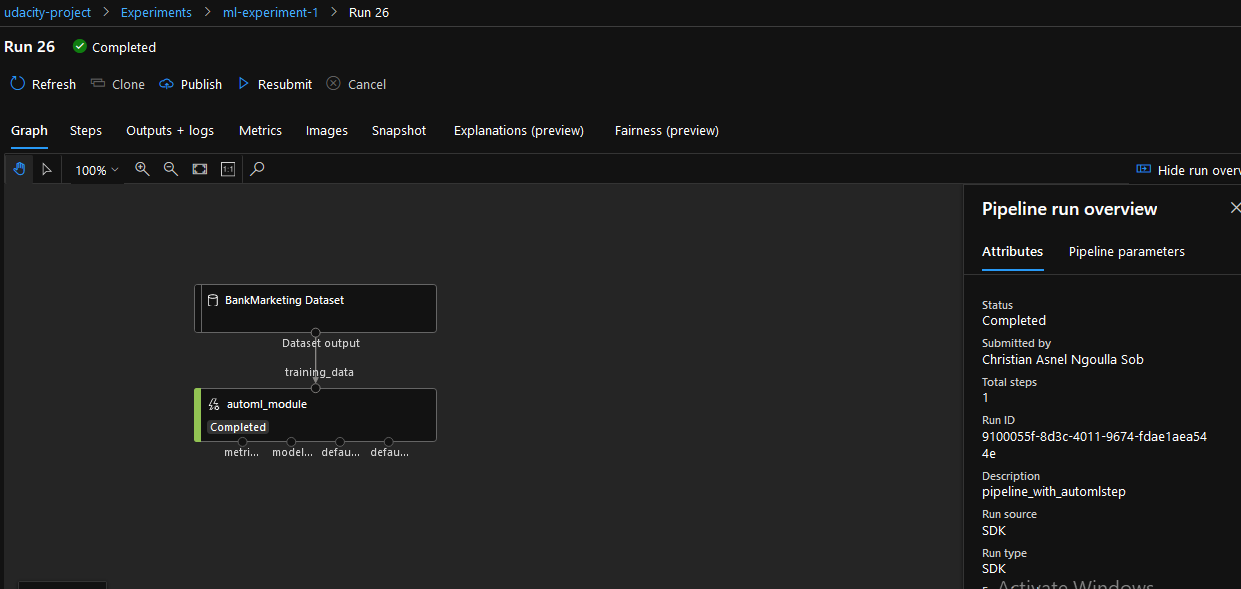

We get the same data (BankMarketing Dataset) and define a pipeline with an AutoML step for training on our data (using classification).

Here is a screenshot showing that our pipeline run has been completed with our dataset and the auto_ml module:

Publish the pipeline

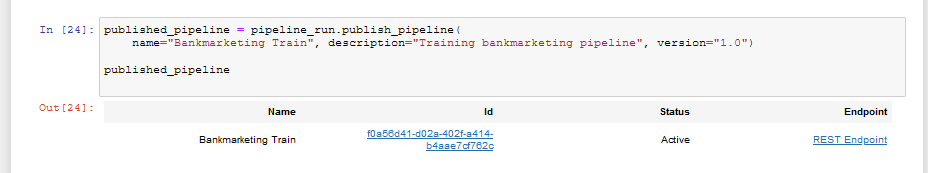

We go ahead and publish the pipeline as follows:
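With the v1 SDK, publishing a completed pipeline run can be sketched as below. `pipeline_run` is assumed to be the `PipelineRun` object returned when the pipeline was submitted; the name, description, and version strings are illustrative.

```python
def publish(pipeline_run, name, description, version="1.0"):
    """Publish a completed pipeline run and return its key details."""
    published = pipeline_run.publish_pipeline(
        name=name, description=description, version=version
    )
    return {
        "name": published.name,
        "id": published.id,
        "endpoint": published.endpoint,  # REST URL used to trigger new runs
    }


# Example usage (illustrative values):
# info = publish(pipeline_run, "Bankmarketing Train", "Training bankmarketing pipeline")
# print(info["endpoint"])
```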

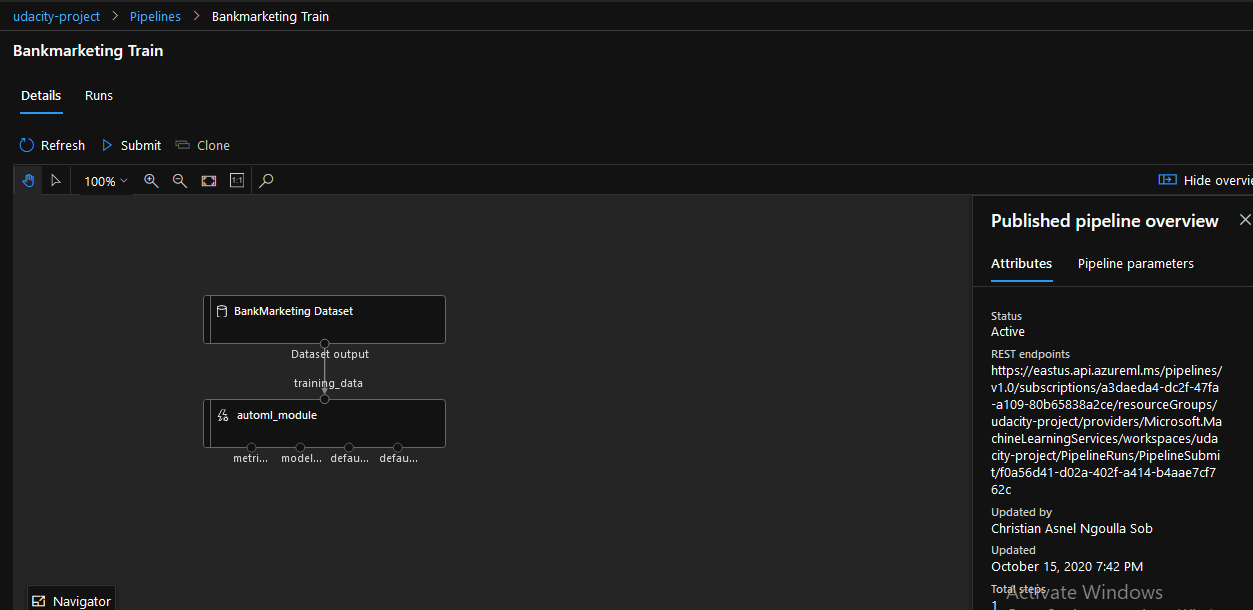

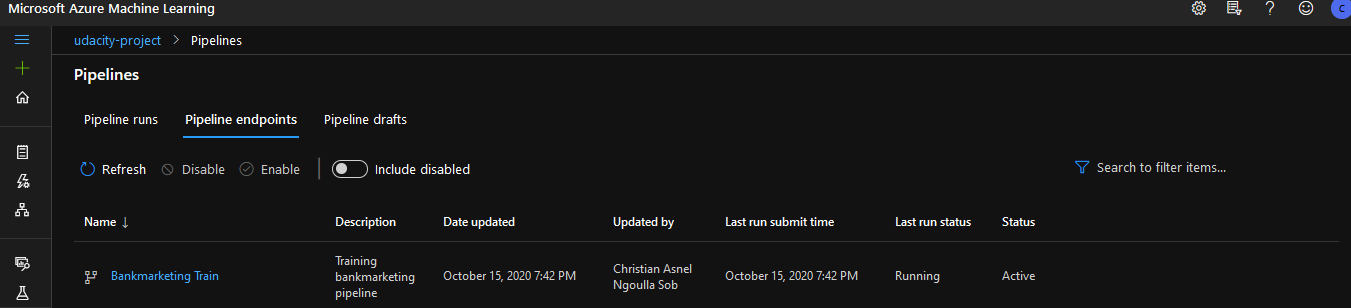

Here are screenshots showing the published pipeline with its active endpoint:

Consume the published pipeline

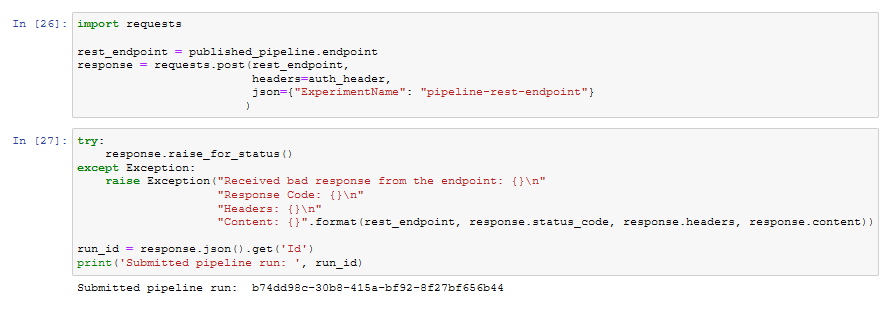

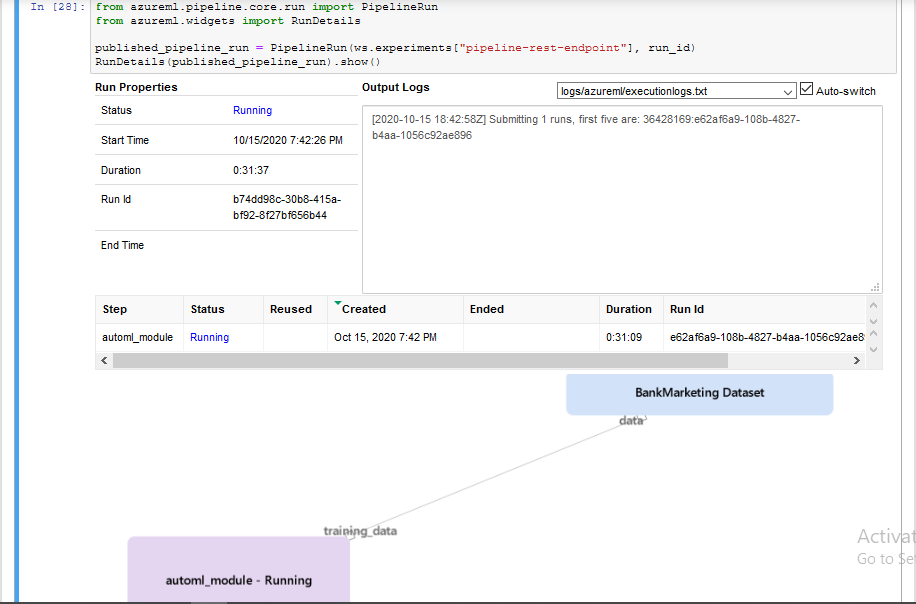

We consume our pipeline by making a POST request to the pipeline endpoint, with all authentication details in the header, to trigger a run.
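A minimal sketch of that trigger request is shown below, again using only the standard library. The authentication header is assumed to come from the v1 SDK (for example `InteractiveLoginAuthentication().get_authentication_header()`), the experiment name is a placeholder, and the `ExperimentName` body field and `Id` response field follow the pattern used in the Azure ML sample notebooks.

```python
import json
import urllib.request


def build_trigger_request(rest_endpoint, auth_header, experiment_name):
    """Build the POST request that starts a run of the published pipeline."""
    headers = {"Content-Type": "application/json", **auth_header}
    body = json.dumps({"ExperimentName": experiment_name}).encode("utf-8")
    return urllib.request.Request(rest_endpoint, data=body, headers=headers, method="POST")


def trigger_run(request):
    """Send the request and return the ID of the run that was started."""
    with urllib.request.urlopen(request) as response:
        payload = json.loads(response.read().decode("utf-8"))
    return payload.get("Id")


# Example usage (requires the Azure ML SDK for the auth header):
# from azureml.core.authentication import InteractiveLoginAuthentication
# auth_header = InteractiveLoginAuthentication().get_authentication_header()
# request = build_trigger_request(published_endpoint, auth_header, "pipeline-rest-endpoint")
# print(trigger_run(request))
```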

Here are some details about the run:

Screen Recording

Here is a screencast showing the project in action.

Improve on the project

There are several steps we can take to improve on this project.

- We can further clean the data. The data contains many outliers, and removing them should improve our model, because outliers tend to pull the results in their direction and make our predictions less accurate. We can also remove columns that are not really meaningful or impactful. The `default` column has the same value for almost every row, so we can conclude that it has little impact on our predictions.
- We can also consider adding a parallel run step to our pipeline to support batch input. This could greatly reduce the processing time when we have a lot of data to process.
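The data-cleaning ideas above can be sketched with the standard library alone. The snippet uses the common 1.5 × IQR rule to drop outliers in a numeric column and flags columns where a single value covers almost every row. The IQR rule, the 0.95 threshold, and the toy rows are assumptions for illustration; this cleaning was not applied in the project.

```python
from collections import Counter
from statistics import quantiles


def drop_outliers(rows, column, k=1.5):
    """Keep rows whose value lies within [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = quantiles([row[column] for row in rows], n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [row for row in rows if low <= row[column] <= high]


def near_constant_columns(rows, threshold=0.95):
    """Return columns where one value accounts for at least `threshold` of rows."""
    flagged = []
    for column in rows[0]:
        counts = Counter(row[column] for row in rows)
        most_common_count = counts.most_common(1)[0][1]
        if most_common_count / len(rows) >= threshold:
            flagged.append(column)
    return flagged


# Toy data: ten plausible ages plus one obvious outlier.
rows = [{"age": age, "default": "no"} for age in range(30, 40)]
rows.append({"age": 400, "default": "no"})

cleaned = drop_outliers(rows, "age")
constant = near_constant_columns(rows)
```

Here `cleaned` no longer contains the row with age 400, and `constant` flags only the `default` column, which is a candidate for removal before training.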