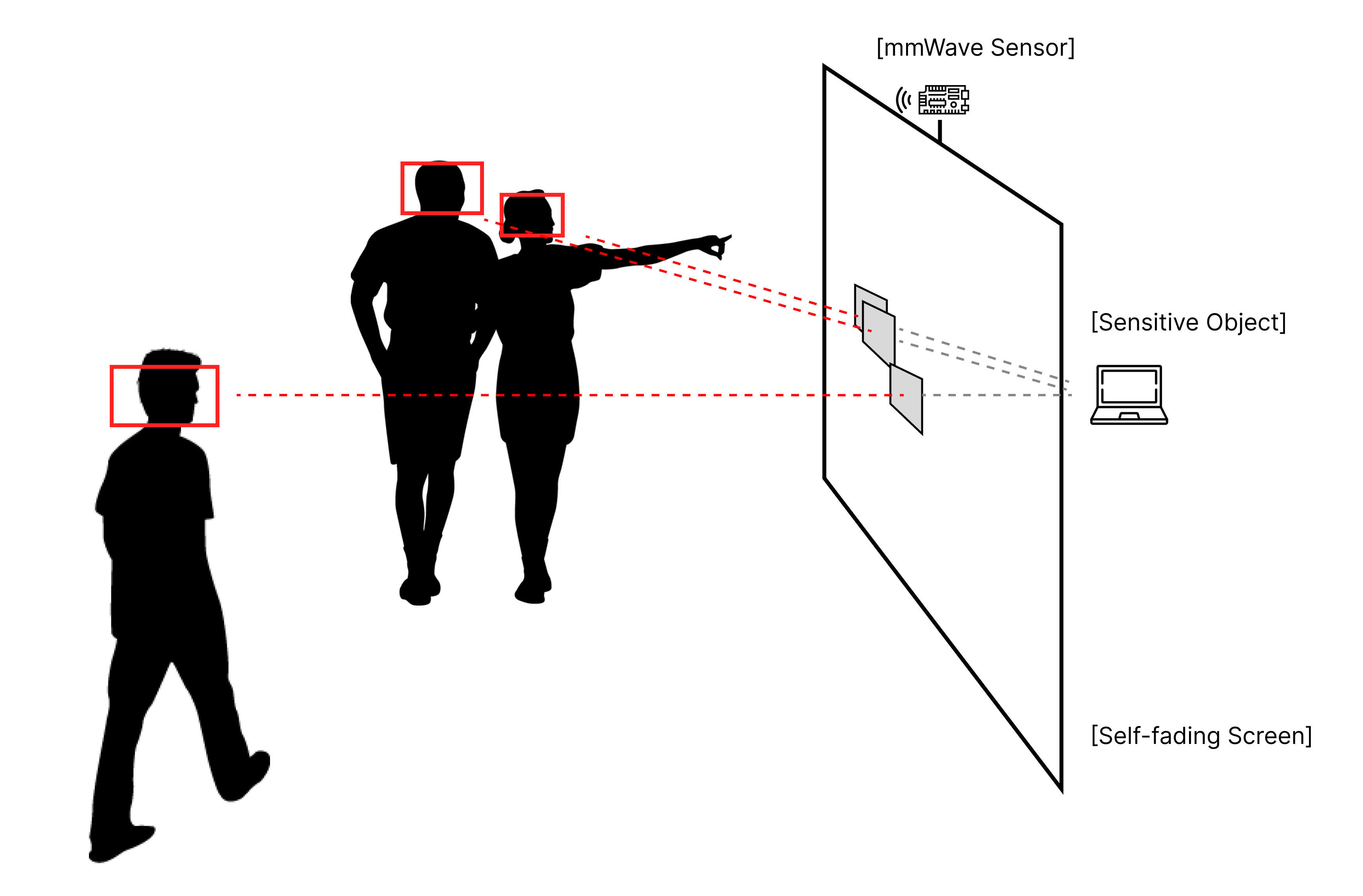

This repository implements a top-down approach to Multi-Person Pose Estimation (MPPE). Multi-Target Tracking (MTT) monitors the people entering the scene, and pose estimation is then run on each target's bounding box to estimate the locations of 19 human-joint keypoints. The system also estimates each target's line of sight and protects privacy by activating local opacity regions on self-fading smart windows. It is specifically designed to receive radar data from the Texas Instruments IWR1443 millimeter-wave sensor.
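The top-down flow described above (track first, then estimate one pose per bounding box) can be sketched as follows. This is a minimal illustration, not the repository's code: the axis-aligned 3D boxes, the `tracks` dictionary layout, and `placeholder_pose_estimator` (which simply places every joint at the target's centroid) are hypothetical stand-ins for the tracker output and the pose model.

```python
import numpy as np

NUM_JOINTS = 19  # keypoints per person, as stated in this README


def points_in_box(points, box_min, box_max):
    """Return the rows of `points` whose first three columns (x, y, z) lie inside an axis-aligned box."""
    xyz = points[:, :3]
    inside = np.all((xyz >= box_min) & (xyz <= box_max), axis=1)
    return points[inside]


def placeholder_pose_estimator(target_points):
    """Hypothetical stand-in for the pose model: places all 19 joints at the target's centroid."""
    centroid = target_points[:, :3].mean(axis=0)
    return np.tile(centroid, (NUM_JOINTS, 1))


def estimate_poses(points, tracks, estimator=placeholder_pose_estimator):
    """Top-down MPPE: crop the frame's point cloud to each tracked box, then estimate one pose per box."""
    poses = {}
    for track_id, (box_min, box_max) in tracks.items():
        target_points = points_in_box(points, np.asarray(box_min), np.asarray(box_max))
        if len(target_points) == 0:
            continue  # no radar returns fell inside this target's box in this frame
        poses[track_id] = estimator(target_points)  # (19, 3) joint coordinates
    return poses


# Example frame: two points belong to track 7, the third lies outside its box.
frame = np.array([[0.1, 1.0, 1.2], [0.2, 1.1, 0.8], [2.0, 3.0, 1.0]])
tracks = {7: ((0.0, 0.9, 0.0), (0.5, 1.3, 2.0))}
poses = estimate_poses(frame, tracks)
print(poses[7].shape)  # (19, 3)
```

Cropping per track before pose estimation is what makes the approach top-down: the pose model only ever sees one person's points at a time.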

NOTE: This repository uses a simplified version of the GTRACK algorithm for tracking and a modified MARS model architecture for the pose estimation module.
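GTRACK is a Kalman-filter-based group tracker from Texas Instruments. The simplified variant used here is not documented in this README, so the sketch below only illustrates the predict/update cycle with a plain linear Kalman filter under assumed settings: a 2D constant-velocity state `[x, y, vx, vy]`, Cartesian centroid measurements, and hand-picked noise values. Track association, gating, and track birth/death are omitted, and the class name is hypothetical.

```python
import numpy as np


class ConstantVelocityKF:
    """Linear Kalman filter with state [x, y, vx, vy] and position-only measurements (assumed model)."""

    def __init__(self, x0, y0, dt=0.1, process_var=1e-3, meas_var=1e-2):
        self.x = np.array([x0, y0, 0.0, 0.0])
        # Large initial velocity variance: speed is unknown when a track is born.
        self.P = np.diag([1.0, 1.0, 100.0, 100.0])
        self.F = np.array([[1.0, 0.0, dt, 0.0],      # constant-velocity motion model
                           [0.0, 1.0, 0.0, dt],
                           [0.0, 0.0, 1.0, 0.0],
                           [0.0, 0.0, 0.0, 1.0]])
        self.H = np.array([[1.0, 0.0, 0.0, 0.0],     # the radar centroid gives x, y only
                           [0.0, 1.0, 0.0, 0.0]])
        self.Q = process_var * np.eye(4)             # simple isotropic process noise
        self.R = meas_var * np.eye(2)                # measurement noise of the centroid

    def predict(self):
        """Propagate the state one frame ahead; returns the predicted position."""
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.x[:2]

    def update(self, z):
        """Correct the prediction with a measured centroid z = (x, y); returns the filtered position."""
        innovation = np.asarray(z, dtype=float) - self.H @ self.x
        S = self.H @ self.P @ self.H.T + self.R
        K = np.linalg.solve(S, self.H @ self.P).T    # K = P H^T S^-1 (P and S are symmetric)
        self.x = self.x + K @ innovation
        self.P = (np.eye(4) - K @ self.H) @ self.P
        return self.x[:2]


# A target walking along x at 1 m/s (0.1 m per 0.1 s frame), observed without noise.
kf = ConstantVelocityKF(0.0, 2.0)
for k in range(1, 31):
    kf.predict()
    kf.update((0.1 * k, 2.0))
print(kf.x)  # position close to (3.0, 2.0), vx close to 1.0
```

Starting with a large velocity variance lets the filter infer walking speed within a few frames of a new track appearing, while the small process noise keeps the estimate smooth afterwards.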

This is the repository for my MSc thesis: *Real-time mmWave Multi-Person Pose Estimation System for Privacy-Aware Windows*.

- Clone this repository:

  `git clone https://github.com/AsteriosPar/mmWave_MSc`

- Install the dependencies:

  `pip install -r requirements.txt`

- Adjust the system and scene configuration in the default configuration file, `./src/constants.py`.

- (Optional) To create and log an experiment for offline use, run the logging module from the root of your local copy:

  `python3 ./src/DataLogging.py`

- Run the program online or offline (on a logged experiment):

  - Online: `python3 ./src/main.py`
  - Offline: `python3 ./src/offline_main.py`
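Logging and offline replay both come down to storing frames in order and reading them back in the same order. The sketch below assumes each frame is a NumPy array of shape `(N, 4)` with a variable number of points `N` (columns assumed to be x, y, z, Doppler) and stores all frames in one compressed `.npz` archive. The file layout and the `log_experiment`/`replay_experiment` helpers are hypothetical and do not describe the format actually written by `DataLogging.py`.

```python
import os
import tempfile

import numpy as np


def log_experiment(path, frames):
    """Store variable-length point-cloud frames, one array per frame, in a single compressed .npz file."""
    # Zero-padded keys keep the frames sortable in recording order.
    np.savez_compressed(path, **{f"frame_{i:06d}": frame for i, frame in enumerate(frames)})


def replay_experiment(path):
    """Yield the logged frames one at a time, in recording order, like an offline data source."""
    with np.load(path) as archive:
        for key in sorted(archive.files):
            yield archive[key]


# Three synthetic frames with 12, 0 and 30 points.
rng = np.random.default_rng(0)
frames = [rng.random((n, 4)) for n in (12, 0, 30)]
path = os.path.join(tempfile.mkdtemp(), "experiment.npz")
log_experiment(path, frames)
replayed = list(replay_experiment(path))
print([f.shape for f in replayed])  # [(12, 4), (0, 4), (30, 4)]
```

Returning a generator lets an offline loop consume one logged frame at a time, the same way an online loop consumes frames as they arrive from the sensor.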

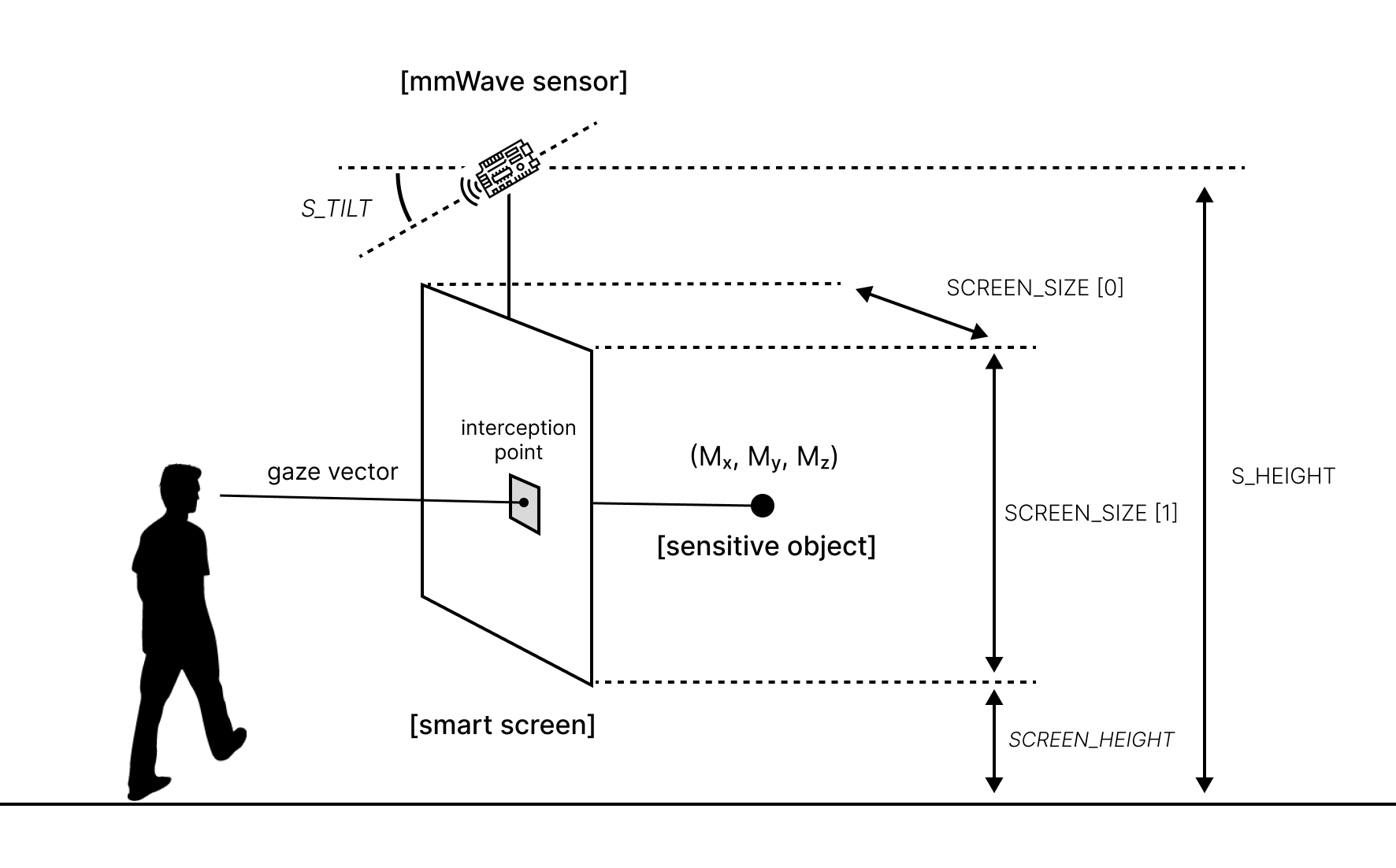

All the necessary scene configuration parameters are shown in the picture above. NOTE: Make sure to set the `SCREEN_CONNECTED` configuration to `True` to enable the privacy-shielding function. Otherwise, the system visualizes the estimated skeletons and their bounding boxes.

`SCREEN_CONNECTED = True`
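The privacy shield needs to know where a target's line of sight meets the window so that a local opacity can be activated there. One simple way to model this, under assumptions of our own (a flat window lying in the plane `y = window_y` of the radar coordinate frame, and a head position and gaze direction already estimated from the skeleton), is a ray-plane intersection. The helpers below are hypothetical; they also show how a `SCREEN_CONNECTED` flag could switch between shielding and visualization.

```python
import numpy as np

SCREEN_CONNECTED = True  # mirrors the flag in ./src/constants.py


def gaze_window_intersection(head, direction, window_y):
    """Intersect the gaze ray head + t * direction (t > 0) with the window plane y = window_y.

    Returns the (x, z) point on the window, or None if the target is not looking toward it.
    """
    head = np.asarray(head, dtype=float)
    direction = np.asarray(direction, dtype=float)
    if abs(direction[1]) < 1e-9:
        return None  # gaze runs parallel to the window plane
    t = (window_y - head[1]) / direction[1]
    if t <= 0:
        return None  # the window is behind the target
    hit = head + t * direction
    return float(hit[0]), float(hit[2])


def handle_target(head, direction, window_y=0.0):
    """Hypothetical dispatcher: shield when a smart window is connected, otherwise just visualize."""
    if SCREEN_CONNECTED:
        return "opacity", gaze_window_intersection(head, direction, window_y)
    return "visualize", None


# A target standing 2 m from the window (head at 1.6 m) and looking straight at it.
print(handle_target((0.5, 2.0, 1.6), (0.0, -1.0, 0.0)))  # ('opacity', (0.5, 1.6))
```

Mapping the returned `(x, z)` window coordinates to the pixels of a specific smart-window screen is left out, since it depends on the physical setup.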

- Download our dataset into the main project directory.

- Run (or modify) the preprocessing script:

  `python3 ./src/preprocessing.py`

  The output is written to the `dataset/formatted/` folder with the following structure:

  - `dataset/`
    - `formatted/`
      - `kinect/`
        - `training_labels.npy`
        - `validate_labels.npy`
        - `testing_labels.npy`
      - `mmWave/`
        - `training_mmWave.npy`
        - `validate_mmWave.npy`
        - `testing_mmWave.npy`

- Train the model:

  `python3 ./src/train.py`
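The original MARS model, to our understanding, takes each frame as 64 radar points with 5 features reshaped into an 8 × 8 × 5 array and regresses 57 outputs (19 joints × 3 coordinates). Because this repository modifies the MARS architecture, the shapes stored in the `formatted/` files may differ; the sketch below only shows that MARS-style input/output layout. The zero-padding strategy, the feature order, the label layout (all x, then all y, then all z coordinates), and both helper names are assumptions to check against `preprocessing.py` and `train.py`.

```python
import numpy as np

NUM_POINTS = 64    # 8 x 8 grid of points per frame (MARS-style, assumed)
NUM_FEATURES = 5   # assumed per-point features, e.g. x, y, z, Doppler, intensity
NUM_JOINTS = 19    # keypoints per person, as stated in this README


def frame_to_feature_map(points):
    """Truncate or zero-pad an (N, 5) point cloud to 64 points and reshape it to (8, 8, 5)."""
    points = np.asarray(points, dtype=np.float32)[:NUM_POINTS]
    padded = np.zeros((NUM_POINTS, NUM_FEATURES), dtype=np.float32)
    padded[: len(points)] = points
    return padded.reshape(8, 8, NUM_FEATURES)


def labels_to_joints(labels):
    """Turn flat (M, 57) labels into (M, 19, 3) joint coordinates.

    Assumes the 57 values are ordered as 19 x-coordinates, then 19 y, then 19 z.
    """
    labels = np.asarray(labels)
    return labels.reshape(-1, 3, NUM_JOINTS).transpose(0, 2, 1)


feature_map = frame_to_feature_map(np.ones((70, 5)))  # 70 points: the last 6 are dropped
joints = labels_to_joints(np.arange(57).reshape(1, 57))
print(feature_map.shape, joints.shape)  # (8, 8, 5) (1, 19, 3)
```

A fixed-size grid is what lets a 2D CNN consume radar point clouds whose number of points changes from frame to frame.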