[WIP]

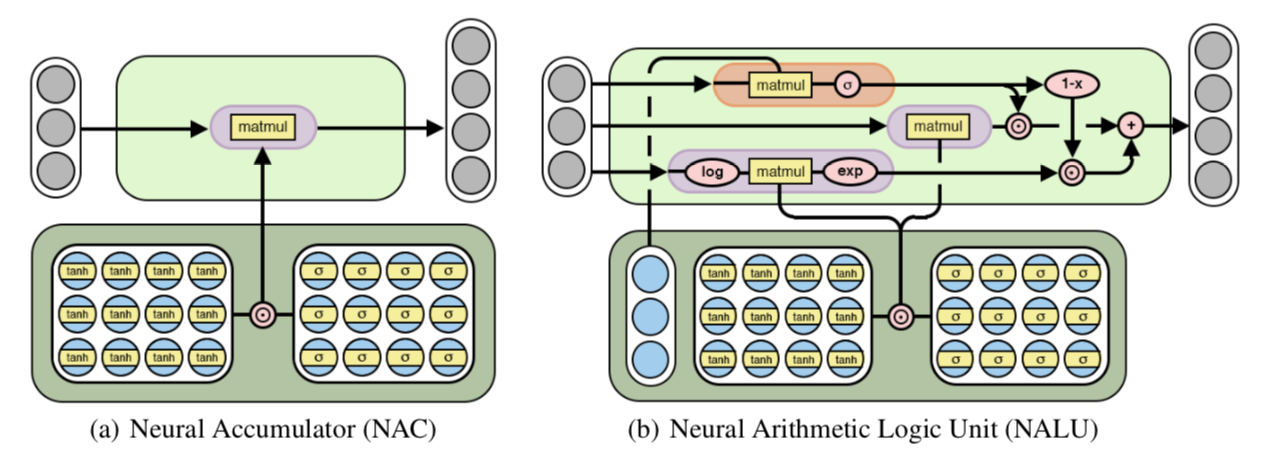

This is a Keras implementation of Neural Arithmetic Logic Units by Andrew Trask, Felix Hill, Scott Reed, Jack Rae, Chris Dyer and Phil Blunsom.

Simply add them as normal layers after importing nalu.py or nac.py.

NALU has several additional parameters, the most important of which is `use_gating`, which controls whether the gating mechanism is applied:

- `use_gating` is `True` by default, enabling the behaviour from the paper.
- Resetting `use_gating` (setting it to `False`) allows the layer to model more complex expressions.
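For intuition about what the gate does, here is a minimal NumPy sketch of the gated NALU forward pass as described in the paper. It is not the code in `nalu.py`: the names `nalu_forward`, `nac_weights`, `W_hat`, `M_hat` and `G` are illustrative, and the parameter values are hand-picked rather than learned. It makes no assumption about what this layer computes when `use_gating` is turned off.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def nac_weights(W_hat, M_hat):
    # Effective NAC weights; the tanh * sigmoid product is biased towards -1, 0, 1.
    return np.tanh(W_hat) * sigmoid(M_hat)


def nalu_forward(x, W_hat, M_hat, G, eps=1e-7):
    W = nac_weights(W_hat, M_hat)
    a = x @ W                                 # additive path (add / subtract)
    m = np.exp(np.log(np.abs(x) + eps) @ W)   # log-space path (multiply / divide)
    g = sigmoid(x @ G)                        # gate choosing between the two paths
    return g * a + (1.0 - g) * m


# Hand-picked (not learned) parameters for a 2-input, 1-output unit.
x = np.array([[2.0, 3.0]])
W_hat = np.full((2, 1), 20.0)    # tanh(20) is approximately 1
M_hat = np.full((2, 1), 20.0)    # sigmoid(20) is approximately 1
G_add = np.full((2, 1), 20.0)    # gate close to 1: additive path dominates
G_mul = np.full((2, 1), -20.0)   # gate close to 0: multiplicative path dominates

y_add = nalu_forward(x, W_hat, M_hat, G_add)   # approximately 2 + 3
y_mul = nalu_forward(x, W_hat, M_hat, G_mul)   # approximately 2 * 3
print(y_add, y_mul)
```

With the gate saturated near 1 the unit behaves like an adder, and near 0 like a multiplier; during training the gate is learned along with the weights.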

```python
from keras.layers import Input
from nalu import NALU

ip = Input(...)
x = NALU(10, use_gating=True)(ip)  # 10 output units, gating enabled
...
```

Requirements:

- Tensorflow (Tested) | Theano
- Keras 2+