This is the official repository with the PyTorch implementation of LW-DETR: A Transformer Replacement to YOLO for Real-Time Detection.

☀️ If you find this work useful for your research, please kindly star our repo and cite our paper! ☀️

- Release a series of real-time detection models in LW-DETR, including LW-DETR-tiny, LW-DETR-small, LW-DETR-medium, LW-DETR-large and LW-DETR-xlarge, named `LWDETR_<size>_60e_coco.pth`. Please refer to Hugging Face to download them.

- Release a series of pretrained LW-DETR models. Please refer to Hugging Face to download them.

[2024/7/15] We present OVLW-DETR, an efficient open-vocabulary detector with outstanding performance and low latency, built upon LW-DETR. It surpasses existing real-time open-vocabulary detectors on the standard zero-shot LVIS benchmark. The source code and pretrained models are coming soon; please stay tuned!

- 1. Introduction

- 2. Installation

- 3. Preparation

- 4. Train

- 5. Eval

- 6. Deploy

- 7. Main Results

- 8. References

- 9. Citation

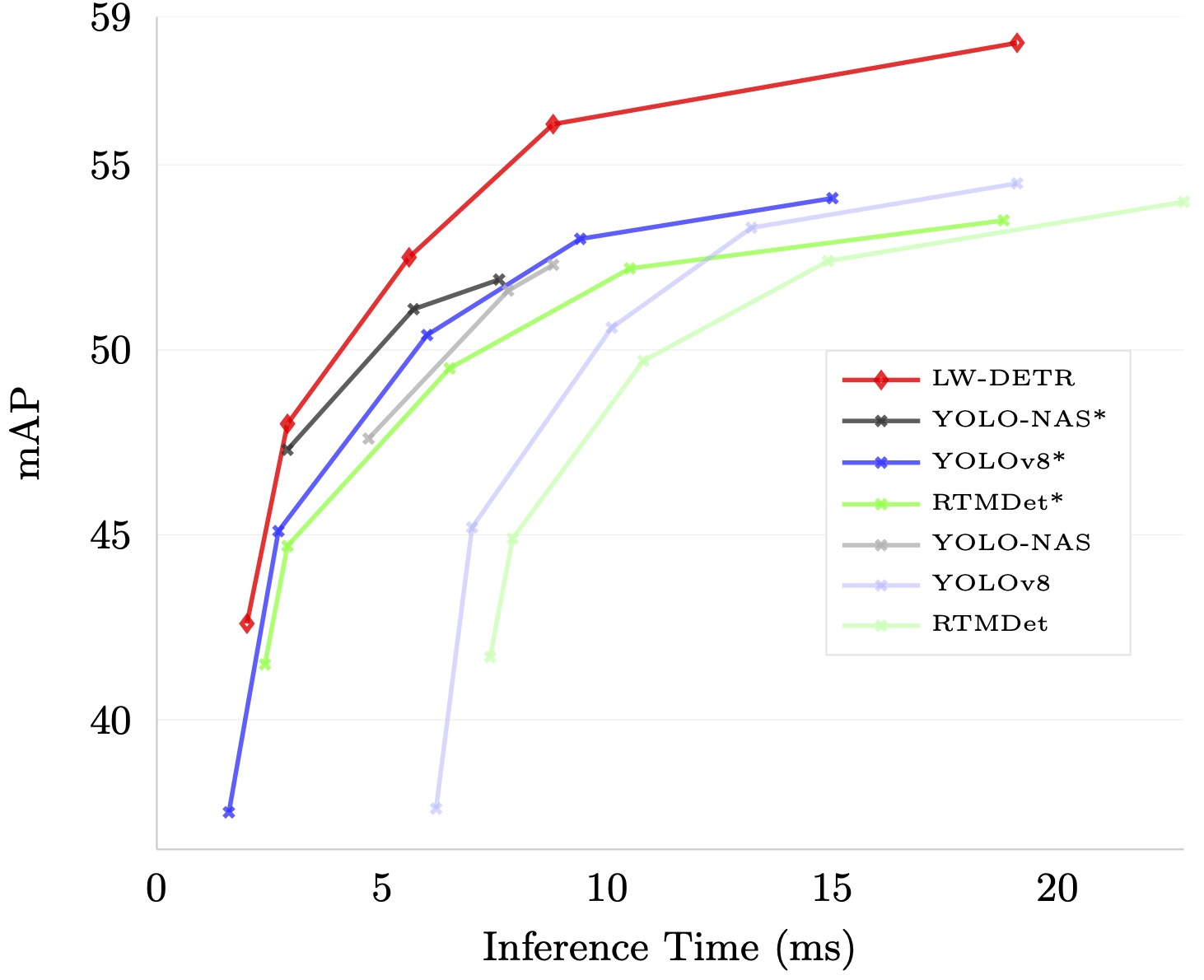

LW-DETR is a lightweight detection transformer that outperforms YOLOs for real-time object detection. The architecture is a simple stack of a ViT encoder, a projector, and a shallow DETR decoder. LW-DETR leverages recent advanced techniques, such as effective training techniques (e.g., an improved loss and pretraining) and interleaved window and global attention, which reduces the complexity of the ViT encoder. LW-DETR improves the ViT encoder by aggregating multi-level feature maps, i.e., the intermediate and final feature maps of the ViT encoder, into richer feature maps, and introduces a window-major feature map organization that makes the interleaved attention computation more efficient. LW-DETR achieves better performance than existing real-time detectors, e.g., YOLO and its variants, on COCO and other benchmark datasets.
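As a rough illustration of the window-major idea, the pure-Python sketch below reorders the tokens of an H×W feature map so that the tokens of each window are stored contiguously. Window attention can then treat every consecutive chunk of window×window tokens as one window, and global attention, being permutation-equivariant, can presumably run on the same reordered sequence as long as position embeddings are reordered the same way. The function names, the 4×4 map, the 2×2 window, and the choice of layers 2 and 5 as global layers are hypothetical and are not taken from the LW-DETR code.

```python
from typing import List


def window_major_order(height: int, width: int, window: int) -> List[int]:
    """Row-major token indices, reordered so each window's tokens are contiguous."""
    if height % window or width % window:
        raise ValueError("height and width must be divisible by window")
    order = []
    # Visit windows in row-major order, then the tokens inside each window row by row.
    for wy in range(0, height, window):
        for wx in range(0, width, window):
            for y in range(wy, wy + window):
                for x in range(wx, wx + window):
                    order.append(y * width + x)
    return order


def invert(order: List[int]) -> List[int]:
    """Inverse permutation: inv[row_major_index] = position in window-major order."""
    inv = [0] * len(order)
    for pos, idx in enumerate(order):
        inv[idx] = pos
    return inv


def attention_schedule(num_layers: int, global_layers: List[int]) -> List[str]:
    """Hypothetical interleaving: listed layers use global attention, the rest window attention."""
    return ["global" if i in global_layers else "window" for i in range(num_layers)]


if __name__ == "__main__":
    h, w, win = 4, 4, 2
    order = window_major_order(h, w, win)
    tokens = [f"t{i}" for i in range(h * w)]
    windowed = [tokens[i] for i in order]
    # Window attention: each consecutive chunk of win*win tokens is one window.
    windows = [windowed[i:i + win * win] for i in range(0, len(windowed), win * win)]
    print(windows[0])  # ['t0', 't1', 't4', 't5']
    # Undo the reordering, e.g. before handing features to a row-major consumer.
    restored = [windowed[p] for p in invert(order)]
    assert restored == tokens
    print(attention_schedule(6, [2, 5]))
```

The design point the sketch tries to capture is that, once tokens are stored window by window, switching between window and global attention needs no reshuffling of memory between layers.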

The code is developed and validated with Python 3.8.19, PyTorch 1.13.0, CUDA 11.6, and TensorRT 8.6.1.6. Newer versions may also work.
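To compare a local environment against the validated versions, a small standard-library script like the one below can help. It is a sketch under assumptions: it expects the TensorRT Python bindings to be installed as the `tensorrt` distribution, it takes the torchvision version from the conda example in this README, and it does not check CUDA (PyTorch reports that separately via `torch.version.cuda`). Since newer versions may also work, a reported mismatch is a hint, not an error.

```python
import platform
from importlib import metadata
from typing import Dict, Optional, Tuple

# Versions the code was validated with, as listed in this README.
VALIDATED = {
    "python": "3.8.19",
    "torch": "1.13.0",
    "torchvision": "0.14.0",
    "tensorrt": "8.6.1.6",
}


def installed_versions() -> Dict[str, Optional[str]]:
    """Installed version of each package in VALIDATED, or None if it is missing."""
    found: Dict[str, Optional[str]] = {"python": platform.python_version()}
    for name in VALIDATED:
        if name == "python":
            continue
        try:
            found[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            found[name] = None
    return found


def mismatches(found: Dict[str, Optional[str]]) -> Dict[str, Tuple[Optional[str], str]]:
    """Packages whose version differs from VALIDATED (local tags such as '+cu116' are ignored)."""
    out = {}
    for name, wanted in VALIDATED.items():
        have = found.get(name)
        base = have.split("+")[0] if have else None
        if base != wanted:
            out[name] = (have, wanted)
    return out


if __name__ == "__main__":
    for name, (have, wanted) in mismatches(installed_versions()).items():
        print(f"{name}: found {have}, validated with {wanted}")
```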

- Create your own Python environment with Anaconda.

conda create -n lwdetr python=3.8.19

conda activate lwdetr

- Clone this repo.

git clone https://github.com/Atten4Vis/LW-DETR.git

cd LW-DETR

- Install PyTorch and torchvision.

Follow the instructions at https://pytorch.org/get-started/locally/.

# an example:

conda install pytorch==1.13.0 torchvision==0.14.0 pytorch-cuda=11.6 -c pytorch -c nvidia

- Install required packages.

For training and evaluation:

pip install -r requirements.txt

For deployment:

Please refer to NVIDIA's documentation for TensorRT installation instructions.

pip install -r deploy/requirements.txt

- Compile CUDA operators

cd models/ops

python setup.py build install

# unit test (all checks should report True)

python test.py

cd ../..

For the MS COCO dataset, please download and extract the COCO 2017 train and val images with annotations from http://cocodataset.org. We expect the following directory structure:

COCODIR/

├── train2017/

├── val2017/

└── annotations/

    ├── instances_train2017.json

    └── instances_val2017.json
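Before training, it can be worth verifying that COCODIR matches this layout. The sketch below (the helper name `missing_coco_entries` is hypothetical) lists every expected entry that does not exist; it only checks existence, not file contents.

```python
import sys
from pathlib import Path
from typing import List

# Paths expected under COCODIR, relative to its root.
EXPECTED = [
    "train2017",
    "val2017",
    "annotations/instances_train2017.json",
    "annotations/instances_val2017.json",
]


def missing_coco_entries(coco_dir: str) -> List[str]:
    """Return the expected COCO entries that do not exist under coco_dir."""
    root = Path(coco_dir)
    return [rel for rel in EXPECTED if not (root / rel).exists()]


if __name__ == "__main__":
    missing = missing_coco_entries(sys.argv[1] if len(sys.argv) > 1 else ".")
    print("layout OK" if not missing else f"missing: {missing}")
```

Usage: `python check_coco.py /path/to/your/COCODIR` (the script name is arbitrary).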

For pretraining on the Objects365 dataset, please download the Objects365 images with annotations from https://www.objects365.org/overview.html.

All the checkpoints can be found on Hugging Face.

- Pretraining on Objects365.

- Pretraining the ViT.

We pretrain the ViT on Objects365 with CAE v2, a masked image modeling (MIM) method, based on the pretrained models. Please refer to the following links to download the pretrained models and put them into `pretrain_weights/`.

| Model | Comment |
|---|---|
| caev2_tiny_300e_objects365 | pretrained ViT model on Objects365 for LW-DETR-tiny/small using CAE v2 |
| caev2_small_300e_objects365 | pretrained ViT model on Objects365 for LW-DETR-medium/large using CAE v2 |
| caev2_base_300e_objects365 | pretrained ViT model on Objects365 for LW-DETR-xlarge using CAE v2 |
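For readers unfamiliar with masked image modeling: its core step is to hide a random subset of image patches and train the model to predict information about the hidden ones. The sketch below shows only that generic masking step. CAE v2's actual masking strategy and prediction targets are not described in this README, and the 14×14 patch grid and 50% mask ratio in the usage lines are illustrative assumptions.

```python
import random
from typing import List, Optional


def random_patch_mask(num_patches: int, mask_ratio: float, seed: Optional[int] = None) -> List[bool]:
    """Per-patch mask (True = masked) with round(num_patches * mask_ratio) masked patches."""
    if not 0.0 <= mask_ratio <= 1.0:
        raise ValueError("mask_ratio must be in [0, 1]")
    rng = random.Random(seed)
    num_masked = round(num_patches * mask_ratio)
    # Sample distinct patch indices uniformly at random.
    masked = set(rng.sample(range(num_patches), num_masked))
    return [i in masked for i in range(num_patches)]


# Illustrative values only: a 14x14 patch grid with half of the patches masked.
mask = random_patch_mask(14 * 14, 0.5, seed=0)
print(sum(mask), "of", len(mask), "patches masked")  # 98 of 196 patches masked
```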

- Pretrained LW-DETR.

We retrain the encoder and train the projector and the decoder on Objects365 in a supervised manner. Please refer to the following links to download the pretrained models and put them into `pretrain_weights/`.

| Model | Comment |
|---|---|
| LWDETR_tiny_30e_objects365 | pretrained LW-DETR-tiny model on Objects365 |
| LWDETR_small_30e_objects365 | pretrained LW-DETR-small model on Objects365 |
| LWDETR_medium_30e_objects365 | pretrained LW-DETR-medium model on Objects365 |
| LWDETR_large_30e_objects365 | pretrained LW-DETR-large model on Objects365 |
| LWDETR_xlarge_30e_objects365 | pretrained LW-DETR-xlarge model on Objects365 |

- Finetuning on COCO.

We finetune the pretrained models on COCO. If you want to reproduce our training, please skip this step. If you want to directly evaluate our trained models, please refer to the following links to download the finetuned models and put them into `output/`.

| Model | Comment |
|---|---|
| LWDETR_tiny_60e_coco | finetuned LW-DETR-tiny model on COCO |
| LWDETR_small_60e_coco | finetuned LW-DETR-small model on COCO |
| LWDETR_medium_60e_coco | finetuned LW-DETR-medium model on COCO |
| LWDETR_large_60e_coco | finetuned LW-DETR-large model on COCO |
| LWDETR_xlarge_60e_coco | finetuned LW-DETR-xlarge model on COCO |
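The download steps in this section place checkpoints in two folders, `pretrain_weights/` and `output/`. A helper like the hypothetical `checkpoint_path` below can build the expected file name from the model size. It assumes the downloaded files keep the names from the tables with a `.pth` extension, as in the released COCO checkpoint names `LWDETR_<size>_60e_coco.pth`.

```python
from pathlib import Path

SIZES = ("tiny", "small", "medium", "large", "xlarge")


def checkpoint_path(size: str, stage: str) -> Path:
    """Expected local path of a downloaded LW-DETR checkpoint (assumed naming).

    stage="objects365" -> pretrain_weights/LWDETR_<size>_30e_objects365.pth
    stage="coco"       -> output/LWDETR_<size>_60e_coco.pth
    """
    if size not in SIZES:
        raise ValueError(f"unknown model size {size!r}; expected one of {SIZES}")
    if stage == "objects365":
        return Path("pretrain_weights") / f"LWDETR_{size}_30e_objects365.pth"
    if stage == "coco":
        return Path("output") / f"LWDETR_{size}_60e_coco.pth"
    raise ValueError("stage must be 'objects365' or 'coco'")


if __name__ == "__main__":
    # Report which finetuned COCO checkpoints are already in place.
    for size in SIZES:
        path = checkpoint_path(size, "coco")
        print(path, "found" if path.exists() else "missing")
```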

You can directly run `scripts/lwdetr_<model_size>_coco_train.sh` to train on the COCO dataset.
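All five training scripts share one naming pattern and, in the commands in this section, take the COCO directory as their only argument, so they are easy to drive from Python, e.g. to queue several sizes in one job. The wrapper below is a hypothetical convenience helper, not part of the repository; with `dry_run=True` (the default) it only prints the commands.

```python
import shlex
import subprocess
from typing import List

SIZES = ("tiny", "small", "medium", "large", "xlarge")


def train_command(size: str, coco_dir: str) -> List[str]:
    """Command line for scripts/lwdetr_<size>_coco_train.sh."""
    if size not in SIZES:
        raise ValueError(f"unknown model size {size!r}; expected one of {SIZES}")
    return ["sh", f"scripts/lwdetr_{size}_coco_train.sh", coco_dir]


def train_sizes(sizes: List[str], coco_dir: str, dry_run: bool = True) -> None:
    """Run (or just print) the training script for each size, one after another."""
    for size in sizes:
        cmd = train_command(size, coco_dir)
        if dry_run:
            print(shlex.join(cmd))
        else:
            # check=True stops the loop as soon as a training run fails.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    train_sizes(["tiny", "small"], "/path/to/your/COCODIR")
```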

Train a LW-DETR-tiny model

sh scripts/lwdetr_tiny_coco_train.sh /path/to/your/COCODIR

Train a LW-DETR-small model

sh scripts/lwdetr_small_coco_train.sh /path/to/your/COCODIR

Train a LW-DETR-medium model

sh scripts/lwdetr_medium_coco_train.sh /path/to/your/COCODIR

Train a LW-DETR-large model

sh scripts/lwdetr_large_coco_train.sh /path/to/your/COCODIR

Train a LW-DETR-xlarge model

sh scripts/lwdetr_xlarge_coco_train.sh /path/to/your/COCODIR

You can directly run `scripts/lwdetr_<model_size>_coco_eval.sh` to evaluate on the COCO dataset. Please refer to 3. Preparation to download the LW-DETR models.

Evaluate our released LW-DETR-tiny model

sh scripts/lwdetr_tiny_coco_eval.sh /path/to/your/COCODIR /path/to/your/checkpoint

Evaluate our released LW-DETR-small model

sh scripts/lwdetr_small_coco_eval.sh /path/to/your/COCODIR /path/to/your/checkpoint

Evaluate our released LW-DETR-medium model

sh scripts/lwdetr_medium_coco_eval.sh /path/to/your/COCODIR /path/to/your/checkpoint

Evaluate our released LW-DETR-large model

sh scripts/lwdetr_large_coco_eval.sh /path/to/your/COCODIR /path/to/your/checkpoint

Evaluate our released LW-DETR-xlarge model

sh scripts/lwdetr_xlarge_coco_eval.sh /path/to/your/COCODIR /path/to/your/checkpoint

You can run `scripts/lwdetr_<model_size>_coco_export.sh` to export models for deployment. Before running it, please make sure that the TensorRT and cuDNN environment variables are set correctly.
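Which variables need to be set depends on how TensorRT and cuDNN were installed. As a rough pre-flight check, the sketch below assumes a Linux tar-file style install in which the TensorRT and CUDA/cuDNN library folders are added to `LD_LIBRARY_PATH` and contain "tensorrt", "cuda", or "cudnn" in their names. Both the variable and the naming heuristic are assumptions, not requirements stated by the export scripts.

```python
import os
from typing import Dict, List, Optional


def tensorrt_env_problems(env: Optional[Dict[str, str]] = None) -> List[str]:
    """Heuristic check of LD_LIBRARY_PATH before exporting (assumed Linux setup)."""
    env = dict(os.environ) if env is None else env
    lib_dirs = [p.lower() for p in env.get("LD_LIBRARY_PATH", "").split(os.pathsep) if p]
    problems = []
    if not any("tensorrt" in p for p in lib_dirs):
        problems.append("no TensorRT library directory in LD_LIBRARY_PATH")
    if not any("cudnn" in p or "cuda" in p for p in lib_dirs):
        problems.append("no CUDA/cuDNN library directory in LD_LIBRARY_PATH")
    return problems


if __name__ == "__main__":
    for problem in tensorrt_env_problems():
        print("warning:", problem)
```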

Export a LW-DETR-tiny model

# export ONNX model

sh scripts/lwdetr_tiny_coco_export.sh /path/to/your/COCODIR /path/to/your/checkpoint

# export the ONNX model and also convert it to a TensorRT engine

sh scripts/lwdetr_tiny_coco_export.sh /path/to/your/COCODIR /path/to/your/checkpoint --trt

Export a LW-DETR-small model

# export ONNX model

sh scripts/lwdetr_small_coco_export.sh /path/to/your/COCODIR /path/to/your/checkpoint

# export the ONNX model and also convert it to a TensorRT engine

sh scripts/lwdetr_small_coco_export.sh /path/to/your/COCODIR /path/to/your/checkpoint --trt

Export a LW-DETR-medium model

# export ONNX model

sh scripts/lwdetr_medium_coco_export.sh /path/to/your/COCODIR /path/to/your/checkpoint

# export the ONNX model and also convert it to a TensorRT engine

sh scripts/lwdetr_medium_coco_export.sh /path/to/your/COCODIR /path/to/your/checkpoint --trt

Export a LW-DETR-large model

# export ONNX model

sh scripts/lwdetr_large_coco_export.sh /path/to/your/COCODIR /path/to/your/checkpoint

# export the ONNX model and also convert it to a TensorRT engine

sh scripts/lwdetr_large_coco_export.sh /path/to/your/COCODIR /path/to/your/checkpoint --trt

Export a LW-DETR-xlarge model

# export ONNX model

sh scripts/lwdetr_xlarge_coco_export.sh /path/to/your/COCODIR /path/to/your/checkpoint

# export the ONNX model and also convert it to a TensorRT engine

sh scripts/lwdetr_xlarge_coco_export.sh /path/to/your/COCODIR /path/to/your/checkpoint --trt

You can use the deploy/benchmark.py tool to benchmark inference latency.
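For context on what a latency benchmark typically involves, here is a generic timing loop: warm-up iterations first, then many timed runs summarized by mean, median, and minimum. This is an illustrative sketch, not the logic of deploy/benchmark.py. When timing GPU work, the callable must block until the GPU has finished (for example, by calling `torch.cuda.synchronize()` inside it); otherwise only the kernel launch is measured.

```python
import statistics
import time
from typing import Callable, Dict


def benchmark(fn: Callable[[], object], warmup: int = 10, iters: int = 100) -> Dict[str, float]:
    """Time fn() after a warm-up phase and return latency statistics in milliseconds."""
    if iters < 1:
        raise ValueError("iters must be at least 1")
    for _ in range(warmup):
        fn()  # warm-up runs are not timed (caches, lazy initialization, clocks)
    samples = []
    for _ in range(iters):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    return {
        "mean_ms": statistics.mean(samples),
        "median_ms": statistics.median(samples),
        "min_ms": min(samples),
    }


if __name__ == "__main__":
    stats = benchmark(lambda: sum(i * i for i in range(10_000)), warmup=5, iters=50)
    print({k: round(v, 3) for k, v in stats.items()})
```

The median is usually the more robust summary, since occasional scheduler hiccups inflate the mean.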

# evaluate and benchmark the latency of an ONNX model

python deploy/benchmark.py --path=/path/to/your/onnxmodel --coco_path=/path/to/your/COCODIR --run_benchmark

# evaluate and benchmark the latency of a TensorRT engine

python deploy/benchmark.py --path=/path/to/your/trtengine --coco_path=/path/to/your/COCODIR --run_benchmark

The main results on the COCO dataset are listed below. We report the mAP from the original paper, followed in parentheses by the mAP obtained with this re-implementation.

| Method | Pretraining | Params (M) | FLOPs (G) | Model Latency (ms) | Total Latency (ms) | mAP | Download |
|---|---|---|---|---|---|---|---|
| LW-DETR-tiny | ✔ | 12.1 | 11.2 | 2.0 | 2.0 | 42.6(42.9) | Link |
| LW-DETR-small | ✔ | 14.6 | 16.6 | 2.9 | 2.9 | 48.0(48.1) | Link |
| LW-DETR-medium | ✔ | 28.2 | 42.8 | 5.6 | 5.6 | 52.5(52.6) | Link |
| LW-DETR-large | ✔ | 46.8 | 71.6 | 8.8 | 8.8 | 56.1(56.1) | Link |
| LW-DETR-xlarge | ✔ | 118.0 | 174.2 | 19.1 | 19.1 | 58.3(58.3) | Link |
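Assuming the model latency in the table is per image (batch size 1), it can be turned into an approximate throughput with FPS = 1000 / latency (ms). The arithmetic below applies this to the paper's numbers. It ignores any work outside the measured latency, so treat the results as rough upper bounds rather than measured throughput.

```python
# (model latency in ms, paper mAP) copied from the results table.
RESULTS = {
    "tiny": (2.0, 42.6),
    "small": (2.9, 48.0),
    "medium": (5.6, 52.5),
    "large": (8.8, 56.1),
    "xlarge": (19.1, 58.3),
}


def throughput_fps(latency_ms: float) -> float:
    """Images per second if each image takes latency_ms and nothing else runs."""
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms


for name, (latency_ms, map_paper) in RESULTS.items():
    # e.g. "LW-DETR-tiny: 2.0 ms -> ~500 FPS, mAP 42.6"
    print(f"LW-DETR-{name}: {latency_ms} ms -> ~{throughput_fps(latency_ms):.0f} FPS, mAP {map_paper}")
```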

Our project is built upon the following public papers with code:

If you find this code useful in your research, please kindly consider citing our paper:

@article{chen2024lw,
  title={LW-DETR: A Transformer Replacement to YOLO for Real-Time Detection},
  author={Chen, Qiang and Su, Xiangbo and Zhang, Xinyu and Wang, Jian and Chen, Jiahui and Shen, Yunpeng and Han, Chuchu and Chen, Ziliang and Xu, Weixiang and Li, Fanrong and others},
  journal={arXiv preprint arXiv:2406.03459},
  year={2024}
}