This repository contains the authors' implementation of UVStyle-Net: Unsupervised Few-shot Learning of 3D Style Similarity Measure for B-Reps.

- About UVStyle-Net

- Citing this Work

- Quickstart

- Full Datasets

- Other Experiments

- Using Your Own Data

- License

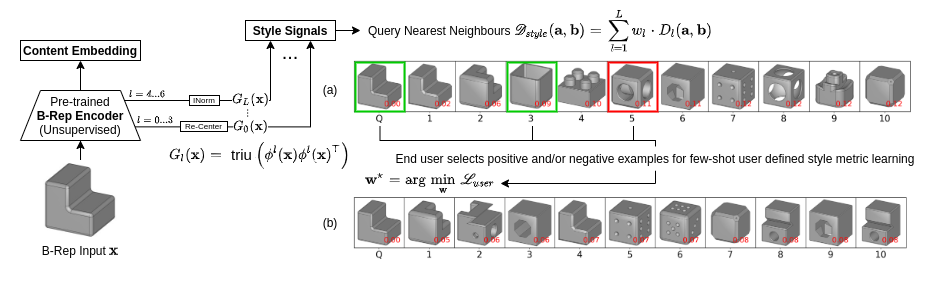

UVStyle-Net is an unsupervised style similarity learning method for Boundary Representations (B-Reps)/CAD models, which can be tailored to an end-user's interpretation of style by supplying only a few examples.

The way it works can be summarized as follows:

- Train the B-Rep encoder (we use UV-Net without edge features) using content classification (supervised), or point cloud reconstruction (unsupervised)

- Extract the Gram matrices of the activations in each layer of the encoder

- Define the style distance for two solids as a weighted sum of distances (Euclidean, cosine, etc.) between the Gram matrices of corresponding layers, initially using uniform weights

- OPTIONAL: Determine the best weights for the style distance from a few user-selected examples that share a common style
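
The style distance described in the steps above can be sketched in a few lines of NumPy. This is a minimal illustration, not the repository's implementation. It assumes that each layer's activations for a solid are given as a (num_samples, num_channels) array, normalizes each Gram matrix by the number of samples, and flattens it before comparing. The function names are hypothetical.

```python
import numpy as np


def gram_matrix(activations):
    """Gram matrix of one layer's activations.

    activations: (num_samples, num_channels) array, one row per sample
    (an assumed layout, e.g. one row per sampled UV point or graph node).
    """
    x = np.asarray(activations, dtype=float)
    return x.T @ x / x.shape[0]


def layer_distance(g_a, g_b, metric="cosine"):
    """Distance between two flattened Gram matrices."""
    a, b = g_a.ravel(), g_b.ravel()
    if metric == "euclidean":
        return float(np.linalg.norm(a - b))
    if metric == "cosine":
        return float(1.0 - a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))
    raise ValueError(f"unknown metric: {metric}")


def style_distance(acts_a, acts_b, weights=None, metric="cosine"):
    """Weighted sum of per-layer Gram distances; uniform weights by default."""
    dists = np.array([layer_distance(gram_matrix(a), gram_matrix(b), metric)
                      for a, b in zip(acts_a, acts_b)])
    if weights is None:
        weights = np.full(len(dists), 1.0 / len(dists))
    return float(np.dot(weights, dists))


# Toy example: two "solids", each with activations from two layers
rng = np.random.default_rng(0)
solid_a = [rng.normal(size=(100, 8)), rng.normal(size=(100, 16))]
solid_b = [rng.normal(size=(100, 8)) * 2.0, rng.normal(size=(100, 16))]
print(style_distance(solid_a, solid_b, metric="euclidean"))
```

Setting a layer's weight to zero removes its contribution entirely. This is the kind of adjustment that the optional few-shot step makes automatically.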

For a 5-minute overview, take a look at our ICCV presentation video and poster; for full details, see the paper.

If you use any of the code or techniques from the paper, please cite the following:

Meltzer, Peter, Hooman Shayani, Amir Khasahmadi, Pradeep Kumar Jayaraman, Aditya Sanghi, and Joseph Lambourne. ‘UVStyle-Net: Unsupervised Few-Shot Learning of 3D Style Similarity Measure for B-Reps’. In IEEE/CVF International Conference on Computer Vision (ICCV), 2021. http://arxiv.org/abs/2105.02961.

    @inproceedings{meltzer_uvstyle-net_2021,
      title = {{UVStyle}-{Net}: {Unsupervised} {Few}-shot {Learning} of {3D} {Style} {Similarity} {Measure} for {B}-{Reps}},
      shorttitle = {{UVStyle}-{Net}},
      url = {http://arxiv.org/abs/2105.02961},
      booktitle = {{IEEE}/{CVF} {International} {Conference} on {Computer} {Vision} ({ICCV})},
      author = {Meltzer, Peter and Shayani, Hooman and Khasahmadi, Amir and Jayaraman, Pradeep Kumar and Sanghi, Aditya and Lambourne, Joseph},
      year = {2021},
      note = {arXiv: 2105.02961},
      keywords = {Computer Science - Computer Vision and Pattern Recognition, 68T07, 68T10, Computer Science - Machine Learning, I.3.5, I.5.1},
    }

We recommend using a virtual environment such as conda:

    $ conda create --name uvstylenet python=3.7
    WINDOWS: $ activate uvstylenet
    LINUX, macOS: $ source activate uvstylenet

- swap dgl in requirements.txt (line 3) for the package matching the CUDA version on your system, e.g. dgl-cu102, dgl-cu100, etc. (for CPU only, use dgl)
- for GPU use, you may need to install cudatoolkit and/or set the environment variable LD_LIBRARY_PATH if you have not done so already

Install the remaining requirements:

    $ pip install -r requirements.txt

The download script requires a 7z extractor to be installed:

    # Linux
    $ sudo apt-get install p7zip-full

    # macOS
    $ brew install p7zip

For Windows, visit https://www.7-zip.org/download.html.

To get started quickly and interact with the models, we recommend downloading only the pre-computed Gram matrices for the SolidLETTERS test set, along with the pre-trained SolidLETTERS model, the test set dgl binary files, and the test set meshes (to assist visualization). You will need a little over 7 GB of free space for this data. All of these files can be downloaded into the correct directory structure with:

    $ python download_data.py quickstart

All dashboards use streamlit and can be run from the project root.

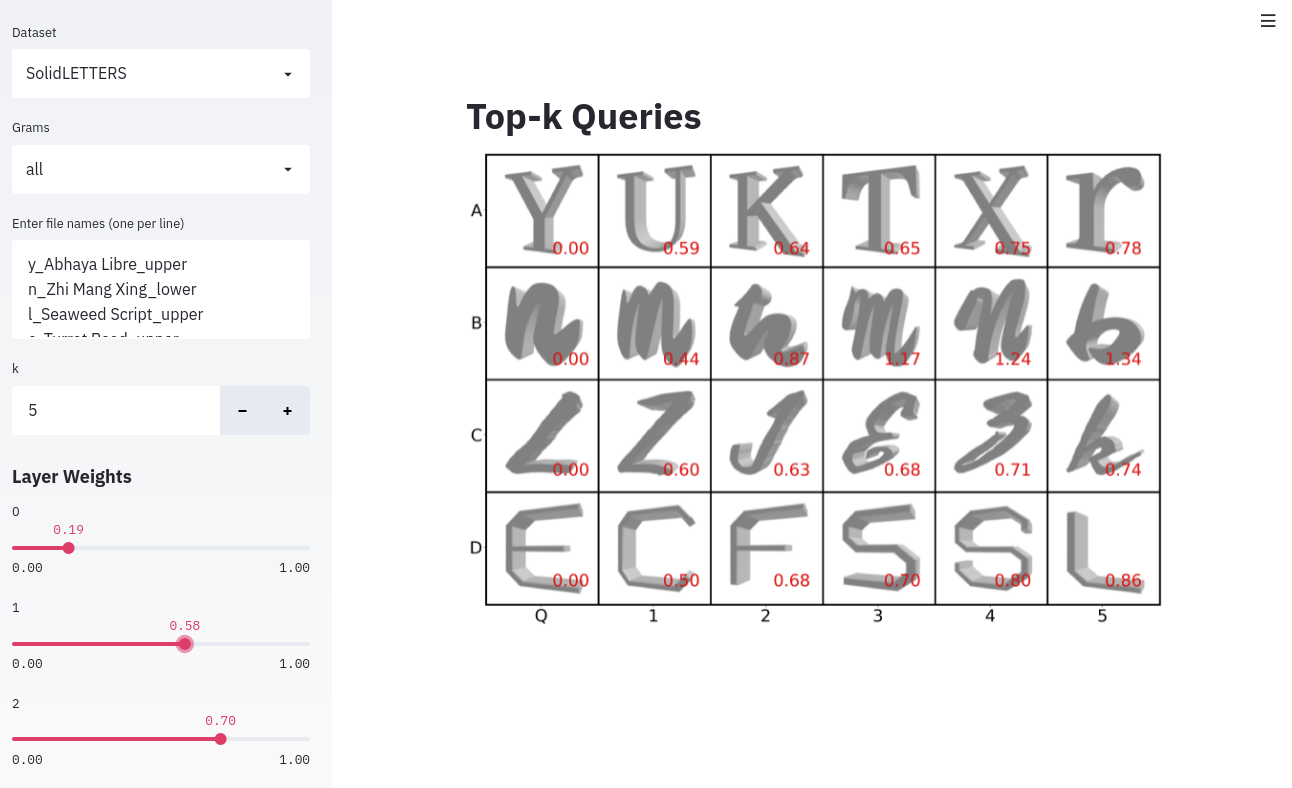

Experiment with manually adjusting the layer weights used to compute the style loss, and observe their effect on the nearest neighbours. Available for SolidLETTERS and ABC datasets.

    $ streamlit run dashboards/top_k.py

Visualize the xyz position gradients of the style loss between a pair of solids. Black lines indicate the direction and magnitude of the gradient of the loss with respect to each individual sampled point (i.e. which direction to move each sampled point to better match the style between the solids). Available for SolidLETTERS.
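
The kind of gradient this dashboard displays can be illustrated with a deliberately simplified loss. This toy example is not the dashboard's code. It makes two assumptions: the loss uses only a Gram matrix of the raw xyz coordinates (rather than the encoder's layer activations), and the distance is the squared Frobenius distance, which gives the closed-form gradient stated in the docstring. The loss decreases when points take a small step along the negative gradient.

```python
import numpy as np


def gram(points):
    """Gram matrix over the xyz channels of sampled points, shape (3, 3)."""
    return points.T @ points / points.shape[0]


def style_loss_and_grad(points_a, points_b):
    """L = ||G(a) - G(b)||_F^2 and its gradient with respect to points_a.

    With G(x) = x^T x / N and D = G(a) - G(b) (symmetric),
    dL/da = (4 / N) * a @ D, one 3-vector per sampled point.
    """
    diff = gram(points_a) - gram(points_b)
    loss = float(np.sum(diff ** 2))
    grad = 4.0 / points_a.shape[0] * points_a @ diff
    return loss, grad


rng = np.random.default_rng(0)
a = rng.normal(size=(50, 3))
b = rng.normal(size=(50, 3)) * np.array([1.0, 2.0, 0.5])
loss, grad = style_loss_and_grad(a, b)
# A small step along -grad moves each point of `a` towards the style of `b`
step = 1e-3
print(loss, style_loss_and_grad(a - step * grad, b)[0])
```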

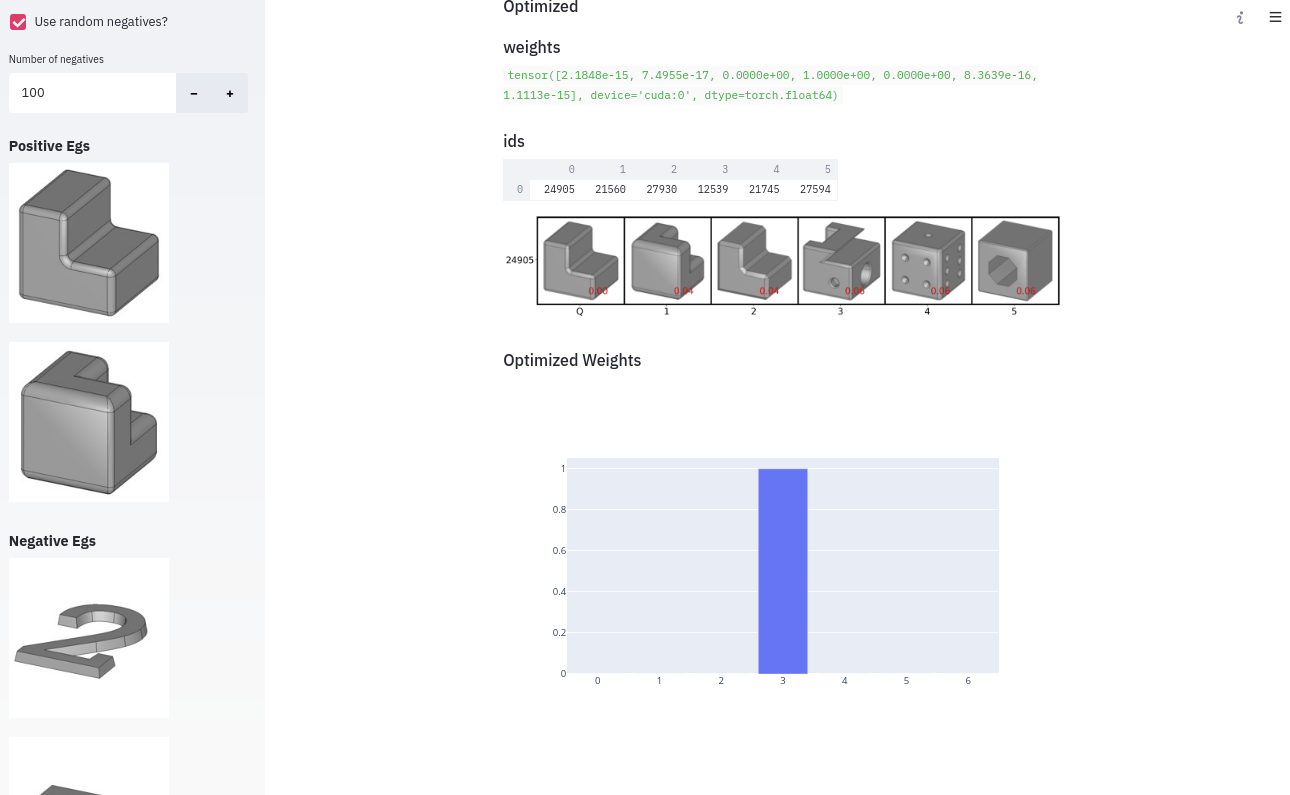

    $ streamlit run dashboards/visualize_style_loss.py

Experiment with different positive and negative examples for few-shot optimization of the user-defined style loss. Select negatives manually, or use examples drawn at random from the rest of the dataset. Available for SolidLETTERS and ABC datasets.
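
One simple way to turn a few positive and negative examples into layer weights is sketched below. It is a hypothetical heuristic, not the optimization used in the paper or in few_shot_optimization.py. It favours layers whose Gram distances are small among the positives and large between positives and negatives. An entropy term, controlled by the made-up `temperature` parameter, stops the weights from collapsing onto a single layer. It also assumes that the per-layer distances are on comparable scales (e.g. cosine distances).

```python
import numpy as np


def few_shot_layer_weights(pos_dists, neg_dists, temperature=0.1):
    """Hypothetical layer weighting from a handful of user-selected examples.

    pos_dists: (num_pos_pairs, num_layers) per-layer distances between positives
    neg_dists: (num_neg_pairs, num_layers) per-layer positive-negative distances
    Minimises sum_l w_l * (mean_pos_l - mean_neg_l) - temperature * H(w)
    over the probability simplex; the minimiser is a softmax.
    """
    cost = np.mean(pos_dists, axis=0) - np.mean(neg_dists, axis=0)
    logits = -cost / temperature
    logits -= logits.max()  # subtract the max for numerical stability
    weights = np.exp(logits)
    return weights / weights.sum()


# Layer 0 separates the style (positives close, negatives far); layer 1 does not
pos = np.array([[0.1, 0.5], [0.2, 0.6]])
neg = np.array([[0.8, 0.6], [0.9, 0.5]])
w = few_shot_layer_weights(pos, neg)
print(w)
```

A large temperature pushes the weights back towards the uniform weighting used by default. A small temperature concentrates them on the most discriminative layer.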

    $ streamlit run dashboards/few_shot_optimization.py

To download the full datasets in all formats, you will need approximately 111 GB (plus approximately 10 GB for temporary files during extraction). A breakdown is given below:

- SolidLETTERS (46 GB for complete set)
  - grams: 7.5 GB
  - dgl binary files: 7.7 GB
  - mesh: 9.9 GB
  - point clouds: 3.3 GB
  - smt: 18 GB
  - pngs: 84 MB
- ABC (65 GB for complete set)
  - grams: 12.6 GB (uv-net: 8 GB, psnet: 4.6 GB)
  - dgl binary files: 20 GB
  - mesh: 13 GB
  - point clouds: 2.3 GB
  - smt: 18 GB
  - pngs: 513 MB
  - subset labels: 16 MB

All data/parts you require may be downloaded using the following script:

    $ python download_data.py
    usage: download_data.py [-h] [--all] [--grams] [--dgl] [--mesh] [--pc] [--smt]
                            [--pngs] [--labels]
                            {quickstart,models,solid_letters,abc}

    positional arguments:
      {quickstart,models,solid_letters,abc}

    optional arguments:
      -h, --help            show this help message and exit

    solid_letters, abc:
      --all
      --grams
      --dgl
      --mesh
      --pc
      --smt
      --pngs

    abc only:
      --labels

    To only download files necessary for the quickstart above use command quickstart. For the pre-trained
    models use command models. To download SolidLETTERS or ABC datasets, use command
    solid_letters or abc accordingly, followed by the arguments indicating the format(s).

    Examples:

    # Pre-trained models
    $ python download_data.py models

    # Complete SolidLETTERS dataset in all formats
    $ python download_data.py solid_letters --all

    # ABC mesh and point clouds only
    $ python download_data.py abc --mesh --pc

For the linear probes experiment, ensure you have the Gram matrices for the SolidLETTERS subset in PROJECT_ROOT/data/SolidLETTERS/grams/subset.

    $ python experiments/linear_probes.py

|   | layer | linear_probe | linear_probe_err |
|---|---|---|---|
| 0 | 0_feats | 0.992593 | 0.0148148 |
| 1 | 1_conv1 | 1 | 0 |
| 2 | 2_conv2 | 1 | 0 |
| 3 | 3_conv3 | 1 | 0 |
| 4 | 4_fc | 0.977778 | 0.0181444 |
| 5 | 5_GIN_1 | 0.940741 | 0.0181444 |
| 6 | 6_GIN_2 | 0.874074 | 0.0296296 |
| 7 | content | 0.755556 | 0.0377705 |
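
A linear probe of this kind can be sketched as follows. This is an assumption, not a description of linear_probes.py. The sketch fits a one-vs-rest ridge-regression classifier (a stand-in for whatever linear model the script uses) on one layer's flattened Gram matrices, with k-fold cross-validation. It reports the mean accuracy and the standard deviation across folds. How linear_probe_err is computed is not stated in this README.

```python
import numpy as np


def ridge_probe(features, labels, num_folds=5, alpha=1e-3, seed=0):
    """k-fold accuracy (mean, std across folds) of a one-vs-rest ridge linear probe."""
    x = np.asarray(features, dtype=float)
    y = np.asarray(labels)
    classes = np.unique(y)
    folds = np.array_split(np.random.default_rng(seed).permutation(len(y)), num_folds)
    scores = []
    for k, test_idx in enumerate(folds):
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != k])
        # Standardise with training-fold statistics, then append a bias column
        mu, sd = x[train_idx].mean(axis=0), x[train_idx].std(axis=0) + 1e-8
        a_train = np.hstack([(x[train_idx] - mu) / sd, np.ones((len(train_idx), 1))])
        a_test = np.hstack([(x[test_idx] - mu) / sd, np.ones((len(test_idx), 1))])
        # One-hot targets and the closed-form ridge solution (A^T A + alpha I)^-1 A^T Y
        targets = (y[train_idx, None] == classes[None, :]).astype(float)
        w = np.linalg.solve(a_train.T @ a_train + alpha * np.eye(a_train.shape[1]),
                            a_train.T @ targets)
        predictions = classes[np.argmax(a_test @ w, axis=1)]
        scores.append(np.mean(predictions == y[test_idx]))
    return float(np.mean(scores)), float(np.std(scores))


# Toy data: flattened 4x4 "Gram matrices" for two well-separated styles
rng = np.random.default_rng(1)
feats = np.vstack([rng.normal(-2.0, 1.0, size=(30, 16)),
                   rng.normal(2.0, 1.0, size=(30, 16))])
labels = np.array([0] * 30 + [1] * 30)
print(ridge_probe(feats, labels))
```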

For the font selection experiment, ensure you have the Gram matrices for the complete SolidLETTERS test set in PROJECT_ROOT/data/SolidLETTERS/grams/all.

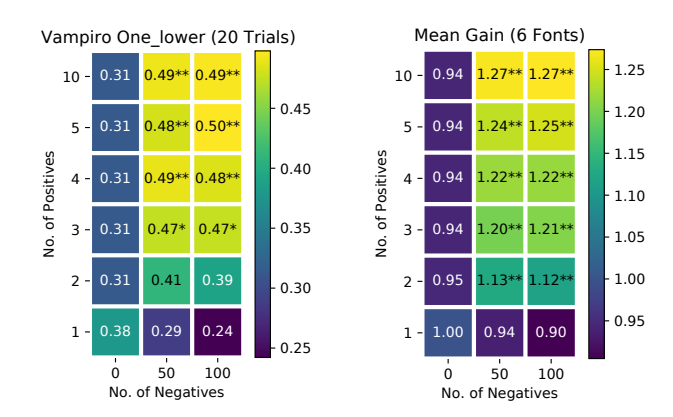

First, perform the optimizations and hits@10 scoring (if you run into memory problems, please reduce --num_threads):

    $ python experiments/font_selection_optimize.py
    usage: font_selection_optimize.py [-h] [--exp_name EXP_NAME]
                                      [--num_threads NUM_THREADS]

    optional arguments:
      -h, --help            show this help message and exit
      --exp_name EXP_NAME   experiment name - results for each font/trial will be
                            saved into this directory (default: SolidLETTERS-all)
      --num_threads NUM_THREADS
                            number of concurrent threads (default: 6)
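
Retrieval scores such as hits@10 can be computed from a pairwise style-distance matrix. The sketch below assumes that hits@k means the fraction of each query's k nearest neighbours (excluding the query itself) that share its label, such as its font, averaged over queries. The exact definition used by font_selection_optimize.py may differ.

```python
import numpy as np


def hits_at_k(dist_matrix, labels, k=10):
    """Mean fraction of each query's k nearest neighbours that share its label."""
    d = np.array(dist_matrix, dtype=float)  # copy, so the caller's matrix is untouched
    np.fill_diagonal(d, np.inf)             # a query is not its own neighbour
    labels = np.asarray(labels)
    neighbours = np.argsort(d, axis=1)[:, :k]
    return float(np.mean(labels[neighbours] == labels[:, None]))


# Toy example: 1-D "style embeddings" forming two clusters (two fonts)
points = np.array([0.0, 0.1, 0.2, 10.0, 10.1, 10.2])
fonts = np.array([0, 0, 0, 1, 1, 1])
dists = np.abs(points[:, None] - points[None, :])
print(hits_at_k(dists, fonts, k=2), hits_at_k(dists, fonts, k=3))
```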

Next collate all results and produce the heatmaps (figures will be saved to the experiments directory):

    $ python experiments/font_selection_optimize_collate_and_plot.py
    usage: font_selection_optimize_collate_and_plot.py [-h] [--exp_name EXP_NAME]

    optional arguments:
      -h, --help            show this help message and exit
      --exp_name EXP_NAME   experiment name - font scores will be read from this
                            directory (default: SolidLETTERS-all)

For the ABC logistic regression experiment, ensure you have the UVStyle-Net Gram matrices for the complete ABC dataset in PROJECT_ROOT/data/ABC/grams/all, as well as the PSNet* Gram matrices in PROJECT_ROOT/psnet_data/ABC/grams/all. You will also need the labeled subset pngs in PROJECT_ROOT/data/ABC/labeled_pngs.

First, perform the logistic regression and log the results for each trial (if you run into memory problems, please reduce --num_threads):

    $ python experiments/abc_logistic_regression.py
    usage: abc_logistic_regression.py [-h] [--num_threads NUM_THREADS]

    optional arguments:
      -h, --help            show this help message and exit
      --num_threads NUM_THREADS
                            number of concurrent threads (default: 5)

Next collate all results and produce the comparison table:

    $ python experiments/abc_logistic_regression_collate_scores.py

|   | cats | UVStyle-Net | PSNet* | diff |
|---|---|---|---|---|
| 2 | flat v electric | 0.789 ± 0.034 | 0.746 ± 0.038 | 0.0428086 |
| 0 | free_form v tubular | 0.839 ± 0.011 | 0.808 ± 0.023 | 0.0308303 |
| 1 | angular v rounded | 0.805 ± 0.010 | 0.777 ± 0.020 | 0.0279178 |
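
In this table, diff equals the UVStyle-Net mean minus the PSNet* mean (for example, 0.789 - 0.746 ≈ 0.043 for flat v electric), and the rows are sorted by diff in descending order. The sketch below shows a hypothetical collation step that produces rows in this format. The per-trial accuracies in the example are made up purely to illustrate the output shape. Using the population standard deviation (np.std) is also an assumption.

```python
import numpy as np


def collate(results):
    """results: {category pair: (uvstylenet_trial_accs, psnet_trial_accs)}.

    Returns rows (cats, 'mean ± std', 'mean ± std', diff) sorted by diff,
    where diff = mean(UVStyle-Net) - mean(PSNet*). np.std is the population
    standard deviation; the repository's own script may use another estimator.
    """
    rows = []
    for cats, (uv, ps) in results.items():
        uv, ps = np.asarray(uv, dtype=float), np.asarray(ps, dtype=float)
        rows.append((cats,
                     f"{uv.mean():.3f} ± {uv.std():.3f}",
                     f"{ps.mean():.3f} ± {ps.std():.3f}",
                     float(uv.mean() - ps.mean())))
    return sorted(rows, key=lambda row: row[3], reverse=True)


# Made-up per-trial accuracies, only to show the output format
rows = collate({"a v b": ([0.80, 0.90], [0.70, 0.70]),
                "c v d": ([0.50, 0.50], [0.50, 0.40])})
for row in rows:
    print(row)
```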

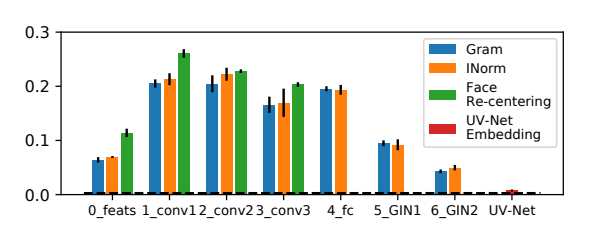

For the normalization comparison, ensure you have all versions of the Gram matrices for the complete SolidLETTERS test set (all_raw, all_inorm_only, all_fnorm_only, all); see above.

Then run the logistic regression probes on each version of the Gram matrices and log the results:

    $ python experiments/compare_normalization.py

Next collate all results and produce the comparison chart (plot saved to the experiments directory):

    $ python experiments/compare_normalization_plot.py
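
The four directory names suggest combinations of two normalizations. The sketch below assumes that "inorm" means instance normalization of each channel's activations before the Gram matrix is formed, and that "fnorm" means scaling the Gram matrix to unit Frobenius norm. This reading is inferred from the names and is not confirmed by the repository.

```python
import numpy as np

# Assumed meaning of the four directory names: (instance norm, Frobenius norm)
VARIANTS = {
    "all_raw": (False, False),
    "all_inorm_only": (True, False),
    "all_fnorm_only": (False, True),
    "all": (True, True),
}


def gram_variant(activations, inorm, fnorm, eps=1e-8):
    """Gram matrix of (num_samples, num_channels) activations with optional normalizations."""
    x = np.asarray(activations, dtype=float)
    if inorm:
        # Instance normalization: zero mean, unit variance per channel over samples
        x = (x - x.mean(axis=0)) / (x.std(axis=0) + eps)
    g = x.T @ x / x.shape[0]
    if fnorm:
        # Scale the Gram matrix to unit Frobenius norm
        g = g / (np.linalg.norm(g) + eps)
    return g


rng = np.random.default_rng(0)
acts = rng.normal(loc=3.0, scale=5.0, size=(200, 4))
grams = {name: gram_variant(acts, *flags) for name, flags in VARIANTS.items()}
for name, g in grams.items():
    print(name, np.round(np.linalg.norm(g), 3))
```

Under this reading, only the fully normalized variant is invariant to a global rescaling of the activations.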

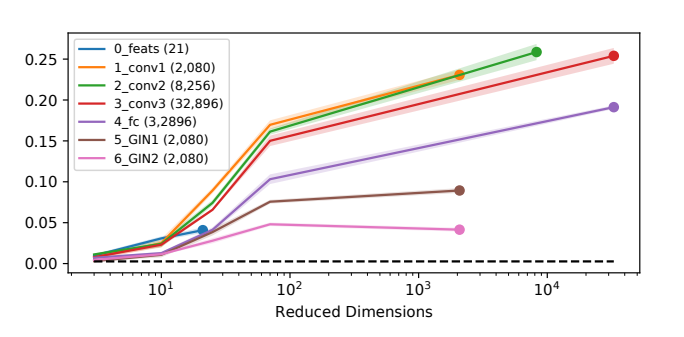

For the dimension reduction experiment, first run PCA and the probes for each target number of dimensions:

    $ python experiments/dimension_reduction_probes.py

Next process the results and create the plot (figure will be saved to the experiments directory):

    $ python experiments/dimension_reduction_plot.py
    usage: dimension_reduction_plot.py [-h] [--include_content]

    optional arguments:
      -h, --help            show this help message and exit
      --include_content     include content embeddings in plot (default: False)
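
Reducing Gram features to a chosen number of dimensions with PCA can be sketched using an SVD. This illustration assumes that the experiment reduces flattened Gram matrices before probing them. dimension_reduction_probes.py may use a different PCA implementation or preprocessing.

```python
import numpy as np


def pca_reduce(features, num_components):
    """Project rows of `features` onto their top principal components (via SVD)."""
    x = np.asarray(features, dtype=float)
    centered = x - x.mean(axis=0)
    # Rows of vt are the principal directions, sorted by decreasing singular value
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:num_components].T


rng = np.random.default_rng(0)
# Anisotropic toy data standing in for flattened 6x6 Gram matrices
grams_flat = rng.normal(size=(100, 36)) * np.linspace(5.0, 0.1, 36)
for dim in (2, 8, 16):
    reduced = pca_reduce(grams_flat, dim)
    print(dim, reduced.shape)
```

Each reduced feature set can then be passed to a probe, one run per target dimension.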

Coming soon.

    $ python train_classifier.py -h
    usage: train_classifier.py [-h] [--dataset_path DATASET_PATH]
                               [--device DEVICE] [--batch_size BATCH_SIZE]
                               [--seed SEED] [--epochs EPOCHS] [--lr LR]
                               [--optimizer {SGD,Adam}] [--use-timestamp]
                               [--times TIMES] [--suffix SUFFIX]
                               [--nurbs_model_type {cnn,wcnn}]
                               [--nurbs_emb_dim NURBS_EMB_DIM]
                               [--mask_mode {channel,multiply}]
                               [--area_as_channel]
                               [--input_channels {xyz_only,xyz_normals}]
                               [--brep_model_type BREP_MODEL_TYPE]
                               [--graph_emb_dim GRAPH_EMB_DIM]
                               [--classifier_type {linear,non_linear}]
                               [--final_dropout FINAL_DROPOUT]
                               [--size_percentage SIZE_PERCENTAGE]
                               [--apply_square_symmetry APPLY_SQUARE_SYMMETRY]
                               [--split_suffix SPLIT_SUFFIX]

    UV-Net Classifier Training Script for Solids

    optional arguments:
      -h, --help            show this help message and exit
      --dataset_path DATASET_PATH
                            Path to the dataset root directory
      --device DEVICE       which gpu device to use (default: 0)
      --batch_size BATCH_SIZE
                            batch size for training and validation (default: 128)
      --seed SEED           random seed (default: 0)
      --epochs EPOCHS       number of epochs to train (default: 350)
      --lr LR               learning rate (default: 0.01)
      --optimizer {SGD,Adam}
      --use-timestamp       Whether to use timestamp in dump files
      --times TIMES         Number of times to run the experiment
      --suffix SUFFIX       Suffix for the experiment name
      --nurbs_model_type {cnn,wcnn}
                            Feature extractor for NURBS surfaces
      --nurbs_emb_dim NURBS_EMB_DIM
                            Embedding dimension for NURBS feature extractor
                            (default: 64)
      --mask_mode {channel,multiply}
                            Whether to consider trimming mask as channel or
                            multiply it with computed features
      --area_as_channel     Whether to use area as a channel in the input
      --input_channels {xyz_only,xyz_normals}
      --brep_model_type BREP_MODEL_TYPE
                            Feature extractor for B-rep face-adj graph
      --graph_emb_dim GRAPH_EMB_DIM
                            Embeddings before graph pooling
      --classifier_type {linear,non_linear}
                            Classifier model
      --final_dropout FINAL_DROPOUT
                            final layer dropout (default: 0.3)
      --size_percentage SIZE_PERCENTAGE
                            Percentage of data to use
      --apply_square_symmetry APPLY_SQUARE_SYMMETRY
                            Probability of applying square symmetry transformation
                            to uv domain
      --split_suffix SPLIT_SUFFIX
                            Suffix for dataset split folders

    $ python train_test_recon_pc.py train
    usage: Pointcloud reconstruction experiments [-h]
                                                 [--dataset_path DATASET_PATH]
                                                 [--device DEVICE]
                                                 [--batch_size BATCH_SIZE]
                                                 [--epochs EPOCHS]
                                                 [--use-timestamp]
                                                 [--suffix SUFFIX]
                                                 [--encoder {pointnet,uvnetsolid}]
                                                 [--decoder {pointmlp}]
                                                 [--dataset {solidmnist,abc}]
                                                 [--num_points NUM_POINTS]
                                                 [--latent_dim LATENT_DIM]
                                                 [--use_tanh]
                                                 [--npy_dataset_path NPY_DATASET_PATH]
                                                 [--split_suffix SPLIT_SUFFIX]
                                                 [--uvnet_sqsym UVNET_SQSYM]
                                                 [--state STATE] [--no-cuda]
                                                 [--seed SEED]
                                                 [--grams_path GRAMS_PATH]
                                                 {train,test}

    positional arguments:
      {train,test}

    optional arguments:
      -h, --help            show this help message and exit

    train:
      --dataset_path DATASET_PATH
                            Path to the dataset root directory
      --device DEVICE       Which gpu device to use (default: 0)
      --batch_size BATCH_SIZE
                            batch size for training and validation (default: 128)
      --epochs EPOCHS       number of epochs to train (default: 350)
      --use-timestamp       Whether to use timestamp in dump files
      --suffix SUFFIX       Suffix for the experiment name
      --encoder {pointnet,uvnetsolid}
                            Encoder to use
      --decoder {pointmlp}  Pointcloud decoder to use
      --dataset {solidmnist,abc}
                            Dataset to train on
      --num_points NUM_POINTS
                            Number of points to decode
      --latent_dim LATENT_DIM
                            Dimension of latent space
      --use_tanh            Whether to use tanh in final layer of decoder
      --npy_dataset_path NPY_DATASET_PATH
                            Path to pointcloud dataset when encoder takes in
                            solids
      --split_suffix SPLIT_SUFFIX
                            Suffix for dataset split folders
      --uvnet_sqsym UVNET_SQSYM
                            Probability of applying square symmetry transformation
                            to uv domain
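
This README does not document the reconstruction objective. A common choice for point cloud reconstruction is the symmetric Chamfer distance, sketched below. Treat it as an assumption, not necessarily the loss used by train_test_recon_pc.py. The brute-force pairwise computation is fine for a few thousand points but needs O(N·M) memory.

```python
import numpy as np


def chamfer_distance(p, q):
    """Symmetric Chamfer distance (squared Euclidean) between clouds p (N, 3) and q (M, 3)."""
    p, q = np.asarray(p, dtype=float), np.asarray(q, dtype=float)
    d2 = np.sum((p[:, None, :] - q[None, :, :]) ** 2, axis=-1)  # (N, M) squared distances
    # Nearest neighbour in q for each point of p, and vice versa
    return float(d2.min(axis=1).mean() + d2.min(axis=0).mean())


rng = np.random.default_rng(0)
cloud = rng.uniform(-1.0, 1.0, size=(256, 3))
noisy = cloud + rng.normal(scale=0.01, size=cloud.shape)
print(chamfer_distance(cloud, noisy))
```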

To extract Gram matrices from a trained classifier, run test_classifier.py. The Gram matrices will be saved into the directory given by --grams_path:

    $ python test_classifier.py
    usage: test_classifier.py [-h] [--state STATE] [--no-cuda] [--subset]
                              [--apply_square_symmetry APPLY_SQUARE_SYMMETRY]

    UV-Net Classifier Testing Script for Solids

    optional arguments:
      -h, --help            show this help message and exit
      --state STATE         PyTorch checkpoint file of trained network.
      --no-cuda             Run on CPU
      --subset              Compute subset only (default: false)
      --grams_path GRAMS_PATH
                            path to save Gram matrices to
      --apply_square_symmetry APPLY_SQUARE_SYMMETRY
                            Probability of applying square symmetry transformation
                            to uv-domain

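To extract Gram matrices from a trained point cloud reconstruction model, run train_test_recon_pc.py test. The Gram matrices will be saved into the directory given by --grams_path: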

    $ python train_test_recon_pc.py test
    usage: Pointcloud reconstruction experiments [-h]
                                                 [--dataset_path DATASET_PATH]
                                                 [--device DEVICE]
                                                 [--batch_size BATCH_SIZE]
                                                 [--epochs EPOCHS]
                                                 [--use-timestamp]
                                                 [--suffix SUFFIX]
                                                 [--encoder {pointnet,uvnetsolid}]
                                                 [--decoder {pointmlp}]
                                                 [--dataset {solidmnist,abc}]
                                                 [--num_points NUM_POINTS]
                                                 [--latent_dim LATENT_DIM]
                                                 [--use_tanh]
                                                 [--npy_dataset_path NPY_DATASET_PATH]
                                                 [--split_suffix SPLIT_SUFFIX]
                                                 [--uvnet_sqsym UVNET_SQSYM]
                                                 [--state STATE] [--no-cuda]
                                                 [--seed SEED]
                                                 [--grams_path GRAMS_PATH]
                                                 {train,test}

    positional arguments:
      {train,test}

    optional arguments:
      -h, --help            show this help message and exit

    train:
      --dataset_path DATASET_PATH
                            Path to the dataset root directory
      --device DEVICE       Which gpu device to use (default: 0)
      --batch_size BATCH_SIZE
                            batch size for training and validation (default: 128)
      --epochs EPOCHS       number of epochs to train (default: 350)
      --use-timestamp       Whether to use timestamp in dump files
      --suffix SUFFIX       Suffix for the experiment name
      --encoder {pointnet,uvnetsolid}
                            Encoder to use
      --decoder {pointmlp}  Pointcloud decoder to use
      --dataset {solidmnist,abc}
                            Dataset to train on
      --num_points NUM_POINTS
                            Number of points to decode
      --latent_dim LATENT_DIM
                            Dimension of latent space
      --use_tanh            Whether to use tanh in final layer of decoder
      --npy_dataset_path NPY_DATASET_PATH
                            Path to pointcloud dataset when encoder takes in
                            solids
      --split_suffix SPLIT_SUFFIX
                            Suffix for dataset split folders
      --uvnet_sqsym UVNET_SQSYM
                            Probability of applying square symmetry transformation
                            to uv domain

    test:
      --state STATE         PyTorch checkpoint file of trained network.
      --no-cuda             Run on CPU
      --seed SEED           Seed
      --grams_path GRAMS_PATH
                            directory to save Gram matrices to (default:
                            data/ABC/uvnet_grams)

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.