Author: Victor Iwuoha

Date: 13th & 14th October 2023

Event: DataFest Africa Workshop

This Project is built using the astro cli provisioned by Astronomer To Run this project a linux environment is highly recommended.

- Airflow Core Concepts such as (Operators, taskflow API, dynamic task mapping, Data-Aware Scheduling, Variables & Connections )

- DBT UI Familiarization Up to Job Creation with basic concepts of Macros, Documentation, Snapshots for SCD's, and Exposures.

- Linux Environment/ github codespaces/Ubuntu distribution on Windows

- Docker Compose

- A DBT Cloud Account (With an API Key)

- A .env file at the root of this directory with environment variables exactly as those in .env.example but with actual values. (Do this edit In Codespaces / with a machine of 4 Cores, 8GB RAM & 32GB Storage)

- An accessible Postgres database with a valid connection URL. (Spin Up a free one on ElephantSql.com). In the Url, replace *postgres with postgresql

- Basic Understanding of Python & SQL

- Fork This Project to your git profile, create a branch named dev, then connect the repository to your dbt account.

- Give DBT adequate access to connect to this repository on your git provider (github/gitlab) -> see steps

- Create a dbt project with the name airflow_dbt_magic or any name of choice and point it to the dbt subdirectory of this repository.

- Create two DBT environment Variables as follows;

- Key: DBT_DATAFEST_23_DB Value: As used above within airflow .env

- Key: DBT_DATAFEST_23_SCHEMA, Value: dbt_DATAFEST_23_USER (where DATAFEST_23_USER has the same value as used in .env above). This can basically be aby schema or database.

- Create a Production environment and link it to the main branch, then create a simple DBT JOB in the Production Environment called AIRFLOW DBT JOB and add the commands (

dbt build&dbt snapshot) Also select the generate docs on run checkbox. Note the Job Id as well as the Account id as they would be needed in Airflow.

-

Configuration, Connections & Airflow Variables setup

- a. After adding the environment variables in Prerequisites above to your .env file, (optionally) rename the

airflow_settings.example.yamlfile asairflow_settings.yamland supply the adequate values, doing so would let astro automatically load these to your airflow instance (otherwise, follow step 2 below). - b. Run the start.sh script using the command

bash start.shThis should start your project, export all environment variables and create a data_lake/ dir. To restart your airflow container after any environment/config changes, simply run the commandastro dev restart.

- a. After adding the environment variables in Prerequisites above to your .env file, (optionally) rename the

-

Create 2 airflow Connections and one Airflow Variable by using the airflow UI via Admin>Variables

-

a. DBT Cloud connection with the following;

- Connection Id: dbt_cloud_default

- Account Id: YOUR_DBT_ACCOUNT_ID

- Api Token: YOUR_DBT_API_TOKEN

-

b. Postgres DB Connection as follows;

- Connection Id: postgres_default

- Host: rajje.db.elephantsql.com (same as supplied in .env) or any other hosting platform including localhost.

- Schema: As supplied during meetup or any other database on your host

- Login: User name for schema

- Password: Password of User to DB

- Port: 5432

-

c. DBT JOB ID Variable as follows;

- Key: datafest_meetup_job

- Value: YOUR_CREATED_DBT_JOB_ID

- Description: DATAFEST meetup Job ID

-

-

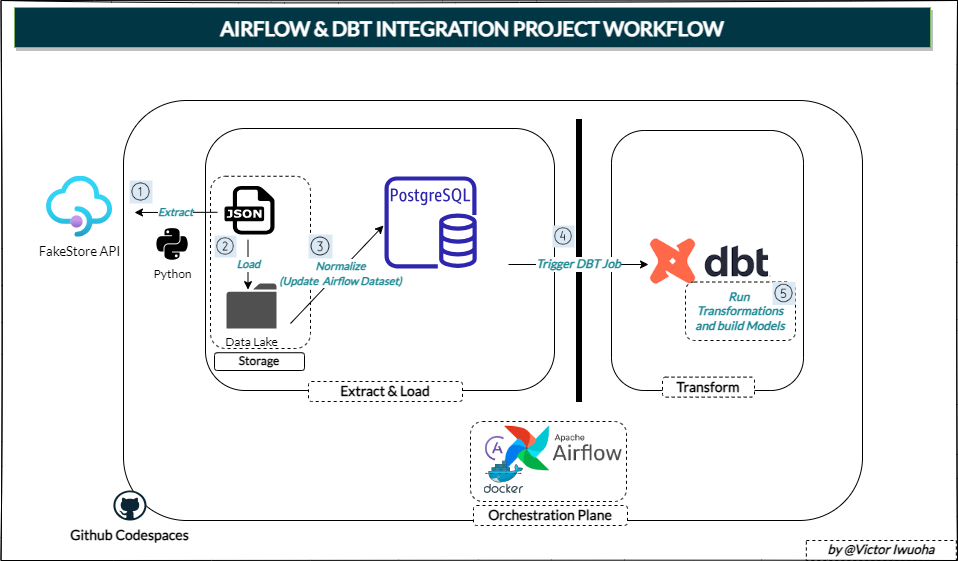

Turn on the two fakestore_ dags and Trigger the Dag Named fakestore_elt_pipeline. If this Runs SuccessFully , the fakestore_dbt_job_pipeline would automagically get triggered based on the dataset schedule. See more on Airflow Datasets.

-

Wait for the dbt dag to complete running and navigate to the dbt cloud UI to see that the dag was triggered via the API. For more notes on the operation of this dag, see DbtCloudOperator. In More complex Setups, there are packages that can be used with dbt core to convert your entire dbt project into airflow tasks for easier management. An example is Astronomer Cosmos.

The Structure of this project was adapted from the astronomer provided astro cli and created using astro dev init Docs are available at the following Links

The compilation of this project was inspired with ❤️ by the dbt-lagos-community 📦 .

===========================