Update: All my latest model files that can be used with chaiNNer can be downloaded from this google drive folder. Make sure to use a chaiNNer version newer than Alpha v0.19.5 for DAT and SRFormer support. If you cannot find such a version (at the time of writing this README entry it has not been released yet), use a nightly build newer than 2023-08-27 instead, since that is the date/version at which DAT and SRFormer were added to chaiNNer.

A repo for me to publish trained models.

Each folder should contain its own README.md file with information about the model; configs and examples may also be present.

Models can also be found on this openmodeldb page.

Some of these models can be run as a demo in the corresponding gradio space, but inference times will be long since it is using the free CPU and not a GPU.

These models can of course be run locally with an application called chaiNNer. I made a youtube video on how to set up and use chaiNNer.

Results for some of these can be compared on my interactive visual comparison website.

I also created a youtube video where I show how I am training these models.

Photos

4xNomos8kSCHAT-L - Photo upscaler, handles a bit of jpg compression and blur, HAT-L model (good results but very slow since huge model)

4xNomos8kSCHAT-S - Photo upscaler, handles a bit of jpg compression and blur, HAT-S model

4xNomos8kSCSRFormer - Photo upscaler, handles a bit of jpg compression and blur, SRFormer base model (also good results but also slow since big model)

4xNomos8kSC - Photo upscaler, handles a bit of jpg compression and blur, RRDBNet base model

4xLSDIR - Photo upscaler, no degradation handling, RRDBNet base model

4xLSDIRplus - Photo upscaler, handles a bit of jpg compression and blur, RRDBNet base model

4xLSDIRplusC - Photo upscaler, handles a bit of jpg compression, RRDBNet base model

4xLSDIRplusN - Photo upscaler, almost no degradation handling, RRDBNet base model

4xLSDIRplusR - Photo upscaler, handles degradation but too strong so loses details, RRDBNet base model

4xLSDIRCompact3 - Photo upscaler, handles a bit of jpg compression and blur, SRVGGNet model

4xLSDIRCompactC3 - Photo upscaler, handles a bit of jpg compression, SRVGGNet model

4xLSDIRCompactN3 - Photo upscaler, handles no degradations, SRVGGNet model

4xLSDIRCompactR3 - Photo upscaler, handles degradation but too strong so loses details, SRVGGNet model

4xLSDIRCompact2 - Photo upscaler, handles a bit of jpg compression and blur, SRVGGNet model

4xLSDIRCompactC2 - Photo upscaler, handles a bit of jpg compression, SRVGGNet model

4xLSDIRCompactR2 - Photo upscaler, handles degradation but too strong so loses details, SRVGGNet model

4xLSDIRCompact1 - Photo upscaler, handles a bit of jpg compression and blur, SRVGGNet model

4xLSDIRCompactC1 - Photo upscaler, handles a bit of jpg compression, SRVGGNet model

4xLSDIRCompactR1 - Photo upscaler, handles degradation but too strong so loses details, SRVGGNet model

2xParimgCompact - Photo upscaler that does some color shifting since based on ImagePairs, SRVGGNet model

Anime

2xHFA2kAVCOmniSR - Anime frame upscaler that handles AVC (h264) video compression, OmniSR model

2xHFA2kAVCOmniSR_Sharp - Anime frame upscaler that handles AVC (h264) video compression with sharper outputs, OmniSR model

2xHFA2kAVCSRFormer_light - Anime frame upscaler that handles AVC (h264) video compression, SRFormer lightweight model

2xHFA2kAVCEDSR_M - Anime frame upscaler that handles AVC (h264) video compression, EDSR-M model

2xHFA2kAVCCompact - Anime frame upscaler that handles AVC (h264) video compression, SRVGGNet model

4xHFA2kLUDVAESwinIR_light - Anime image upscaler that handles various realistic degradations, SwinIR light model

4xHFA2kLUDVAEGRL_small - Anime image upscaler that handles various realistic degradations, GRL small model

4xHFA2kLUDVAESRFormer_light - Anime image upscaler that handles various realistic degradations, SRFormer light model

4xHFA2k - Anime image upscaler that handles some jpg compression and blur, RRDBNet base model

2xHFA2kCompact - Anime image upscaler that handles some jpg compression and blur, SRVGGNet model

4xHFA2kLUDVAESAFMN - dropped model since there were artifacts on the outputs when training with SAFMN arch

AI generated

4xLexicaHAT - An AI generated image upscaler, does not handle any degradations, HAT base model

2xLexicaSwinIR - An AI generated image upscaler, does not handle any degradations, SwinIR base model

2xLexicaRRDBNet - An AI generated image upscaler, does not handle any degradations, RRDBNet base model

2xLexicaRRDBNet_Sharp - An AI generated image upscaler with sharper outputs, does not handle any degradations, RRDBNet base model

07.07.23

2xHFA2kAVCSRFormer_light

A SRFormer light model for upscaling anime videos downloaded from the web, handling AVC (h264) compression.

06.07.23

2xLexicaSwinIR, 4xLexicaHAT, 4xLSDIR, 4xLSDIRplus, 4xLSDIRplusC, 4xLSDIRplusN, 4xLSDIRplusR

I uploaded my models to openmodeldb and therefore 'release' these models. The Lexica models handle no degradations and are for upscaling AI generated outputs further. The LSDIRplus models are the official ESRGAN plus model further finetuned with LSDIR. The 4xLSDIRplus is an interpolation of C and R and handles compression and a bit of noise/blur. The 4xLSDIRplusN handles no degradation, the 4xLSDIRplusC handles compression, and the 4xLSDIRplusR used the official Real-ESRGAN configs but is only for more extreme cases since it destroys details. The 4xLSDIRplusC model should be sufficient for most cases.

30.06.23

4xNomos8kSCHAT-L & 4xNomos8kSCHAT-S

My twelfth release, this time a HAT large and small model (they uploaded HAT-S codes and models two months ago) - a 4x realistic photo upscaling model handling JPG compression, trained on the HAT network (small and large model) with musl's Nomos8k_sfw dataset together with OTF (on the fly degradation) jpg compression and blur.

26.06.23

4xNomos8kSCSRFormer

My eleventh release, a 4x realistic photo upscaling model handling JPG compression, trained on the SRFormer network (base model) with musl's Nomos8k_sfw dataset together with OTF (on the fly degradation) jpg compression and blur.

18.06.23

2xHFA2kAVCOmniSR & 2xHFA2kAVCOmniSR_Sharp

My tenth release, a 2x anime upscaling model that handles AVC (h264) compression, trained on the OmniSR network (the second released community model to use this network, whose paper was released less than two months ago, on 24.04.23).

18.06.23

2xHFA2kAVCEDSR_M

My ninth release, a fast 2x anime upscaling model that handles AVC (h264) compression trained on the EDSR network (M model).

18.06.23

2xHFA2kAVCCompact

Also in my ninth release, a compact 2x anime upscaling model that handles AVC (h264) compression trained on the SRVGGNet, also called Real-ESRGAN Compact network.

14.06.23

4xHFA2kLUDVAEGRL_small

My eighth release - 4x anime upscaling model handling real degradation, trained on the GRL network (small model) with musl's HFA2kLUDVAE dataset.

10.06.23

4xHFA2kLUDVAESwinIR_light

My seventh release - 4x anime upscaling model handling real degradation, trained on the SwinIR network (small model) with musl's HFA2kLUDVAE dataset.

10.06.23

4xHFA2kLUDVAESRFormer_light

Also in my seventh release - 4x anime upscaling model handling real degradation, trained on the SRFormer network (light model) with musl's HFA2kLUDVAE dataset.

01.06.23

2xLexicaRRDBNet & 2xLexicaRRDBNet_Sharp

My sixth release - a 2x upscaler for AI generated images (no degradations), trained on the ESRGAN (RRDBNet) network (base model) with around 34k images from lexica.art.

10.05.23

4xNomos8kSC

My fifth release - a 4x realistic photo upscaling model handling JPG compression, trained on the ESRGAN (RRDBNet) network (base model) with musl's Nomos8k_sfw dataset together with OTF (on the fly degradation) jpg compression and blur.

07.05.23

4xHFA2k

My fourth release - a 4x anime image upscaling model, trained on the ESRGAN (RRDBNet) network (base model) with musl's HFA2k dataset together with OTF (on the fly degradation) jpg compression and blur.

05.05.23

2xParimgCompact

My third release - a 2x compact photo upscaling model trained on the SRVGGNet, also called Real-ESRGAN Compact network, with Microsoft's ImagePairs (11,421 images, 111 GB). It was one of the very first models I started training, and I have finished it now.

18.04.23

2xHFA2kCompact

My second release - a 2x anime compact upscaling model trained on the SRVGGNet, also called Real-ESRGAN Compact network with musl's HFA2k dataset together with OTF (on the fly degradation) jpg compression and blur.

11.03.23

4xLSDIRCompact

My very first release - a 4x compact model for photo upscaling trained on the SRVGGNet, also called Real-ESRGAN Compact network. There are up to 3 versions: version 3 contains N, C and R models, and version 2 is a general model interpolated from C and R. Trained on the huge LSDIR dataset (84991 images *2 for paired training, around 160 GB). The suggested model is 4xLSDIRCompactC3.

The following models can be found in the "Interpolated" folder. Each is an interpolation of the two models listed beneath its name:

4xInt-Ultracri

UltraSharp + Remacri

4xInt-Superscri

NMKD Superscale + Remacri

4xInt-Siacri

NMKD Siax ("CX") + Remacri

4xInt-RemDF2K

Remacri + RealSR_DF2K_JPEG

4xInt-RemAnime

Remacri + AnimeSharp

4xInt-RemacRestore

Remacri + UltraMix_Restore

4xInt-AnimeArt

AnimeSharp + VolArt

2xInt-LD-AnimeJaNai

LD-Anime + AnimeJaNai
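This README does not state how the interpolations were produced or which weighting was used. A minimal sketch, assuming a plain linear blend of the two models' weights (the approach used for network interpolation in ESRGAN) and a hypothetical weighting `alpha` of 0.5, could look like this. The function name and the toy values are illustrative only.

```python
from typing import Any, Dict


def interpolate_state_dicts(
    state_a: Dict[str, Any], state_b: Dict[str, Any], alpha: float = 0.5
) -> Dict[str, Any]:
    """Blend two checkpoints key by key: alpha * a + (1 - alpha) * b.

    Works on any values that support scalar multiplication and addition
    (plain floats here, tensors in a real checkpoint).
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    # Interpolation only makes sense for two models of the same architecture.
    if state_a.keys() != state_b.keys():
        raise ValueError("both models must have identical parameter names")
    return {
        key: alpha * state_a[key] + (1.0 - alpha) * state_b[key]
        for key in state_a
    }


# Toy stand-ins for two checkpoints (hypothetical parameter names and values).
model_c = {"conv.weight": 1.0, "conv.bias": 0.0}
model_r = {"conv.weight": 3.0, "conv.bias": 2.0}

blended = interpolate_state_dicts(model_c, model_r, alpha=0.5)
print(blended)  # {'conv.weight': 2.0, 'conv.bias': 1.0}
```

With real model files, the two dictionaries would be the loaded state dicts of two models that share the same architecture and scale; an `alpha` closer to 1 keeps more of the first model's behavior.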

Lexica

Training different models for AI generated image upscaling without degradations. (RRDBNet, HAT, SwinIR)

HFA2kLUDVAE

Training lightweight models of different networks to test inference speed and metrics for anime upscaling with realistic degradations. See results in the corresponding results folder.

LSDIR

A series trained on the big LSDIR dataset. The general models are mostly interpolated results. Then there is N for no degradation, C for compression (should be sufficient for most cases) and R for noise, blur and compression (only use in more extreme cases).

LSDIRplus

An RRDBNet experiment to see what influence a huge dataset has on the official x4plus model for photo upscaling.

HFA2kAVC

Model series handling the AVC (h264) compression usually found in videos from the web, so basically for upscaling videos downloaded from the web.

SAFMN

More specifically the 4xHFA2kLUDVAESAFMN model. This network had a tendency to generate artifacts on certain outputs, so I dropped the whole network from future models.

1xUnstroyer Series - Deleted

Was a series of SRVGGNet models that I started training to remove various degradations simultaneously (compression: MPEG, MPEG-2, H264, HEVC, webp, jpg; noise, blur etc.), but the results were not to my liking since there were too many different degradations for such a small network. I dropped the project and worked on others.

CompactPretrains

These pretrains have been provided by Zarxrax and they are great to use to kick off training a compact model.

Some of these model folders contain examples, meaning inputs and outputs to visually see the effects of such a model. Series like LUDVAE or AVC have their own results folder, which encompasses the outputs of multiple models trained on the same dataset with similar settings, most of the time models of different networks, so that these outputs, coming from the same input, can be compared with each other.

LUDVAE Model Series comparison images (Input, SwinIR small, SRFormer lightweight, GRL small). Specific model in bottom caption:

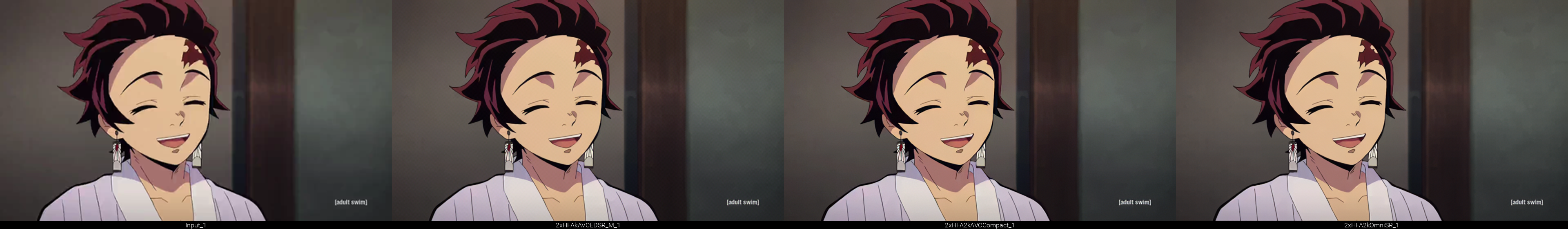

AVC Model Series comparison images (Input, EDSR, SRVGGNet, OmniSR). Specific model in bottom caption:

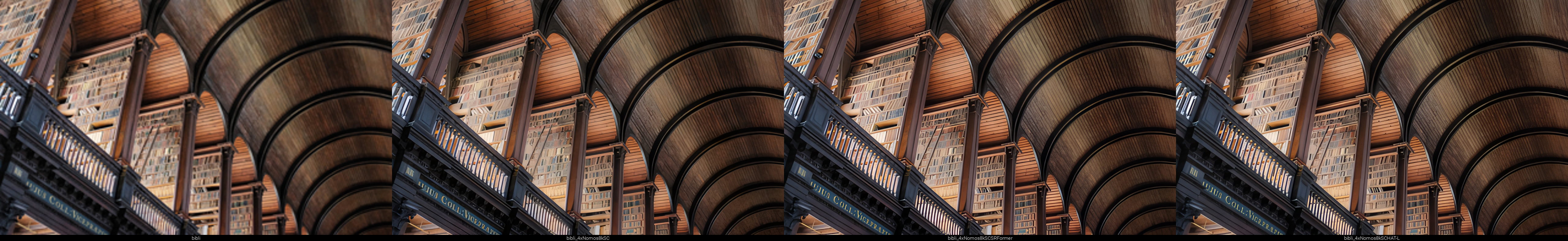

Nomos8kSC Series comparison images (Input, ESRGAN (RRDBNet), SRFormer base, HAT large):

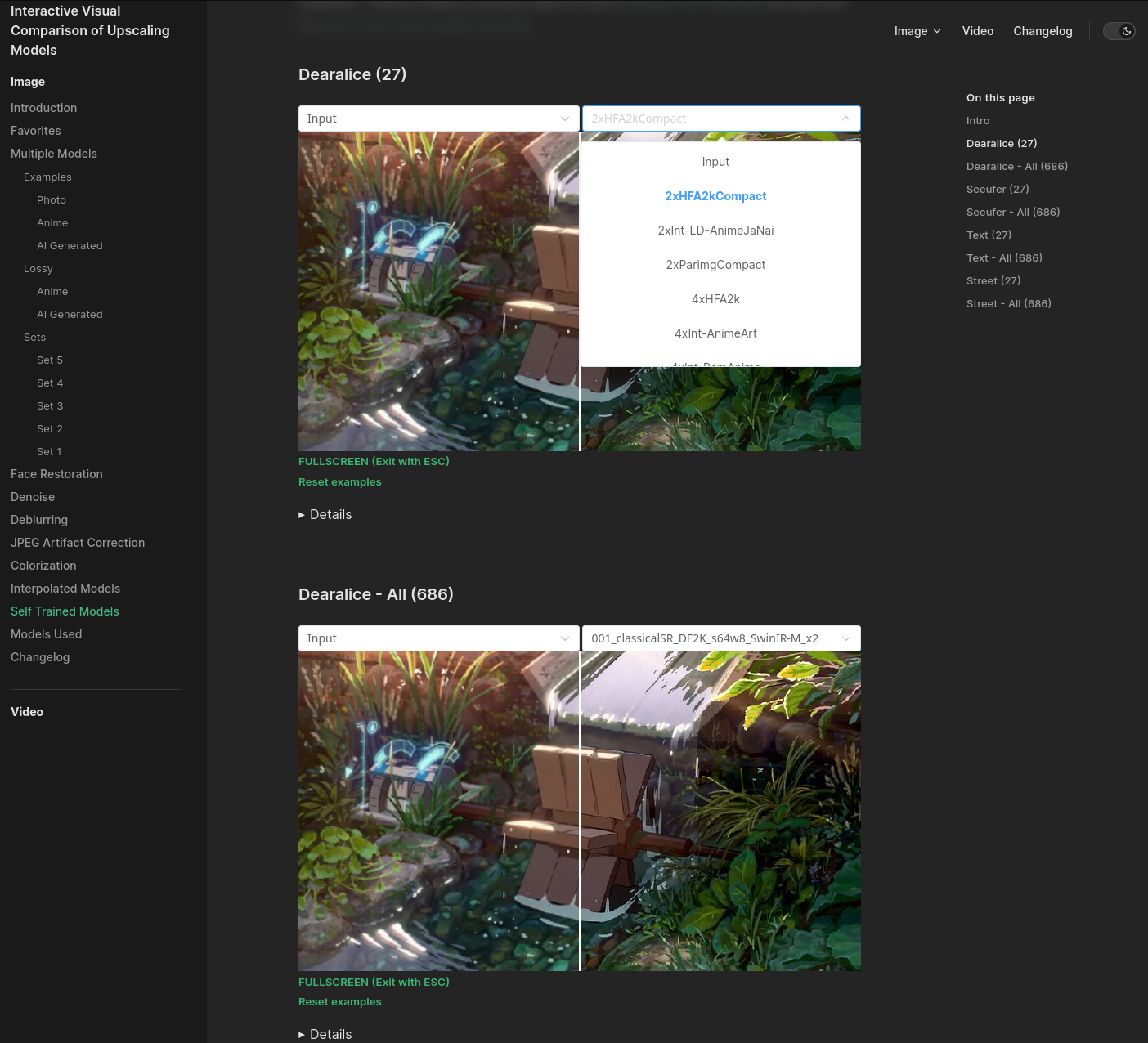

I also made the Selftrained page, where you can use a slider to compare the different outputs of my models, as in the example below, together with over 600 other models, so my self-trained model outputs can be compared with a lot of other official paper models or community trained models.

Website Selftrained Page screenshot, click corresponding link above to get to the actual interactive version:

And then I started doing quick tests like downloading an anime opening in 360p from youtube with one of those yt2mp4 converters online (so the input has the compression artifacts for this usecase) and using my models on extracted frames.
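The README does not say which tool was used to extract the frames. As one possible approach, assuming ffmpeg is installed, the sketch below builds a command that writes every frame of a video as a numbered PNG. The file name `opening_360p.mp4`, the `frames` folder and the helper name are hypothetical.

```python
from pathlib import Path
from typing import List


def build_frame_extraction_command(video_path: str, output_dir: str) -> List[str]:
    """Build an ffmpeg command that dumps each frame as frame_00001.png, ..."""
    # %05d is ffmpeg's pattern for a zero-padded, five-digit frame counter.
    pattern = str(Path(output_dir) / "frame_%05d.png")
    return ["ffmpeg", "-i", video_path, pattern]


cmd = build_frame_extraction_command("opening_360p.mp4", "frames")
print(" ".join(cmd))

# To actually run it (requires ffmpeg on PATH and an existing output folder):
#   import subprocess
#   Path("frames").mkdir(exist_ok=True)
#   subprocess.run(cmd, check=True)
```

The extracted PNG files can then be fed to an upscaling model, for example through an image folder workflow in chaiNNer.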

For this anime opening I often liked my 2xHFA2kCompact model, which is very fast for inference and gave good results, as can be seen on these example frames:

Frame 933: https://imgsli.com/MTg2OTc5/0/4

Frame 475: https://imgsli.com/MTg2OTc3/0/4

Frame 1375: https://imgsli.com/MTg2OTk0/0/4

But 2xHFA2kCompact deblurs scenes that have an intentional blur/bokeh effect (see the railing in the background). There I personally liked the AVC series better, so 2xHFA2kAVCOmniSR (or 2xHFA2kAVCCompact), since it kept that effect and stayed more truthful to the input in that sense:

Frame 1069 https://imgsli.com/MTg2OTg3/0/2

Frame 2241 https://imgsli.com/MTg2OTgy/0/1

Frame 933 screenshot as a single example of the above imgsli comparison links, click corresponding links above to get to the actual interactive versions: