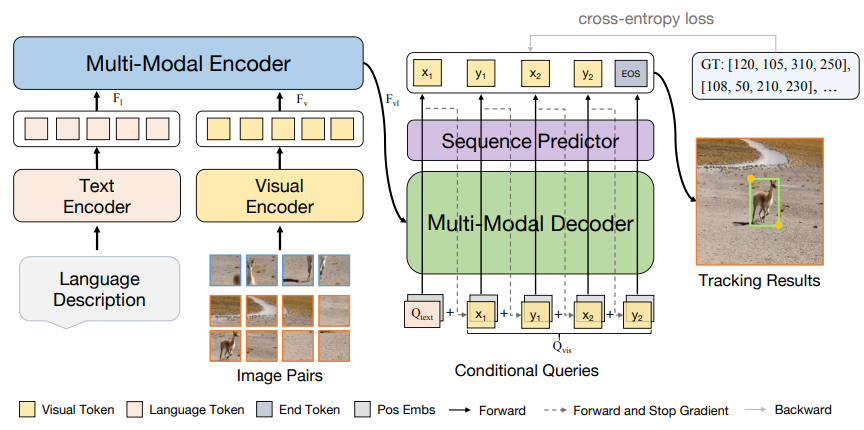

The official implementation for the TCSVT 2023 paper [Towards Unified Token Learning for Vision-Language Tracking].

[Models] [Raw Results]

| Tracker | TNL2K (AUC) | LaSOT (AUC) | LaSOT-ext (AUC) | OTB99-Lang (AUC) |
|---|---|---|---|---|
| VLT_{TT} | 54.7 | 67.3 | 48.4 | 74.0 |
| JointNLT | 56.9 | 60.4 | - | 65.3 |
| MMTrack | 58.6 | 70.0 | 49.4 | 70.5 |

conda create -n mmtrack python=3.8

conda activate mmtrack

bash install.sh

Run the following command to set the paths for this project:

python tracking/create_default_local_file.py --workspace_dir . --data_dir ./data --save_dir ./output

After running this command, you can also modify the paths by editing these two files:

lib/train/admin/local.py # paths about training

lib/test/evaluation/local.py # paths about testing

- Download the preprocessed JSON file of the RefCOCO dataset. If the first link fails, you can download it here.
- Download the refcoco-train2014 dataset from Joseph Redmon's mscoco mirror.
- Download the OTB_Lang dataset from Link.

Put the tracking datasets in ./data. It should look like:

    ${PROJECT_ROOT}
     -- data
         -- lasot
             |-- airplane
             |-- basketball
             |-- bear
             ...
         -- tnl2k
             |-- test
             |-- train
         -- refcoco
             |-- images
             |-- refcoco
             |-- refcoco+
             |-- refcocog
         -- otb_lang
             |-- OTB_query_test
             |-- OTB_query_train
             |-- OTB_videos
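Before training, it can help to confirm that `./data` matches this layout. The following is a minimal Python sketch (a hypothetical helper, not part of the MMTrack repository) that reports missing directories. The `EXPECTED_LAYOUT` mapping is copied from the tree above and is an assumption about what your runs need, so trim it to the benchmarks you actually use.

```python
from pathlib import Path

# Hypothetical checklist copied from the directory tree above.
# Adjust it to the benchmarks you actually train or test on.
EXPECTED_LAYOUT = {
    "lasot": [],  # one folder per category; categories are not checked individually
    "tnl2k": ["test", "train"],
    "refcoco": ["images", "refcoco", "refcoco+", "refcocog"],
    "otb_lang": ["OTB_query_test", "OTB_query_train", "OTB_videos"],
}


def missing_dirs(data_dir):
    """Return expected directories (relative, '/'-separated) missing under data_dir."""
    root = Path(data_dir)
    missing = []
    for dataset, subdirs in EXPECTED_LAYOUT.items():
        base = root / dataset
        if not base.is_dir():
            # The whole dataset folder is absent; skip its sub-folders.
            missing.append(dataset)
            continue
        for sub in subdirs:
            if not (base / sub).is_dir():
                missing.append(f"{dataset}/{sub}")
    return missing


if __name__ == "__main__":
    problems = missing_dirs("./data")
    if problems:
        print("Missing:", ", ".join(problems))
    else:
        print("Data layout looks complete.")
```

Run it from `$PROJECT_ROOT$`; an empty result only means the folders exist, not that their contents are complete.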

Download the pretrained OSTrack and RoBERTa-base models, and put them under $PROJECT_ROOT$/pretrained_networks.

python tracking/train.py \
--script mmtrack --config baseline --save_dir ./output \
--mode multiple --nproc_per_node 2 --use_wandb 0

Set --config to the desired model config under experiments/mmtrack.

If you want to use wandb to record detailed training logs, you can set --use_wandb 1.
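The training command can also be assembled programmatically, for example to loop over several configs. This is a hypothetical Python helper, not part of the repository; it assumes the configs are stored as `.yaml` files under `experiments/mmtrack` (the file format is not stated in this README) and that it is run from `$PROJECT_ROOT$`.

```python
import shlex
from pathlib import Path


def available_configs(exp_dir="experiments/mmtrack"):
    """List config names (file stems) under exp_dir.

    Assumption: configs are .yaml files; change the glob pattern if not.
    """
    return sorted(p.stem for p in Path(exp_dir).glob("*.yaml"))


def train_command(config="baseline", nproc=2, use_wandb=False):
    """Build the multi-GPU training command shown above as an argv list."""
    return [
        "python", "tracking/train.py",
        "--script", "mmtrack",
        "--config", config,
        "--save_dir", "./output",
        "--mode", "multiple",
        "--nproc_per_node", str(nproc),
        "--use_wandb", "1" if use_wandb else "0",
    ]


if __name__ == "__main__":
    # Print one copy-pasteable command per config found.
    for name in available_configs():
        print(shlex.join(train_command(name)))
```

Returning an argv list rather than a string lets you pass it straight to `subprocess.run`, while `shlex.join` (Python 3.8+) gives a shell-safe string for printing.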

Download the model weights from Google Drive.

Put the downloaded weights in $PROJECT_ROOT$/output/checkpoints/train/mmtrack/baseline.

Set the corresponding values in lib/test/evaluation/local.py to the paths where the benchmarks are actually stored.

Some testing examples:

- LaSOT_lang or other offline-evaluated benchmarks (modify --dataset_name accordingly)

python tracking/test.py --tracker_name mmtrack --tracker_param baseline --dataset_name lasot_lang --threads 8 --num_gpus 2

python tracking/analysis_results.py # need to modify tracker configs and names

- lasot_extension_subset_lang

python tracking/test.py --tracker_name mmtrack --tracker_param baseline --dataset_name lasot_extension_subset_lang --threads 8 --num_gpus 2

- TNL2K_Lang

python tracking/test.py --tracker_name mmtrack --tracker_param baseline --dataset_name tnl2k_lang --threads 8 --num_gpus 2

- OTB_Lang

python tracking/test.py --tracker_name mmtrack --tracker_param baseline --dataset_name otb_lang --threads 8 --num_gpus 2
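The four test commands above differ only in --dataset_name. A small Python wrapper (hypothetical, not part of the repository) can run them back to back; it assumes it is launched from `$PROJECT_ROOT$` and reuses exactly the flags shown above. It defaults to a dry run that only prints the commands.

```python
import subprocess

# Dataset names taken from the test commands above.
BENCHMARKS = ["lasot_lang", "lasot_extension_subset_lang", "tnl2k_lang", "otb_lang"]


def eval_command(dataset, param="baseline", threads=8, num_gpus=2):
    """Build the tracking/test.py command for one benchmark as an argv list."""
    return [
        "python", "tracking/test.py",
        "--tracker_name", "mmtrack",
        "--tracker_param", param,
        "--dataset_name", dataset,
        "--threads", str(threads),
        "--num_gpus", str(num_gpus),
    ]


def run_all(dry_run=True):
    """Print (dry run) or execute the test command for every benchmark in turn."""
    for dataset in BENCHMARKS:
        cmd = eval_command(dataset)
        if dry_run:
            print(" ".join(cmd))
        else:
            # check=True raises CalledProcessError at the first failing benchmark.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run_all(dry_run=True)
```

Call `run_all(dry_run=False)` to actually launch the evaluations; results still need to be analyzed separately with tracking/analysis_results.py.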

- Thanks to the OSTrack, Stable-Pix2Seq, and SeqTR libraries, which helped us quickly implement our ideas.

If our work is useful for your research, please consider citing:

@ARTICLE{Zheng2023mmtrack,
  author={Zheng, Yaozong and Zhong, Bineng and Liang, Qihua and Li, Guorong and Ji, Rongrong and Li, Xianxian},
  journal={IEEE Transactions on Circuits and Systems for Video Technology},
  title={Towards Unified Token Learning for Vision-Language Tracking},
  year={2023},
}