In this repository, we use the scenario of product sales forecasting to demonstrate the recommended approach for batch scoring with R models on Azure. This architecture can be generalized to any scenario that involves batch scoring with R models.

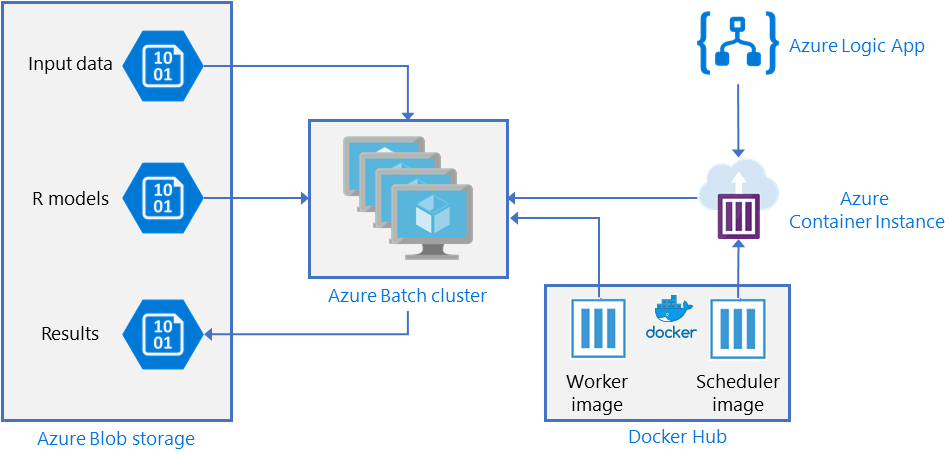

The above architecture works as follows:

- Model scoring is parallelized across a cluster of virtual machines running on Azure Batch.

- Each Batch job reads input data from a Blob container, makes a prediction using pre-trained R models, and writes the results back to the Blob container.

- Batch jobs are triggered by a scheduler script using the doAzureParallel R package. The script runs on an Azure Container Instance (ACI).

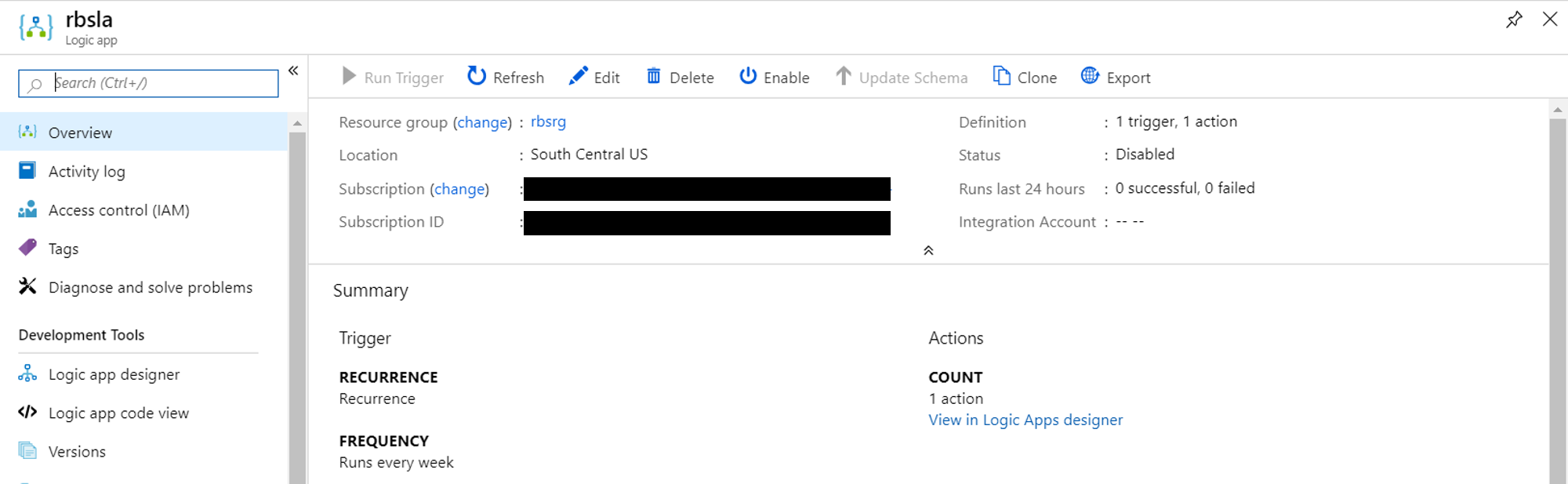

- The ACI is run on a schedule managed by a Logic App.

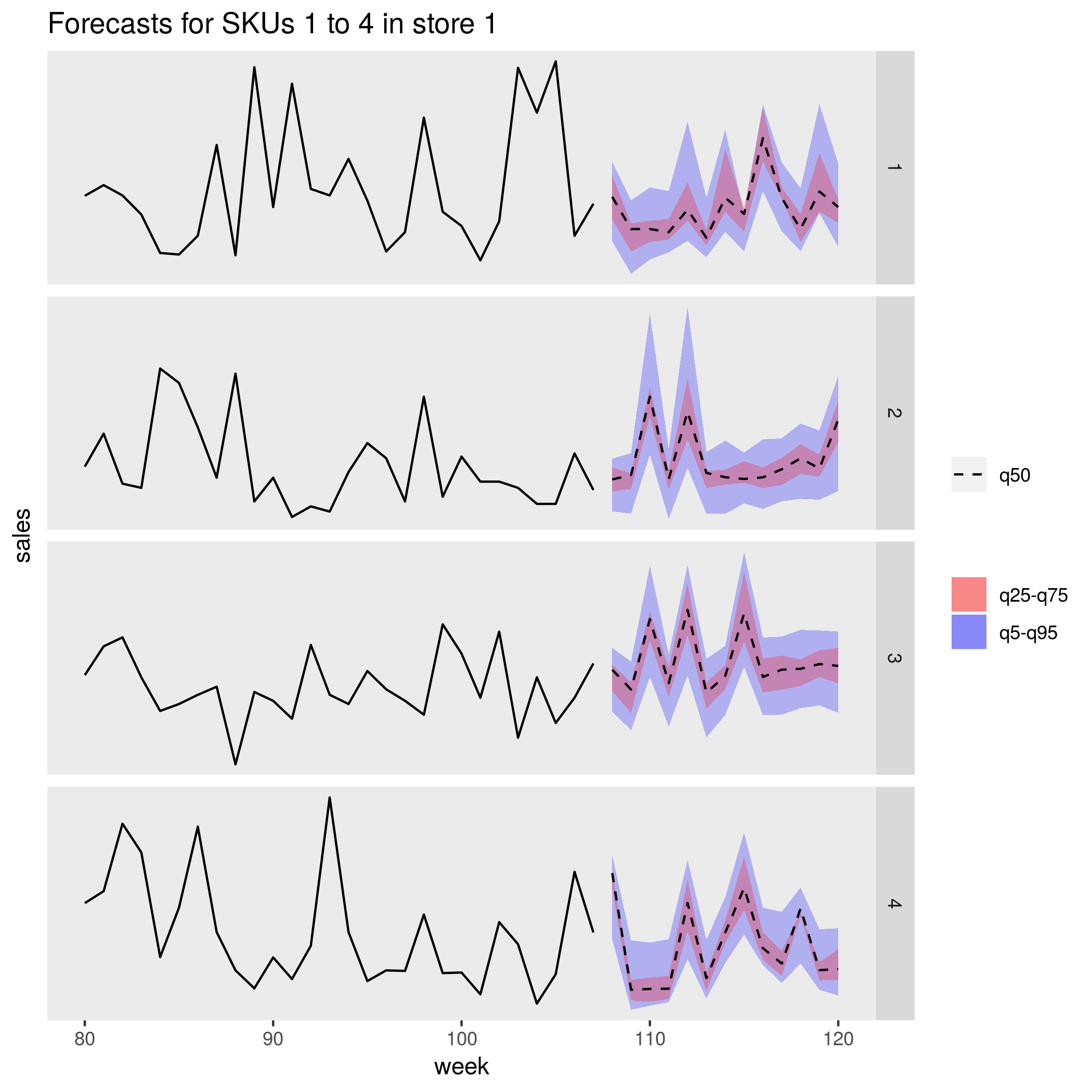
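To make the data flow concrete, here is a minimal local sketch of the same pattern in base R. It is not the repository's code and does not use Azure or doAzureParallel. A temporary directory stands in for the Blob container, forked workers from the base `parallel` package stand in for the Batch pool (forking works on Linux/macOS only), and the input files, the `score_chunk()` helper and the linear model are all hypothetical.

```r
# Local sketch of the scoring pattern (not the repository's code).
# Assumptions: a temporary directory stands in for the Blob container,
# forked workers from the base 'parallel' package stand in for the Batch
# pool (Linux/macOS only), and the data and model are made up.
library(parallel)

blob_dir <- file.path(tempdir(), "blob")
in_dir  <- file.path(blob_dir, "input")
out_dir <- file.path(blob_dir, "output")
dir.create(in_dir, recursive = TRUE, showWarnings = FALSE)
dir.create(out_dir, recursive = TRUE, showWarnings = FALSE)

# Hypothetical input: one CSV file per chunk of product/store rows
set.seed(42)
for (i in 1:4) {
  chunk <- data.frame(product = sample(1000, 10), store = sample(83, 10),
                      price = runif(10, 1, 5))
  write.csv(chunk, file.path(in_dir, sprintf("chunk_%02d.csv", i)),
            row.names = FALSE)
}

# Hypothetical "pre-trained" model: a linear model fitted to made-up data
train <- data.frame(price = runif(100, 1, 5))
train$sales <- 100 - 10 * train$price + rnorm(100)
model <- lm(sales ~ price, data = train)

# One task: read an input file, score it, write the result to the output dir
score_chunk <- function(path, model, out_dir) {
  dat <- read.csv(path)
  dat$forecast <- predict(model, newdata = dat)
  out_path <- file.path(out_dir, basename(path))
  write.csv(dat, out_path, row.names = FALSE)
  out_path
}

# Run the tasks in parallel, one input file per task
inputs  <- list.files(in_dir, full.names = TRUE)
outputs <- mclapply(inputs, score_chunk, model = model, out_dir = out_dir,
                    mc.cores = 2)
```

The tasks share nothing except the storage location, which is what allows the same work to be spread across many machines instead of two local processes.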

This example uses the scenario of a large food retail company that needs to forecast sales for thousands of products across multiple stores. A large grocery store can carry tens of thousands of products, and generating forecasts for that many product/store combinations is computationally intensive. In this example, we generate forecasts for 1,000 products across 83 stores, resulting in 5.4 million scoring operations, and the deployed architecture scales to handle this workload. See here for more details of the forecasting scenario.
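The figures above imply the following scale of work. The snippet only restates the arithmetic; how the roughly 65 scoring operations per product/store pair break down is not stated here, so it is left as a ratio.

```r
# Size of the scoring problem described above
n_products <- 1000
n_stores   <- 83

# Every product/store pair is one series to forecast
combos <- expand.grid(product = seq_len(n_products), store = seq_len(n_stores))
nrow(combos)  # 83000

# Average number of scoring operations per product/store pair
5.4e6 / nrow(combos)  # roughly 65
```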

This repository has been tested on an Ubuntu Data Science Virtual Machine, which comes with many of the local/working machine dependencies pre-installed.

Local/Working Machine:

- Ubuntu >= 16.04 LTS (not tested on Mac or Windows)

- R >= 3.4.3

- Docker >= 1.0 (check the current version with `docker version`)

- Azure CLI >= 2.0 (check the current version with `az --version`)
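A quick way to check these prerequisites from an R session is sketched below. The `check_prereqs()` helper is hypothetical and not part of the repository; it only checks that R is recent enough and that the `docker` and `az` executables are on the PATH, not their versions or the operating system.

```r
# Hypothetical helper: TRUE/FALSE for each local prerequisite
check_prereqs <- function() {
  c(
    R      = getRversion() >= "3.4.3",            # minimum R version
    docker = nzchar(Sys.which("docker")[[1]]),    # docker found on PATH?
    az     = nzchar(Sys.which("az")[[1]])         # Azure CLI found on PATH?
  )
}

check_prereqs()
```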

R packages (install by running `Rscript R/install_dependencies.R`):

- gbm >= 2.1.4.9000

- rAzureBatch >= 0.6.2

- doAzureParallel >= 0.7.2

- bayesm >= 3.1-1

- ggplot2 >= 3.1.0

- tidyr >= 0.8.2

- dplyr >= 0.7.8

- jsonlite >= 1.5

- devtools >= 1.13.4

- dotenv >= 1.0.2

- AzureContainers >= 1.0.1

- AzureGraph >= 1.0.0

- AzureStor >= 2.0.1

- AzureRMR >= 2.1.1.9000
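After installing, the minimum versions above can be checked with a short script like the one below. This is a sketch: `check_packages()` is a hypothetical helper, not something shipped with the repository, and it relies only on `utils::packageVersion()` and `numeric_version()` from base R.

```r
# Minimum versions copied from the list above
required <- c(
  gbm = "2.1.4.9000", rAzureBatch = "0.6.2", doAzureParallel = "0.7.2",
  bayesm = "3.1-1", ggplot2 = "3.1.0", tidyr = "0.8.2", dplyr = "0.7.8",
  jsonlite = "1.5", devtools = "1.13.4", dotenv = "1.0.2",
  AzureContainers = "1.0.1", AzureGraph = "1.0.0", AzureStor = "2.0.1",
  AzureRMR = "2.1.1.9000"
)

# Hypothetical helper: one row per package with the installed version
# (NA if not installed) and whether it meets the minimum
check_packages <- function(required) {
  installed <- vapply(names(required), function(pkg) {
    tryCatch(as.character(utils::packageVersion(pkg)),
             error = function(e) NA_character_)   # package not installed
  }, character(1))
  ok <- !is.na(installed) &
    numeric_version(ifelse(is.na(installed), "0", installed)) >=
      numeric_version(required)
  data.frame(package = names(required), required = unname(required),
             installed = unname(installed), ok = unname(ok),
             stringsAsFactors = FALSE)
}

check_packages(required)
```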

You will also need an Azure subscription.

While it is not required, Azure Storage Explorer is useful to inspect your storage account.

Run the following in your local terminal:

- Clone the repo: `git clone <repo-name>`

- `cd` into the repo

- Install the R dependencies: `Rscript R/install_dependencies.R`

Start by filling out the details of your deployment in `00_resource_specs.R`. Then run through the following R scripts in order. The scripts are intended to be stepped through line by line (with Ctrl + Enter if using RStudio). Before executing them, set the working directory of your R session with `setwd("~/RBatchScoring")`. We recommend restarting your R session and clearing the R environment before running each script.

- 01_generate_forecasts_locally.R

- 02_deploy_azure_resources.R

- 03_forecast_on_batch.R

- 04_run_from_docker.R

- 05_deploy_logic_app.R

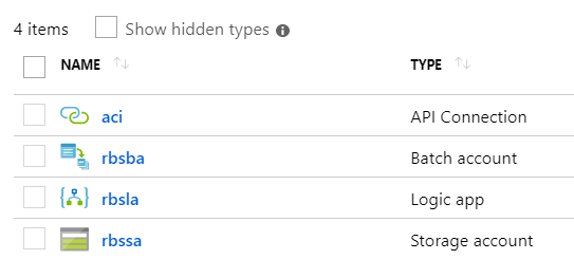

After running these scripts, navigate to your resource group in the Azure portal to see the following deployed resources:

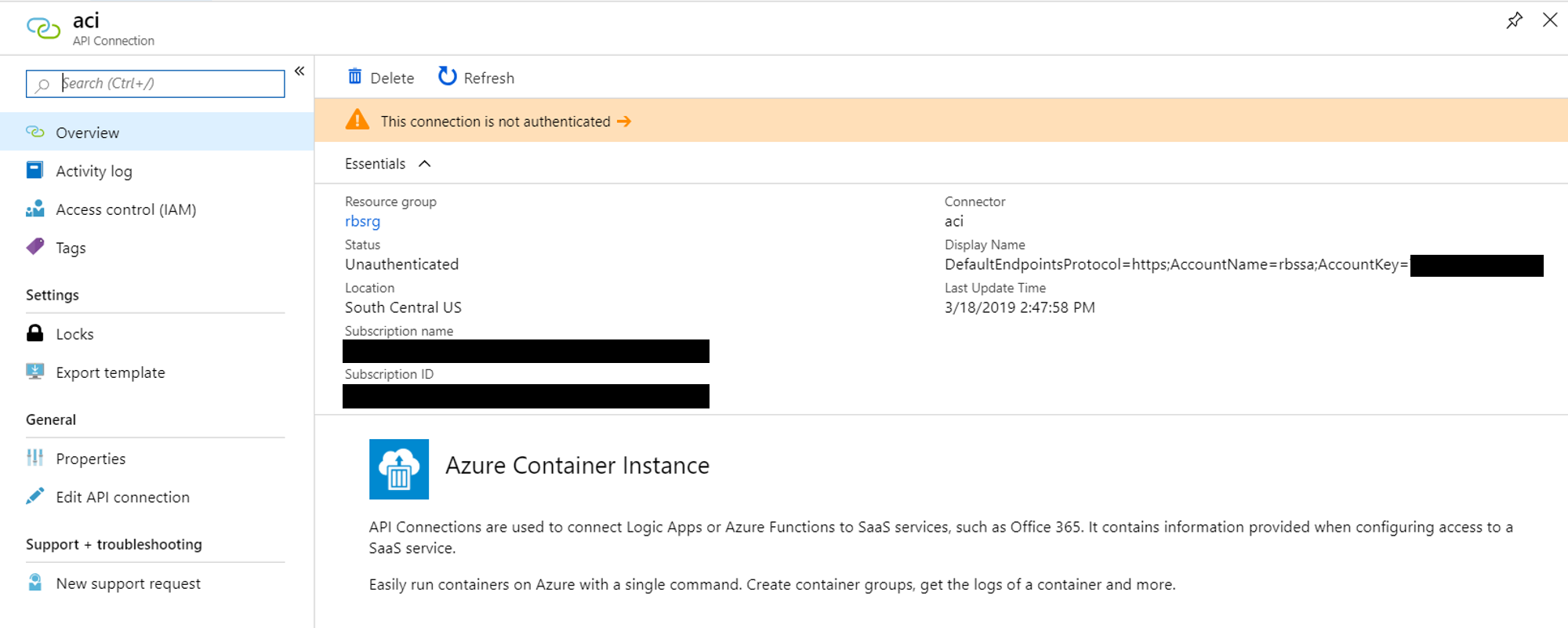

To complete the deployment, you will need to authenticate to allow the Logic App to create an ACI. Click on the ACI connector in the Logic App to authenticate.

Finally, you need to enable the Logic App. Go to the Logic App's pane in the portal and click the Enable button to kick off its first run.

Go to the new container instance in your resource group to see the status of the running job in the Containers pane.

When you are finished with your deployment, you can run 06_delete_resources.R to delete the resources that were created.

This project welcomes contributions and suggestions. Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.microsoft.com.

When you submit a pull request, a CLA-bot will automatically determine whether you need to provide a CLA and decorate the PR appropriately (e.g., label, comment). Simply follow the instructions provided by the bot. You will only need to do this once across all repos using our CLA.

This project has adopted the Microsoft Open Source Code of Conduct. For more information see the Code of Conduct FAQ or contact opencode@microsoft.com with any additional questions or comments.