| title | description | keywords |
|---|---|---|
| Deploy DDC on Azure | Learn how to deploy Docker Datacenter with one click, using an ARM (Azure Resource Manager) Template. | docker, datacenter, install, orchestration, management, azure, swarm |

- This repository is not maintained for now. The new Docker EE template for Azure is at https://azuremarketplace.microsoft.com/en-us/marketplace/apps/docker.dockerdatacenter

- The repository was last updated with the 1.13 CS Engine for DDC (ucp-2.0.1_dtr-2.1.3).

- For "Docker for Azure" with OMS, please visit https://aka.ms/docker4azure

- Azure Docker Datacenter

- Azure Docker DataCenter templates for Docker DataCenter with ucp:2.0.1 (native Swarm with Raft) and dtr:2.1.3. Earlier revisions targeted the then to-be-GAed ucp:2.0.0-Beta4 and ucp:2.0.0, and dtr:2.1.0-Beta4, dtr:2.1.0 and dtr:2.1.1. The templates were initially based on the "legacy" Docker DataCenter 1.x Azure MarketPlace Gallery Templates (1.0.9). Read about this DDC version's features in the blog.

- For Raft, please view "Docker Orchestration: Beyond the Basics" by Aaron Lehmann at ContainerCon Europe (Berlin, October 4-9, 2016) and the Swarm Raft page.

- Apps may leverage swarm mode orchestration on engine 1.12.

- Please see the LICENSE file for licensing information.

- The Docker DataCenter license is used as-is in this project (parameterized, or uploaded as a .lic license file). For ucp:2.0.1 and dtr:2.1.3 it can now be obtained as a standard Trial License for Docker DataCenter 2.0 from Docker Store/Hub. For the earlier UCP 2.0.0 Beta4 and ucp:2.0.0, and DTR 2.1.0 Beta4, dtr:2.1.0, dtr:2.1.1 and dtr:2.1.2, it could only be obtained for the private beta. Please refer to this post in the Docker forum for beta details. Please sign up here for the GA waiting list.

- This project has adopted the Microsoft Open Source Code of Conduct. For more information, see the Code of Conduct FAQ or contact opencode@microsoft.com with any additional questions or comments.

- This project is hosted at: https://github.com/Azure/azure-dockerdatacenter

- This repo is initially based on the "legacy" Docker DataCenter 1.x Azure MarketPlace Gallery Templates (1.0.9)

- Uses the 14.04 LTS Images as per docker MarketPlace offer for the above.

- Uses the new public tar.gz, now GAed and with ucp:2.0.1 and dtr:2.1.3, for changes using the Raft protocol and auto HA (introduced for the private beta of the then to-be-GAed DDC) and for native swarm mode orchestration on MS Azure. DDC private beta docs are here and GA guides are here.

- New Go-based Linux CustomScript Extension being used

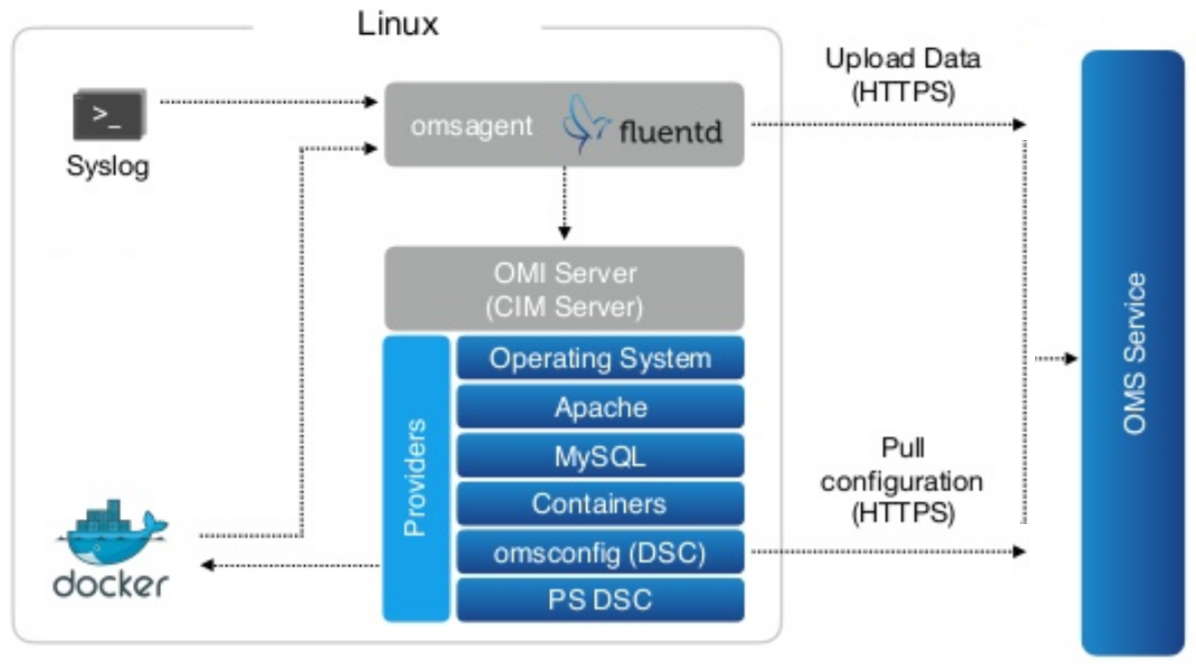

- Uses the Docker CS 1.12 Engine support for OMS Linux Agents

- Latest docker-compose and docker-machine available.

- Dashboard URLs

  - UCP: http://{{UCP Controller Nodes LoadBalancer Full DNS IP name}}.{{region of the Resource Group}}.cloudapp.azure.com

    - This is the FQDN of the load balancer or public IP for the UCP controllers (managers).

  - DTR: http://{{DTR worker Nodes LoadBalancer Full DNS IP name}}.{{region of the Resource Group}}.cloudapp.azure.com

    - This is the FQDN of the load balancer or public IP for DTR.

- All passwords are disabled on the nodes; SSH access to the nodes is possible only with an RSA public key (id_rsa).
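The dashboard addresses above follow a fixed pattern, which can be sketched as a small shell helper. This is only an illustration: the function name `dashboard_url` and the example DNS labels and region are assumptions, not names created by the template.

```shell
#!/bin/sh
# Hypothetical helper (not part of the template): compose a dashboard URL
# from a load balancer DNS label ($1) and the Resource Group region ($2).
dashboard_url() {
  printf 'http://%s.%s.cloudapp.azure.com\n' "$1" "$2"
}

# Placeholder values; substitute the DNS label and region of your deployment.
dashboard_url "myucp" "westeurope"
dashboard_url "mydtr" "westeurope"
```

The same helper covers both the UCP and the DTR dashboards, since only the DNS label differs.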

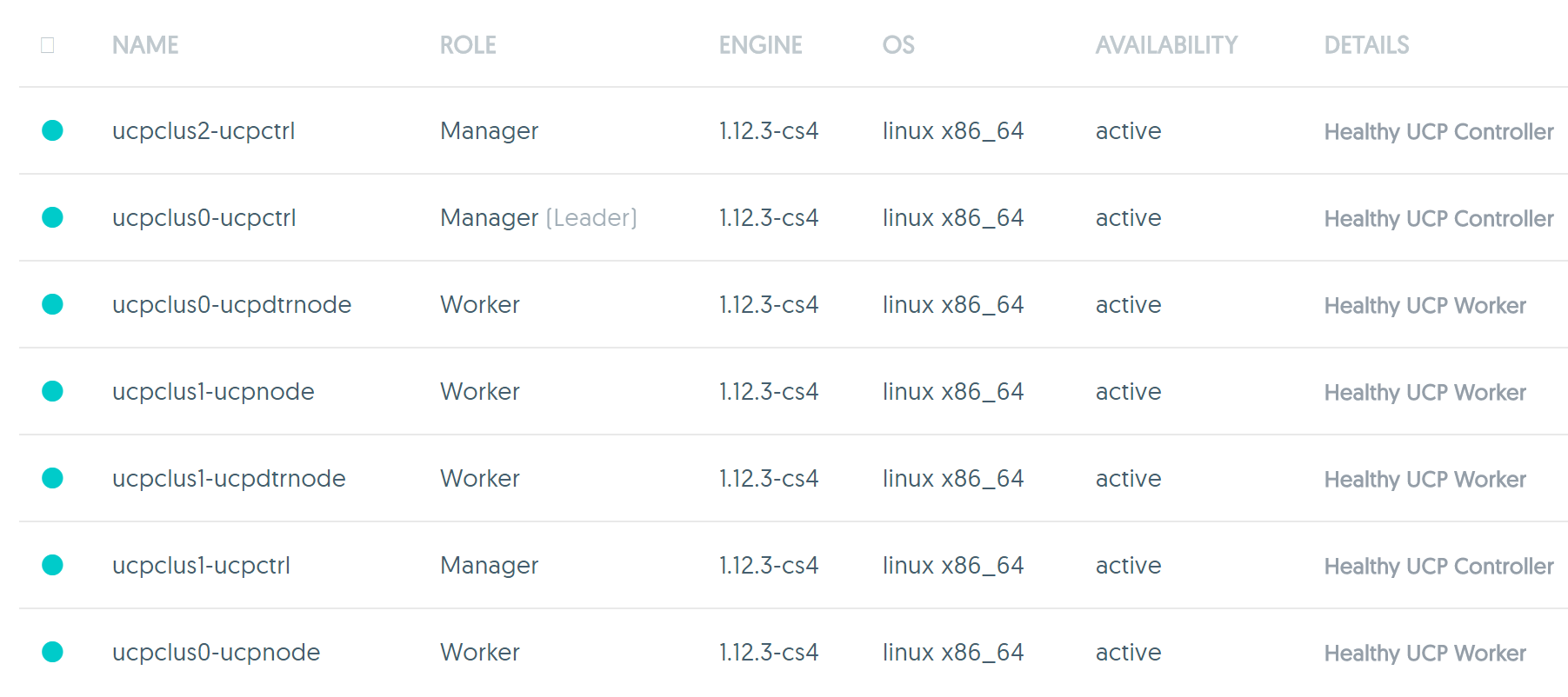

A minimal fresh topology, with at least 3 Worker Manager nodes, at least 2 worker nodes, and one DTR with local storage plus another as its replica, would look like the following:

The Minimal Topology (Minimum 3 Worker Manager Nodes for valid Raft HA and minimum 2 worker nodes with 2 extra for DTR and Replica)

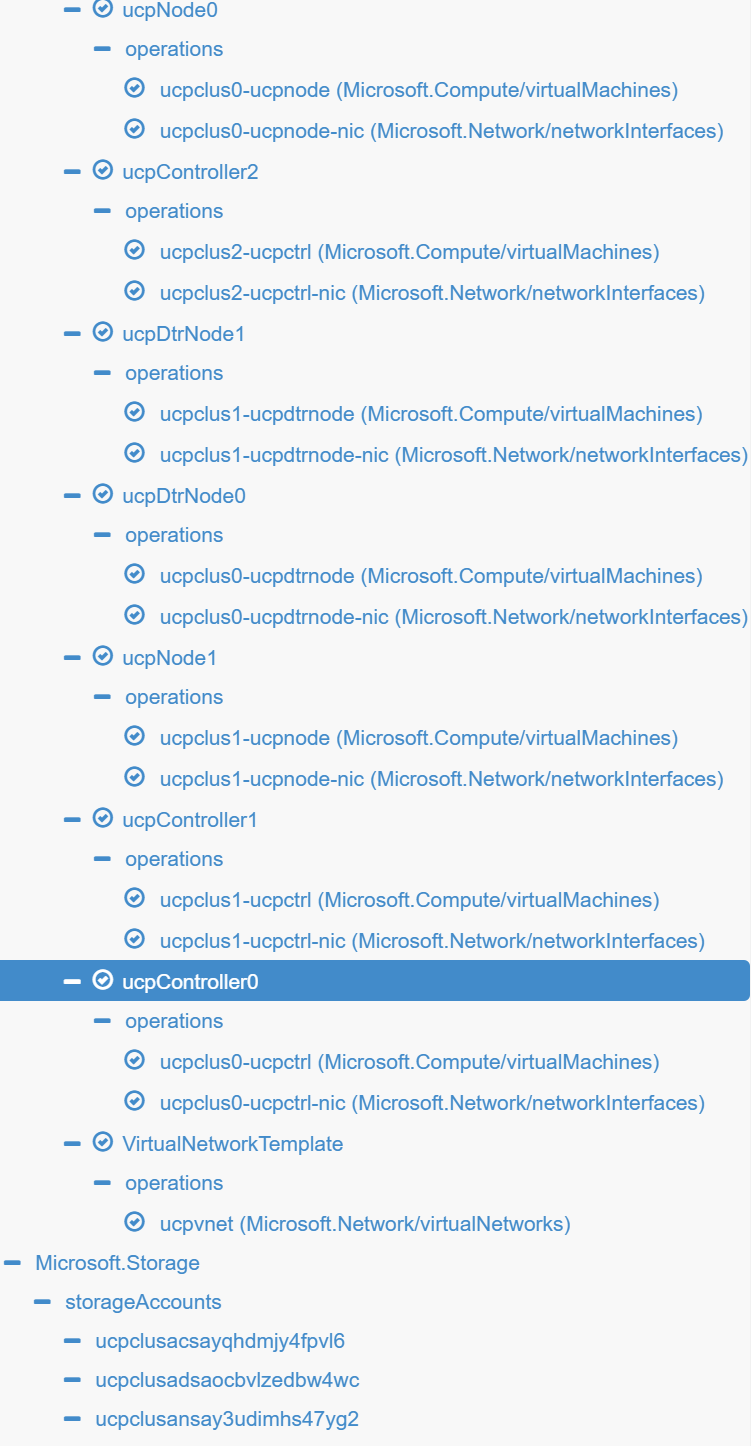

As from https://resources.azure.com
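The minimum of 3 manager nodes follows from Raft's majority rule: a cluster of N managers needs floor(N/2)+1 of them to agree, so it tolerates floor((N-1)/2) manager failures. A minimal sketch of that arithmetic, with illustrative function names that are not part of the templates:

```shell
#!/bin/sh
# Number of managers that must agree for the Raft cluster to make progress.
raft_quorum() { echo $(( $1 / 2 + 1 )); }

# Number of managers that can fail while a majority still remains.
raft_fault_tolerance() { echo $(( ($1 - 1) / 2 )); }

for n in 1 2 3 5 7; do
  printf 'managers=%s quorum=%s tolerated_failures=%s\n' \
    "$n" "$(raft_quorum "$n")" "$(raft_fault_tolerance "$n")"
done
```

With 2 managers no failure is tolerated, while 3 managers tolerate the loss of one, which is why 3 is the smallest count that gives HA.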

Please report bugs by opening an issue in the GitHub Issue Tracker

Patches can be submitted as GitHub pull requests. If using GitHub please make sure your branch applies to the current master as a 'fast forward' merge (i.e. without creating a merge commit). Use the git rebase command to update your branch to the current master if necessary.

OMS Setup is optional and the OMS Workspace Id and OMS Workspace Key can either be kept blank or populated post the steps below.

Create a free account for MS Azure Operational Management Suite with workspaceName

-

Provide a Name for the OMS Workspace.

-

Link your Subscription to the OMS Portal.

-

Depending upon the region, a Resource Group would be created in the Sunscription like "mms-weu" for "West Europe" and the named OMS Workspace with portal details etc. would be created in the Resource Group.

-

Logon to the OMS Workspace and Go to -> Settings -> "Connected Sources" -> "Linux Servers" -> Obtain the Workspace ID like

ba1e3f33-648d-40a1-9c70-3d8920834669and the "Primary and/or Secondary Key" likexkifyDr2s4L964a/Skq58ItA/M1aMnmumxmgdYliYcC2IPHBPphJgmPQrKsukSXGWtbrgkV2j1nHmU0j8I8vVQ== -

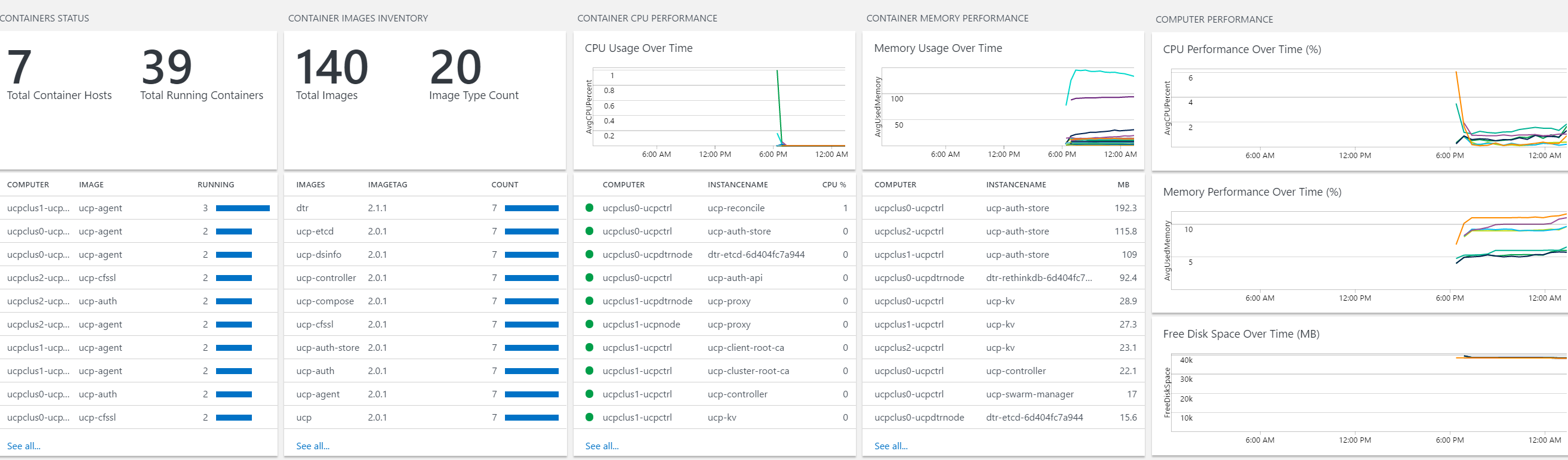

Add The solutions "Agent Health", "Activity Log Analytics" and "Container" Solutions from the "Solutions Gallery" of the OMS Portal of the workspace.

-

While Deploying the DDC Template just the WorkspaceID and the Key are to be mentioned and all will be registered including all containers in any nodes of the DDC auto cluster.

-

Then one can login to https://OMSWorkspaceName.portal.mms.microsoft.com and check all containers running for Docker DataCenter and use Log Analytics and if Required perform automated backups using the corresponding Solutions for OMS.

-

Or if the OMS Workspace and the Machines are in the same subscription, one can just connect the Linux Node sources manually to the OMS Workspace as Data Sources

-

New nodes to be added to the cluster as worker needs to follow the Docker Instructions for OMS manually.

-

All Docker Engines in this Azure DDC autocluster(s) are automatically instrumented via ExecStart and Specific DOCKER_OPTIONS to share metric with the OMS Workspace during deployment as in the picture below.
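Because the template only takes the Workspace ID and Key as parameters, a quick format check before deployment can catch copy-and-paste mistakes. The sketch below assumes the Workspace ID is always GUID-shaped, as in the example value above; the function name `is_workspace_id` is hypothetical and not part of the template.

```shell
#!/bin/sh
# Hypothetical pre-deployment check: succeeds (exit status 0) only if the
# argument has the 8-4-4-4-12 hexadecimal layout of the example Workspace ID.
is_workspace_id() {
  printf '%s' "$1" | grep -Eq '^[0-9a-fA-F]{8}(-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}$'
}

# The ID below is the representational value from the steps above.
if is_workspace_id "ba1e3f33-648d-40a1-9c70-3d8920834669"; then
  echo "Workspace ID format looks valid"
else
  echo "Workspace ID format looks wrong" >&2
fi
```

The check validates only the shape of the ID, not whether the workspace exists.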

Credits : Ahmet's Blog, New Azure cli 2.0 Preview.

- Please visit the Azure Container Registry Page.

- Please refer to the Documentation.

- Create Dockerized new Azure cli

$ docker run -dti -v ${HOME}:/home/azureuser --restart=always --name=azure-cli-python azuresdk/azure-cli-python

- Log in to Azure from the CLI Docker instance

$ docker exec -ti azure-cli-python bash -c "az login && bash"

- Please view the output below. Subscription Id, Tenant Id and names are representational.

- To sign in, use a web browser to open the page https://aka.ms/devicelogin and enter the code XXXXX to authenticate.

[

{

"cloudName": "AzureCloud",

"id": "123a1234-1b23-1e00-11c3-123456789d12",

"isDefault": true,

"name": "Microsoft Azure Subscription Name",

"state": "Enabled",

"tenantId": "12f123bf-12f1-12af-12ab-1d3cd456db78",

"user": {

"name": "ab@company.com",

"type": "user"

}

}

]

- Create a resource group via the new Azure CLI from inside the container logged in to Azure

bash-4.3# az group create -n acr -l southcentralus

{

"id": "/subscriptions/123a1234-1b23-1e00-11c3-123456789d12/resourceGroups/acr",

"location": "southcentralus",

"managedBy": null,

"name": "acr",

"properties": {

"provisioningState": "Succeeded"

},

"tags": null

}

- Create the ACR instance

bash-4.3# az acr create -n acr12345 -g acr -l southcentralus

- Subscription Id, App Id, registry name and login server are representational

- ACR is available presently in eastus, westus and southcentralus

Create a new service principal and assign access:

az ad sp create-for-rbac --scopes /subscriptions/123a1234-1b23-1e00-11c3-123456789d12/resourcegroups/acr/providers/Microsoft.ContainerRegistry/registries/acr12345 --role Owner --password <password>

Use an existing service principal and assign access:

az role assignment create --scope /subscriptions/123a1234-1b23-1e00-11c3-123456789d12/resourcegroups/acr/providers/Microsoft.ContainerRegistry/registries/acr12345 --role Owner --assignee <app-id>

{

"adminUserEnabled": false,

"creationDate": "2016-12-09T03:45:14.843041+00:00",

"id": "/subscriptions/123a1234-1b23-1e00-11c3-123456789d12/resourcegroups/acr/providers/Microsoft.ContainerRegistry/registries/acr12345",

"location": "southcentralus",

"loginServer": "acr12345-microsoft.azurecr.io",

"name": "acr12345",

"storageAccount": {

"accessKey": null,

"name": "acr123456789"

},

"tags": {},

"type": "Microsoft.ContainerRegistry/registries"

}

- Subscription Id, App Id, registry name and login server are representational

bash-4.3# az ad sp create-for-rbac --scopes /subscriptions/123a1234-1b23-1e00-11c3-123456789d12/resourcegroups/acr/providers/Microsoft.ContainerRegistry/registries/acr12345 --role Owner --password bangbaM23#

Retrying role assignment creation: 1/24

Retrying role assignment creation: 2/24

{

"appId": "ab123cd5-b1ab-1234-abab-a2bcd90abcde",

"name": "http://azure-cli-2016-12-09-03-46-57",

"password": "bangbaM23#",

"tenant": "12f123bf-12f1-12af-12ab-1d3cd456db78"

}

- Login

$ docker login -u ab123cd5-b1ab-1234-abab-a2bcd90abcde -p bangbaM23# acr12345-microsoft.azurecr.io

- Pull a public image

$ docker pull dwaiba/azureiot-nodered

- Tag for new ACR

$ docker tag dwaiba/azureiot-nodered acr12345-microsoft.azurecr.io/ab123cd5-b1ab-1234-abab-a2bcd90abcde/azureiot-nodered:latest

- Push to ACR

$ docker push acr12345-microsoft.azurecr.io/ab123cd5-b1ab-1234-abab-a2bcd90abcde/azureiot-nodered:latest

- Run it

$ docker run -dti -p 1880:1880 -p 1881:1881 acr12345-microsoft.azurecr.io/ab123cd5-b1ab-1234-abab-a2bcd90abcde/azureiot-nodered:latest
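The tag, push and run commands above all reuse the same image reference, built as login server, namespace, image name and tag. A minimal sketch of composing it once, assuming the layout shown in the commands above (the helper name `acr_image_ref` is hypothetical, and the values are the representational ones from this walkthrough):

```shell
#!/bin/sh
# Hypothetical helper: compose an image reference for the registry.
# $1 = login server, $2 = namespace (the walkthrough uses the app id),
# $3 = image name, $4 = tag (defaults to "latest" when omitted).
acr_image_ref() {
  printf '%s/%s/%s:%s\n' "$1" "$2" "$3" "${4:-latest}"
}

# Representational values from the walkthrough above.
acr_image_ref "acr12345-microsoft.azurecr.io" \
  "ab123cd5-b1ab-1234-abab-a2bcd90abcde" "azureiot-nodered"
```

The result can be stored in a variable and passed to `docker tag`, `docker push` and `docker run`, which keeps the three commands consistent.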

Docker presently uses the docker4x repository for entirely private images containing small-footprint, dockerized Go apps that support the standard design of Docker CE and Docker EE for public clouds. The following are the latest ones for Azure, including those used for Docker Azure EE (DDC) and Docker CE. The base system service stack of Docker CE and EE can be easily identified via any standard monitoring such as OMS, or from the names returned by

docker search docker4x --limit 100|grep azure

- docker4x/upgrademon-azure

- docker4x/requp-azure

- docker4x/upgrade-azure

- docker4x/upg-azure

- docker4x/ddc-init-azure

- docker4x/l4controller-azure

- docker4x/create-sp-azure

- docker4x/logger-azure

- docker4x/azure-vhd-utils

- docker4x/l4azure

- docker4x/waalinuxagent (dated)

- docker4x/meta-azure

- docker4x/cloud-azure

- docker4x/guide-azure

- docker4x/init-azure

- docker4x/agent-azure