Official implementation of 'Point-PEFT: Parameter-Efficient Fine-Tuning for 3D Pre-trained Models'.

The paper has been accepted by AAAI 2024.

[2023.5] We release 'ViewRefer3D' (ICCV 2023), a multi-view framework for 3D visual grounding that explores how to grasp view knowledge from both the text and 3D modalities with LLMs.

[2024.4] We release 'Any2Point', which adapts pre-trained models of any modality to 3D downstream tasks with only 1% of the parameters while achieving SOTA performance.

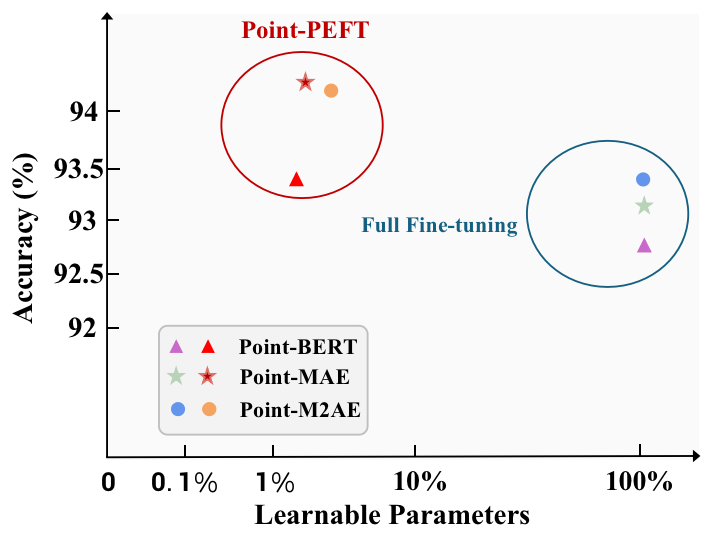

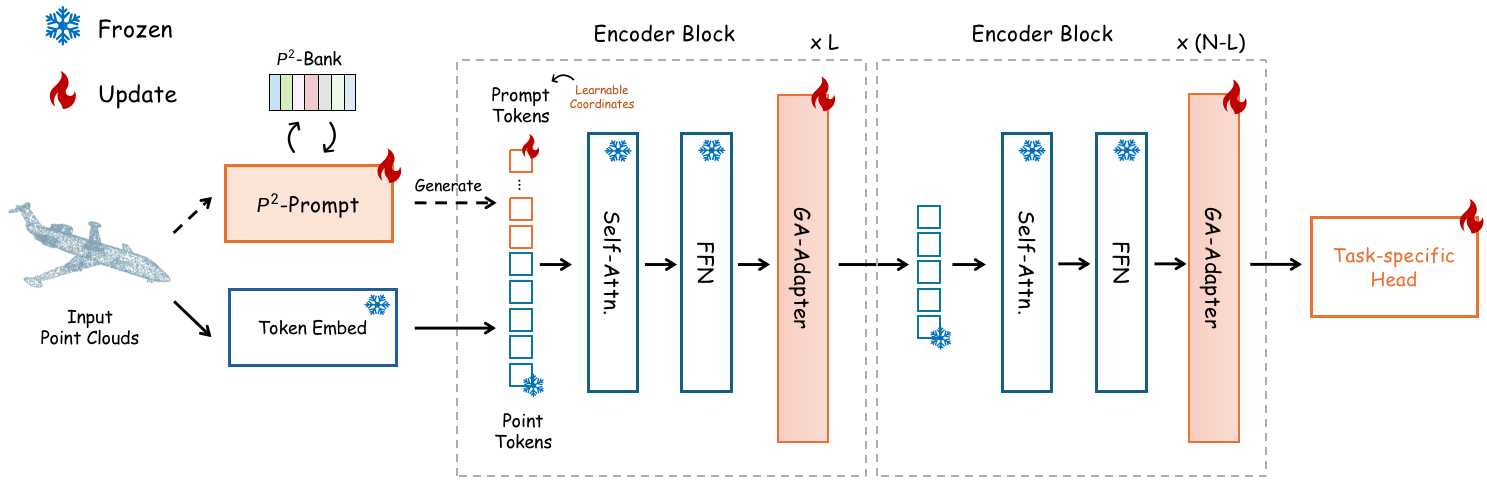

We propose Point-PEFT, a novel framework for adapting point cloud pre-trained models with minimal learnable parameters. Specifically, for a pre-trained 3D model, we freeze most of its parameters and tune only the newly added PEFT modules on downstream tasks. These modules consist of a Point-prior Prompt and a Geometry-aware Adapter. The Point-prior Prompt constructs a memory bank with domain-specific knowledge and uses parameter-free attention to enhance the prompts. The Geometry-aware Adapter aggregates point cloud features within spatial neighborhoods to capture fine-grained geometric information.
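As a rough illustration of the two mechanisms described above, the pure-Python sketch below shows (a) parameter-free attention that retrieves from a fixed memory bank to enhance a prompt, and (b) aggregation of point features within a k-nearest-neighbor spatial neighborhood. It is not taken from this repository: the function names, the cosine-softmax weighting, the `temperature` and `alpha` values, and the mean-pooling aggregation are all assumptions made for illustration. The actual Geometry-aware Adapter also contains learnable layers, which this sketch omits.

```python
import math
from typing import List, Sequence

Vector = List[float]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / (norm + 1e-12)


def parameter_free_attention(query: Sequence[float],
                             keys: Sequence[Sequence[float]],
                             values: Sequence[Sequence[float]],
                             temperature: float = 0.1) -> Vector:
    """Retrieve from a fixed memory bank: softmax over query-key cosine
    similarities, then a weighted sum of the stored values.
    Nothing here is learned; the bank (keys, values) is precomputed."""
    scores = [cosine(query, k) / temperature for k in keys]
    peak = max(scores)  # subtract the max for a numerically stable softmax
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    return [sum(w * v[d] for w, v in zip(weights, values))
            for d in range(len(values[0]))]


def enhance_prompt(prompt, query, keys, values, alpha=0.5):
    """Hypothetical enhancement rule: add the scaled retrieved feature to the prompt."""
    retrieved = parameter_free_attention(query, keys, values)
    return [p + alpha * r for p, r in zip(prompt, retrieved)]


def knn_aggregate(xyz, feats, k):
    """For each point, mean-pool the features of its k nearest neighbors
    (the point itself included) and add the result as a residual."""
    out = []
    for i, p in enumerate(xyz):
        order = sorted(range(len(xyz)), key=lambda j: math.dist(p, xyz[j]))
        nbrs = order[:k]
        pooled = [sum(feats[j][d] for j in nbrs) / k
                  for d in range(len(feats[i]))]
        out.append([f + q for f, q in zip(feats[i], pooled)])
    return out


if __name__ == "__main__":
    keys = [[1.0, 0.0], [0.0, 1.0]]
    values = [[10.0, 0.0], [0.0, 10.0]]
    print(enhance_prompt([0.0, 0.0], [1.0, 1.0], keys, values))  # [2.5, 2.5]
    xyz = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (5.0, 0.0, 0.0)]
    print(knn_aggregate(xyz, [[1.0], [3.0], [100.0]], k=2))  # [[3.0], [5.0], [151.5]]
```

In the usage example, the query is equally similar to both stored keys, so the attention retrieves the average of the two values, `[5.0, 5.0]`, and the prompt becomes `[2.5, 2.5]`. The isolated point at `x = 5.0` pulls in its nearest neighbor's feature, which shows how the neighborhood, not the whole cloud, determines each update.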

Comparison with existing 3D pre-trained models on the PB-T50-RS split of ScanObjectNN:

| Method | Tunable Params | PB-T50-RS |
|---|---|---|
| Point-BERT | 22.1M | 83.1% |
| +Point-PEFT | 0.6M | 85.0% |
| Point-MAE-aug | 22.1M | 88.1% |
| +Point-PEFT | 0.7M | 89.1% |
| Point-M2AE-aug | 12.9M | 88.1% |
| +Point-PEFT | 0.7M | 88.2% |

Comparison with existing 3D pre-trained models on ModelNet40 (without voting):

| Method | Tunable Params | Acc. |
|---|---|---|
| Point-BERT | 22.1M | 92.7% |
| +Point-PEFT | 0.6M | 93.4% |
| Point-MAE | 22.1M | 93.2% |
| +Point-PEFT | 0.8M | 94.2% |
| Point-M2AE | 15.3M | 93.4% |
| +Point-PEFT | 0.6M | 94.1% |
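The parameter savings in the two tables above can be checked with simple arithmetic. The snippet below copies the reported numbers, treats each baseline's parameter count as its full fine-tuning budget, and computes Point-PEFT's tunable parameters as a percentage of that budget together with the accuracy change in percentage points. It introduces no new results.

```python
# Reported numbers copied from the two tables:
# (backbone, full fine-tuning params [M], Point-PEFT params [M],
#  baseline accuracy [%], +Point-PEFT accuracy [%])
RESULTS = {
    "ScanObjectNN PB-T50-RS": [
        ("Point-BERT", 22.1, 0.6, 83.1, 85.0),
        ("Point-MAE-aug", 22.1, 0.7, 88.1, 89.1),
        ("Point-M2AE-aug", 12.9, 0.7, 88.1, 88.2),
    ],
    "ModelNet40": [
        ("Point-BERT", 22.1, 0.6, 92.7, 93.4),
        ("Point-MAE", 22.1, 0.8, 93.2, 94.2),
        ("Point-M2AE", 15.3, 0.6, 93.4, 94.1),
    ],
}


def summarize(full_params, peft_params, base_acc, peft_acc):
    """Return (tunable-parameter ratio in %, accuracy gain in points), rounded to 1 decimal."""
    ratio = 100.0 * peft_params / full_params
    gain = peft_acc - base_acc
    return round(ratio, 1), round(gain, 1)


if __name__ == "__main__":
    for dataset, rows in RESULTS.items():
        for name, full, peft, base, tuned in rows:
            ratio, gain = summarize(full, peft, base, tuned)
            print(f"{dataset:24s} {name:15s} {ratio:4.1f}% params  {gain:+.1f} pts")
```

For example, Point-BERT on ScanObjectNN tunes 0.6M / 22.1M ≈ 2.7% of the parameters while gaining 1.9 points.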

Real-world shape classification on the PB-T50-RS split of ScanObjectNN:

| Method | Acc. | Logs |
|---|---|---|
| Point-M2AE-aug | 88.2% | scan_m2ae.log |
| Point-MAE-aug | 89.1% | scan_mae.log |

Create a conda environment and install basic dependencies:

```bash
git clone https://github.com/EvenJoker/Point-PEFT.git
cd Point-PEFT

conda create -n point-peft python=3.8
conda activate point-peft

# Install torch and torchvision versions that match your CUDA toolkit
conda install pytorch torchvision cudatoolkit
# e.g., conda install pytorch==1.11.0 torchvision==0.12.0 torchaudio==0.11.0 cudatoolkit=11.3

pip install -r requirements.txt
```

Install GPU-related packages:

```bash
# Chamfer Distance and EMD
cd ./extensions/chamfer_dist
python setup.py install --user
cd ../emd
python setup.py install --user
cd ../..  # back to the repository root

# PointNet++
pip install "git+https://github.com/erikwijmans/Pointnet2_PyTorch.git#egg=pointnet2_ops&subdirectory=pointnet2_ops_lib"

# GPU kNN
pip install --upgrade https://github.com/unlimblue/KNN_CUDA/releases/download/0.2/KNN_CUDA-0.2-py3-none-any.whl
```

For pre-training and fine-tuning, please follow DATASET.md to prepare the ModelNet40, ScanObjectNN, and ShapeNetPart datasets, as in Point-BERT. Specifically, put the unzipped folders under `data/`.
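Once the GPU-related packages above are installed, a quick way to confirm that they are visible from the active environment is a small stdlib-only check like the one below. The import names are assumptions: `knn_cuda` and `pointnet2_ops` are the package names used by the KNN_CUDA and Pointnet2_PyTorch projects, while `chamfer` and `emd_cuda` are guesses for the extensions built under `extensions/`; adjust them to whatever the corresponding `setup.py` files declare. `importlib.util.find_spec` only locates a module without importing it, so a CUDA build or runtime error can still surface on first use.

```python
import importlib.util
import sys

# Assumed import names; the two extension names depend on extensions/*/setup.py.
MODULES = ["torch", "chamfer", "emd_cuda", "pointnet2_ops", "knn_cuda"]


def check_modules(names):
    """Map each module name to True if Python can locate it, else False."""
    status = {}
    for name in names:
        try:
            status[name] = importlib.util.find_spec(name) is not None
        except (ImportError, ValueError):
            # find_spec can raise for broken or partially installed packages
            status[name] = False
    return status


if __name__ == "__main__":
    print(f"Python {sys.version.split()[0]}")
    for name, found in check_modules(MODULES).items():
        print(f"{name:15s} {'found' if found else 'MISSING'}")
```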

The final directory structure should be:

```
│Point-PEFT/
├──cfgs/
├──datasets/
├──data/
│   ├──ModelNet/
│   ├──ScanObjectNN/
├──...
```
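A small stdlib-only script can confirm that the layout above is in place before launching fine-tuning. It is a sketch, not part of the repository: it checks only the directories shown in the tree (the `...` entry and the dataset contents are not inspected), and the `missing_dirs` helper is a hypothetical name.

```python
import sys
from pathlib import Path

# Sub-directories taken from the layout above (paths relative to Point-PEFT/).
EXPECTED_DIRS = ["cfgs", "datasets", "data/ModelNet", "data/ScanObjectNN"]


def missing_dirs(root, expected=EXPECTED_DIRS):
    """Return the expected sub-directories that do not exist under root."""
    root = Path(root)
    return [rel for rel in expected if not (root / rel).is_dir()]


if __name__ == "__main__":
    missing = missing_dirs(sys.argv[1] if len(sys.argv) > 1 else ".")
    if missing:
        print("Missing:", ", ".join(missing))
        sys.exit(1)
    print("Directory layout looks complete.")
```

Run it from the repository root (or pass the root as the first argument); a non-zero exit status signals that at least one directory is missing.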

M2AE: Please download `ckpt-best.pth`, `pre-train.pth`, and `cache_shape.pt` into the `ckpts/` folder.

For the PB-T50-RS split of ScanObjectNN, run:

```bash
sh Finetune_cache_prompt_scan.sh
```

MAE: Please download `ckpt-best.pth`, `pre-train.pth`, and `cache_shape.pt` into the `ckpts/` folder.

For the PB-T50-RS split of ScanObjectNN, run:

```bash
sh finetune.sh
```

If you find our paper and code useful in your research, please consider giving a star ⭐ and citation 📝.

```bibtex
@inproceedings{tang2024point,
  title={Point-PEFT: Parameter-efficient fine-tuning for 3D pre-trained models},
  author={Tang, Yiwen and Zhang, Ray and Guo, Zoey and Ma, Xianzheng and Zhao, Bin and Wang, Zhigang and Wang, Dong and Li, Xuelong},
  booktitle={Proceedings of the AAAI Conference on Artificial Intelligence},
  volume={38},
  number={6},
  pages={5171--5179},
  year={2024}
}
```

This repo benefits from Point-M2AE, Point-BERT, and Point-MAE. Thanks for their wonderful work.