
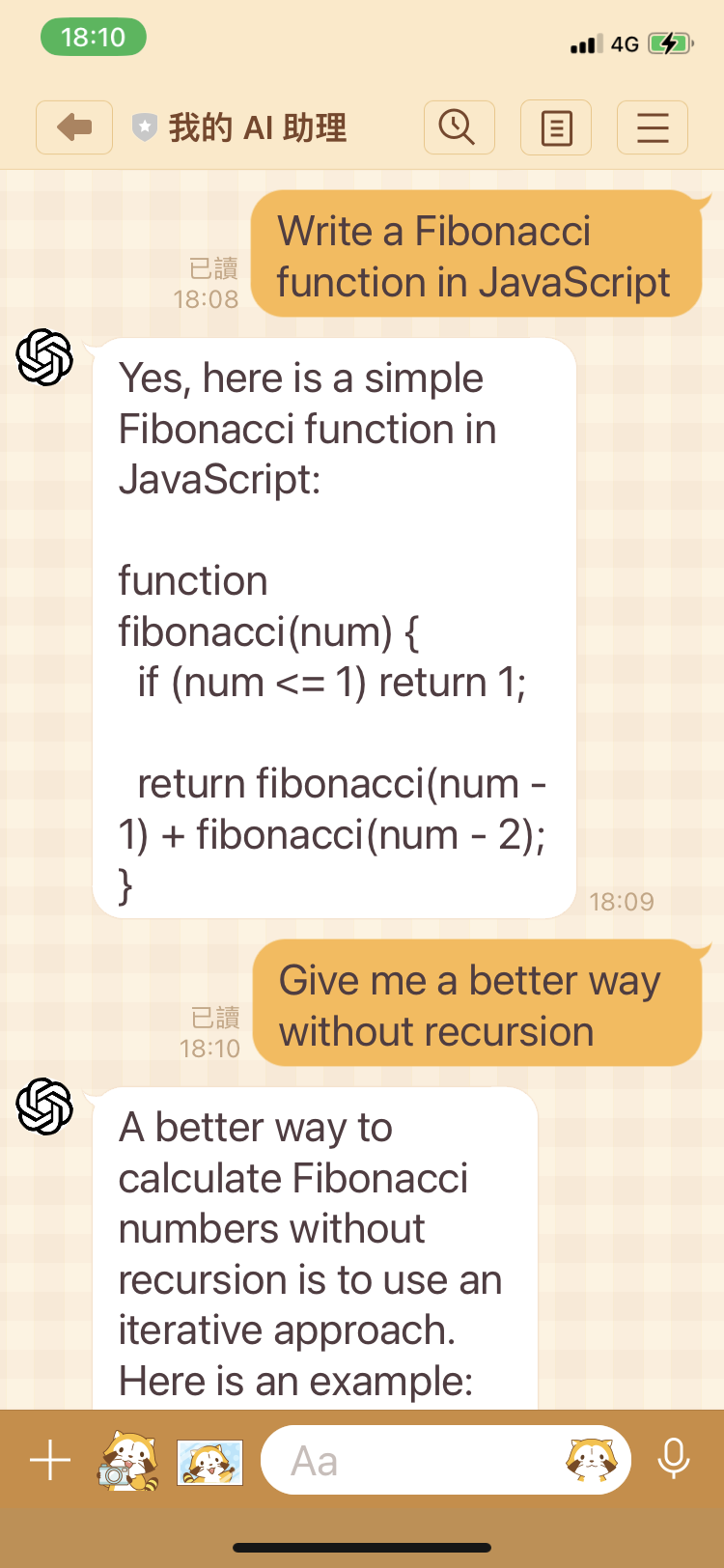

GPT AI Assistant is a lightweight, extensible application built on the OpenAI API and the LINE Messaging API.

After completing the installation steps below, you can chat with your own AI assistant in the LINE mobile app.

- Log in to the OpenAI website.

- Generate an OpenAI API key.

- Log in to the LINE website.

- Add a provider (e.g. "My Provider").

- Create a channel (e.g. "My AI Assistant") of type Messaging API.

- Click the "Messaging API" tab and generate a channel access token.

- Log in to the GitHub website.

- Go to the `gpt-ai-assistant` project.

- Click the "Star" button to support this project and the developer.

- Click the "Fork" button to copy the source code to your own repository.

- Log in to the Vercel website.

- Click the "Create a New Project" button to create a new project.

- Click the "Import" button to import the `gpt-ai-assistant` project.

- Click the "Environment Variables" tab and add the following environment variables with their corresponding values:

  - `OPENAI_API_KEY` with the OpenAI API key.
  - `LINE_CHANNEL_ACCESS_TOKEN` with the LINE channel access token.
  - `LINE_CHANNEL_SECRET` with the LINE channel secret.
  - `APP_LANG` with `en`.

- Click the "Deploy" button and wait for the deployment to complete.

- Go to the dashboard and copy the application URL, e.g. "https://gpt-ai-assistant.vercel.app/".

- Go back to the LINE website.

- Go to the "My AI Assistant" page, click the "Messaging API" tab, set the "Webhook URL" to the application URL followed by the "/webhook" path, e.g. "https://gpt-ai-assistant.vercel.app/webhook", and click the "Update" button.

- Click the "Verify" button to verify the webhook call is successful.

- Enable the "Use webhook" feature.

- Disable the "Auto-reply messages" feature.

- Disable the "Greeting messages" feature.

- Scan the QR code with the LINE mobile app to add the assistant as a friend.

- Start chatting with your own AI assistant!
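The "Verify" step above relies on the LINE channel secret. LINE signs each webhook request with an HMAC-SHA256 digest of the request body, keyed with the channel secret and sent Base64-encoded in the `x-line-signature` header. The following is a minimal sketch of that check, assuming Node.js; the helper names `computeSignature` and `isValidSignature` are hypothetical, and this README does not state how the project itself performs the check.

```javascript
const crypto = require('node:crypto');

// Compute the Base64-encoded HMAC-SHA256 digest of the raw request body,
// using the LINE channel secret as the key.
function computeSignature(channelSecret, rawBody) {
  return crypto.createHmac('sha256', channelSecret).update(rawBody).digest('base64');
}

// Hypothetical helper: returns true when the header value matches the body.
function isValidSignature(channelSecret, rawBody, signatureHeader) {
  const expected = Buffer.from(computeSignature(channelSecret, rawBody));
  const received = Buffer.from(String(signatureHeader || ''));
  // timingSafeEqual throws on a length mismatch, so compare lengths first.
  return expected.length === received.length && crypto.timingSafeEqual(expected, received);
}

// Example with a made-up secret and payload.
const body = JSON.stringify({ destination: 'U0000', events: [] });
const signature = computeSignature('example-secret', body);
console.log(isValidSignature('example-secret', body, signature)); // true
console.log(isValidSignature('example-secret', body, 'tampered')); // false
```

If verification fails in the LINE console, a mismatched `LINE_CHANNEL_SECRET` value is one possible cause worth checking.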

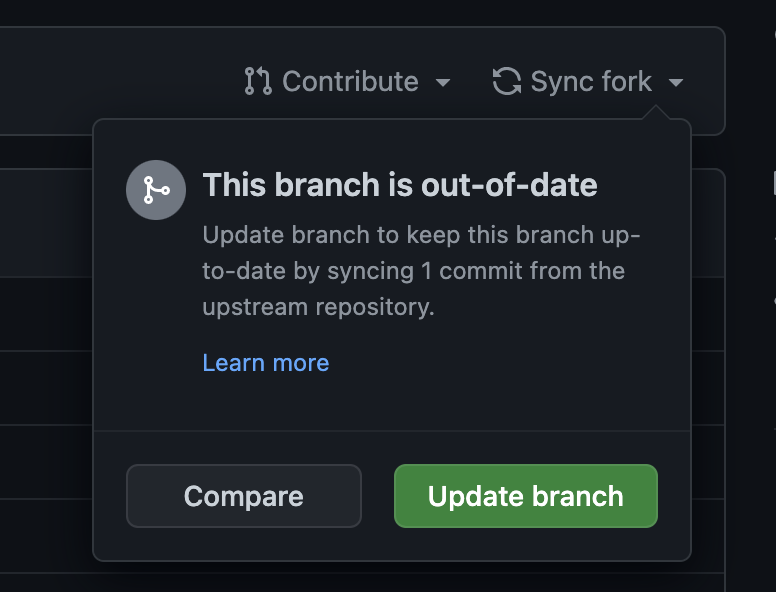

On your own gpt-ai-assistant project page, open the "Sync fork" menu and click either the "Update branch" or "Discard commit" button to synchronize the latest code to your repository.

When the Vercel bot detects a change in the code, it redeploys the application automatically.

Send commands using the LINE mobile app to perform specific functions.

| Name | Description |

|---|---|

Command, /command |

Show the application commands. |

Version, /version |

Show the application version. |

Chat, /chat |

Start a conversation with AI Assistant. |

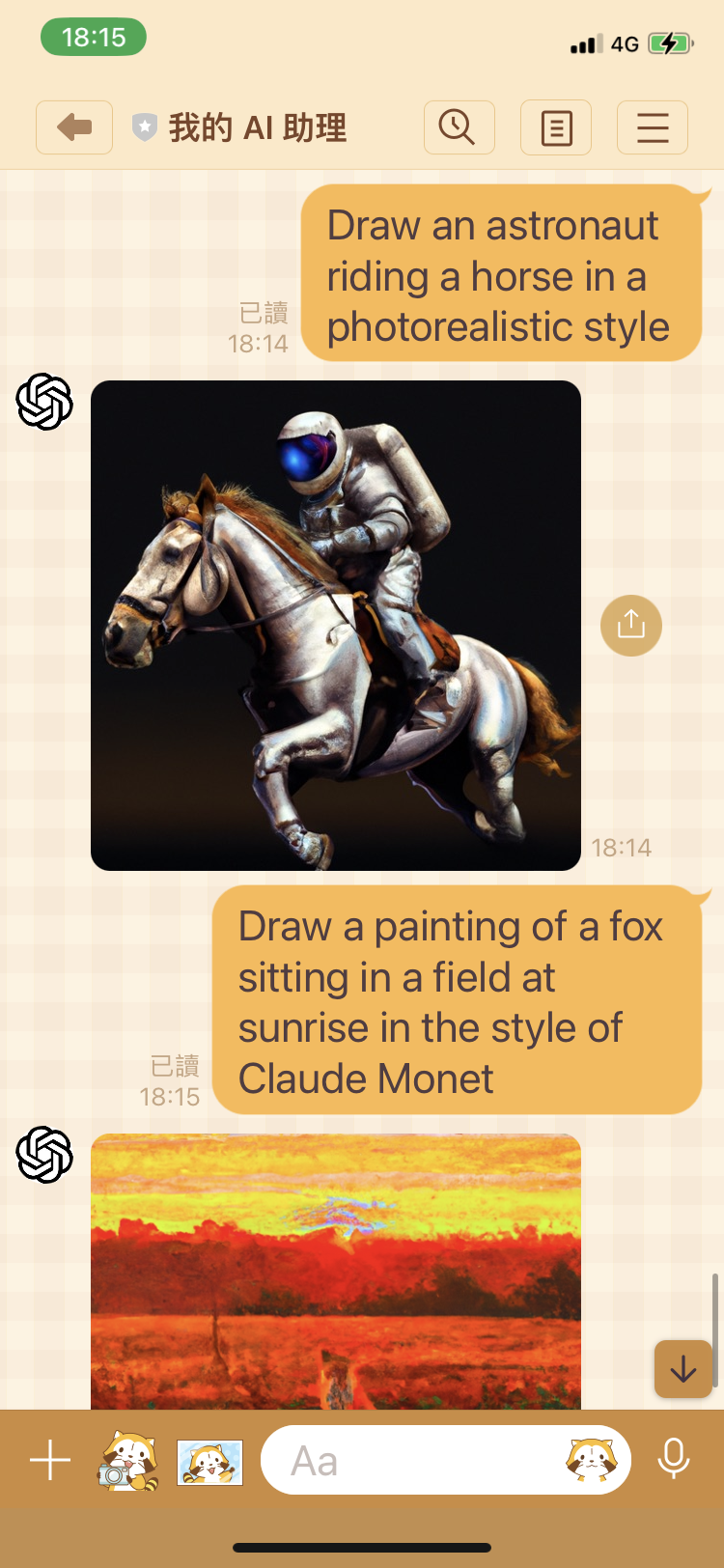

Draw, /draw |

Ask AI Assistant to draw a picture. |

Continue, /continue |

Ask AI Assistant to continue the conversation. |

Activate, /activate |

Activate auto-reply. The VERCEL_ACCESS_TOKEN environment variable is required. |

Deactivate, /deactivate |

Deactivate auto-reply. The VERCEL_ACCESS_TOKEN environment variable is required. |

Restart, /restart |

Deploy the application. The VERCEL_DEPLOY_HOOK_URL environment variable is required. |
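To illustrate how a message might be matched against the commands listed above, here is a small sketch. The command names come from the table; the matching rule (first word, optional leading slash, case-insensitive) and the function name `parseCommand` are assumptions made for illustration and may differ from the project's actual behavior.

```javascript
// Command names taken from the commands table; the lookup logic is an
// illustrative assumption, not the project's implementation.
const COMMANDS = ['command', 'version', 'chat', 'draw', 'continue', 'activate', 'deactivate', 'restart'];

// Returns { name, argument } when the text starts with a known command
// (e.g. "/draw a cat" or "Draw a cat"), or null for an ordinary message.
function parseCommand(text) {
  const trimmed = String(text).trim();
  const [first, ...rest] = trimmed.split(/\s+/);
  const name = first.replace(/^\//, '').toLowerCase();
  if (!COMMANDS.includes(name)) return null;
  return { name, argument: rest.join(' ') };
}

console.log(parseCommand('/draw a cat on the moon')); // { name: 'draw', argument: 'a cat on the moon' }
console.log(parseCommand('Version'));                 // { name: 'version', argument: '' }
console.log(parseCommand('Hello there'));             // null
```

Under this rule, an ordinary sentence that happens to begin with a command word would also be treated as a command, which is one reason a real implementation may choose a stricter match.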

Set environment variables to change program settings.

| Name | Default Value | Description |
|---|---|---|
| `APP_DEBUG` | `false` | Print the prompt to the console. The value must be `true` or `false`. |
| `APP_WEBHOOK_PATH` | `/webhook` | Custom webhook URL path of the application. |
| `APP_LANG` | `zh` | Application language. The value must be one of `zh`, `en` or `ja`. |
| `SETTING_AI_NAME` | `AI` | Name of AI Assistant. Used to call AI Assistant when its status is deactivated. |
| `SETTING_AI_ACTIVATED` | `null` | Status of AI Assistant. Controlled by the application. |
| `VERCEL_ACCESS_TOKEN` | `null` | Vercel access token |
| `VERCEL_DEPLOY_HOOK_URL` | `null` | Vercel deploy hook URL |
| `OPENAI_API_KEY` | `null` | OpenAI API key |
| `OPENAI_COMPLETION_MODEL` | `text-davinci-003` | Refer to the `model` parameter for details. |
| `OPENAI_COMPLETION_TEMPERATURE` | `0.9` | Refer to the `temperature` parameter for details. |
| `OPENAI_COMPLETION_MAX_TOKENS` | `160` | Refer to the `max_tokens` parameter for details. |
| `OPENAI_COMPLETION_FREQUENCY_PENALTY` | `0` | Refer to the `frequency_penalty` parameter for details. |
| `OPENAI_COMPLETION_PRESENCE_PENALTY` | `0.6` | Refer to the `presence_penalty` parameter for details. |
| `OPENAI_IMAGE_GENERATION_SIZE` | `256x256` | Refer to the `size` parameter for details. |
| `LINE_CHANNEL_ACCESS_TOKEN` | `null` | LINE channel access token |
| `LINE_CHANNEL_SECRET` | `null` | LINE channel secret |

After changing any environment variable, click the "Redeploy" button to apply the change.
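As a rough illustration of how the documented defaults could be applied, the sketch below reads settings from an environment object and falls back to the default values listed above. The `loadConfig` function and the shape of the object it returns are hypothetical; only the variable names, defaults, and allowed `APP_LANG` values are taken from this README.

```javascript
// Defaults copied from the environment variable table. The loader is a
// hypothetical sketch and does not claim to mirror the project's own code.
const DEFAULTS = {
  APP_DEBUG: 'false',
  APP_WEBHOOK_PATH: '/webhook',
  APP_LANG: 'zh',
  SETTING_AI_NAME: 'AI',
  OPENAI_COMPLETION_MODEL: 'text-davinci-003',
  OPENAI_COMPLETION_TEMPERATURE: '0.9',
  OPENAI_COMPLETION_MAX_TOKENS: '160',
  OPENAI_COMPLETION_FREQUENCY_PENALTY: '0',
  OPENAI_COMPLETION_PRESENCE_PENALTY: '0.6',
  OPENAI_IMAGE_GENERATION_SIZE: '256x256',
};

function loadConfig(env = process.env) {
  // Treat unset and empty values the same way: fall back to the default.
  const get = (key) => (env[key] !== undefined && env[key] !== '' ? env[key] : DEFAULTS[key]);
  const lang = get('APP_LANG');
  if (!['zh', 'en', 'ja'].includes(lang)) {
    throw new Error(`APP_LANG must be one of zh, en or ja, got "${lang}"`);
  }
  return {
    debug: get('APP_DEBUG') === 'true',
    webhookPath: get('APP_WEBHOOK_PATH'),
    lang,
    aiName: get('SETTING_AI_NAME'),
    completion: {
      model: get('OPENAI_COMPLETION_MODEL'),
      temperature: Number(get('OPENAI_COMPLETION_TEMPERATURE')),
      max_tokens: Number(get('OPENAI_COMPLETION_MAX_TOKENS')),
      frequency_penalty: Number(get('OPENAI_COMPLETION_FREQUENCY_PENALTY')),
      presence_penalty: Number(get('OPENAI_COMPLETION_PRESENCE_PENALTY')),
    },
    imageSize: get('OPENAI_IMAGE_GENERATION_SIZE'),
  };
}

const config = loadConfig({ APP_LANG: 'en', OPENAI_COMPLETION_MAX_TOKENS: '256' });
console.log(config.lang, config.completion.max_tokens, config.completion.temperature); // en 256 0.9
```

Environment variables are always strings, so numeric settings are converted explicitly here; a stricter version might also reject values that do not parse as numbers.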

- Check that the project's environment variables are filled out correctly in Vercel.

- Click the "Redeploy" button to redeploy if there are any changes.

- If the problem persists, go to the Issues page, describe the problem, and attach a screenshot.
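For the first troubleshooting step, a quick local check can help spot a variable that is unset or blank. This is a minimal sketch: the list of required names follows the installation steps in this README, while the `findMissingEnv` helper is hypothetical and not part of the project.

```javascript
// Variables the installation steps ask you to set in Vercel.
const REQUIRED = ['OPENAI_API_KEY', 'LINE_CHANNEL_ACCESS_TOKEN', 'LINE_CHANNEL_SECRET'];

// Returns the names of required variables that are unset or blank.
function findMissingEnv(env = process.env) {
  return REQUIRED.filter((key) => !env[key] || env[key].trim() === '');
}

// Example with made-up values: one variable absent, one only whitespace.
const missing = findMissingEnv({ OPENAI_API_KEY: 'sk-example', LINE_CHANNEL_SECRET: '  ' });
console.log(missing); // [ 'LINE_CHANNEL_ACCESS_TOKEN', 'LINE_CHANNEL_SECRET' ]
```

This only confirms that values are present; it cannot tell whether a key or token is actually valid.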

Clone the project.

```bash
git clone git@github.com:memochou1993/gpt-ai-assistant.git
```

Go to the project directory.

```bash
cd gpt-ai-assistant
```

Install dependencies.

```bash
npm ci
```

Copy `.env.example` to `.env.test`.

```bash
cp .env.example .env.test
```

Run the tests.

```bash
npm run test
```

Check the results.

```
> gpt-ai-assistant@0.0.0 test
> jest

  console.info
    === 000000 ===

    AI: 嗨!我可以怎麼幫助你?
    Human: 嗨?
    AI: OK!

Test Suites: 1 passed, 1 total
Tests:       1 passed, 1 total
Snapshots:   0 total
Time:        1 s
```

Copy `.env.example` to `.env`.

```bash
cp .env.example .env
```

Set the environment variables as follows:

```
APP_DEBUG=true
APP_PORT=3000
VERCEL_GIT_REPO_SLUG=gpt-ai-assistant
VERCEL_ACCESS_TOKEN=<your_vercel_access_token>
OPENAI_API_KEY=<your_openai_api_key>
LINE_CHANNEL_ACCESS_TOKEN=<your_line_channel_access_token>
LINE_CHANNEL_SECRET=<your_line_channel_secret>
```

Start a local server.

```bash
npm run dev
```

Start a proxy server.

```bash
ngrok http 3000
```

Go back to the LINE website, change the "Webhook URL" to e.g. "https://0000-0000-0000.jp.ngrok.io/webhook" and click the "Update" button.

Send a message from the LINE mobile app.

Check the results.

```
> gpt-ai-assistant@1.0.0 dev
> node api/index.js

=== 0x1234 ===

AI: 哈囉!
Human: 嗨?
AI: 很高興見到你!有什麼可以為你服務的嗎?
```

Copy `.env.example` to `.env`.

```bash
cp .env.example .env
```

Set the environment variables as follows:

```
APP_DEBUG=true
APP_PORT=3000
VERCEL_GIT_REPO_SLUG=gpt-ai-assistant
VERCEL_ACCESS_TOKEN=<your_vercel_access_token>
OPENAI_API_KEY=<your_openai_api_key>
LINE_CHANNEL_ACCESS_TOKEN=<your_line_channel_access_token>
LINE_CHANNEL_SECRET=<your_line_channel_secret>
```

Start a local server with Docker Compose.

```bash
docker-compose up -d
```

Detailed changes for each release are documented in the release notes.

- jayer95 - Debugging

- All other contributors