This is a microservice of our IASA-KA-75-KA-76-Pharos-Production-Distributed-Systems project called SagasLife. It is responsible for scaling photos to different resolutions (by width).

- About

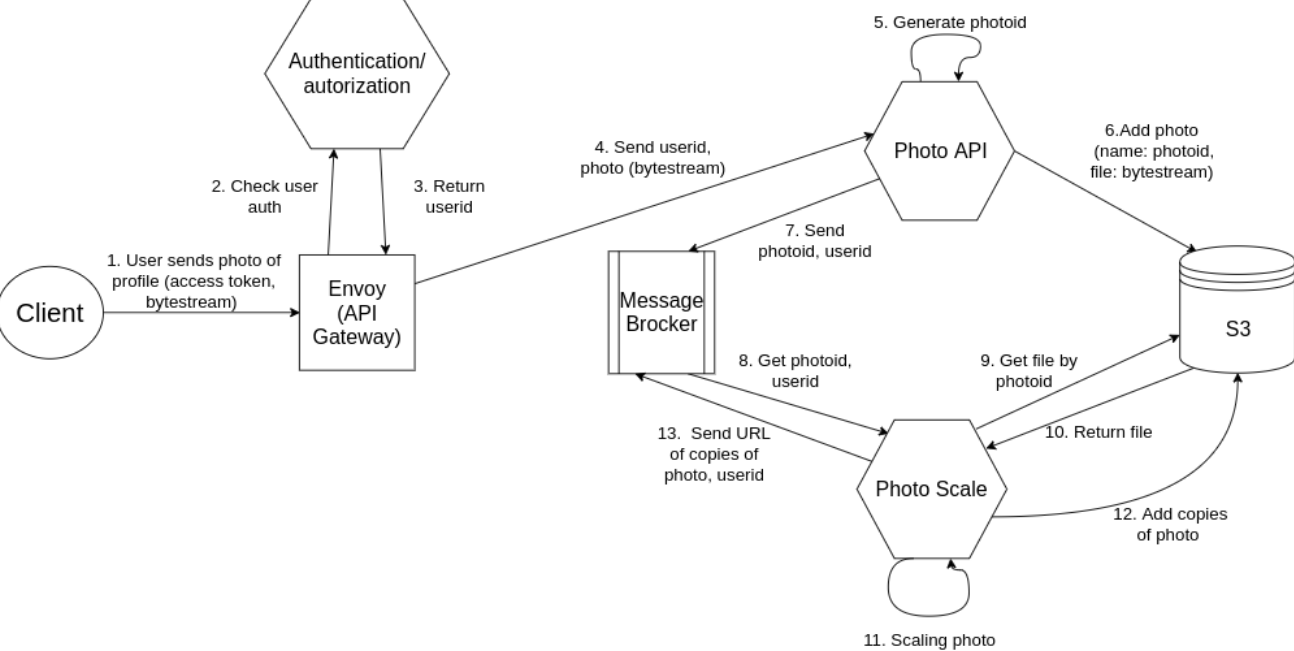

- Data flows

- Algorithm

- Examples

- Customization

- Running

- Running kafka

- Running tests

- Running in docker

The main operation is scaling the original photo. The two operations this service accepts are put (scale) and delete of a photo, and both of them accept an image name. Requests to this microservice are sent via Kafka topics, and all of the topic names can be configured in `conf/config.json`. All errors are reported to the Sagas microservice via the topic set in the `sagasTopic` option of the config file.

There are two main topics, `userpicsTopic` and `photosTopic`, each streaming two types of messages: `put:` and `del:` a photo. The topic determines which AWS S3 bucket is used: the one that stores the original photo to rescale and in which the new scales will be stored.
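As an illustration of the message format, here is a minimal Java sketch that splits a message value into a request type and an image name. It is not the service's actual code: the `RequestParser` class and its members are hypothetical, and it assumes the prefixes come from the `scaleRequest` and `deleteRequest` config options and that everything after the prefix is the image name.

```java
import java.util.Optional;

/**
 * Hypothetical helper (not the project's actual class): splits a Kafka
 * message value such as "put:pretty_woman.jpg" into a request type and an
 * image name, using prefixes like the scaleRequest / deleteRequest options.
 */
class RequestParser {

    public enum Type { SCALE, DELETE }

    public static final class Request {
        public final Type type;
        public final String imageName;

        Request(Type type, String imageName) {
            this.type = type;
            this.imageName = imageName;
        }
    }

    private final String scalePrefix;
    private final String deletePrefix;

    RequestParser(String scalePrefix, String deletePrefix) {
        this.scalePrefix = scalePrefix;
        this.deletePrefix = deletePrefix;
    }

    /** Returns empty if the message starts with neither prefix or has no image name. */
    Optional<Request> parse(String message) {
        if (message.startsWith(scalePrefix)) {
            return build(Type.SCALE, message.substring(scalePrefix.length()));
        }
        if (message.startsWith(deletePrefix)) {
            return build(Type.DELETE, message.substring(deletePrefix.length()));
        }
        return Optional.empty();
    }

    private static Optional<Request> build(Type type, String name) {
        String trimmed = name.trim();
        return trimmed.isEmpty() ? Optional.empty() : Optional.of(new Request(type, trimmed));
    }

    public static void main(String[] args) {
        RequestParser parser = new RequestParser("put:", "del:");
        // prints "SCALE pretty_woman.jpg"
        parser.parse("put:pretty_woman.jpg")
              .ifPresent(r -> System.out.println(r.type + " " + r.imageName));
    }
}
```

Returning an empty `Optional` for an unknown prefix leaves it to the caller to decide what to do with a malformed message, for example reporting it as an error.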

Message in `userpicsTopic`:

`put:pretty_woman.jpg`

Actions:

`put:` means this is a scale request, and `userpicsTopic` means that this photo is stored in `userpicsBucket`.

- Get the original `pretty_woman.jpg` photo from AWS S3.
- Scale it to the different sizes defined in the config file.
- Send the scaled copies to S3, adding the suffixes defined in the config. For example, three new files will be created: `pretty_woman.jpg.sm`, `pretty_woman.jpg.md` and `pretty_woman.jpg.lg`, which stand for the small, medium and large sizes respectively.
- Report the operation status to Sagas.
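Since scales are defined by width, the height of each copy has to follow from the original aspect ratio. Below is a minimal sketch of that step using the standard `java.awt` imaging classes; `WidthScaler` and the example widths 320, 640 and 1024 are hypothetical and are not taken from the project's config or its `ImageResize` class.

```java
import java.awt.Graphics2D;
import java.awt.RenderingHints;
import java.awt.image.BufferedImage;

/**
 * Hypothetical sketch (not the project's ImageResize class): scales an image
 * to a target width and derives the height from the original aspect ratio.
 */
class WidthScaler {

    static BufferedImage scaleToWidth(BufferedImage source, int targetWidth) {
        if (targetWidth <= 0) {
            throw new IllegalArgumentException("targetWidth must be positive");
        }
        // Keep the aspect ratio: height scales by the same factor as width.
        int targetHeight = Math.max(1, (int) Math.round(
                (double) source.getHeight() * targetWidth / source.getWidth()));

        // TYPE_INT_RGB has no alpha channel, which suits jpg output.
        BufferedImage result =
                new BufferedImage(targetWidth, targetHeight, BufferedImage.TYPE_INT_RGB);
        Graphics2D g = result.createGraphics();
        try {
            g.setRenderingHint(RenderingHints.KEY_INTERPOLATION,
                    RenderingHints.VALUE_INTERPOLATION_BILINEAR);
            g.drawImage(source, 0, 0, targetWidth, targetHeight, null);
        } finally {
            g.dispose();
        }
        return result;
    }

    public static void main(String[] args) {
        BufferedImage original = new BufferedImage(1200, 800, BufferedImage.TYPE_INT_RGB);
        // Prints 320x213, 640x427 and 1024x683, one per line.
        for (int width : new int[] {320, 640, 1024}) {
            BufferedImage scaled = scaleToWidth(original, width);
            System.out.println(scaled.getWidth() + "x" + scaled.getHeight());
        }
    }
}
```

Dropping the alpha channel is fine for `jpg`, but a format with transparency would need an ARGB image type instead.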

Message in `photosTopic`:

`del:pretty_woman.jpg`

Actions:

`del:` means this is a delete request, and `photosTopic` means that this photo is stored in `photosBucket`.

- Send a removal request to AWS S3, adding the suffixes defined in the config. For example, three files will be removed: `pretty_woman.jpg.sm`, `pretty_woman.jpg.md` and `pretty_woman.jpg.lg`, which stand for the small, medium and large sizes respectively.
- Report the operation status to Sagas.
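Both flows derive the S3 keys of the scaled copies the same way: the image name plus a configured suffix. A hypothetical helper for that, assuming the suffixes are stored without the leading dot (as `sm`, `md`, `lg`) and joined to the name with `.`:

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: builds the S3 keys of the scaled copies of one image. */
class ScaleKeys {

    /** Appends each suffix to the image name, e.g. "a.jpg" + "sm" -> "a.jpg.sm". */
    static List<String> keysFor(String imageName, List<String> suffixes) {
        List<String> keys = new ArrayList<>(suffixes.size());
        for (String suffix : suffixes) {
            keys.add(imageName + "." + suffix);
        }
        return keys;
    }

    public static void main(String[] args) {
        // prints [pretty_woman.jpg.sm, pretty_woman.jpg.md, pretty_woman.jpg.lg]
        System.out.println(keysFor("pretty_woman.jpg", List.of("sm", "md", "lg")));
    }
}
```

Using one helper for both the upload after scaling and the removal keeps the two flows from drifting apart when a new size is added.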

All these settings are defined in `scales/src/main/resources/conf/config.json`, the single source of truth. Customize it to suit your needs, even without reloading the project.

- `sizes`: the sizes to scale into; add new ones here
- `aws`:
  - `photosBucket`: name of the S3 bucket for ordinary photos
  - `userpicsBucket`: name of the S3 bucket for userpics
  - `region`: region of the buckets, as it appears in AWS links (e.g. `us-east-2`)
- `extension`: image extension (e.g. `jpg`)
- `kafka`:
  - `host`, `port`: host and port of the Kafka broker
  - `photosTopic`: name of the ordinary photos topic
  - `userpicsTopic`: name of the userpics topic
  - `sagasTopic`: name of the Sagas topic
  - `deleteRequest`: prefix of a delete request (like `del:`)
  - `scaleRequest`: prefix of a scale request (like `put:`)
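The topic a request arrives on decides which bucket is used. A minimal sketch of that routing, with a hypothetical `BucketRouter` class and placeholder bucket names standing in for the `photosBucket` and `userpicsBucket` values from `config.json`:

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

/** Hypothetical sketch: maps a Kafka topic name to the S3 bucket it serves. */
class BucketRouter {

    private final Map<String, String> bucketByTopic = new HashMap<>();

    BucketRouter(String photosTopic, String photosBucket,
                 String userpicsTopic, String userpicsBucket) {
        bucketByTopic.put(photosTopic, photosBucket);
        bucketByTopic.put(userpicsTopic, userpicsBucket);
    }

    /** Empty for a topic that is not one of the two configured ones. */
    Optional<String> bucketFor(String topic) {
        return Optional.ofNullable(bucketByTopic.get(topic));
    }

    public static void main(String[] args) {
        // Placeholder values; in the service they would come from config.json.
        BucketRouter router = new BucketRouter(
                "photosTopic", "my-photos-bucket",
                "userpicsTopic", "my-userpics-bucket");
        // prints "my-userpics-bucket"
        System.out.println(router.bucketFor("userpicsTopic").orElse("unknown topic"));
    }
}
```

Returning `Optional` makes an unexpected topic an explicit case, which could then be reported to Sagas rather than silently ignored.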

Use Java 12. Add a new run configuration with these settings:

- Main class: `scales.Launcher`
- Program arguments: `run scales.verticles.MainVerticle -cluster`
- Classpath of module: `photo-scale-microservice.scales.main`

Then provide the environment variables `AWS_S3_ACCESS_KEY` and `AWS_S3_SECRET_KEY` to use your AWS S3. You can get both of them as described here.

For more info on configuring the run, read this one.
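To fail fast when the credentials are missing, a startup check could verify both variables before anything talks to S3. A hypothetical sketch, not part of the project: it takes the environment as a `Map` so it can be tested without touching the real environment, and it never prints the values themselves.

```java
import java.util.Map;

/** Hypothetical startup check for the two AWS S3 environment variables. */
class AwsCredentialsCheck {

    static final String ACCESS_KEY_VAR = "AWS_S3_ACCESS_KEY";
    static final String SECRET_KEY_VAR = "AWS_S3_SECRET_KEY";

    /** Returns the value of a required variable, or throws naming the missing one. */
    static String require(Map<String, String> env, String name) {
        String value = env.get(name);
        if (value == null || value.isBlank()) {
            throw new IllegalStateException("Environment variable " + name + " is not set");
        }
        return value;
    }

    public static void main(String[] args) {
        Map<String, String> env = System.getenv();
        try {
            require(env, ACCESS_KEY_VAR);
            require(env, SECRET_KEY_VAR);
            // Only report presence; never log the secret values.
            System.out.println("AWS S3 credentials are set");
        } catch (IllegalStateException e) {
            System.err.println(e.getMessage());
        }
    }
}
```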

To run the project you need to start Kafka with the configuration from the `scales/src/main/resources/conf/config.json` file. Go to `misc/kafk..`, where there are 5 `.sh` files:

- `start1ZooKeeper.sh`
- `start2Kafka.sh`
- `createTopic.sh`
- `listenTopic.sh`
- `send2Topic.sh`

Here is my normal usage of these commands (assuming the topics have already been created):

- `./start1ZooKeeper.sh`
- `./start2Kafka.sh`
- `./send2Topic.sh local userpicsTopic`
- `./send2Topic.sh local photosTopic`
- `./listenTopic.sh local sagasTopic`

P.S. You'll need a whole lot of terminals (or a terminal with split panes, like Terminator. Highly recommended!)

There are two types of tests for this project:

- Unit tests: testing autonomous parts of the code

- Integration tests: testing the project as a whole

From now on, paths are relative to the `scales/src/test/java` directory.

There is currently one unit test: `ResizeTest`. Add a JUnit configuration pointing to `unit/ResizeTest`.

It is responsible for testing the `ImageResize` class.

To run the integration tests you need to start Kafka and run the main configuration (the two steps above).

Then add two configurations pointing to `integration/DeleteTest` and `integration/ScaleTest`.

You can easily add your own tests of this type: just inherit from the abstract class `integration/ScaleGeneralTest`

and define the custom method `actualTests`, filling it with calls to these 4 methods:

- `delPhoto`: puts a delete request to `photosTopic`
- `delUserpic`: puts a delete request to `userpicsTopic`
- `putPhoto`: puts a scale request to `photosTopic`
- `putUserpic`: puts a scale request to `userpicsTopic`

That's it, the inherited code will do the job for you!

But beware of mixing `del` and `put` requests!

Because of the asynchronous nature of Vert.x, they might not complete in the order you send them, even though the tests will still pass.

This is acceptable because in the real world there is no chance that both types of requests are fired at the same photo simultaneously.
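If a test really must put and then delete the same photo, one way to avoid the race is to issue the delete only after the put has completed. The sketch below shows the idea with `CompletableFuture.thenCompose`; `sendPut` and `sendDel` are hypothetical stand-ins that only record the call, not the methods of `ScaleGeneralTest`, and how completion would be observed in the real service (for example via the status reported to `sagasTopic`) is an assumption.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.CompletableFuture;

/** Hypothetical sketch: sequencing a delete after a put on the same photo. */
class OrderedRequests {

    // Records the order in which the simulated requests complete.
    static final List<String> log = Collections.synchronizedList(new ArrayList<>());

    // Stand-in for sending a scale request and waiting for it to finish.
    static CompletableFuture<Void> sendPut(String image) {
        return CompletableFuture.runAsync(() -> log.add("put:" + image));
    }

    // Stand-in for sending a delete request and waiting for it to finish.
    static CompletableFuture<Void> sendDel(String image) {
        return CompletableFuture.runAsync(() -> log.add("del:" + image));
    }

    /** Starts the delete only once the put has completed. */
    static CompletableFuture<Void> putThenDelete(String image) {
        return sendPut(image).thenCompose(ignored -> sendDel(image));
    }

    public static void main(String[] args) {
        putThenDelete("pretty_woman.jpg").join();
        // prints [put:pretty_woman.jpg, del:pretty_woman.jpg]
        System.out.println(log);
    }
}
```

Firing `sendPut` and `sendDel` independently, without `thenCompose`, would give no ordering guarantee, which is exactly the situation the warning above describes.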

Just run `docker-compose up` and you are done. Well, it is not THAT easy, but it is easy indeed.

First, read the `.env_exmpl` file and do what it tells you.

Then you can run `docker-compose up`; it will do the following for you:

- Set up ZooKeeper

- Set up Kafka

- Set up Vert.x (running the program)

Now you need to connect to the Kafka instance inside Docker, so that you can send some messages to it.

Use `misc/kafka.../send2Topic.sh` and `misc/kafka.../listenTopic.sh` in this way (to send to the internal Kafka):

- `./send2Topic.sh userpicsTopic`
- `./send2Topic.sh photosTopic`
- `./listenTopic.sh sagasTopic`

Go on, just write `put:some_photo.jpg` or `del:other_photo.jpg`. But these photos should at least be on your S3.