Nicolás Ayobi1,2, Santiago Rodríguez1,2*, Alejandra Pérez1,2*, Isabela Hernández1,2*, Nicolás Aparicio1,2, Eugénie Dessevres1,2, Sebastián Peña3, Jessica Santander3, Juan Ignacio Caicedo3, Nicolás Fernández4,5, Pablo Arbeláez1,2

*Equal contribution.

1 Center for Research and Formation in Artificial Intelligence (CinfonIA), Bogotá, Colombia.

2 Universidad de los Andes, Bogotá, Colombia.

3 Fundación Santafé de Bogotá, Bogotá, Colombia.

4 Seattle Children’s Hospital, Seattle, USA.

5 University of Washington, Seattle, USA.

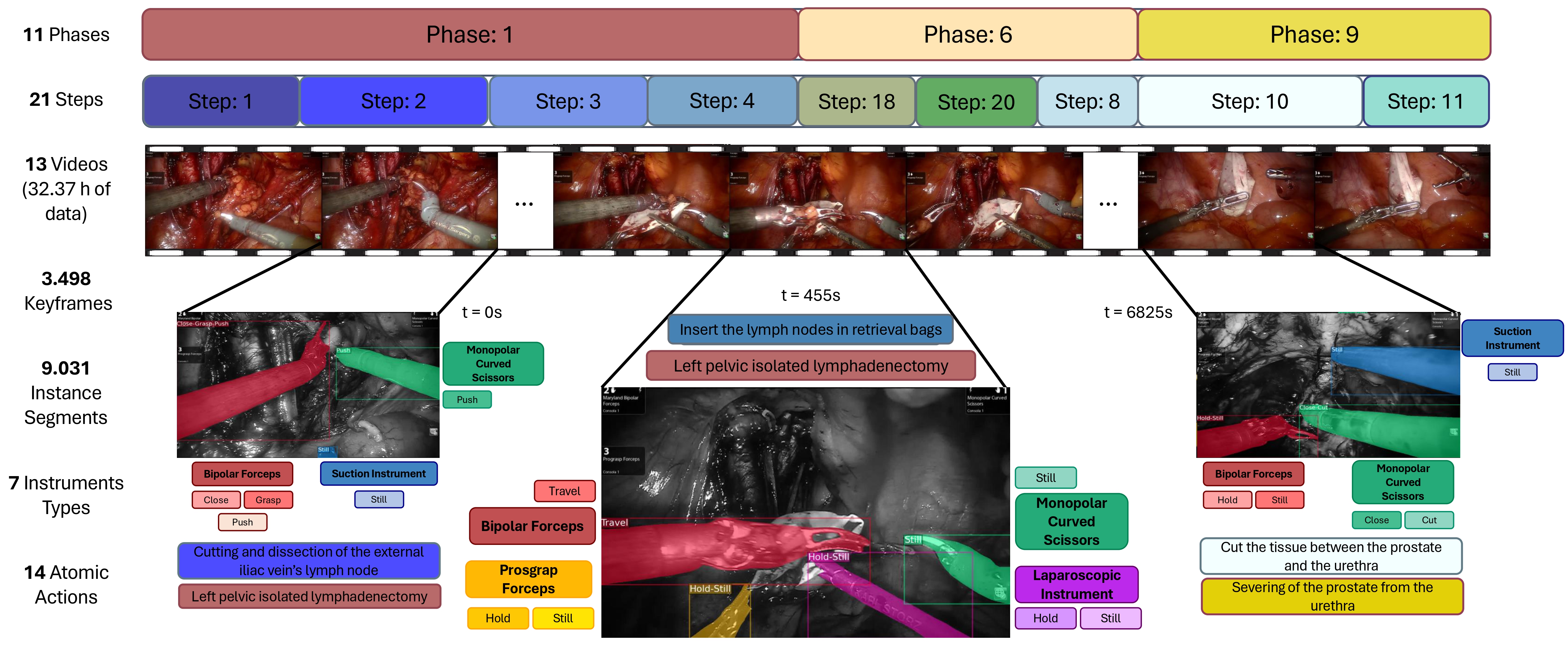

We present the Holistic and Multi-Granular Surgical Scene Understanding of Prostatectomies (GraSP) dataset, a curated benchmark that models surgical scene understanding as a hierarchy of complementary tasks with varying levels of granularity. Our approach enables a multi-level comprehension of surgical activities, encompassing long-term tasks such as surgical phases and steps recognition and short-term tasks including surgical instrument segmentation and atomic visual actions detection. To exploit our proposed benchmark, we introduce the Transformers for Actions, Phases, Steps, and Instrument Segmentation (TAPIS) model, a general architecture that combines a global video feature extractor with localized region proposals from an instrument segmentation model to tackle the multi-granularity of our benchmark. Through extensive experimentation, we demonstrate the impact of including segmentation annotations in short-term recognition tasks, highlight the varying granularity requirements of each task, and establish TAPIS's superiority over previously proposed baselines and conventional CNN-based models. Additionally, we validate the robustness of our method across multiple public benchmarks, confirming the reliability and applicability of our dataset. This work represents a significant step forward in Endoscopic Vision, offering a novel and comprehensive framework for future research towards a holistic understanding of surgical procedures.

This repository provides instructions for downloading the GraSP dataset and running the PyTorch implementation of TAPIS, both presented in the paper Pixel-Wise Recognition for Holistic Surgical Scene Understanding.

This work is an extended and consolidated version of three previous works:

- Towards Holistic Surgical Scene Understanding, MICCAI 2022, Oral. Code here.

- Winner solution of the 2022 SAR-RARP50 challenge

- MATIS: Masked-Attention Transformers for Surgical Instrument Segmentation, ISBI 2023, Oral. Code here.

Please check these works!

In this link, you will find all the files that compose the entire Holistic and Multi-Granular Surgical Scene Understanding of Prostatectomies (GraSP) dataset. These files include the original Radical Prostatectomy videos, our sampled preprocessed and raw frames, and the gathered annotations for all four semantic tasks. The data in the link has the following organization:

GraSP:

|

|__GraSP_30fps.tar.gz

|__GraSP_1fps.tar.gz

|__raw_frames_30fps.tar.gz

|__raw_frames_1fps.tar.gz

|__videos.tar.gz

|__1fps_to_30fps_association.json

|__README.txt

These files contain the following aspects and versions of our dataset:

- GraSP_30fps.tar.gz: A compressed archive with all the preprocessed frames sampled at 30 fps and all annotations for all tasks in our benchmark. This is the complete dataset used for model training and evaluation.

- GraSP_1fps.tar.gz: A compressed archive with a lighter version of the dataset, containing preprocessed frames sampled at 1 fps and the annotations for these frames.

- raw_frames_30fps.tar.gz: A compressed archive with all original frames sampled at 30 fps before frame preprocessing.

- raw_frames_1fps.tar.gz: A compressed archive with original frames sampled at 1 fps before frame preprocessing.

- videos.tar.gz: A compressed archive with our dataset's original raw Radical Prostatectomy videos.

- 1fps_to_30fps_association.json: Contains the name association between frames sampled at 1 fps and frames sampled at 30 fps.

- README.txt: Information file with a summary of the files' contents.

You can download all files recursively by running the following command in a Linux terminal:

$ wget -r http://157.253.243.19/GraSP

However, we recommend downloading just the files you require by running:

$ wget http://157.253.243.19/GraSP/<file_name>

For example, you can download only the video files by running:

$ wget http://157.253.243.19/GraSP/videos.tar.gz

Note: All compressed archives contain a README file with further details and instructions on the data's structure and format.

In case you cannot download the files from our servers, you can download the dataset from this Google Drive link.
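To download several files selectively, the per-file command above can be generated from a list of names. The following is a minimal sketch, not part of the official tooling: the helper names `grasp_urls` and `wget_commands` are hypothetical, and the only facts taken from this README are the base URL and the file names.

```python
from urllib.parse import quote

# Base URL and file names as listed in this README.
GRASP_BASE_URL = "http://157.253.243.19/GraSP"
GRASP_FILES = (
    "GraSP_30fps.tar.gz",
    "GraSP_1fps.tar.gz",
    "raw_frames_30fps.tar.gz",
    "raw_frames_1fps.tar.gz",
    "videos.tar.gz",
    "1fps_to_30fps_association.json",
    "README.txt",
)


def grasp_urls(file_names):
    """Return one download URL per requested file (hypothetical helper)."""
    unknown = [name for name in file_names if name not in GRASP_FILES]
    if unknown:
        raise ValueError(f"Not part of the GraSP file listing: {unknown}")
    return [f"{GRASP_BASE_URL}/{quote(name)}" for name in file_names]


def wget_commands(file_names):
    """Return the wget command line for each requested file."""
    return [f"wget {url}" for url in grasp_urls(file_names)]


if __name__ == "__main__":
    # Print the commands for the videos and the top-level README only.
    for command in wget_commands(["videos.tar.gz", "README.txt"]):
        print(command)
```

The sketch only builds the commands and does not touch the network, so the output can be reviewed before anything is downloaded.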

GraSP_30fps.tar.gz is the only archive required to run our code. To download and decompress it, run the following commands in a Linux terminal:

$ wget http://157.253.243.19/GraSP/GraSP_30fps.tar.gz

$ tar -xzvf GraSP_30fps.tar.gz

After decompressing the archive, the GraSP_30fps directory must have the following structure:

GraSP_30fps

|

|___frames

| |

| |___CASE001

| | |__000000000.jpg

| | |__000000001.jpg

| | |__000000002.jpg

| | ...

| |___CASE002

| | ...

| ...

| |

| |___README.txt

|

|___annotations

|__segmentations

| |

| |__CASE001

| | |__000000068.png

| | |__000001642.png

| | |__000003218.png

| | ...

| ...

| |__CASE053

| |__000000015.png

| |__000001065.png

| |__000002115.png

| ...

|

|__grasp_long-term_fold1.json

|__grasp_long-term_fold2.json

|__grasp_long-term_train.json

|__grasp_long-term_test.json

|__grasp_short-term_fold1.json

|__grasp_short-term_fold2.json

|__grasp_short-term_train.json

|__grasp_short-term_test.json

|__README.txt
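The layout above can be checked programmatically after extraction. The following is a minimal sketch with hypothetical helper names (`missing_entries`, `frame_for_mask`). It checks only the directories and annotation files shown in the tree. It also assumes that a segmentation mask and its frame share the same case folder and frame number, which the tree suggests but this README does not state.

```python
from pathlib import Path

# Directories and annotation files taken from the tree in this README.
EXPECTED_DIRS = ("frames", "annotations", "annotations/segmentations")
ANNOTATION_FILES = tuple(
    f"grasp_{task}_{split}.json"
    for task in ("long-term", "short-term")
    for split in ("fold1", "fold2", "train", "test")
)


def missing_entries(root):
    """Return the expected directories and files that are absent under root."""
    root = Path(root)
    missing = [d for d in EXPECTED_DIRS if not (root / d).is_dir()]
    missing += [
        f"annotations/{name}"
        for name in ANNOTATION_FILES
        if not (root / "annotations" / name).is_file()
    ]
    return missing


def frame_for_mask(root, mask_path):
    """Map a mask path to its frame path.

    Assumption: masks and frames share the case folder and frame number,
    e.g. segmentations/CASE001/000000068.png -> frames/CASE001/000000068.jpg.
    """
    mask_path = Path(mask_path)
    return Path(root) / "frames" / mask_path.parent.name / f"{mask_path.stem}.jpg"


if __name__ == "__main__":
    # An empty list means every expected directory and file was found.
    print(missing_entries("GraSP_30fps"))
```

An empty result from `missing_entries` indicates that the extracted directory matches the expected structure. The README files inside the archive remain the authoritative description of the data format.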

Go to the TAPIS directory to find our source code and instructions for running the TAPIS model.

If you have any doubts, questions, issues, corrections, or comments, please email n.ayobi@uniandes.edu.co.

If you find GraSP or TAPIS (or their previous versions, PSI-AVA, TAPIR, and MATIS) useful for your research, please include the following BibTeX citations in your papers.

@article{ayobi2024pixelwise,

title={Pixel-Wise Recognition for Holistic Surgical Scene Understanding},

author={Nicol{\'a}s Ayobi and Santiago Rodr{\'i}guez and Alejandra P{\'e}rez and Isabela Hern{\'a}ndez and Nicol{\'a}s Aparicio and Eug{\'e}nie Dessevres and Sebasti{\'a}n Pe{\~n}a and Jessica Santander and Juan Ignacio Caicedo and Nicol{\'a}s Fern{\'a}ndez and Pablo Arbel{\'a}ez},

year={2024},

url={https://arxiv.org/abs/2401.11174},

eprint={2401.11174},

journal={arXiv},

primaryClass={cs.CV}

}

@InProceedings{ayobi2023matis,

author={Nicol{\'a}s Ayobi and Alejandra P{\'e}rez-Rond{\'o}n and Santiago Rodr{\'i}guez and Pablo Arbel{\'a}ez},

booktitle={2023 IEEE 20th International Symposium on Biomedical Imaging (ISBI)},

title={MATIS: Masked-Attention Transformers for Surgical Instrument Segmentation},

year={2023},

pages={1-5},

doi={10.1109/ISBI53787.2023.10230819}

}

@InProceedings{valderrama2020tapir,

author={Natalia Valderrama and Paola Ruiz and Isabela Hern{\'a}ndez and Nicol{\'a}s Ayobi and Mathilde Verlyck and Jessica Santander and Juan Caicedo and Nicol{\'a}s Fern{\'a}ndez and Pablo Arbel{\'a}ez},

title={Towards Holistic Surgical Scene Understanding},

booktitle={Medical Image Computing and Computer Assisted Intervention -- MICCAI 2022},

year={2022},

publisher={Springer Nature Switzerland},

address={Cham},

pages={442--452},

isbn={978-3-031-16449-1}

}