Wenxuan Ma, Shuang Li, Jinming Zhang, Chi Harold Liu, Jingxuan Kang, Yulin Wang, and Gao Huang

Official implementation of our ICCV 2023 paper (BorLan).

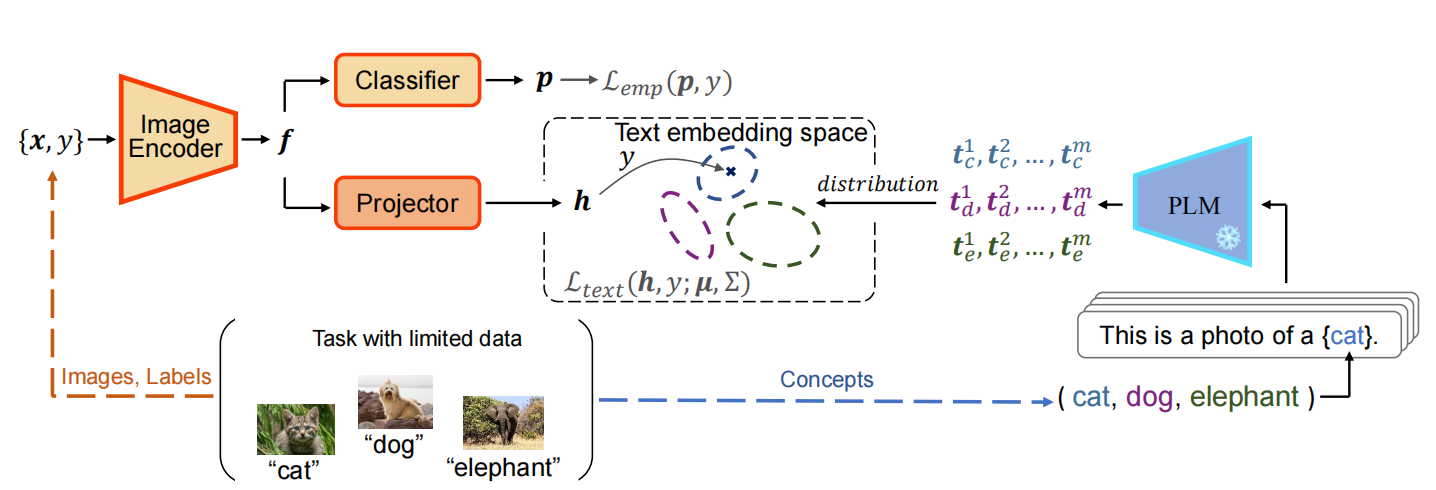

BorLan is a simple data-efficient learning paradigm that includes three parts:

- Text embeddings of the task concepts, obtained from a pre-trained language model (PLM). This step only needs to be run once per dataset, before visual training.

- A main task loss (i.e., cross-entropy).

- A distribution alignment loss that leverages the text embedding space to promote data-efficient visual training.
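To make the three parts concrete, here is a minimal, dependency-free sketch of how a main task loss and a text-guided term could be combined for a single sample. It is an illustration under stated assumptions, not the loss implemented in this repository: the alignment term shown is a simple stand-in (cross-entropy over cosine similarities between a visual feature and each class's text embedding), the visual feature is assumed to already be projected to the text embedding dimension, and the names `alignment_loss`, `total_loss`, `temperature`, and `weight` are hypothetical. Refer to the paper and the training code for the actual objective.

```python
import math


def softmax(scores):
    # Numerically stable softmax over a list of floats.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, label):
    # Main task loss for one sample: negative log-probability of the true class.
    return -math.log(softmax(logits)[label])


def cosine(u, v):
    # Cosine similarity between two vectors of equal length.
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def alignment_loss(feature, text_embeddings, label, temperature=0.1):
    # Illustrative stand-in (an assumption, not the paper's formula):
    # treat each class's text embedding as a prototype and pull the
    # visual feature toward the prototype of its own class.
    sims = [cosine(feature, t) / temperature for t in text_embeddings]
    return cross_entropy(sims, label)


def total_loss(logits, feature, text_embeddings, label, weight=1.0):
    # Main task loss plus the weighted text-guided term.
    return cross_entropy(logits, label) + weight * alignment_loss(
        feature, text_embeddings, label
    )


# Toy example: two classes with 2-D text embeddings (made-up numbers).
text_embeddings = [[1.0, 0.0], [0.0, 1.0]]
feature = [0.9, 0.1]  # visual feature, assumed already projected
logits = [2.0, 0.5]   # classifier outputs for the same sample
loss = total_loss(logits, feature, text_embeddings, label=0)
```

In this toy setup the feature lies close to the first class's text embedding, so the alignment term is small for `label=0` and large for `label=1`.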

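Run one of the commands below to obtain text embeddings. Before running, set the following variables in the corresponding script:

- classnames (List): the class names of your dataset

- save_name (str): the name used when saving the embeddings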
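As a rough illustration of the two variables, the snippet below prepares a class-name list and a save name. Everything in it is hypothetical: the `clean_classnames` helper, the example class names, and the `save_name` value are placeholders, and how `save_name` is turned into an output file depends on the script, so check the script before relying on it.

```python
def clean_classnames(raw_names):
    # Replace underscores with spaces so the language model sees natural words.
    return [name.replace("_", " ").strip() for name in raw_names]


# Hypothetical values; replace them with your dataset's classes.
classnames = clean_classnames(["Golden_Retriever", "tabby_cat", "airliner"])

# Hypothetical name; the script decides where and how the file is written.
save_name = "my_dataset"
```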

# Bert-Large

python text_features/text_embedding.py

# GPT-2

python text_features/text_embedding_gpt.py

# CLIP ViT-Large

python text_features/text_embedding_clip.py

Run the following command for Semi-Supervised Learning tasks:

sh run.sh

This repository borrows code from the following repos. Many thanks to the authors for their great work.

Self-Tuning: https://github.com/thuml/Self-Tuning

CoOp: https://github.com/KaiyangZhou/CoOp

If you find this project useful, please consider citing:

@inproceedings{ma2023borrowing,

title={Borrowing Knowledge From Pre-trained Language Model: A New Data-efficient Visual Learning Paradigm},

author={Ma, Wenxuan and Li, Shuang and Zhang, Jinming and Liu, Chi Harold and Kang, Jingxuan and Wang, Yulin and Huang, Gao},

booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},

year={2023}

}

If you have any questions about our code, feel free to contact us or describe your problem in Issues.

Email address: wenxuanma@bit.edu.cn.