Dirichlet-based Uncertainty Calibration for Active Domain Adaptation [ICLR 2023 Spotlight]

by Mixue Xie, Shuang Li, Rui Zhang and Chi Harold Liu

- Overview

- Prerequisites Installation

- Datasets Preparation

- Code Running

- Acknowledgments

- Citation

- Contact

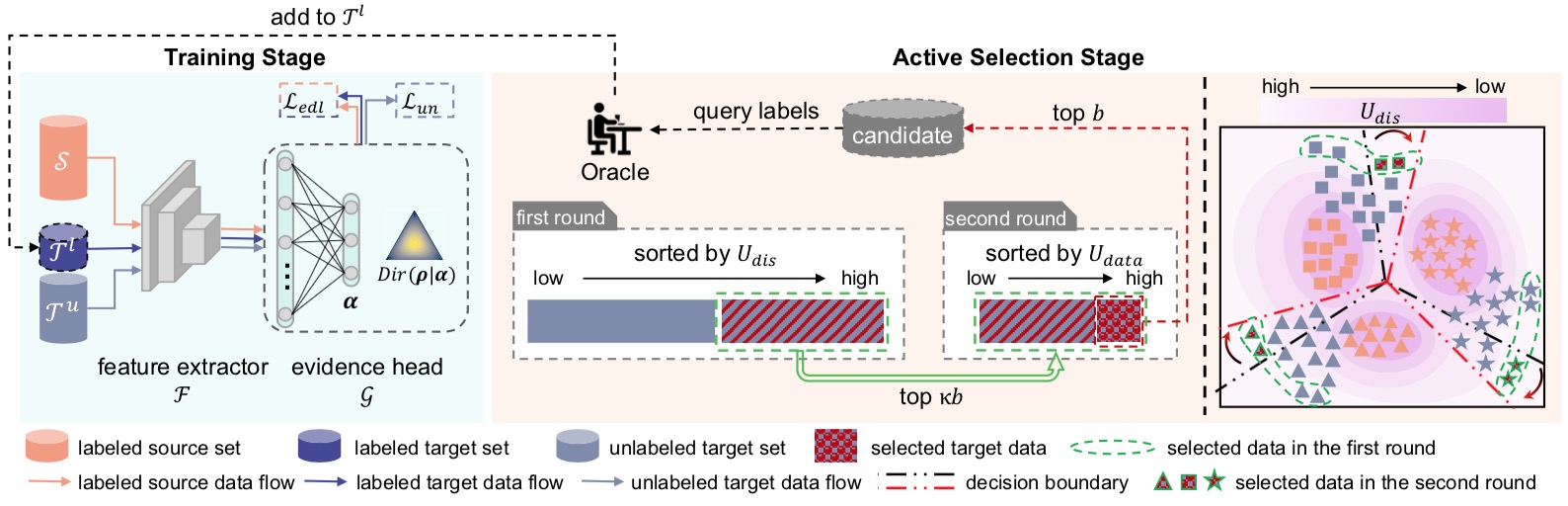

We propose a Dirichlet-based Uncertainty Calibration (DUC) approach for active domain adaptation (DA). It provides a novel perspective for active DA by introducing the Dirichlet-based evidential model and designing an uncertainty origin-aware selection strategy to comprehensively evaluate the value of samples.
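To make the two uncertainty origins more concrete, here is a minimal, self-contained sketch of the standard Dirichlet quantities used in evidential deep learning. It is not the repository's implementation. It assumes evidence `e_k = exp(z_k)` from the classifier logits and concentration `alpha_k = e_k + 1`, then splits the total predictive entropy into data uncertainty (the expected entropy under Dir(alpha)) and distribution uncertainty (the mutual information between the label and the class probabilities). The two-round selection at the end is also an assumption: rank by distribution uncertainty, keep the top `kappa * budget` samples, then query the `budget` samples with the highest data uncertainty. The names `digamma`, `dirichlet_uncertainties`, `two_round_select` and `kappa` are hypothetical; please consult the paper and code for the exact scoring.

```python
import math
from typing import List, Tuple


def digamma(x: float) -> float:
    """Digamma function psi(x) for x > 0 (recurrence + asymptotic series)."""
    result = 0.0
    # psi(x) = psi(x + 1) - 1/x: shift x up until the asymptotic series is accurate.
    while x < 10.0:
        result -= 1.0 / x
        x += 1.0
    inv2 = 1.0 / (x * x)
    # psi(x) ~ ln x - 1/(2x) - 1/(12x^2) + 1/(120x^4) - 1/(252x^6)
    result += math.log(x) - 0.5 / x - inv2 * (1.0 / 12 - inv2 * (1.0 / 120 - inv2 / 252))
    return result


def dirichlet_uncertainties(logits: List[float]) -> Tuple[List[float], float, float]:
    """Return (expected class probabilities, distribution uncertainty, data uncertainty)."""
    # Assumption: evidence e_k = exp(z_k) and alpha_k = e_k + 1.
    # exp() overflows for very large logits, so clamp them in practice.
    alpha = [math.exp(z) + 1.0 for z in logits]
    alpha0 = sum(alpha)
    probs = [a / alpha0 for a in alpha]
    # Total uncertainty: entropy of the expected categorical distribution.
    total = -sum(p * math.log(p) for p in probs)
    # Data uncertainty: expected entropy of p ~ Dir(alpha).
    data = -sum(p * (digamma(a + 1.0) - digamma(alpha0 + 1.0)) for p, a in zip(probs, alpha))
    # Distribution uncertainty: mutual information between the label and p.
    distribution = total - data
    return probs, distribution, data


def two_round_select(scores: List[Tuple[float, float]], budget: int, kappa: int) -> List[int]:
    """scores[i] = (distribution_uncertainty, data_uncertainty) of unlabeled target sample i."""
    # Round 1: keep the kappa * budget samples with the highest distribution uncertainty.
    ranked = sorted(range(len(scores)), key=lambda i: scores[i][0], reverse=True)
    candidates = ranked[: kappa * budget]
    # Round 2: query the budget candidates with the highest data uncertainty.
    return sorted(candidates, key=lambda i: scores[i][1], reverse=True)[:budget]
```

For example, with three classes and all-zero logits the sketch returns uniform probabilities, a data uncertainty of psi(7) - psi(3) = 0.95, and a distribution uncertainty of ln 3 - 0.95 ≈ 0.149. Raising all logits to 5 leaves the prediction uniform but pushes the distribution uncertainty towards zero, because the Dirichlet becomes more concentrated.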

Prerequisites Installation

- For cross-domain image classification tasks, this code is implemented with Python 3.7.5 and CUDA 11.4 on an NVIDIA GeForce RTX 2080 Ti. To try out this project, we recommend setting up a virtual environment first.

```shell
# Step-by-step installation
conda create --name DUC_cls python=3.7.5
conda activate DUC_cls

# this installs the right pip and dependencies for the fresh python
conda install -y ipython pip

# this installs required packages
pip install -r requirements_cls.txt
```

- For cross-domain semantic segmentation tasks, this code is implemented with Python 3.7.5 and CUDA 11.2 on an NVIDIA GeForce RTX 3090. To try out this project, we recommend setting up a virtual environment first.

```shell
# Step-by-step installation
conda create --name DUC_seg python=3.7.5
conda activate DUC_seg

# this installs the right pip and dependencies for the fresh python
conda install -y ipython pip

# this installs required packages
python3 -m pip install torch==1.7.0+cu110 torchvision==0.8.1+cu110 torchaudio===0.7.0 -f https://download.pytorch.org/whl/torch_stable.html
pip install -r requirements_seg.txt
```

Datasets Preparation

Image Classification Datasets

- Download Office-Home Dataset

- Download VisDA-2017 Dataset

- Download DomainNet dataset and miniDomainNet's split files

Symlink the required datasets by running:

```shell
ln -s /path_to_home_dataset data/home
ln -s /path_to_visda2017_dataset data/visda2017
ln -s /path_to_domainnet_dataset data/domainnet
```

The data folder should be structured as follows:

```
data/
├── home/
│   ├── Art/
│   ├── Clipart/
│   ├── Product/
│   └── RealWorld/
├── visda2017/
│   ├── train/
│   └── validation/
└── domainnet/
    ├── clipart/
    ├── infograph/
    ├── painting/
    ├── quickdraw/
    ├── real/
    └── sketch/
```
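After creating the symlinks, a quick check that every expected domain folder actually resolves can save a failed run later. The snippet below is a minimal sketch, not part of the repository: `missing_dirs` and `EXPECTED_CLS_LAYOUT` are hypothetical names, the folder names are taken from the layout listed above, and the miniDomainNet split files are not checked.

```python
import os
from typing import Dict, List

# Expected classification layout (folder names from the README tree above).
EXPECTED_CLS_LAYOUT: Dict[str, List[str]] = {
    "home": ["Art", "Clipart", "Product", "RealWorld"],
    "visda2017": ["train", "validation"],
    "domainnet": ["clipart", "infograph", "painting", "quickdraw", "real", "sketch"],
}


def missing_dirs(root: str, layout: Dict[str, List[str]]) -> List[str]:
    """Hypothetical helper: return expected sub-directories that do not resolve under root."""
    missing = []
    for dataset, domains in layout.items():
        for domain in domains:
            path = os.path.join(root, dataset, domain)
            # os.path.isdir follows symlinks, so a dangling link counts as missing.
            if not os.path.isdir(path):
                missing.append(path)
    return missing


if __name__ == "__main__":
    problems = missing_dirs("data", EXPECTED_CLS_LAYOUT)
    print("OK" if not problems else "Missing:\n" + "\n".join(problems))
```

Because `os.path.isdir` follows symlinks, this catches both a forgotten `ln -s` and a link that points at a wrong path.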

Semantic Segmentation Datasets

- Download Cityscapes Dataset

- Download GTAV Dataset

- Download SYNTHIA Dataset

Symlink the required datasets by running:

```shell
ln -s /path_to_cityscapes_dataset datasets/cityscapes
ln -s /path_to_gtav_dataset datasets/gtav
ln -s /path_to_synthia_dataset datasets/synthia
```

Generate the label info files (`gtav_label_info.p` and `synthia_label_info.p`) for the GTAV and SYNTHIA datasets by running:

```shell
python datasets/generate_gtav_label_info.py -d datasets/gtav -o datasets/gtav/
python datasets/generate_synthia_label_info.py -d datasets/synthia -o datasets/synthia/
```

The data folder should be structured as follows:

```
datasets/
├── cityscapes/
│   ├── gtFine/
│   └── leftImg8bit/
├── gtav/
│   ├── images/
│   ├── labels/
│   └── gtav_label_info.p
└── synthia/
    ├── RAND_CITYSCAPES/
    └── synthia_label_info.p
```
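Before launching the segmentation scripts, you may want to confirm that the symlinks resolve and that the label info files were generated. The following is a hypothetical helper, not part of the repository: `check_seg_layout`, `REQUIRED_DIRS` and `LABEL_INFO_FILES` are illustrative names, the paths come from the layout listed above, and it assumes you run it from the directory that contains `datasets/`. It also assumes the `.p` files are ordinary pickles and only checks that they load, not what they contain.

```python
import os
import pickle
from typing import List

# Paths taken from the segmentation layout above (relative to the directory holding datasets/).
REQUIRED_DIRS = [
    "datasets/cityscapes/gtFine",
    "datasets/cityscapes/leftImg8bit",
    "datasets/gtav/images",
    "datasets/gtav/labels",
    "datasets/synthia/RAND_CITYSCAPES",
]
LABEL_INFO_FILES = [
    "datasets/gtav/gtav_label_info.p",
    "datasets/synthia/synthia_label_info.p",
]


def check_seg_layout(root: str = ".") -> List[str]:
    """Hypothetical helper: list problems with the segmentation data layout."""
    problems = []
    for rel in REQUIRED_DIRS:
        if not os.path.isdir(os.path.join(root, rel)):
            problems.append(f"missing directory: {rel}")
    for rel in LABEL_INFO_FILES:
        path = os.path.join(root, rel)
        if not os.path.isfile(path):
            problems.append(f"missing label info file: {rel}")
            continue
        try:
            # Assumption: the .p files are standard pickles; only unpickle files you generated yourself.
            with open(path, "rb") as f:
                pickle.load(f)
        except Exception as exc:
            problems.append(f"unreadable label info file: {rel} ({exc})")
    return problems


if __name__ == "__main__":
    issues = check_seg_layout()
    print("OK" if not issues else "\n".join(issues))
```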

Code Running

- For cross-domain image classification:

```shell
# running for cross-domain image classification
sh script_cls.sh
```

- For cross-domain semantic segmentation:

```shell
# go to the directory of semantic segmentation tasks
cd ./segmentation
# running for GTAV to Cityscapes
sh script_seg_gtav.sh
# running for SYNTHIA to Cityscapes
sh script_seg_syn.sh
```

Acknowledgments

This project is based on the following open-source projects. We thank their authors for making the source code publicly available.

Citation

If you find this work helpful for your research, please consider citing the paper:

```bibtex
@inproceedings{xie2023DUC,
  title={Dirichlet-based Uncertainty Calibration for Active Domain Adaptation},
  author={Xie, Mixue and Li, Shuang and Zhang, Rui and Liu, Chi Harold},
  booktitle={International Conference on Learning Representations (ICLR)},
  year={2023}
}
```

Contact

If you have any problems with our code, feel free to contact mxxie@bit.edu.cn or describe your problem in Issues.