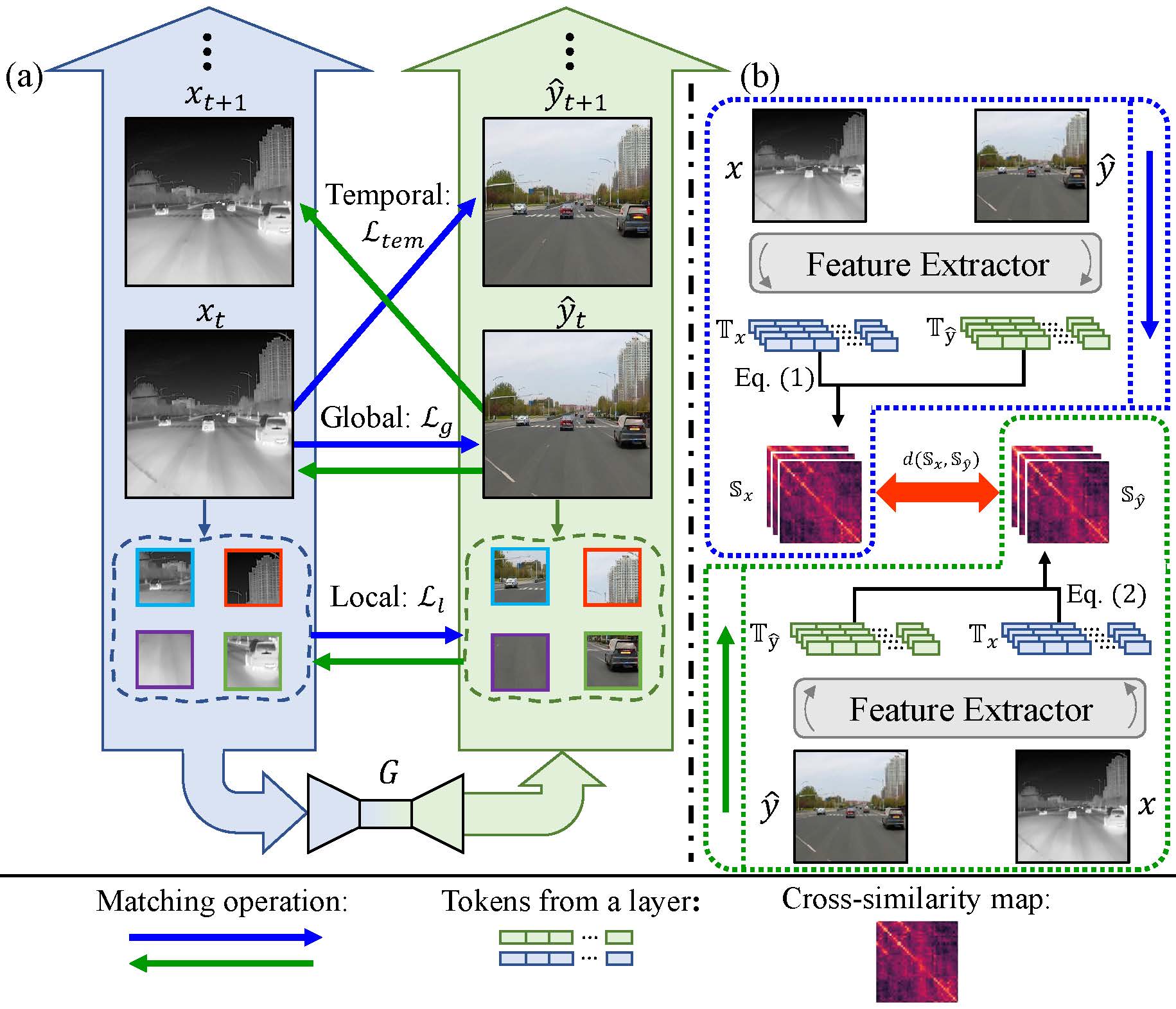

This repository is the official PyTorch implementation of the ACM MM'22 paper "ROMA: Cross-Domain Region Similarity Matching for Unpaired Nighttime Infrared to Daytime Visible Video Translation". [arXiv]

Examples of Object Detection:

Examples of Video Fusion:

More experimental results can be obtained by contacting us.

- The domain gap between unpaired nighttime infrared and daytime visible videos is even larger than the gap between paired videos captured at the same time, so establishing an effective translation mapping between them would benefit many fields.

- Our proposed cross-similarity, which is calculated across domains, helps the generative process focus on learning the structural correspondence between real and synthesized frames, free from the negative effects of their different styles.
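To make the idea of a similarity computed *across* domains concrete, here is a minimal sketch. It is not ROMA's actual loss (see the paper for that); it assumes that region features are rows sampled at the same spatial locations from the real (infrared) and synthesized (visible) feature maps, builds the cross-domain cosine-similarity matrix between them, and swaps in a simple InfoNCE-style objective that rewards each real region for matching the synthesized region at the same location. The function names, the temperature `tau`, and the use of NumPy instead of PyTorch are all illustrative assumptions.

```python
import numpy as np

def l2_normalize(x, eps=1e-8):
    # Normalize each row (one region feature vector) to unit length.
    return x / (np.linalg.norm(x, axis=1, keepdims=True) + eps)

def cross_similarity(real_feats, fake_feats):
    """Cosine similarity between every real region and every synthesized region.

    Hypothetical helper. real_feats, fake_feats: (N, C) arrays whose row i
    comes from the same spatial location in the real and synthesized maps.
    Returns an (N, N) matrix S with S[i, j] = cos(real_i, fake_j).
    """
    return l2_normalize(real_feats) @ l2_normalize(fake_feats).T

def region_matching_loss(real_feats, fake_feats, tau=0.07):
    # Hypothetical InfoNCE-style loss (not ROMA's formulation): each real
    # region should be most similar to the synthesized region at the same
    # location, i.e. the diagonal of the cross-similarity matrix.
    logits = cross_similarity(real_feats, fake_feats) / tau
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -np.mean(np.diag(log_probs))
```

Because only cosine similarities between regions enter the loss, the raw feature statistics of the two domains (their "style") do not need to match; only which regions correspond to which does.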

The dataset must follow the structure below. In video mode, each input sample is the concatenation of two adjacent frames; in image mode, each input sample is a single image.

Video/Image mode:

- `trainA`: `\Path\of\trainA`
- `trainB`: `\Path\of\trainB`
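The difference between the two modes can be sketched as follows. This README does not say along which axis the two adjacent frames are concatenated or whether consecutive pairs overlap, so the sketch assumes (H, W, C) frames stacked along the channel axis and a sliding window over pairs (t, t + 1); the function names are hypothetical and not part of this repository.

```python
import numpy as np

def make_video_samples(frames):
    """Build video-mode inputs from a temporally ordered list of frames.

    Assumptions (not confirmed by the README): each frame is an (H, W, C)
    array, and every consecutive pair (t, t + 1) is stacked along the
    channel axis, giving one (H, W, 2C) input per pair.
    """
    if len(frames) < 2:
        return []
    return [np.concatenate([frames[t], frames[t + 1]], axis=-1)
            for t in range(len(frames) - 1)]

def make_image_samples(frames):
    # Image mode: every frame is used on its own as one input.
    return list(frames)

# Five dummy 4x4 RGB frames whose pixel values equal their frame index.
frames = [np.full((4, 4, 3), t, dtype=np.uint8) for t in range(5)]
video_samples = make_video_samples(frames)  # 4 samples of shape (4, 4, 6)
image_samples = make_image_samples(frames)  # 5 samples of shape (4, 4, 3)
```

If the real pipeline instead places the two frames side by side, the only change would be concatenating along the width axis (`axis=1`).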

Concrete training and testing examples are given in the scripts `./scripts/train.sh` and `./scripts/test.sh`, respectively.

| InfraredCity | Total Frames |
|---|---|
| Nighttime Infrared | 201,856 |
| Nighttime Visible | 178,698 |
| Daytime Visible | 199,430 |

| InfraredCity-Lite | Weather | Infrared Train | Infrared Test | Visible Train | Total |
|---|---|---|---|---|---|
| City | clearday | 5,538 | 1,000 | 5,360 | 15,180 |
| | overcast | 2,282 | 1,000 | | |
| Highway | clearday | 4,412 | 1,000 | 6,463 | 15,853 |
| | overcast | 2,978 | 1,000 | | |
| Monitor | | 5,612 | 500 | 4,194 | 10,306 |
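The Total column of the InfraredCity-Lite table can be checked with a little arithmetic. The figures add up only if, for City and Highway, the single Visible Train count covers both weather conditions and the Total covers both weather rows. The dictionary layout and `scene_total` helper below are illustrative only; the numbers are copied from the table.

```python
# Counts copied from the InfraredCity-Lite table.
# For City and Highway, infrared counts are listed per weather condition
# (clearday, overcast), while one visible training count covers both.
lite = {
    "City": {"infrared_train": [5538, 2282], "infrared_test": [1000, 1000], "visible_train": 5360},
    "Highway": {"infrared_train": [4412, 2978], "infrared_test": [1000, 1000], "visible_train": 6463},
    "Monitor": {"infrared_train": [5612], "infrared_test": [500], "visible_train": 4194},
}

def scene_total(counts):
    # Total = all infrared train + all infrared test + visible train frames.
    return sum(counts["infrared_train"]) + sum(counts["infrared_test"]) + counts["visible_train"]

for scene, counts in lite.items():
    print(scene, scene_total(counts))
# City 15180
# Highway 15853
# Monitor 10306
```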

The datasets and further details about them are available from InfiRay.

If you find our work useful in your research or publication, please cite our work:

    @inproceedings{ROMA2022,
      title = {ROMA: Cross-Domain Region Similarity Matching for Unpaired Nighttime Infrared to Daytime Visible Video Translation},
      author = {Zhenjie Yu and Kai Chen and Shuang Li and Bingfeng Han and Chi Harold Liu and Shuigen Wang},
      booktitle = {ACM MM},
      pages = {5294--5302},
      year = {2022}
    }

This code borrows heavily from the PyTorch implementations of CycleGAN and pix2pix, and of CUT.

A huge thanks to them!

    @inproceedings{CycleGAN2017,
      title = {Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks},
      author = {Zhu, Jun-Yan and Park, Taesung and Isola, Phillip and Efros, Alexei A},
      booktitle = {ICCV},
      year = {2017}
    }

    @inproceedings{CUT2020,
      author = {Taesung Park and Alexei A. Efros and Richard Zhang and Jun{-}Yan Zhu},
      title = {Contrastive Learning for Unpaired Image-to-Image Translation},
      booktitle = {ECCV},
      pages = {319--345},
      year = {2020}
    }