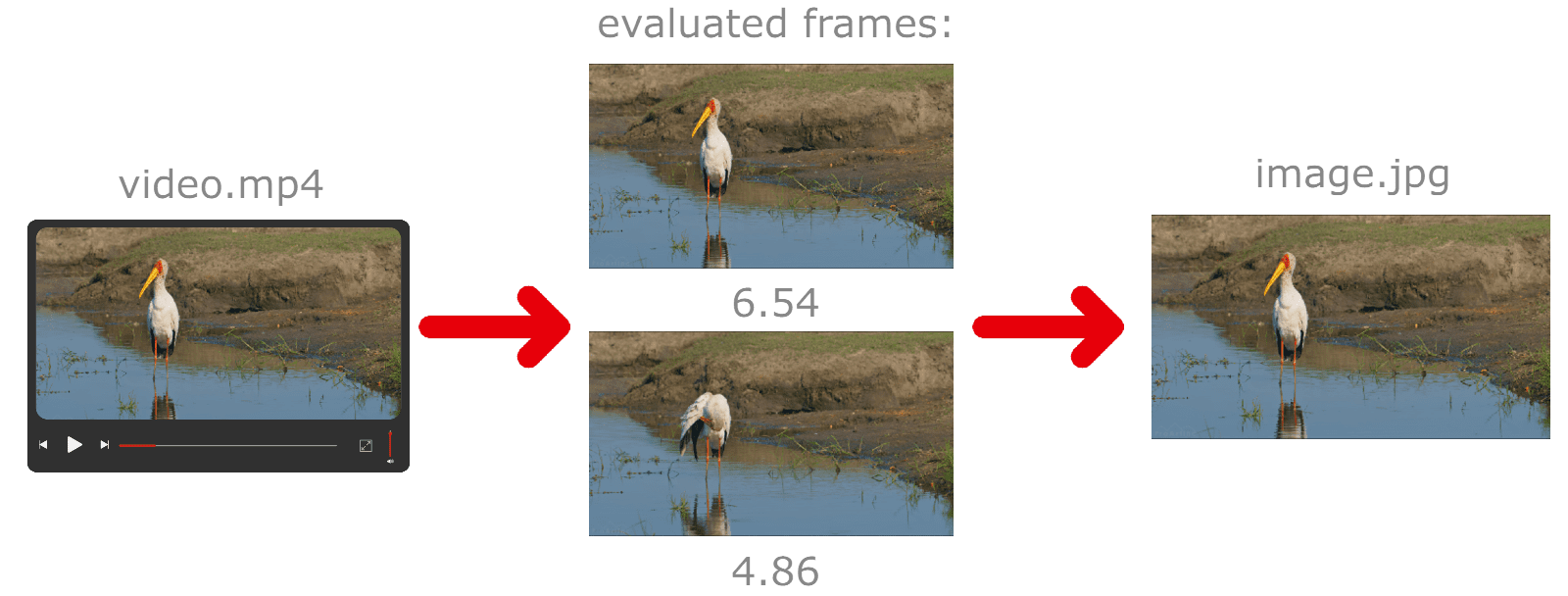

PerfectFrameAI is a tool that uses artificial intelligence to analyze video materials and automatically save the best frames.

Best Frames Extraction 🎞️➜🖼️

Selecting the best frames from video files.

- Takes the first video from the specified location.

- Splits the video into frames. Frames are taken at 1-second intervals. Frames are processed in batches.

- Evaluates all frames in the batch using an AI model and assigns them a numerical score.

- Divides the batch of frames into smaller groups.

- Selects the frame with the highest numerical score from each group.

- Saves the frames with the best scores in the chosen location.
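The grouping and selection steps above can be sketched as follows. This is a minimal illustration, not the project's code. The function name `select_best_per_group` and its `group_size` parameter are hypothetical, because the README does not say how large the groups are. Scores are assumed to be plain floats, one per frame in the batch.

```python
from typing import List, Sequence


def select_best_per_group(scores: Sequence[float], group_size: int) -> List[int]:
    """Return the index of the highest-scoring frame in each consecutive group.

    `group_size` is a hypothetical parameter; the last group may be shorter.
    """
    if group_size < 1:
        raise ValueError("group_size must be at least 1")
    best_indices = []
    for start in range(0, len(scores), group_size):
        group = range(start, min(start + group_size, len(scores)))
        # max() over indices, keyed by score; on ties the first index wins
        best_indices.append(max(group, key=lambda i: scores[i]))
    return best_indices


# A batch of 7 scored frames split into groups of 3
batch_scores = [4.1, 5.3, 4.9, 6.2, 3.8, 5.0, 4.4]
print(select_best_per_group(batch_scores, group_size=3))  # [1, 3, 6]
```

Choosing one winner per group, rather than the global top N, spreads the saved frames across the whole batch instead of clustering them around a single high-scoring scene.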

Input: Folder with video files.

Output: Frames saved as .jpg.

Top Images Extraction 🖼️➜🖼️

Selecting the best images from a folder of images.

- Loads the images. Images are processed in batches.

- Evaluates all images in the batch using an AI model and assigns them a numerical score.

- Calculates the score an image must have to be in the top 90% of images. This value can be changed in schemas.py (top_images_percent).

- Saves the top images in the chosen location.
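A percentile-based threshold is one way to compute that cut-off score. The sketch below takes the README's wording literally: `top_percent=90` keeps the highest-scoring 90% of images, so the threshold is the 10th percentile, computed with NumPy's `np.percentile`. This is an assumption; the project's exact interpretation of top_images_percent is not stated here. The helper names are hypothetical.

```python
import numpy as np


def top_images_threshold(scores, top_percent: float = 90.0) -> float:
    """Score an image must reach to fall within the top `top_percent` percent.

    Assumes `top_percent` means "keep this share of the highest scores",
    so the cut-off is the (100 - top_percent)-th percentile.
    """
    if not 0.0 < top_percent <= 100.0:
        raise ValueError("top_percent must be in (0, 100]")
    return float(np.percentile(np.asarray(scores, dtype=float), 100.0 - top_percent))


def select_top_images(scores, top_percent: float = 90.0):
    """Return indices of images whose score is at or above the threshold."""
    threshold = top_images_threshold(scores, top_percent)
    return [i for i, s in enumerate(scores) if s >= threshold]


print(select_top_images([4.2, 6.1, 5.0, 3.3, 5.8], top_percent=50))  # [1, 2, 4]
```

With `top_percent=100` the threshold falls to the minimum score, so every image is kept.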

Input: Folder with images.

Output: Images saved as .jpg.

🆕 Frames Extraction 🖼️🖼️🖼️

Extract and return frames from a video.

This mode modifies best_frames_extractor by skipping the AI evaluation step.

`python start.py best_frames_extractor --all_frames`

- Takes the first video from the specified location.

- Splits the video into frames. Frames are taken at 1-second intervals. Frames are processed in batches.

- Saves all frames in the chosen location.
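Sampling one frame per second and grouping the samples into batches can be sketched as pure index arithmetic. The helper names and the rounding choice are hypothetical. In practice, the frame rate and frame count would come from the decoder, for example OpenCV's `cv2.CAP_PROP_FPS` and `cv2.CAP_PROP_FRAME_COUNT`. The project may seek or decode frames differently.

```python
from typing import Iterator, List


def frame_indices_at_interval(total_frames: int, fps: float, interval_s: float = 1.0) -> List[int]:
    """Indices of the frames to keep when sampling one frame every `interval_s` seconds."""
    if fps <= 0 or interval_s <= 0:
        raise ValueError("fps and interval_s must be positive")
    indices = []
    k = 0
    while True:
        # Frame closest to the timestamp k * interval_s
        index = round(k * interval_s * fps)
        if index >= total_frames:
            break
        indices.append(index)
        k += 1
    return indices


def in_batches(items: List[int], batch_size: int) -> Iterator[List[int]]:
    """Yield consecutive chunks of at most `batch_size` items."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


# 3 seconds of 29.97 fps video: one frame per second
print(frame_indices_at_interval(90, 29.97))  # [0, 30, 60]
```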

Input: Folder with video files.

Output: Frames saved as .jpg.

Requirements

- Docker

- Python 3.7+ (method 1 only)

- 8GB+ RAM

- 10GB+ free disk space

Lowest tested specs: i5-4300U, 8GB RAM (ThinkPad T440), 4K video, default batch size of 100 images.

If you run out of RAM, you can always decrease the image batch size in schemas.py.
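A rough estimate shows why batch size matters on low-RAM machines. This sketch only counts decoded frames held as uncompressed 8-bit, 3-channel arrays, which is how OpenCV returns decoded video frames. It ignores the model, resized or normalized copies, and framework overhead, so real memory usage is higher. The function name is hypothetical.

```python
def raw_batch_bytes(width: int, height: int, batch_size: int,
                    channels: int = 3, bytes_per_value: int = 1) -> int:
    """Bytes needed to hold `batch_size` decoded frames as uncompressed arrays."""
    return width * height * channels * bytes_per_value * batch_size


# 4K (3840x2160) 8-bit frames, default batch of 100 images
print(f"{raw_batch_bytes(3840, 2160, batch_size=100) / 1e9:.2f} GB")  # 2.49 GB
# Halving the batch size halves this figure
print(f"{raw_batch_bytes(3840, 2160, batch_size=50) / 1e9:.2f} GB")   # 1.24 GB
```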

Install Docker:

Docker Desktop: https://www.docker.com/products/docker-desktop/

Install Python v3.7+:

MS Store: https://apps.microsoft.com/detail/9ncvdn91xzqp?hl=en-US&gl=US

Python.org: https://www.python.org/downloads/

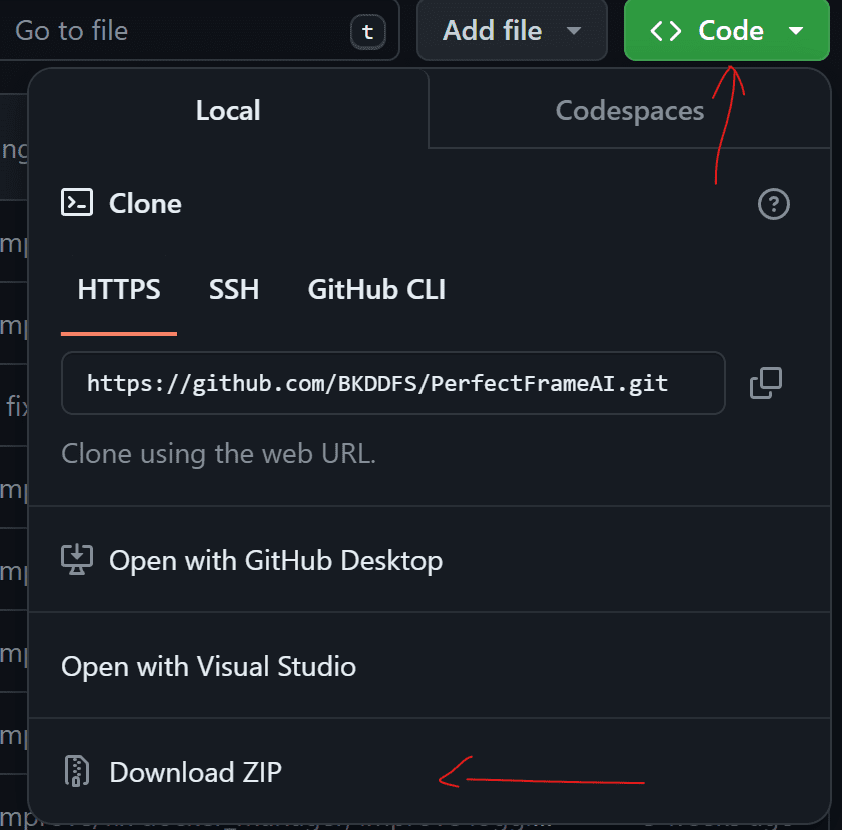

🚀 Method 1 - CLI

Requires Python. Simple and convenient.


Hint for Windows users:

As a Windows user, you can use quick_demo_gpu.bat, or quick_demo_cpu.bat if you don't have an Nvidia GPU.

It will run best_frames_extractor with the default values. Just double-click it. You can modify the default values in config.py to adjust the application to your needs.

Warning!

Please note that when you run the .bat file, Windows Defender may flag it as dangerous. This happens because preventing the warning requires a paid code-signing certificate.

Run start.py from the terminal.

Example (Best Frames Extraction, default values):

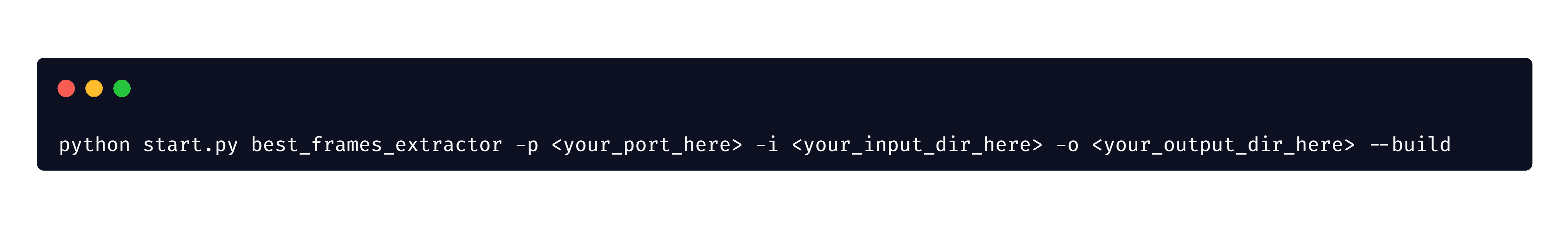

| Flag | Short | Description | Type | Default Value |
|---|---|---|---|---|
| --input_dir | -i | Change input directory | str | ./input_directory |
| --output_dir | -o | Change output directory | str | ./output_directory |
| --port | -p | Change the port the extractor_service will run on | int | 8100 |
| --build | -b | Builds a new Docker image with the newly specified settings. If in doubt, always use this flag. | bool | False |
| --all_frames | | Skips the frame evaluation step. | bool | False |
| --cpu | | Uses only the CPU for processing. If you don't have a GPU, you must use it. | bool | False |
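The flag table maps naturally onto Python's standard argparse module. The parser below is a hypothetical sketch that mirrors the documented flags and defaults. It is not the project's start.py. The positional argument name `extractor_name` is an assumption, based on the `python start.py best_frames_extractor --all_frames` usage shown earlier.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical parser mirroring the flag table; not the project's start.py."""
    parser = argparse.ArgumentParser(prog="start.py")
    parser.add_argument("extractor_name",
                        help="e.g. best_frames_extractor or top_images_extractor")
    parser.add_argument("--input_dir", "-i", type=str, default="./input_directory")
    parser.add_argument("--output_dir", "-o", type=str, default="./output_directory")
    parser.add_argument("--port", "-p", type=int, default=8100)
    parser.add_argument("--build", "-b", action="store_true", help="rebuild the Docker image")
    parser.add_argument("--all_frames", action="store_true", help="skip frame evaluation")
    parser.add_argument("--cpu", action="store_true", help="use only the CPU")
    return parser


args = build_parser().parse_args(["best_frames_extractor", "--all_frames", "-p", "8200"])
print(args.all_frames, args.port, args.input_dir)  # True 8200 ./input_directory
```

Boolean flags use `action="store_true"`, which matches the table's `bool` type with a `False` default.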

Example (Best Frames Extraction):

You can edit other default parameters in config.py.

🐳 Method 2 - docker-compose.yaml:

Does not require Python. Run using Docker Compose.


Docker Compose Docs: https://docs.docker.com/compose/

Remember to delete the GPU section in docker-compose.yaml if you don't have a GPU!

- Run the service: `docker-compose up --build -d`

- Send a request to the chosen endpoint.

Example requests:

- Best Frames Extraction: `POST http://localhost:8100/extractors/best_frames_extractor`

- Top Images Extraction: `POST http://localhost:8100/extractors/top_images_extractor`

- Current working extractor: `GET http://localhost:8100/`
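These requests can also be sent from Python using only the standard library's urllib.request. This is a minimal sketch that assumes the endpoints accept a POST with an empty body. Whether they expect a JSON body with settings is not stated here. The helper names are hypothetical.

```python
import urllib.request


def build_extractor_request(extractor_name: str, host: str = "localhost",
                            port: int = 8100) -> urllib.request.Request:
    """POST request that starts the given extractor (sent with an empty body)."""
    url = f"http://{host}:{port}/extractors/{extractor_name}"
    return urllib.request.Request(url, data=b"", method="POST")


def start_extractor(extractor_name: str, port: int = 8100) -> str:
    """Send the request to a running service and return the response body as text."""
    request = build_extractor_request(extractor_name, port=port)
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.read().decode("utf-8")


# Usage, with the service running:
# print(start_extractor("best_frames_extractor"))
```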

Optionally, you can edit docker-compose.yaml if you don't want to use the default settings.


The tool uses a model built according to the principles of Neural Image Assessment (NIMA) models to determine the aesthetics of images.

Model Input

The model accepts a batch of properly normalized images as a tensor.

The NIMA model, after processing the images, returns probability vectors, where each value in the vector corresponds to the probability that the image belongs to one of the aesthetic classes.

Aesthetic Classes

There are 10 aesthetic classes. In the NIMA model, each of the 10 classes corresponds to a certain level of aesthetics, where:

- Class 1: Very low aesthetic quality.

- Class 2: Low aesthetic quality.

- Class 3: Below average aesthetic quality. ...

- Class 10: Exceptionally high aesthetic quality.

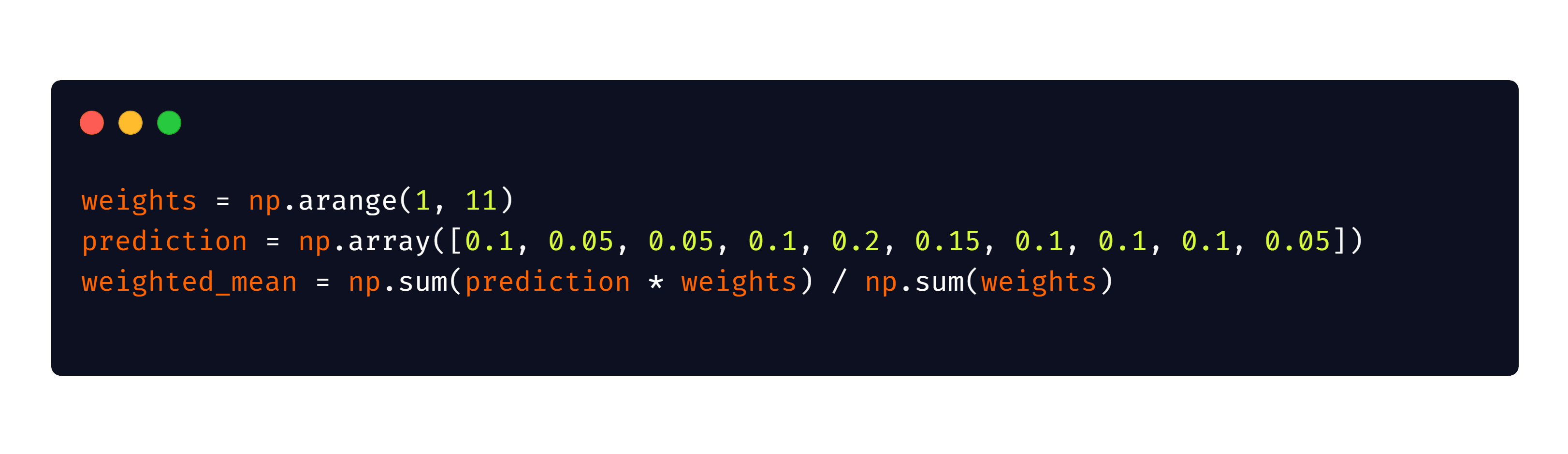

The final image score is the weighted mean of the class values 1 to 10, where each class value is weighted by the probability the model assigns to that class.

Suppose the model returns the following probability vector for one image:

[0.1, 0.05, 0.05, 0.1, 0.2, 0.15, 0.1, 0.1, 0.1, 0.05]

This means that the image has:

- 10% probability of belonging to class 1

- 5% probability of belonging to class 2

- 5% probability of belonging to class 3

- and so on...

Weighting each class value (1 to 10) by its probability and summing gives a score of 5.55 for this image.
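Here is the weighted-mean calculation for the example vector, written out term by term. In it, $p_i$ is the probability of class $i$. The probabilities sum to 1, so the score is the expected class value.

$$
\begin{aligned}
S &= \sum_{i=1}^{10} i \, p_i \\
  &= 1(0.1) + 2(0.05) + 3(0.05) + 4(0.1) + 5(0.2) + 6(0.15) + 7(0.1) + 8(0.1) + 9(0.1) + 10(0.05) \\
  &= 0.1 + 0.1 + 0.15 + 0.4 + 1.0 + 0.9 + 0.7 + 0.8 + 0.9 + 0.5 \\
  &= 5.55
\end{aligned}
$$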

Model Architecture

The NIMA model uses the InceptionResNetV2 architecture as its base. This architecture is known for its high performance in image classification tasks.

Pre-trained Weights

The model uses weights pre-trained on the AVA (Aesthetic Visual Analysis) dataset, a large collection of images rated for their aesthetic quality. The tool automatically downloads the weights and stores them in a Docker volume for further use.

Image Normalization

Before images are fed into the model, they are normalized to ensure they are in the correct format and value range.
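A common normalization for InceptionResNetV2-based models scales pixel values from [0, 255] to [-1, 1]. This is what Keras's `tf.keras.applications.inception_resnet_v2.preprocess_input` does. The NumPy sketch below assumes this scaling and assumes the images are already resized to the model's input size. Both are assumptions about this project's preprocessing, and the function name is hypothetical. Note that OpenCV decodes frames in BGR order, so a conversion to RGB may also be needed.

```python
import numpy as np


def normalize_batch(images) -> np.ndarray:
    """Stack uint8 RGB images (already resized to the model's input size)
    into one float32 batch scaled to [-1, 1].

    The [-1, 1] scaling is Inception-style preprocessing; treat it as an
    assumption about this project's normalization.
    """
    batch = np.stack([np.asarray(img, dtype=np.float32) for img in images])
    return batch / 127.5 - 1.0


black = np.zeros((224, 224, 3), dtype=np.uint8)
white = np.full((224, 224, 3), 255, dtype=np.uint8)
batch = normalize_batch([black, white])
print(batch.shape, batch.min(), batch.max())  # (2, 224, 224, 3) -1.0 1.0
```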

Class Predictions

The model processes the images and returns a vector of 10 probabilities, each representing the likelihood of the image belonging to one of the 10 aesthetic quality classes (from 1 for the lowest quality to 10 for the highest quality).

Weighted Mean Calculation

The final aesthetic score for an image is the mean of the class values (1 to 10) weighted by these probabilities, so probability mass on higher classes raises the score.
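For a whole batch, the weighted mean reduces to a single matrix-vector product between the (N, 10) probability array and the class values 1 to 10. This sketch assumes each row is a softmax output that already sums to 1, as in NIMA. The function name is hypothetical.

```python
import numpy as np


def aesthetic_scores(probabilities) -> np.ndarray:
    """Mean aesthetic score for each row of an (N, 10) probability batch."""
    probs = np.asarray(probabilities, dtype=np.float64)
    if probs.ndim != 2 or probs.shape[1] != 10:
        raise ValueError("expected an array of shape (N, 10)")
    class_values = np.arange(1, 11)  # classes 1..10 act as the weights
    return probs @ class_values


example = [[0.1, 0.05, 0.05, 0.1, 0.2, 0.15, 0.1, 0.1, 0.1, 0.05]]
print(aesthetic_scores(example))  # score approximately 5.55
```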

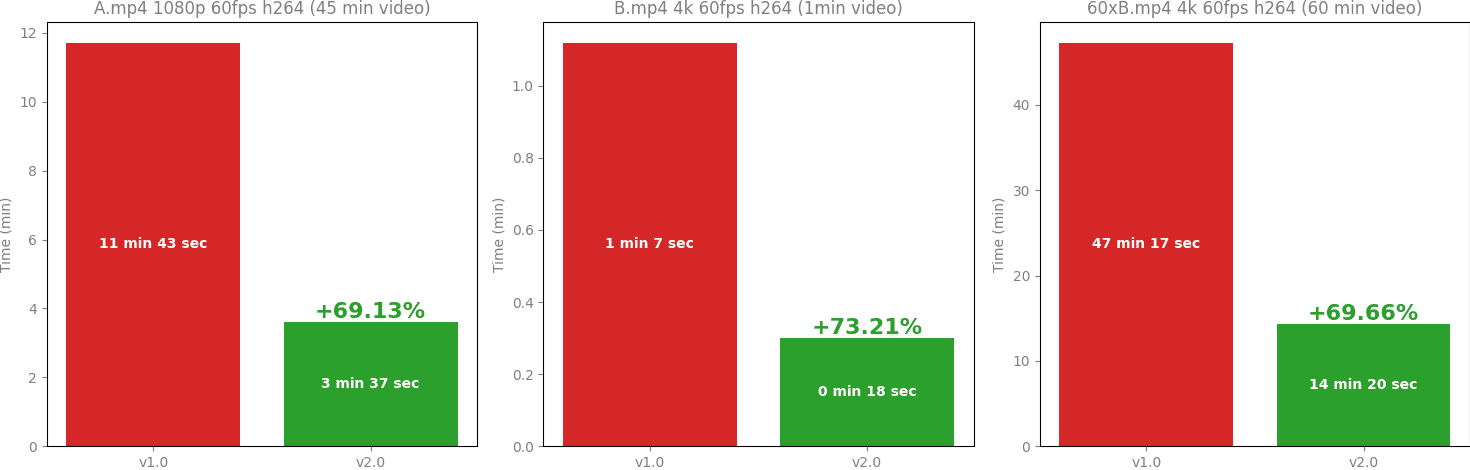

PerfectFrameAI is a tool created based on one of the microservices of my main project.

I refer to that version as v1.0.

| Feature | v1.0 | v2.0 |
|---|---|---|
| CLI | ❌ | ✅ |
| Automatic Installation | ❌ | ✅ |
| Fast and Easy Setup | ❌ | ✅ |
| RAM usage optimization | ❌ | ✅ |
| Performance | +0% | +70% |
| Size* | 12.6 GB | 8.4 GB |
| Open Source | ❌ | ✅ |

*Size compares v1.0 (all dependencies plus the model) with v2.0 (Docker image size plus model size).

- RTX3070ti (8GB)

- i5-13600k

- 32GB RAM

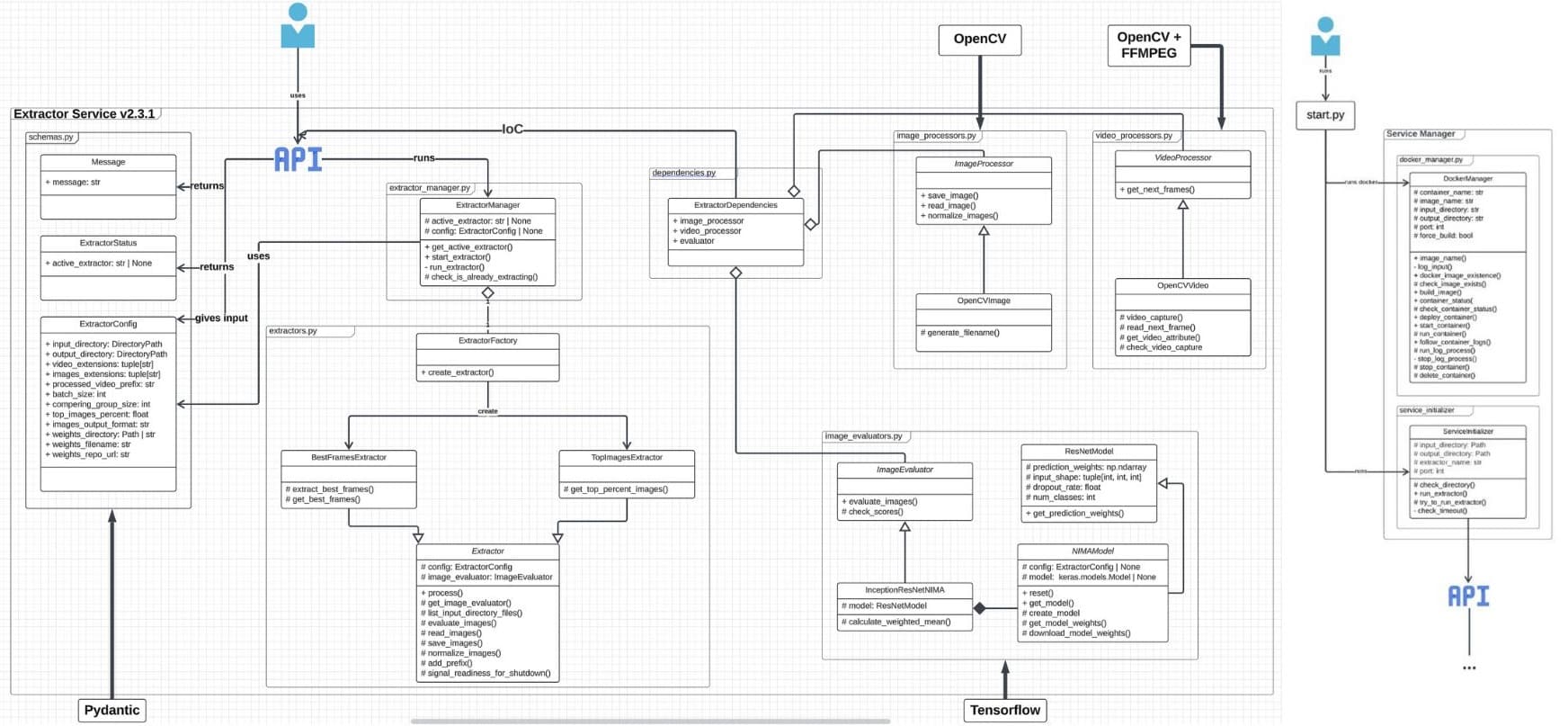

- Python - the main language in which the project is written. The external part of PerfectFrameAI uses only standard Python libraries for ease of installation and configuration.

- FastAPI - the framework on which the main part of PerfectFrameAI is built (in v1.0 Flask).

- OpenCV - for image manipulation.

- numpy - for operations on multidimensional arrays.

- FFMPEG - as an extension to OpenCV, for decoding video frames.

- CUDA - to enable operations on graphics cards.

- TensorFlow - the machine learning library used (in v1.0 PyTorch).

- Docker - for easier building of a complex working environment for PerfectFrameAI.

- pytest - the framework in which the tests are written.

- docker-py - used only for testing Docker integration with the included PerfectFrameAI manager.

- Poetry - for managing project dependencies.

All dependencies are listed in pyproject.toml.

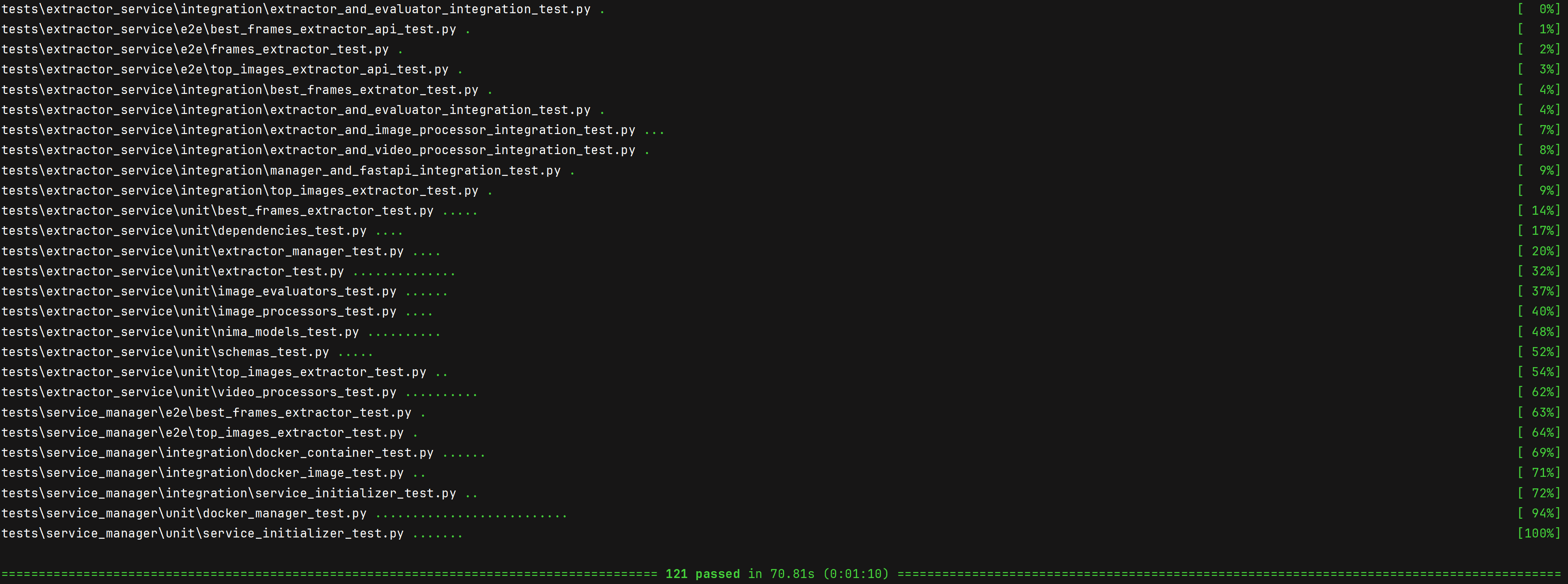

You can run the tests by installing the dependencies from pyproject.toml and running `pytest` in the terminal from the project directory.

unit

Each module has its own unit tests. They test each of the methods and functions available in the modules. Test coverage is 100% (the tests fully cover the business logic).

integration

- Testing Docker integration with docker_manager.

- Testing integration with the parser.

- Testing integration of business logic with the NIMA model.

- Testing integration with FastAPI.

- Testing integration with OpenCV.

- Testing integration with FFMPEG.

- Testing various module integrations...

e2e

- Testing extractor_service as a whole.

- Testing extractor_service + service_initializer as a whole.

Below is a list of features that we are planning to implement in the upcoming releases. We welcome contributions and suggestions from the community.

- Implementation of Nvidia DALI.

  - It will enable moving frame decoding (currently the longest part) to the GPU.

  - Additionally, it will allow operating directly on Tensor objects without additional conversions.

- Fixing data spilling during frame evaluation. Currently, a spilling issue slightly slows down evaluation.

If you're interested in contributing to this project, please take a moment to read our Contribution Guide. It includes all the information you need to get started, such as:

- How to report bugs and submit feature requests.

- Our coding standards and guidelines.

- Instructions for setting up your development environment.

- The process for submitting pull requests.

Your contributions help make this project better, and we appreciate your efforts. Thank you for your support!

I am looking for feedback on the code quality and design of this project. If you have any suggestions on how to improve the code, please feel free to:

- Leave comments on specific lines of code via pull requests.

- Open an Issue to discuss larger changes or general suggestions.

- Participate in discussions in the 'Discussions' section of this repository.

Your insights are invaluable and greatly appreciated, as they will help improve both the project and my skills as a developer.

For more direct communication, you can reach me at Bartekdawidflis@gmail.com.

NIMA: https://research.google/blog/introducing-nima-neural-image-assessment/

Pre-trained weights:

https://github.com/titu1994/neural-image-assessment

PerfectFrameAI is licensed under the GNU General Public License v3.0. See the LICENSE file for more information.