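This is the official code of the paper: Extracting Protein-Protein Interactions (PPIs) from Biomedical Literature using Attention-based Relational Context Information.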

The project aims to build a Protein-Protein Interaction (PPI) extraction model based on the Transformer architecture.

We evaluated the model on five PPI benchmark datasets and four biomedical relation extraction (RE) datasets.

In addition, we provide an expanded version of the PPI datasets, called typed PPI, which further delineates the functional role of proteins (structural or enzymatic).

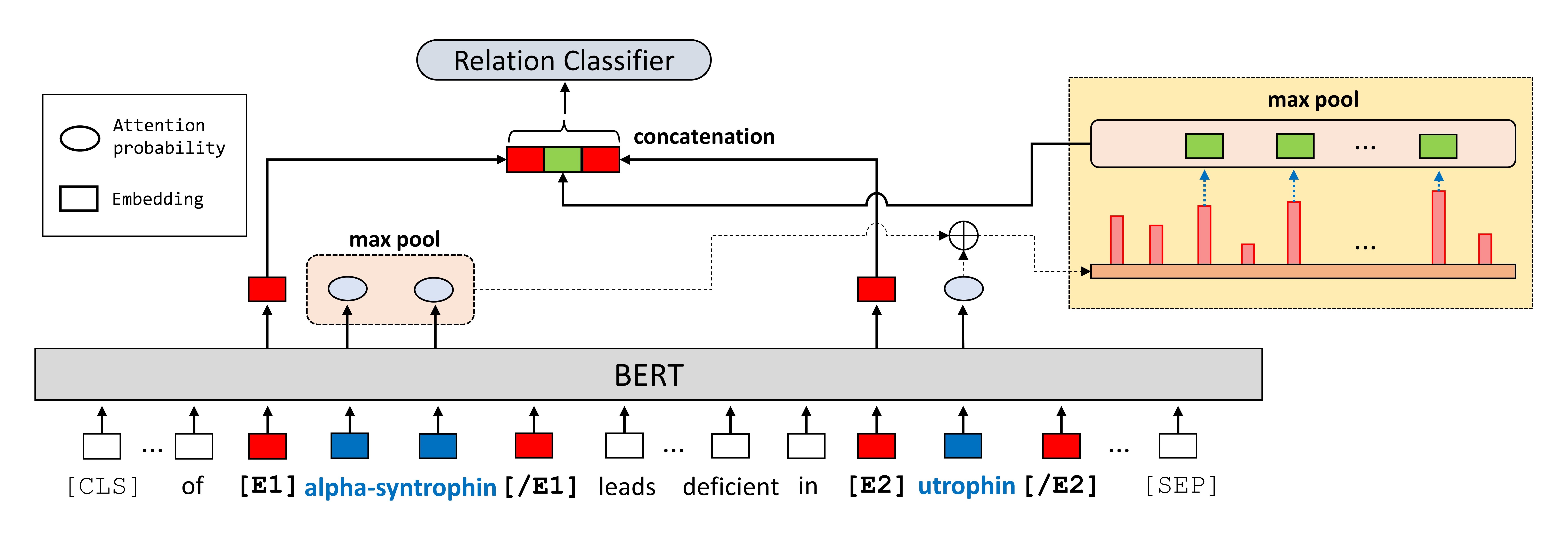

Figure: The relation representation consists of the entity start markers and the max-pooled relational context, which is a series of tokens chosen by the attention probability of the entities. The relation representation based on mention pooling is depicted in mention_pooling. The example sentence is "Absence of alpha-syntrophin leads to structurally aberrant neuromuscular synapses deficient in utrophin" (source: BioInfer corpus).
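The idea in the figure can be illustrated with a small, dependency-free sketch. It is not the repository's implementation: it uses plain Python lists instead of PyTorch tensors, and the selection rule (sum the attention from both entity start markers, keep the `top_k` highest-scoring tokens) as well as the names `relation_representation`, `max_pool`, and `top_k` are assumptions made for illustration.

```python
from typing import List

Vector = List[float]


def max_pool(vectors: List[Vector]) -> Vector:
    """Element-wise maximum over a non-empty list of equal-length vectors."""
    return [max(dims) for dims in zip(*vectors)]


def relation_representation(
    hidden: List[Vector],           # one hidden-state vector per token
    attention: List[List[float]],   # attention[i][j]: how much token i attends to token j
    e1_start: int,                  # index of the first entity's start marker
    e2_start: int,                  # index of the second entity's start marker
    top_k: int = 2,                 # hypothetical number of context tokens to keep
) -> Vector:
    # Assumed scoring rule: attention from both entity start markers, summed per token.
    scores = [a1 + a2 for a1, a2 in zip(attention[e1_start], attention[e2_start])]
    # The markers themselves are already part of the representation, so skip them.
    candidates = [i for i in range(len(hidden)) if i not in (e1_start, e2_start)]
    context_idx = sorted(candidates, key=lambda i: scores[i], reverse=True)[:top_k]
    # Max-pool the selected context tokens into a single vector.
    context = max_pool([hidden[i] for i in context_idx])
    # Concatenate: [entity 1 start marker ; entity 2 start marker ; pooled context].
    return hidden[e1_start] + hidden[e2_start] + context


if __name__ == "__main__":
    # Toy input: 5 tokens with 2-dimensional hidden states; markers at positions 0 and 4.
    hidden = [[0.1, 0.9], [0.5, 0.2], [0.7, 0.3], [0.0, 0.6], [0.4, 0.8]]
    attention = [
        [0.1, 0.5, 0.2, 0.1, 0.1],
        [0.2, 0.2, 0.2, 0.2, 0.2],
        [0.2, 0.2, 0.2, 0.2, 0.2],
        [0.2, 0.2, 0.2, 0.2, 0.2],
        [0.1, 0.1, 0.1, 0.5, 0.2],
    ]
    print(relation_representation(hidden, attention, e1_start=0, e2_start=4))
```

In this toy input, tokens 1 and 3 receive the most attention from the two markers, so the pooled context is the element-wise maximum of their hidden states.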

The code was implemented with Python 3.9 and PyTorch 1.10.2. The versions of the dependencies are listed in requirements.txt.
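One way to confirm that a local environment matches these versions is sketched below. The helper names `version_tuple` and `check_environment` are hypothetical and not part of this repository; the script only reads installed package metadata and does not import PyTorch.

```python
import sys
from importlib import metadata
from typing import List, Tuple


def version_tuple(version: str) -> Tuple[int, ...]:
    """Parse '1.10.2' or '1.10.2+cu113' into (1, 10, 2)."""
    core = version.split("+")[0]  # drop a local build tag such as '+cu113'
    return tuple(int(part) for part in core.split(".") if part.isdigit())


def check_environment() -> List[str]:
    """Return human-readable warnings; an empty list means the versions match."""
    warnings = []
    if sys.version_info[:2] != (3, 9):
        found = f"{sys.version_info.major}.{sys.version_info.minor}"
        warnings.append(f"Python 3.9 expected, found {found}")
    try:
        torch_version = metadata.version("torch")
    except metadata.PackageNotFoundError:
        warnings.append("PyTorch is not installed")
    else:
        if version_tuple(torch_version) != (1, 10, 2):
            warnings.append(f"PyTorch 1.10.2 expected, found {torch_version}")
    return warnings


if __name__ == "__main__":
    for message in check_environment():
        print("WARNING:", message)
```

A mismatch is reported as a warning rather than an error, since other versions may still work.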

Please refer to the README.md in the datasets directory.

To reproduce the results of the experiments, use the following command:

    export SEED=1
    export DATASET_DIR=PPI-Relation-Extraction/datasets
    export OUTPUT_DIR=PPI-Relation-Extraction/YOUR-OUTPUT-DIR

    # PPI benchmark
    export DATASET_NAME=PPI/original/AImed

    python PPI-Relation-Extraction/src/relation_extraction/run_re.py \
      --model_list dmis-lab/biobert-base-cased-v1.1 \
      --task_name "re" \
      --dataset_dir $DATASET_DIR \
      --dataset_name $DATASET_NAME \
      --output_dir $OUTPUT_DIR \
      --do_train \
      --do_predict \
      --seed $SEED \
      --remove_unused_columns False \
      --save_steps 100000 \
      --per_device_train_batch_size 16 \
      --per_device_eval_batch_size 32 \
      --num_train_epochs 10 \
      --optim "adamw_torch" \
      --learning_rate 5e-05 \
      --warmup_ratio 0.0 \
      --weight_decay 0.0 \
      --relation_representation "EM_entity_start" \
      --use_context "attn_based" \
      --overwrite_cache \
      --overwrite_output_dir

Refer to the bash script run.sh, and you can find the hyperparameter settings of the datasets in hyper-parameters-configuration.txt.
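To repeat the run above with several seeds, the command can be assembled programmatically. The following is a minimal sketch, not part of the repository: the helper `build_command`, the seed list, and the per-seed `seed_N` output subdirectory are assumptions, while the script path and flags are copied from the command above. It only prints the commands; pass each list to `subprocess.run` to execute it.

```python
import shlex
from typing import List


def build_command(seed: int, dataset_name: str, dataset_dir: str, output_dir: str) -> List[str]:
    """Assemble the run_re.py invocation shown above for one seed."""
    return [
        "python", "PPI-Relation-Extraction/src/relation_extraction/run_re.py",
        "--model_list", "dmis-lab/biobert-base-cased-v1.1",
        "--task_name", "re",
        "--dataset_dir", dataset_dir,
        "--dataset_name", dataset_name,
        # Hypothetical layout: one output subdirectory per seed.
        "--output_dir", f"{output_dir}/seed_{seed}",
        "--do_train",
        "--do_predict",
        "--seed", str(seed),
        "--remove_unused_columns", "False",
        "--save_steps", "100000",
        "--per_device_train_batch_size", "16",
        "--per_device_eval_batch_size", "32",
        "--num_train_epochs", "10",
        "--optim", "adamw_torch",
        "--learning_rate", "5e-05",
        "--warmup_ratio", "0.0",
        "--weight_decay", "0.0",
        "--relation_representation", "EM_entity_start",
        "--use_context", "attn_based",
        "--overwrite_cache",
        "--overwrite_output_dir",
    ]


if __name__ == "__main__":
    for seed in (1, 2, 3):  # illustrative seeds; the example above uses SEED=1
        command = build_command(
            seed,
            dataset_name="PPI/original/AImed",
            dataset_dir="PPI-Relation-Extraction/datasets",
            output_dir="PPI-Relation-Extraction/YOUR-OUTPUT-DIR",
        )
        print(shlex.join(command))
```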

Here are the results of the experiments. The model was trained on two NVIDIA V100 GPUs. Note that a different number of GPUs or a different batch size can produce slightly different results.

| Method | ChemProt | DDI | GAD | EU-ADR |
|---|---|---|---|---|
| SOTA | 77.5 | 83.6 | 84.3 | 85.1 |
| Ours (Entity Mention Pooling + Relation Context) | 80.1 | 81.3 | 85.0 | 86.0 |
| Ours (Entity Start Marker + Relation Context) | 79.2 | 83.6 | 84.5 | 85.5 |

| Method | AIMed | BioInfer | HPRD50 | IEPA | LLL | Avg. |
|---|---|---|---|---|---|---|
| SOTA | 83.9 | 90.3 | 85.5 | 84.9 | 89.2 | 86.5 |
| Ours (Entity Mention Pooling + Relation Context) | 90.8 | 88.2 | 84.5 | 85.9 | 84.6 | 86.8 |
| Ours (Entity Start Marker + Relation Context) | 92.0 | 91.3 | 88.2 | 87.4 | 89.4 | 89.7 |

| Method | Typed PPI |
|---|---|
| Ours (Entity Mention Pooling + Relation Context) | 86.4 |
| Ours (Entity Start Marker + Relation Context) | 87.8 |

    @inproceedings{park2022extracting,
      title={Extracting Protein-Protein Interactions (PPIs) from Biomedical Literature using Attention-based Relational Context Information},
      author={Park, Gilchan and McCorkle, Sean and Soto, Carlos and Blaby, Ian and Yoo, Shinjae},
      booktitle={2022 IEEE International Conference on Big Data (Big Data)},
      pages={2052--2061},
      year={2022},
      organization={IEEE}
    }