Official implementation by the authors. Team 117 - Graph@FIT

Proposed solution for the AI City Challenge 2022 Track 4: Multi-Class Product Counting & Recognition for Automated Retail Checkout

Paper to download

- Ubuntu 20.04

- Python 3.8

- CUDA 11.3

- cuDNN 8.2.1

- PyTorch 1.11

- NVIDIA GeForce RTX 3090

- Install CUDA 11.3 and cuDNN

- Clone this repo:

git clone https://github.com/BUT-GRAPH-at-FIT/PersonGONE.git

- Create a virtual environment and activate it (optional)

- Install dependencies

cd PersonGONE

pip install torch==1.11.0+cu113 torchvision==0.12.0+cu113 torchaudio==0.11.0+cu113 -f https://download.pytorch.org/whl/cu113/torch_stable.html

pip install mmcv-full==1.4.6 -f https://download.openmmlab.com/mmcv/dist/cu113/torch1.10.0/index.html

pip install -r requirements.txt

- Set environment variables

export PERSON_GONE_DIR=$(pwd)

If you do not want to train the detector, only AIC22_Track4_TestA.zip (or TestB) is sufficient.

export TRACK_4_DATA_ROOT={/path/to/track_4/root_dir}

For example: export TRACK_4_DATA_ROOT=/mnt/data/AIC22_Track4_TestA/Test_A

cd $PERSON_GONE_DIR

python download_pretrained_models.py --detector

Alternatively, you can train the detector yourself.

Run inpainting:

python inpainting_process.py --video_id $TRACK_4_DATA_ROOT/video_id.txt

The video_id.txt file is available in AIC22_Track4_TestA and contains the video IDs and video file names (the videos are in the same directory).
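A minimal sketch of reading video_id.txt in Python, to illustrate the expected layout. It assumes that each line holds a video ID and a video file name separated by whitespace (for example the hypothetical line `1 testA_1.mp4`) and that the videos sit in the same directory as the file. The helper `read_video_ids` is hypothetical and not part of this repo; the scripts only need the path passed via --video_id.

```python
from pathlib import Path


def read_video_ids(video_id_file):
    """Parse video_id.txt into (video_id, video_path) pairs.

    ASSUMPTION: each non-empty line is "<id> <file name>", separated by
    whitespace, and the videos live next to video_id.txt. IDs are kept
    as strings so no numeric format is assumed.
    """
    video_id_file = Path(video_id_file)
    pairs = []
    for line in video_id_file.read_text().splitlines():
        line = line.strip()
        if not line:
            continue  # skip blank lines
        vid, name = line.split(maxsplit=1)
        pairs.append((vid, video_id_file.parent / name))
    return pairs
```

For example, `read_video_ids(os.path.join(os.environ["TRACK_4_DATA_ROOT"], "video_id.txt"))` lists the test videos that the three steps will process.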

Run ROI detection:

python detect_ROI.py --video_id $TRACK_4_DATA_ROOT/video_id.txt

The --roi_seed argument can be set (two values): it specifies the seed position for ROI detection (the white tray) in the format x y.
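The README does not say how detect_ROI.py uses the seed. As an illustration only, the sketch below shows seed-based region growing (a flood fill), one common way to grow a bright, roughly uniform region such as a white tray from a single (x, y) point. This is a swapped-in technique for explanation, not the ROI algorithm implemented in this repo; `grow_roi` and its `tol` threshold are hypothetical.

```python
from collections import deque


def grow_roi(gray, seed_x, seed_y, tol=20):
    """Illustrative region growing from a seed point (x, y).

    gray: 2D list of grayscale values indexed as gray[y][x].
    tol: hypothetical maximum absolute difference from the seed intensity.
    Returns the bounding box (x_min, y_min, x_max, y_max) of the grown region.
    """
    h, w = len(gray), len(gray[0])
    seed_val = gray[seed_y][seed_x]
    visited = {(seed_x, seed_y)}
    queue = deque([(seed_x, seed_y)])
    x_min = x_max = seed_x
    y_min = y_max = seed_y
    while queue:
        x, y = queue.popleft()
        # Extend the bounding box with every accepted pixel
        x_min, x_max = min(x_min, x), max(x_max, x)
        y_min, y_max = min(y_min, y), max(y_max, y)
        # Visit 4-connected neighbours that are close to the seed intensity
        for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            if 0 <= nx < w and 0 <= ny < h and (nx, ny) not in visited:
                if abs(gray[ny][nx] - seed_val) <= tol:
                    visited.add((nx, ny))
                    queue.append((nx, ny))
    return x_min, y_min, x_max, y_max
```

A seed placed on the tray grows over the bright pixels and stops at the darker background, which is why the seed only needs to land somewhere inside the tray.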

Run detection and create the submission:

python detect_and_create_submission.py --video_id $TRACK_4_DATA_ROOT/video_id.txt

The --tracker and --img_size parameters can be set; the defaults are tracker = BYTE and img_size = 640.

All scripts default to the settings used for the results reported to the AI City Challenge, so no arguments other than --video_id need to be set.
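Because only --video_id is required, the three inference steps can be chained. A minimal Python sketch, assuming the scripts are run from the repo root in the order inpainting, ROI detection, detection and submission; `build_commands` and `run_pipeline` are hypothetical helpers, not part of this repo.

```python
import os
import subprocess
import sys

# Hypothetical driver: the three inference scripts, in the order they are run.
STEPS = ("inpainting_process.py", "detect_ROI.py", "detect_and_create_submission.py")


def build_commands(data_root, python=sys.executable):
    """Return one argument list per step; only --video_id is passed."""
    video_id = os.path.join(data_root, "video_id.txt")
    return [[python, script, "--video_id", video_id] for script in STEPS]


def run_pipeline(data_root, repo_dir="."):
    """Run each step from repo_dir; check=True aborts if a step exits non-zero."""
    for cmd in build_commands(data_root):
        print("Running:", " ".join(cmd))
        subprocess.run(cmd, cwd=repo_dir, check=True)
```

Usage: `run_pipeline(os.environ["TRACK_4_DATA_ROOT"], os.environ["PERSON_GONE_DIR"])`. Stopping on the first failure matters here, since each step presumably consumes the output of the previous one.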

If you want to train the detector, prepare data from at least Track 1, Track 3, and Track 4 (AI City Challenge 2022).

cd $PERSON_GONE_DIR

cp split_data.sh Track4/Train_SynData/segmentation_labels/split_data.sh

cd Track4/Train_SynData/segmentation_labels

bash split_data.sh

cd $PERSON_GONE_DIR

cp split_data.sh Track4/Train_SynData/syn_image_train/split_data.sh

cd Track4/Train_SynData/syn_image_train

bash split_data.sh

- Download the pretrained models without the detector

python download_pretrained_models.py

- Prepare the AI City Challenge dataset as described above

- Create dataset

python create_dataset.py --t_1_path {/path/to/AIC22_Track_1_MTMC_Tracking} --t_3_path {/path/to/AIC22_Track3_ActionRecognition} --t_4_track {/path/to/AIC_Track4/Train_SynData}

- Train detector

python train_detector.py

The --batch_size and --epochs arguments can be set; the default values are batch_size = 16 and epochs = 75.

- Instance segmentation: MMDetection

- Inpainting: LaMa

- Detector: YOLOX

- Trackers: BYTE, SORT

@InProceedings{Bartl_2022_CVPR,

author = {Bartl, Vojt\v{e}ch and \v{S}pa\v{n}hel, Jakub and Herout, Adam},

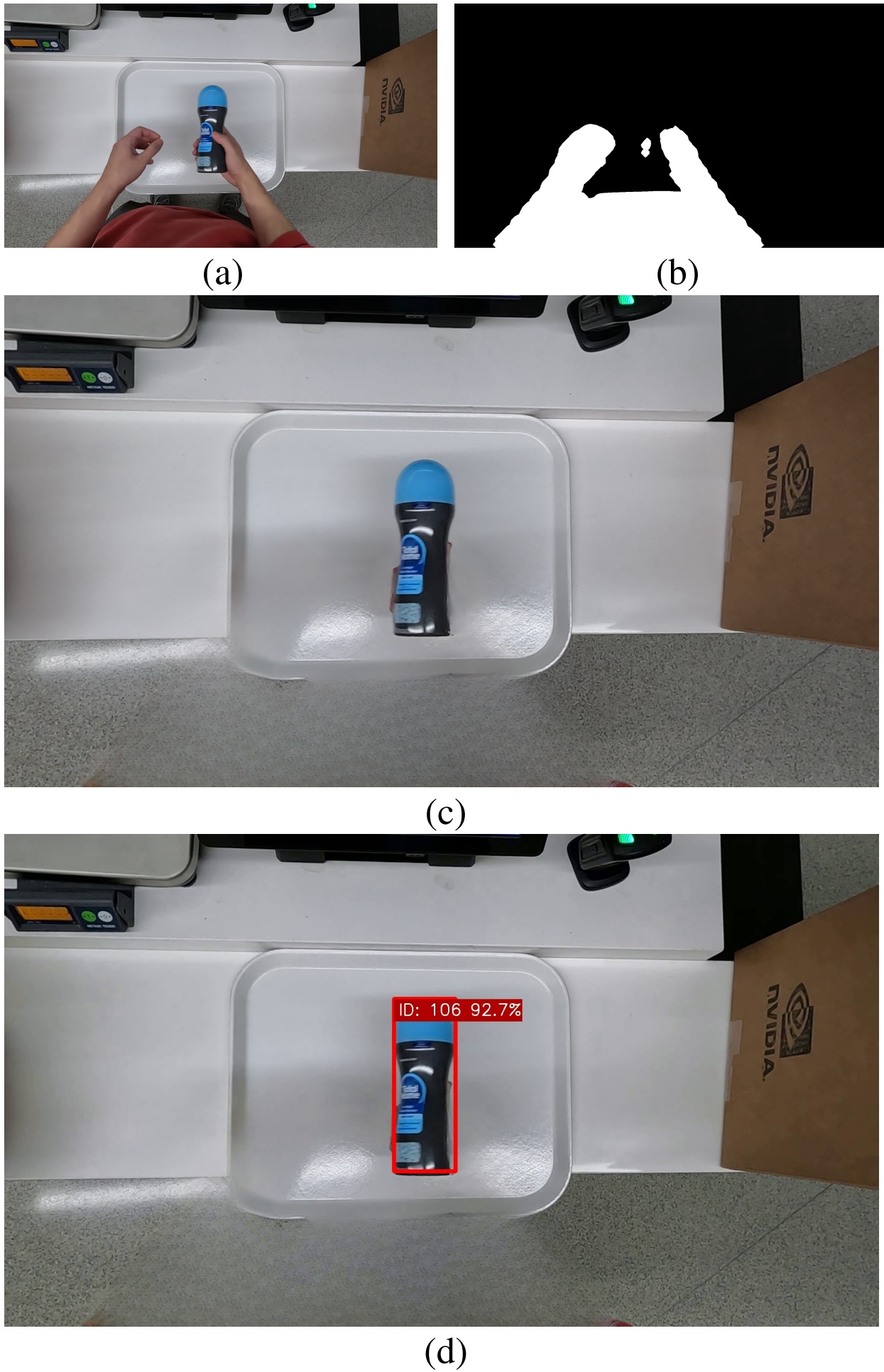

title = {PersonGONE: Image Inpainting for Automated Checkout Solution},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops},

month = {June},

year = {2022},

pages = {3115-3123}

}