Upside-Down Reinforcement Learning (⅂ꓤ) implementation in PyTorch.

Based on the paper published by Jürgen Schmidhuber: ⅂ꓤ-Paper

This repository contains two implementations for the OpenAI Gym CartPole environment: one for the discrete action space and one for a continuous action space (using a continuous version of the environment).
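In both variants the behavior function presumably receives the current state together with a command (desired reward, desired horizon) and produces an action. The main difference between the two implementations is how the network output becomes an action. The stdlib-only sketch below illustrates that distinction under stated assumptions: the discrete head is assumed to output one logit per action and is sampled through a softmax, while the continuous head is assumed to output a Gaussian mean that is sampled and clipped to an assumed action range of [-1, 1]. The function names are hypothetical and are not taken from the notebooks.

```python
import math
import random
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def select_discrete_action(logits: Sequence[float], rng: random.Random) -> int:
    """Hypothetical helper: sample an action index from the categorical
    distribution defined by the (assumed) per-action logits."""
    probs = softmax(logits)
    # random.choices draws one index, weighted by the softmax probabilities
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


def select_continuous_action(mean: float, std: float, rng: random.Random,
                             low: float = -1.0, high: float = 1.0) -> float:
    """Hypothetical helper: sample from N(mean, std^2) and clip the result
    to the assumed action bounds [low, high]."""
    action = rng.gauss(mean, std)
    return min(max(action, low), high)


if __name__ == "__main__":
    rng = random.Random(0)
    # Made-up network outputs for a 2-action CartPole (push left / push right)
    print(select_discrete_action([0.2, 1.5], rng))
    # Made-up Gaussian mean and standard deviation for the continuous variant
    print(select_continuous_action(0.3, 0.1, rng))
```

Sampling rather than always taking the most likely action keeps some exploration in the collected episodes; at evaluation time one could instead take the argmax or the mean, which is a design choice the notebooks may handle differently.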

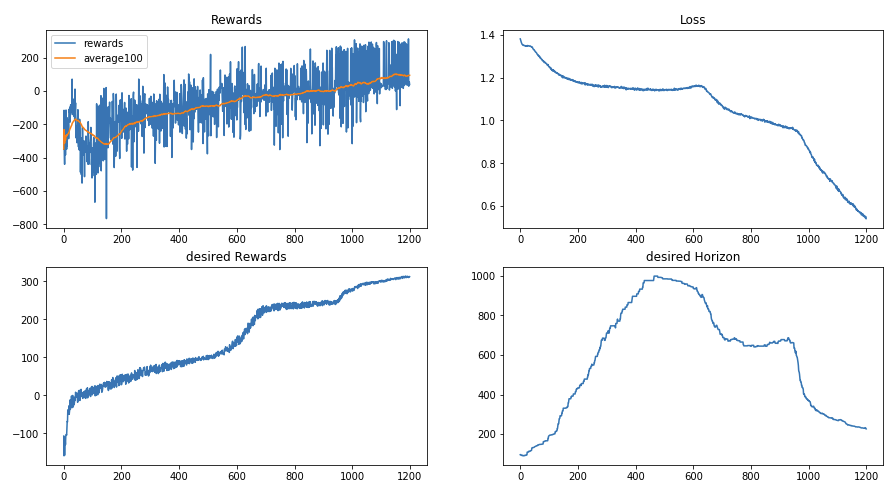

The notebooks include both the training of a behavior function and an evaluation part in which you can test the trained behavior function: feed it a desired reward that the agent should achieve within a desired time horizon.
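Training and evaluation both revolve around the command (desired reward, desired horizon). The sketch below shows one common way UDRL implementations handle it; it is an assumption and not necessarily what the notebooks do. For training, a trailing segment of a stored episode is relabeled in hindsight: the reward actually collected from step `t1` until the end of the episode becomes the desired reward, the number of remaining steps becomes the desired horizon, and the action taken at `t1` becomes the supervised target (a more general variant samples an arbitrary segment end instead of the episode end). During evaluation, the command is updated after every environment step by subtracting the obtained reward and decrementing the horizon, which is kept at a minimum of 1. The names `Episode`, `sample_training_example` and `update_command` are hypothetical.

```python
import random
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Episode:
    """Hypothetical container for one stored episode."""
    states: List[Sequence[float]]
    actions: List[int]
    rewards: List[float]


def sample_training_example(episode: Episode, rng: random.Random
                            ) -> Tuple[Sequence[float], float, int, int]:
    """Hindsight relabeling of a trailing episode segment (assumed scheme).

    Returns (state, desired_reward, desired_horizon, target_action), where the
    command describes what actually happened from step t1 to the episode end.
    """
    length = len(episode.actions)
    t1 = rng.randrange(length)                      # segment start
    desired_reward = sum(episode.rewards[t1:])      # reward actually achieved
    desired_horizon = length - t1                   # steps it actually took
    return episode.states[t1], desired_reward, desired_horizon, episode.actions[t1]


def update_command(desired_reward: float, desired_horizon: int,
                   reward: float) -> Tuple[float, int]:
    """Update the command after one evaluation step."""
    desired_reward -= reward                        # reward still to collect
    desired_horizon = max(desired_horizon - 1, 1)   # keep the horizon positive
    return desired_reward, desired_horizon


if __name__ == "__main__":
    ep = Episode(states=[[0.0], [0.1], [0.2]], actions=[1, 0, 1],
                 rewards=[1.0, 1.0, 1.0])
    print(sample_training_example(ep, random.Random(0)))
    # Evaluation: ask for a reward of 200 within 200 steps, then observe 1.0
    print(update_command(200.0, 200, 1.0))  # (199.0, 199)
```

The behavior function is then trained with ordinary supervised learning on these (state, command) inputs and action targets, for example a cross-entropy loss in the discrete case.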

TODO:

- Test some of the possible improvements mentioned in the paper (Section 6, "Future Research Directions").

Author: Sebastian Dittert

Feel free to use this code for your own projects or research. To cite it, use the DOI or the following BibTeX entry:

@misc{Upside-Down,
  author = {Dittert, Sebastian},
  title = {PyTorch Implementation of Upside-Down RL},
  year = {2020},
  publisher = {GitHub},
  journal = {GitHub repository},
  howpublished = {\url{https://github.com/BY571/Upside-Down-Reinforcement-Learning}},
}