FinRL: A Deep Reinforcement Learning Library for Automated Trading in Quantitative Finance

We aim to maintain an open source FinRL library for the "AI + finance" community: support various markets, SOTA DRL algorithms, benchmarks for many quant finance tasks, live trading, etc. Want to contribute? Please check the Call for Contributions section at the end of this page.

Feel free to leave us feedback on any issue.

Prior Arts:

This repository originates from our paper at the Deep RL Workshop, NeurIPS 2020.

This project is closely related to our paper and code at the ACM International Conference on AI in Finance (ICAIF), 2020.

An earlier paper with code appeared at the NeurIPS Workshop on Challenges and Opportunities for AI in Financial Services, 2018.

Overview

As deep reinforcement learning (DRL) has been recognized as an effective approach in quantitative finance, gaining hands-on experience is attractive to beginners. However, training a practical DRL trading agent that decides where to trade, at what price, and in what quantity involves error-prone and arduous development and debugging.

We introduce FinRL, a DRL library that helps beginners gain exposure to quantitative finance and develop their own stock trading strategies. Along with easily reproducible tutorials, the FinRL library allows users to streamline their own development and to compare it with existing schemes easily. Within FinRL, virtual environments are configured with stock market datasets, trading agents are trained with neural networks, and trading performance is analyzed through extensive backtesting. Moreover, it incorporates important trading constraints such as transaction cost, market liquidity and the investor’s degree of risk aversion.
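To make the idea of a data-driven virtual environment concrete, here is a minimal sketch of a single-stock trading environment with a gym-style `reset`/`step` interface. It is plain Python and is not FinRL's actual environment class: the class name `SimpleStockEnv`, the state layout `[cash, shares, price]`, the proportional `cost_rate`, and the reward (change in portfolio value) are all assumptions chosen for illustration.

```python
from typing import List, Tuple


class SimpleStockEnv:
    """Hypothetical single-stock environment (not FinRL's API).

    State:  [cash, shares held, current price].
    Action: integer number of shares to buy (> 0) or sell (< 0).
    Reward: change in total portfolio value over the step.
    Requires at least two prices.
    """

    def __init__(self, prices: List[float], initial_cash: float = 10_000.0,
                 cost_rate: float = 0.001):
        self.prices = prices
        self.initial_cash = initial_cash
        self.cost_rate = cost_rate  # assumed proportional transaction cost
        self.reset()

    def reset(self) -> List[float]:
        self.t = 0
        self.cash = self.initial_cash
        self.shares = 0
        return self._state()

    def _state(self) -> List[float]:
        return [self.cash, float(self.shares), self.prices[self.t]]

    def _value(self) -> float:
        # Mark the portfolio to market at the current price.
        return self.cash + self.shares * self.prices[self.t]

    def step(self, action: int) -> Tuple[List[float], float, bool]:
        price = self.prices[self.t]
        value_before = self._value()
        if action > 0:
            # Buy only as many shares as cash (including cost) allows.
            affordable = int(self.cash // (price * (1 + self.cost_rate)))
            qty = min(action, affordable)
            self.cash -= qty * price * (1 + self.cost_rate)
            self.shares += qty
        elif action < 0:
            # No short selling: cannot sell more than currently held.
            qty = min(-action, self.shares)
            self.cash += qty * price * (1 - self.cost_rate)
            self.shares -= qty
        # Advance to the next trading day; the episode ends at the last price.
        self.t += 1
        done = self.t == len(self.prices) - 1
        reward = self._value() - value_before
        return self._state(), reward, done
```

For example, with prices `[10.0, 11.0, 12.0]`, 100 units of cash and zero cost, buying 5 shares on the first day yields a reward of 5.0 once the price moves to 11.0. A DRL agent would replace the hand-picked actions with the output of its policy network.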

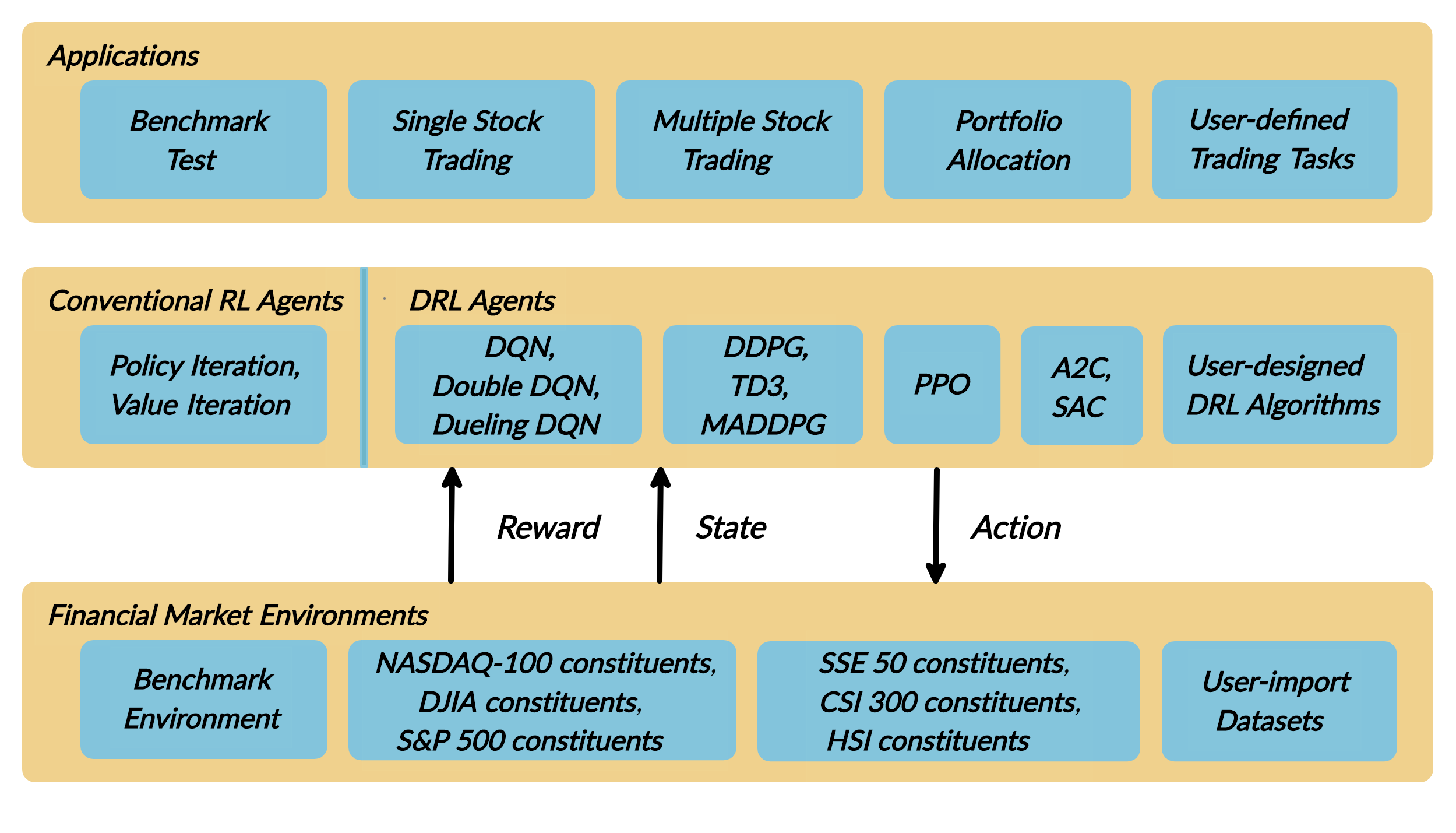

FinRL features completeness, hands-on tutorials and reproducibility, which favor beginners: (i) at multiple levels of time granularity, FinRL simulates trading environments across various stock markets, including NASDAQ-100, DJIA, S&P 500, HSI, SSE 50, and CSI 300; (ii) organized in a layered architecture with a modular structure, FinRL provides fine-tuned state-of-the-art DRL algorithms (DQN, DDPG, PPO, SAC, A2C, TD3, etc.), commonly used reward functions and standard evaluation baselines to reduce the debugging workload and promote reproducibility; and (iii) being highly extendable, FinRL reserves a complete set of user-import interfaces.
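As an illustration of what commonly used reward functions and evaluation baselines can look like, the sketch below defines two reward candidates (the change in portfolio value and the log return) and a buy-and-hold baseline curve. The function names are hypothetical and are not taken from FinRL's codebase; fractional shares in the baseline are a simplifying assumption.

```python
import math
from typing import List


def value_change_reward(value_before: float, value_after: float) -> float:
    """Reward = absolute change in portfolio value over one step."""
    return value_after - value_before


def log_return_reward(value_before: float, value_after: float) -> float:
    """Reward = log return; scale-free and additive over time."""
    return math.log(value_after / value_before)


def buy_and_hold_curve(prices: List[float], initial_cash: float) -> List[float]:
    """Baseline: invest all cash at the first price and hold to the end.

    Fractional shares are allowed for simplicity (an assumption).
    """
    shares = initial_cash / prices[0]
    return [shares * p for p in prices]
```

The log-return reward has the convenient property that per-step rewards sum to the log of the total return, so an agent maximizing cumulative reward is maximizing compounded growth. Comparing an agent's account-value curve against `buy_and_hold_curve` on the same prices gives a simple sanity-check baseline.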

Furthermore, we incorporate three application demonstrations, namely single stock trading, multiple stock trading, and portfolio allocation.
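For the portfolio allocation task, one common approach is to let the agent output one unconstrained score per asset and map the scores to non-negative weights that sum to one with a softmax. The sketch below assumes that convention (long-only, fully invested, fractional shares); it is not necessarily how FinRL normalizes its actions, and `softmax_weights` and `allocate` are hypothetical names.

```python
import math
from typing import List


def softmax_weights(scores: List[float]) -> List[float]:
    """Map raw action scores to long-only portfolio weights summing to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def allocate(cash: float, scores: List[float], prices: List[float]) -> List[float]:
    """Return (fractional) share counts per asset for the given scores."""
    weights = softmax_weights(scores)
    return [cash * w / p for w, p in zip(weights, prices)]
```

With equal scores the weights are uniform, so `allocate(100.0, [0.0, 0.0], [10.0, 25.0])` spends 50 on each asset and returns `[5.0, 2.0]` shares.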

Guiding Principles

- Completeness. Our library shall cover components of the DRL framework completely, which is a fundamental requirement;

- Hands-on tutorials. We aim for a library that is friendly to beginners. Tutorials with detailed walk-throughs will help users explore the functionalities of our library;

- Reproducibility. Our library shall guarantee reproducibility to ensure transparency and to give users confidence in what they have done.

Architecture of the FinRL Library

- Three-layer architecture: The three layers of FinRL library are stock market environment, DRL trading agent, and stock trading applications. The agent layer interacts with the environment layer in an exploration-exploitation manner: it decides whether to repeat prior decisions that worked well or to try new actions in the hope of greater rewards. The lower layer provides APIs for the upper layer, making the lower layer transparent to the upper layer.
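The exploration-exploitation trade-off described above can be illustrated with epsilon-greedy action selection, a scheme used by value-based agents such as DQN. Continuous-action algorithms such as DDPG, TD3 or SAC typically explore by adding noise to actions or by sampling from a stochastic policy instead. The discrete action set `SELL`/`HOLD`/`BUY` and the function below are assumptions for illustration only.

```python
import random
from typing import List, Optional

ACTIONS = ["SELL", "HOLD", "BUY"]  # hypothetical discrete action set


def epsilon_greedy(q_values: List[float], epsilon: float,
                   rng: Optional[random.Random] = None) -> int:
    """Pick an action index.

    With probability epsilon, explore (uniformly random action);
    otherwise exploit (action with the highest estimated value).
    """
    if rng is None:
        rng = random.Random()
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda i: q_values[i])
```

A typical design choice is to start with a large `epsilon` and decay it during training, so the agent explores widely early on and increasingly repeats decisions that have worked well.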

- Modularity: Each layer includes several modules and each module defines a separate function. One can select certain modules from any layer to implement his/her stock trading task. Existing modules can also be updated.

- Simplicity, Applicability and Extensibility: Specifically designed for automated stock trading, FinRL presents DRL algorithms as modules. In this way, FinRL is accessible without being demanding. FinRL provides three trading tasks as use cases that can be easily reproduced. Each layer includes reserved interfaces that allow users to develop new modules.

- Better Market Environment Modeling: We build a trading simulator that replicates the live stock market and provides backtesting support that incorporates important market frictions such as transaction cost, market liquidity and the investor’s degree of risk aversion. All of these are key determinants of net returns.
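The sketch below shows one possible way the three frictions listed above can enter a simulator: a proportional transaction cost, a per-step cap on traded shares as a crude liquidity constraint, and a risk-aversion coefficient that penalizes the variance of recent returns in the reward (a mean-variance style penalty). The parameter names `cost_rate`, `max_shares` and `risk_aversion`, and the penalty form itself, are assumptions rather than FinRL's documented implementation.

```python
import statistics
from typing import List, Tuple


def execute_order(desired_shares: int, price: float, max_shares: int,
                  cost_rate: float) -> Tuple[int, float]:
    """Clip an order to the liquidity cap and return (filled, cash_delta).

    Positive quantities are buys (cash decreases); negative are sells.
    The proportional cost is charged on the traded notional either way.
    """
    filled = max(-max_shares, min(max_shares, desired_shares))
    notional = abs(filled) * price
    cost = notional * cost_rate
    cash_delta = -notional - cost if filled > 0 else notional - cost
    return filled, cash_delta


def risk_adjusted_reward(recent_returns: List[float],
                         risk_aversion: float) -> float:
    """Mean return minus risk_aversion times the (population) variance."""
    mean = statistics.mean(recent_returns)
    var = statistics.pvariance(recent_returns)
    return mean - risk_aversion * var
```

Setting `risk_aversion` to zero recovers a risk-neutral reward; larger values make the agent prefer smoother return streams at the expense of raw returns.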

Implemented Algorithms

Our Medium Blog

FinRL for Quantitative Finance: Tutorial for Single Stock Trading

FinRL for Quantitative Finance: Tutorial for Multiple Stock Trading

FinRL for Quantitative Finance: Tutorial for Portfolio Allocation

Installation:

Clone this repository

```bash
git clone https://github.com/AI4Finance-LLC/FinRL-Library.git
```

Install the unstable development version of FinRL:

```bash
pip install git+https://github.com/AI4Finance-LLC/FinRL-Library.git
```

Prerequisites

For OpenAI Baselines, you'll need the system packages CMake, OpenMPI and zlib. They can be installed as follows:

Ubuntu

```bash
sudo apt-get update && sudo apt-get install cmake libopenmpi-dev python3-dev zlib1g-dev libgl1-mesa-glx
```

Mac OS X

Installation of system packages on Mac requires Homebrew. With Homebrew installed, run the following:

```bash
brew install cmake openmpi
```

Windows 10

To install stable-baselines on Windows, please look at the documentation.

Create and Activate Virtual Environment (Optional but highly recommended)

cd into this repository

```bash
cd FinRL-Library
```

To create a virtual environment under the folder /FinRL-Library, first install virtualenv:

```bash
pip install virtualenv
```

Virtualenvs are essentially folders that contain a copy of the Python executable and all installed Python packages.

Virtualenvs also help avoid package conflicts.

Create a virtualenv named venv under the folder /FinRL-Library:

```bash
virtualenv -p python3 venv
```

To activate the virtualenv:

```bash
source venv/bin/activate
```

Dependencies

The script has been tested under Python >= 3.6.0, with the following packages installed:

```bash
pip install -r requirements.txt
```

Questions

About TensorFlow 2.0: hill-a/stable-baselines#366

If you have questions regarding TensorFlow, note that TensorFlow 2.0 is not currently compatible; you may use:

```bash
pip install tensorflow==1.15.4
```

If you have questions regarding the Stable Baselines package, please refer to the Stable Baselines installation guide. Install the Stable Baselines package using pip:

```bash
pip install stable-baselines[mpi]
```

This includes an optional dependency on MPI, enabling the algorithms DDPG, GAIL, PPO1 and TRPO. If you do not need these algorithms, you can install without MPI:

```bash
pip install stable-baselines
```

Please read the documentation for more details and alternatives (from source, using docker).

Run

```bash
python main.py --mode=train
```

Backtesting

We use Quantopian's pyfolio package for backtesting.
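pyfolio reports statistics such as the Sharpe ratio and maximum drawdown. To show what these numbers mean, here is a dependency-free sketch that computes them from a daily account-value series. It assumes 252 trading periods per year and a zero risk-free rate, and it is not pyfolio's implementation; the function names are hypothetical.

```python
import math
import statistics
from typing import List


def daily_returns(values: List[float]) -> List[float]:
    """Simple returns between consecutive account values."""
    return [b / a - 1.0 for a, b in zip(values, values[1:])]


def sharpe_ratio(values: List[float], periods_per_year: int = 252) -> float:
    """Annualized Sharpe ratio, assuming a zero risk-free rate.

    Needs at least three values (two returns) for a sample stdev.
    """
    rets = daily_returns(values)
    sd = statistics.stdev(rets)
    if sd == 0:
        return float("nan")  # undefined when returns do not vary
    return math.sqrt(periods_per_year) * statistics.mean(rets) / sd


def max_drawdown(values: List[float]) -> float:
    """Largest peak-to-trough decline, as a negative fraction."""
    peak = values[0]
    worst = 0.0
    for v in values:
        peak = max(peak, v)
        worst = min(worst, v / peak - 1.0)
    return worst
```

For an account-value series `[100, 120, 90, 130]`, the maximum drawdown is the fall from 120 to 90, i.e. -25%.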

Status

Version History

Data

The stock data we use is pulled from the Yahoo Finance API.

(The following time line is used in the paper; users can update to new time windows.)
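Since users can move to new time windows, it helps to keep the date split in one place. The sketch below filters records of the form `(date, ticker, close)` into training and trading periods by ISO date string. The record layout, the helper name `split_by_date`, and the example dates in the usage note are hypothetical placeholders, not the time line used in the paper.

```python
from typing import List, Tuple

# Assumed record layout: (ISO date "YYYY-MM-DD", ticker, close price).
Row = Tuple[str, str, float]


def split_by_date(rows: List[Row], start: str, end: str) -> List[Row]:
    """Keep rows with start <= date < end.

    Zero-padded ISO date strings compare in chronological order,
    so plain string comparison is enough here.
    """
    return [r for r in rows if start <= r[0] < end]
```

For instance, `split_by_date(rows, "2019-01-01", "2020-01-01")` and `split_by_date(rows, "2020-01-01", "2020-07-01")` would give non-overlapping training and trading sets, because each window's end date is exclusive.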

Performance

Contributions

- FinRL is an open source library specifically designed and implemented for quantitative finance. Trading environments incorporating market frictions are used and provided.

- Trading tasks accompanied by hands-on tutorials with built-in DRL agents are available in a beginner-friendly and reproducible fashion using Jupyter notebooks. Customization of trading time steps is feasible.

- FinRL has good scalability, with a broad range of fine-tuned state-of-the-art DRL algorithms. Adjusting the implementations to the rapidly changing stock market is well supported.

- Typical use cases are selected and used to establish a benchmark for the quantitative finance community. Standard backtesting and evaluation metrics are also provided for easy and effective performance evaluation.

Citing FinRL

```bibtex
@article{finrl2020,
  author  = {Liu, Xiao-Yang and Yang, Hongyang and Chen, Qian and Zhang, Runjia and Yang, Liuqing and Xiao, Bowen and Wang, Christina Dan},
  journal = {Deep RL Workshop, NeurIPS 2020},
  title   = {{FinRL: A Deep Reinforcement Learning Library for Automated Stock Trading in Quantitative Finance}},
  url     = {},
  year    = {2020}
}
```

Call for Contributions

We aim to maintain an open source FinRL library for the AI + finance community and welcome your contributions!

Support various markets

We would like to support more asset markets, so that the community can test their strategies.

SOTA DRL algorithms

We will continue to maintain a pool of DRL algorithms that can be treated as SOTA implementations.

Benchmarks for more trading tasks

To help quants evaluate their strategies better, we maintain benchmarks for many trading tasks, which you can build on for your own tasks.

Support live trading

Supporting live trading can close the simulation-reality gap; it will enable quants to switch to the real market once they are confident in their strategies.