- Distributed systems.

- Object-oriented programming.

- Educational only.

dotnet run -p src/MapReduce.Sample- Example of master initiation:

src/MapReduce.Sample/Program.cs. - Example of workers initiation:

src/MapReduce.Sample/Playbook/WorkerHelper.cs. - Example of Custom

MapandReducefunctions:- Word count -

src/MapReduce.Sample/Playbook/WordCount.cs. - Inverted file index -

src/MapReduce.Sample/Playbook/InvertedIndex.cs.

- Word count -

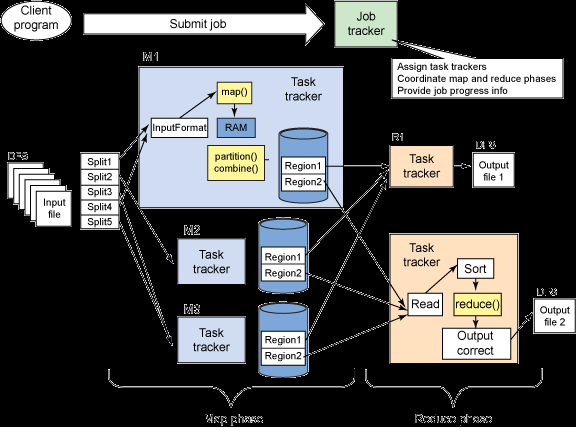

map():

part of object -> list<(key, value)>

return list<(key, value)>

combine():

hash<key, list<value>>

foreach ((key,value) in list<(key, value)>)

{

hash<key, list<value>>[key].Add(value)

}

return hash<key, list<value>>

partition():

hash<partitionIndex, hash<key, list<value>>>

reduce():

hash<key, valueAggregated>

foreach ((key,values) in hash<key, list<value>>)

{

foreach (value in values)

{

hash<key, valueAggregated>[key] += value

}

}

// foreach (key,value) in other list<(key, value)>

// omitted

return hash<key, valueAggregated>

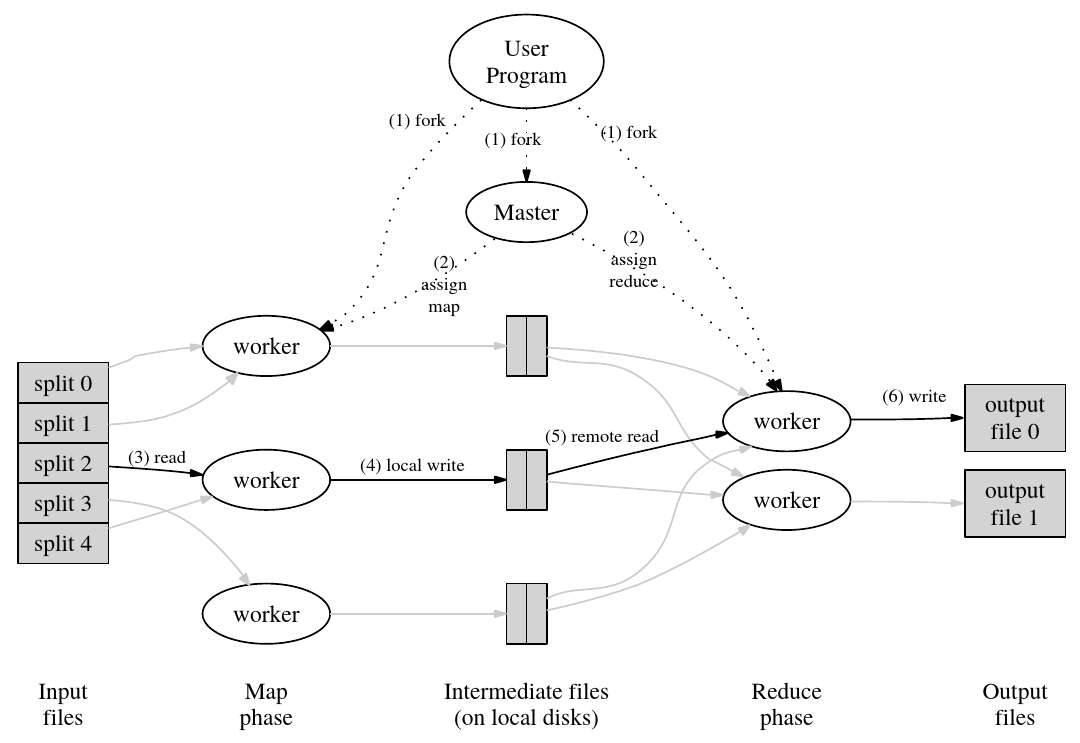

- each intermediate file is a partition.

ith reducer take everyith partition in each mapper's local disk.

class masterList<MapTask>List<ReduceTask>List<Worker>

- relative data structures

enum state { idle, in-progress, completed }- idle:

- task waiting to be scheduled.

- the task is not done yet.

- idle:

class MapTask { state, CompletedFile, ... }class ReduceTask { state, CompletedFile, ... }class CompletedFile { location, size }

- worker failure

- master pings worker.

- no response in amount of time -> worker failed.

- master pings worker.

- master failure

- exception on user code.

- master writes data structures in checkpoints periodically.

- master gives the same task to a different worker.

- When map worker completes a map task

- worker ---{file names}--> master.

- master saves file names to data structure.

- When reduce worker completes a reduce task

- rename temp output file to final output file.

- Task processing

- worker

- The workers talk to the master via RPC.

- worker ask the master for a task

- worker read the task's input from one or more files,

- worker executes the task,

- worker writes the task's output to one or more files.

- worker

- each partition is a file.

- each partition has a dictionary.

- each partition might have 0, 1, or more keys.

- those keys have the same value of

key.GetHashCode() % numPartitions. numPartitions:= number of reduce tasks.- number of reduce tasks is preset in master.

- those keys have the same value of

- at each reduce task, the worker should read the

ith partition of outputs of all mappers. - worker can acquire more than one task.

- additional details - https://stackoverflow.com/q/17734468/9920172.

Your job is to implement a distributed MapReduce, consisting of two programs, the master and the worker. There will be just one master process, and one or more worker processes executing in parallel. In a real system the workers would run on a bunch of different machines, but for this lab you'll run them all on a single machine. The workers will talk to the master via RPC. Each worker process will ask the master for a task, read the task's input from one or more files, execute the task, and write the task's output to one or more files. The master should notice if a worker hasn't completed its task in a reasonable amount of time (for this lab, use ten seconds), and give the same task to a different worker.