CMP dataset finetuning based on the repository.

/bin/bash download.sh

conda env create -f environment.yaml

conda activate ssiw

python -m src.tools.train --max-epochs 100

- train your checkpoint (by default saved as out.pth)

- use my checkpoint

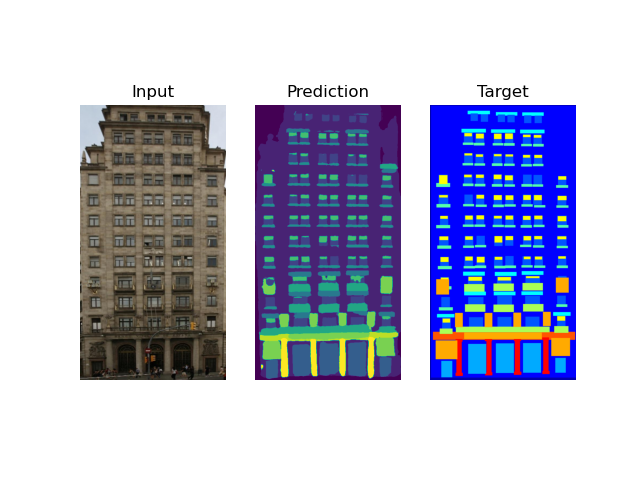

python -m src.tools.test_cpm data/base/base/cmp_b0346.jpg --checkpoint-path checkpoint.pth

I have implemented CMP dataset loading and model training. The base repository contains only the testing stage. My training script loads the weights shared in the authors' repository and fine-tunes them on the CMP dataset. I used only the base version of the dataset (the extended version is reserved for testing).

The labels in the CMP dataset seem to be precise, so I have implemented only the L_hd loss (equations 1, 2 and 3 in the paper). Due to the deadline I have frozen the temperature parameter in the loss. I think that learnable parameters in the loss could slow down the development process, but it is worth replacing the fixed value with a learnable parameter and checking the performance.
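To illustrate the frozen versus learnable temperature choice, here is a minimal sketch. It assumes the loss is a softmax cross-entropy over cosine similarities between pixel embeddings and per-class embeddings, divided by a temperature; the exact form of equations 1 to 3 in the paper may differ. The class name `TemperatureScaledLoss`, the tensor shapes and the default value `0.07` are hypothetical placeholders, not taken from the repository.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class TemperatureScaledLoss(nn.Module):
    """Cross-entropy over cosine similarities, scaled by a temperature (assumed form)."""

    def __init__(self, temperature: float = 0.07, learnable: bool = False):
        super().__init__()
        # Store log(T) so that a learnable temperature always stays positive.
        log_t = torch.log(torch.tensor(float(temperature)))
        if learnable:
            # Registered as a parameter, so the optimizer can update it.
            self.log_temperature = nn.Parameter(log_t)
        else:
            # A buffer is stored in the state dict but is not a parameter.
            self.register_buffer("log_temperature", log_t)

    def forward(self, pixel_emb, class_emb, labels):
        # pixel_emb: (B, D, H, W), class_emb: (C, D), labels: (B, H, W) int64
        pixel_emb = F.normalize(pixel_emb, dim=1)
        class_emb = F.normalize(class_emb, dim=1)
        # Cosine similarity of every pixel to every class: (B, C, H, W)
        logits = torch.einsum("bdhw,cd->bchw", pixel_emb, class_emb)
        logits = logits / self.log_temperature.exp()
        return F.cross_entropy(logits, labels)
```

With `learnable=False` the module exposes no trainable parameters, which corresponds to the frozen setting described above; switching to `learnable=True` adds a single scalar parameter without changing the forward pass.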

Large models typically overfit on small datasets, but in this case training only the head (a strong form of regularization) decreased the performance. It is definitely worth trying more augmentations, other hyperparameters and a longer training time. This can be done in the next steps.
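The head-only experiment mentioned above can be sketched as follows. This is an illustration under assumptions, not the code from the training script: the helper `freeze_all_but_head`, the submodule name `head` and the toy two-layer model are all hypothetical stand-ins for the real network.

```python
import torch.nn as nn


def freeze_all_but_head(model: nn.Module, head_name: str = "head") -> list:
    """Disable gradients for every parameter outside the named submodule."""
    trainable = []
    for name, param in model.named_parameters():
        is_head = name.startswith(head_name + ".")
        param.requires_grad = is_head
        if is_head:
            trainable.append(param)
    return trainable


# Hypothetical toy model standing in for the real segmentation network.
model = nn.Sequential()
model.add_module("backbone", nn.Conv2d(3, 8, kernel_size=3, padding=1))
model.add_module("head", nn.Conv2d(8, 12, kernel_size=1))

# Only the head's weight and bias remain trainable.
head_params = freeze_all_but_head(model)
```

The returned list can be passed directly to an optimizer, for example `torch.optim.AdamW(head_params)`, so that the frozen backbone is skipped entirely during updates.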

The batch size and image crop size were chosen to fit my available VRAM, not to maximize model performance.