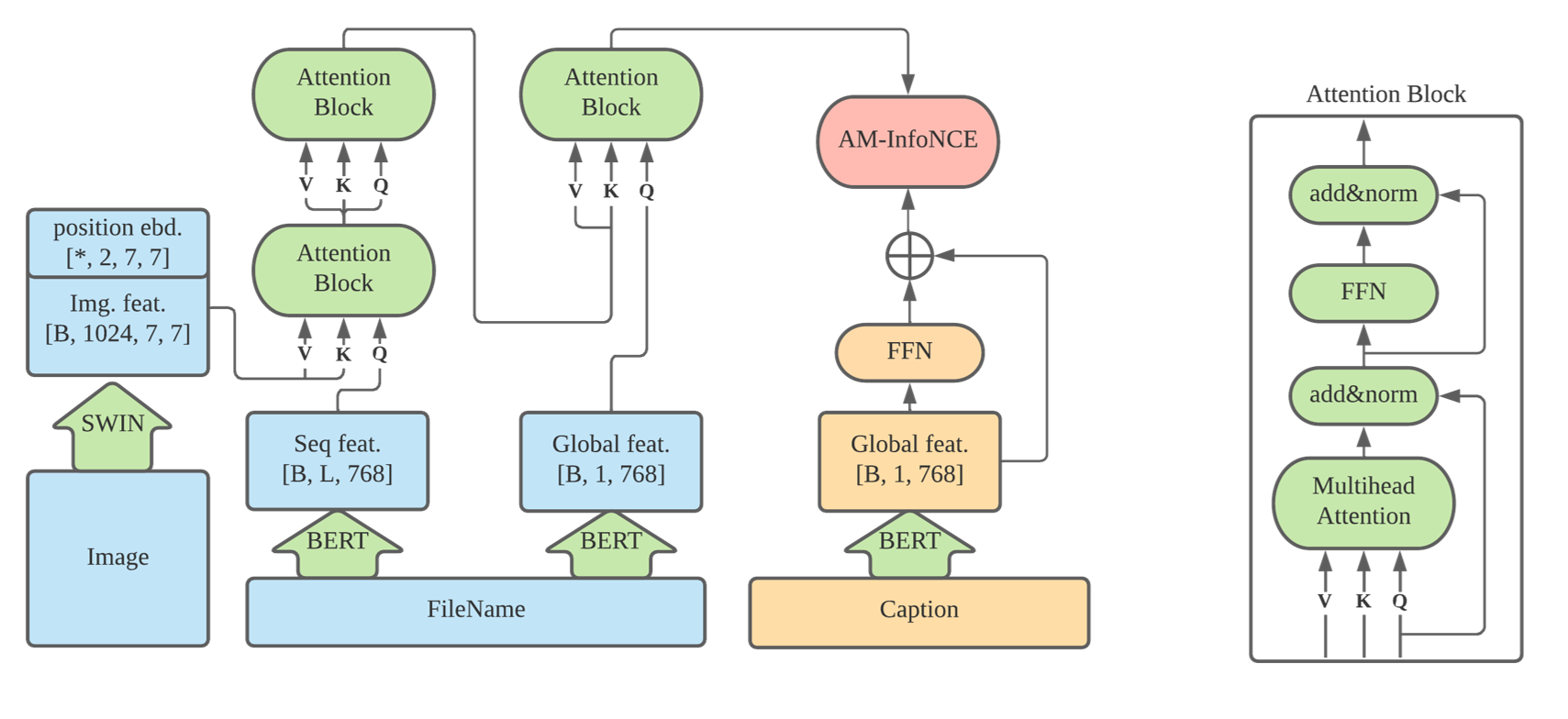

I use Swin and BERT as backbones for image and text feature extraction, and fuse the resulting features with cross-attention modules.
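As a rough illustration of the fusion step, here is a minimal single-head scaled dot-product cross-attention in plain Python, so it runs without PyTorch. It assumes text tokens act as queries and image patches as keys and values, and it omits the learned projections a real module would have. The function names and toy vectors are hypothetical and are not taken from this repository.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Single-head cross-attention without learned projections.

    queries: vectors from one modality (here: text tokens)
    keys, values: vectors from the other modality (here: image patches)
    Returns one fused vector per query.
    """
    dim = len(keys[0])
    fused = []
    for q in queries:
        # Scaled dot product between this query and every key.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
            for k in keys
        ]
        weights = softmax(scores)
        # Weighted sum of the value vectors.
        fused.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return fused


# Toy features standing in for BERT token and Swin patch embeddings.
text_tokens = [[1.0, 0.0], [0.0, 1.0]]
image_patches = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
fused = cross_attention(text_tokens, image_patches, image_patches)
```

Each fused text vector is a convex combination of the image patch vectors, weighted toward the patches most similar to that token.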

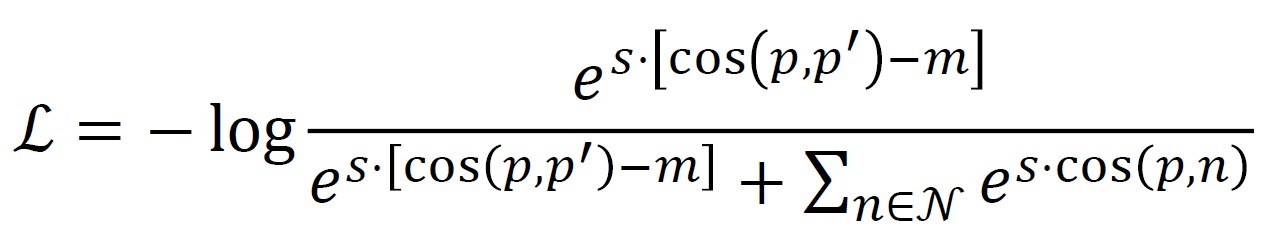

The network is trained with a single loss, Arc-InfoNCE, whose cost function is:
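One plausible reading, sketched below, assumes that Arc-InfoNCE is InfoNCE over cosine similarities with an ArcFace-style additive angular margin applied to the matching image/caption pair. The `margin` and `scale` values, the function names, and the image-to-text-only direction are all assumptions made for illustration, and the repository's actual loss may differ.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def arc_info_nce(image_embs, text_embs, margin=0.2, scale=16.0):
    """InfoNCE with an additive angular margin on the positive pair.

    image_embs[i] and text_embs[i] are assumed to be a matching pair;
    every other caption in the batch is treated as a negative.
    margin and scale are hypothetical defaults.
    """
    n = len(image_embs)
    total = 0.0
    for i in range(n):
        logits = []
        for j in range(n):
            # Clamp to guard acos against floating-point overshoot.
            cos = max(-1.0, min(1.0, cosine(image_embs[i], text_embs[j])))
            if i == j:
                # Penalize the positive pair: cos(theta + margin).
                # This simple form ignores the theta + margin > pi case.
                cos = math.cos(math.acos(cos) + margin)
            logits.append(scale * cos)
        # Cross-entropy with the positive at index i (stable log-sum-exp).
        m = max(logits)
        log_sum = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_sum - logits[i]
    return total / n


images = [[1.0, 0.0], [0.0, 1.0]]
captions = [[1.0, 0.0], [0.0, 1.0]]
loss_matched = arc_info_nce(images, captions)
loss_shuffled = arc_info_nce(images, captions[::-1])
```

With correctly paired embeddings the loss is close to zero, while shuffling the captions makes it large. The margin makes the positive pair harder to satisfy than in plain InfoNCE.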

I train the network on 6.5M image/caption pairs, which can be downloaded from here.

Requirements:

- python3
- pytorch >= 1.3
- mmcv
- mmcls
- tqdm

After training for 8 epochs, the model achieves an NDCG@5 of 0.59~0.6 on the test set. If you normalize each caption's similarity scores over all images with softmax, the result improves further to 0.61+.
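The softmax normalization can be sketched as follows. This assumes a score matrix where `scores[c][i]` is the similarity between caption `c` and image `i`, and that captions are ranked per image. The layout and helper names are hypothetical. Normalizing each caption's row over all images down-weights generic captions that score highly on every image.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def normalize_over_images(scores):
    """Apply softmax to each caption's scores across all images."""
    return [softmax(row) for row in scores]


def rank_captions(scores, image_idx):
    """Return caption indices sorted by score for one image, best first."""
    return sorted(
        range(len(scores)),
        key=lambda c: scores[c][image_idx],
        reverse=True,
    )


raw = [
    [5.0, 5.0, 5.0],  # caption 0: generic, matches every image equally
    [4.0, 0.0, 0.0],  # caption 1: specific to image 0
]
normalized = normalize_over_images(raw)

print(rank_captions(raw, 0))         # [0, 1]
print(rank_captions(normalized, 0))  # [1, 0]
```

In this toy case the generic caption wins on raw scores, but after normalization the caption that is specific to image 0 ranks first.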

- Download pretrained model

Coming soon...

- Run inference

cd image+name_caption_infonce

python inference.py