The following steps set up the project locally. To get a local copy up and running, follow these instructions.

- Clone the repository

$ git clone https://github.com/Waer1/Hand-Gesture-Recognition

- Install Pip and Python

Follow the article "Install Pip and Python" to install Pip and Python.

- Install dependencies

- Train the model

$ py .\FinalCode\main.py --feature 0 --model 0

Note: Put the training images in a folder named `Dataset` under the main folder `Hand-Gesture-Recognition`.

- Test the model and analyze its performance

$ py .\FinalCode\app.py --feature 0

Note: Put the test images in a folder named `data` under the main folder `Hand-Gesture-Recognition`.

Hand gesture recognition has become an important area of research due to its potential applications in various fields such as robotics, healthcare, and gaming. The ability to recognize and interpret hand gestures can enable machines to understand human intentions and interact with them more effectively. For instance, in healthcare, hand gesture recognition can be used to control medical equipment without the need for physical contact, reducing the risk of infection. In gaming, it can enhance the user experience by allowing players to control games using hand gestures instead of traditional controllers. Overall, hand gesture recognition has immense potential to revolutionize the way we interact with machines and improve our daily lives.

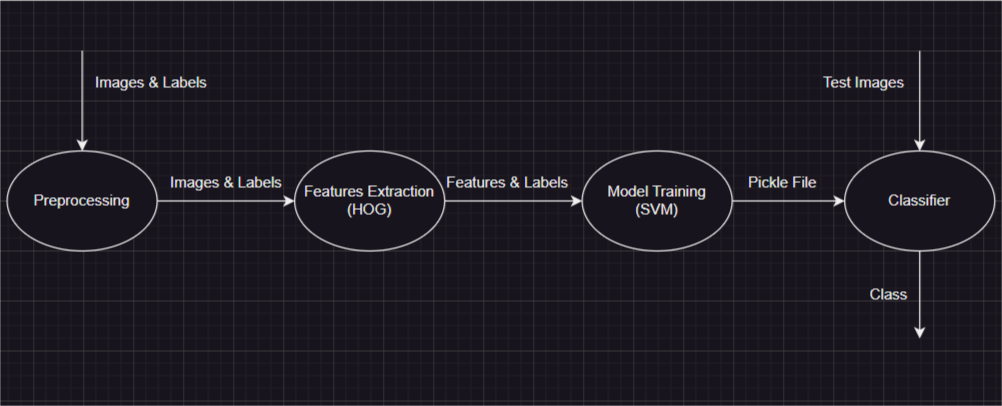

The pipeline diagram above is also available at `./Documentation/Project Pipeline.drawio`.

The main challenge at this stage is finger shadows caused by poor imaging conditions. To overcome this, we apply the following steps to every input image:

- Convert the input image to YCrCb color space.

- Get the segmented image using the `range_segmentation` method, which works as follows:

  - Segment the image based on the lower and upper bounds of skin color defined in YCrCb color space.

  - Apply morphological operations to remove noise.

  - Find the contours in the binary segmented image and get the contour with the largest area.

  - Create a blank image to draw and fill the contours.

  - Draw the largest contour on the blank image and fill it with white.

  - Return the image with the largest contour drawn on it.

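- Apply thresholding to the Cr and Cb components.

- Combine the masks using the following bitwise formula, that is, a bitwise OR of the thresholded Cr and Cb masks, ANDed with the range-segmented image:

  `(cr || cb) && range_segmented_image`

- Apply morphological operations to remove noise.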

- Get the image with the largest contour area.

- Apply histogram equalization to improve contrast.

- Crop the grayscale image around the largest contour.

- Resize the image to a smaller size to reduce the length of the extracted feature vector.
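The color-space and mask steps above can be sketched with NumPy alone. This is a minimal illustration under stated assumptions, not the project's `range_segmentation` implementation: the Cr/Cb skin bounds and the Cr/Cb thresholds are hypothetical values, morphological operations and histogram equalization are omitted, and the largest-contour step is approximated by keeping the largest 4-connected region (unlike a filled contour, this does not fill interior holes). The functions expect RGB channel order; images loaded with OpenCV's `cv2.imread` are BGR and would need their channels reversed first.

```python
from collections import deque

import numpy as np

# Hypothetical skin bounds in YCrCb space (a commonly cited range);
# the project's actual bounds may differ.
CR_RANGE = (133, 173)
CB_RANGE = (77, 127)


def rgb_to_ycrcb(image):
    """Convert an RGB uint8 image of shape (H, W, 3) to YCrCb (BT.601-style)."""
    rgb = image.astype(np.float64)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cr = (r - y) * 0.713 + 128
    cb = (b - y) * 0.564 + 128
    ycrcb = np.stack([y, cr, cb], axis=-1)
    return np.clip(np.rint(ycrcb), 0, 255).astype(np.uint8)


def range_mask(ycrcb):
    """True where both Cr and Cb fall inside the hypothetical skin bounds."""
    cr, cb = ycrcb[..., 1], ycrcb[..., 2]
    return ((cr >= CR_RANGE[0]) & (cr <= CR_RANGE[1])
            & (cb >= CB_RANGE[0]) & (cb <= CB_RANGE[1]))


def largest_region(mask):
    """Keep only the largest 4-connected True region of a boolean mask."""
    h, w = mask.shape
    labels = np.zeros((h, w), dtype=np.int32)
    best_label, best_size, current = 0, 0, 0
    for sy in range(h):
        for sx in range(w):
            if not mask[sy, sx] or labels[sy, sx]:
                continue
            current += 1  # start a new region and flood-fill it (BFS)
            labels[sy, sx] = current
            queue, size = deque([(sy, sx)]), 0
            while queue:
                y, x = queue.popleft()
                size += 1
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not labels[ny, nx]:
                        labels[ny, nx] = current
                        queue.append((ny, nx))
            if size > best_size:
                best_label, best_size = current, size
    if best_size == 0:
        return np.zeros((h, w), dtype=bool)
    return labels == best_label


def combine_masks(ycrcb, range_segmented, cr_thresh=140, cb_thresh=110):
    """(cr || cb) && range_segmented_image, with hypothetical thresholds."""
    cr_mask = ycrcb[..., 1] > cr_thresh
    cb_mask = ycrcb[..., 2] > cb_thresh
    return (cr_mask | cb_mask) & range_segmented
```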

We tried several feature extraction algorithms: SURF, SIFT, LBP, and HOG. We selected HOG (Histogram of Oriented Gradients) because it provided high accuracy.

The HOG algorithm is a computer vision technique used to extract features from images. It divides an image into small cells and computes the gradient orientation and magnitude for each pixel within each cell. The gradient orientations, weighted by their magnitudes, are then binned into a histogram that represents the distribution of edge orientations within that cell.

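The HOG algorithm achieves high accuracy because it captures important information about the edges and contours in an image. This information can be used to identify objects or patterns within the image even under varying lighting conditions, because the cell histograms are normalized over larger blocks of neighboring cells, which reduces the effect of changes in illumination and contrast.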
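The following NumPy sketch illustrates the mechanics described above: per-pixel gradients, magnitude-weighted orientation histograms per cell, and normalization over blocks of cells. It is a simplified stand-in for `skimage.feature.hog()`, not a reimplementation of it: it uses hard binning without interpolation between neighboring bins and plain L2 block normalization, and the parameter values (8×8-pixel cells, 2×2-cell blocks, 9 orientation bins) are illustrative assumptions.

```python
import numpy as np


def hog_descriptor(image, cell_size=8, block_size=2, n_bins=9, eps=1e-6):
    """Simplified HOG for a 2-D grayscale image (no bin interpolation)."""
    img = image.astype(np.float64)
    # Centered-difference gradients [-1, 0, 1]; border pixels stay 0.
    gx = np.zeros_like(img)
    gy = np.zeros_like(img)
    gx[:, 1:-1] = img[:, 2:] - img[:, :-2]
    gy[1:-1, :] = img[2:, :] - img[:-2, :]
    magnitude = np.hypot(gx, gy)
    # Unsigned orientation in [0, 180) degrees, mapped to n_bins equal bins.
    orientation = np.rad2deg(np.arctan2(gy, gx)) % 180
    bins = np.minimum((orientation // (180 / n_bins)).astype(int), n_bins - 1)

    n_cy, n_cx = img.shape[0] // cell_size, img.shape[1] // cell_size
    cells = np.zeros((n_cy, n_cx, n_bins))
    for cy in range(n_cy):
        for cx in range(n_cx):
            rows = slice(cy * cell_size, (cy + 1) * cell_size)
            cols = slice(cx * cell_size, (cx + 1) * cell_size)
            # Each pixel votes for its orientation bin, weighted by its magnitude.
            np.add.at(cells[cy, cx], bins[rows, cols].ravel(),
                      magnitude[rows, cols].ravel())

    # L2-normalize overlapping blocks of block_size x block_size cells.
    features = []
    for by in range(n_cy - block_size + 1):
        for bx in range(n_cx - block_size + 1):
            block = cells[by:by + block_size, bx:bx + block_size].ravel()
            features.append(block / np.sqrt(np.sum(block ** 2) + eps ** 2))
    return np.concatenate(features)
```

For a 16×16 image with a single vertical edge, every cell puts all its gradient mass into the 0° bin, and block normalization rescales those four equal values to 0.5 each, independent of the edge's absolute intensity. This is the property that makes the descriptor tolerant of lighting changes.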

We apply the HOG algorithm using `hog()` from `skimage.feature`, and make sure that all feature vectors have the same length by padding them with zeros.
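`hog()` returns a feature vector whose length depends on the input image dimensions, so vectors from differently sized images cannot be stacked directly into one matrix. Below is a minimal sketch of the zero-padding step. The function name `pad_features`, the optional `target_len` parameter, and truncating vectors longer than `target_len` are assumptions made for illustration.

```python
import numpy as np


def pad_features(vectors, target_len=None):
    """Right-pad 1-D feature vectors with zeros so they all share one length.

    If target_len is None, the longest vector's length is used. Vectors longer
    than target_len are truncated (an assumption, for prediction-time inputs).
    """
    if target_len is None:
        target_len = max(len(v) for v in vectors)
    padded = np.zeros((len(vectors), target_len))
    for i, v in enumerate(vectors):
        v = np.asarray(v, dtype=np.float64)[:target_len]
        padded[i, :len(v)] = v
    return padded
```

Passing the training length as `target_len` at prediction time keeps test vectors the same length as the ones the classifier was trained on.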

We tried several classification algorithms: Random Forest, Naive Bayes, Decision Tree, and SVM. We selected SVM (Support Vector Machine) because it provided high accuracy.

SVM is a powerful and popular machine learning algorithm used for classification and regression analysis. It works by finding the best hyperplane or decision boundary that separates the data into different classes. The hyperplane is chosen such that it maximizes the margin between the two classes, which helps to improve the generalization ability of the model.
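For a linear kernel, the decision boundary and the margin described above can be written as follows, for training pairs $(\mathbf{x}_i, y_i)$ with labels $y_i \in \{-1, +1\}$. Minimizing $\lVert \mathbf{w} \rVert$ is equivalent to maximizing the margin width $2 / \lVert \mathbf{w} \rVert$. The soft-margin form adds slack variables $\xi_i$ and a regularization parameter $C$ that trades margin width against training errors.

```math
\begin{aligned}
&\text{decision rule:} && f(\mathbf{x}) = \operatorname{sign}(\mathbf{w}^\top \mathbf{x} + b) \\
&\text{hard margin:} && \min_{\mathbf{w}, b}\ \tfrac{1}{2}\lVert \mathbf{w} \rVert^2 \ \ \text{s.t.}\ \ y_i(\mathbf{w}^\top \mathbf{x}_i + b) \ge 1 \ \ \forall i \\
&\text{margin width:} && 2 / \lVert \mathbf{w} \rVert \\
&\text{soft margin:} && \min_{\mathbf{w}, b, \xi}\ \tfrac{1}{2}\lVert \mathbf{w} \rVert^2 + C \textstyle\sum_i \xi_i \ \ \text{s.t.}\ \ y_i(\mathbf{w}^\top \mathbf{x}_i + b) \ge 1 - \xi_i,\ \ \xi_i \ge 0
\end{aligned}
```

These equations describe a two-class problem. With several gesture classes, scikit-learn's `SVC` combines binary classifiers of this kind using a one-vs-one scheme.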

SVM has several advantages that make it a good choice for classification tasks, including:

- Effective in high-dimensional spaces: SVM can effectively handle datasets with a large number of features or dimensions.

- Robust to outliers: the soft-margin formulation tolerates some misclassified training points (controlled by the regularization parameter C), which makes SVM less sensitive to outliers than a hard-margin classifier.

- Versatile: SVM can be used with different kernels, such as linear, polynomial, and RBF, which makes it applicable to different types of datasets.

- Memory efficient: the decision function uses only a subset of the training data, called support vectors, which keeps the trained model compact.

- Good generalization performance: SVM maximizes the margin between the classes, which helps the model generalize to unseen data.

Overall, SVM is a powerful and flexible algorithm that can be used for various classification tasks in machine learning.

We apply the SVM algorithm using `SVC()` from `sklearn.svm` with a linear kernel, using 80% of the dataset images for training and 20% for testing.
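Below is a minimal sketch of the 80/20 split and of the accuracy metric, written with NumPy only so it runs without scikit-learn. The function names, the shuffling seed, and the rounding of the split size are assumptions; the project's own split may differ.

```python
import numpy as np


def split_80_20(features, labels, seed=0):
    """Shuffle samples, then use 80% for training and 20% for testing."""
    features = np.asarray(features)
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)  # fixed seed for a reproducible split
    order = rng.permutation(len(labels))
    n_train = int(round(0.8 * len(labels)))
    train_idx, test_idx = order[:n_train], order[n_train:]
    return (features[train_idx], features[test_idx],
            labels[train_idx], labels[test_idx])


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return float(np.mean(np.asarray(y_true) == np.asarray(y_pred)))
```

With scikit-learn, the equivalent steps are `train_test_split(X, y, test_size=0.2)` from `sklearn.model_selection`, followed by `SVC(kernel="linear").fit(X_train, y_train)` and `clf.score(X_test, y_test)`, which returns the mean accuracy on the test split.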

As a result, the trained model correctly classified 83% of the images.

To run the model on your own data, put the images in a folder named `data` under the main folder `Hand-Gesture-Recognition`, then run the script `./FinalCode/app.py` with the following command to see the output label and the processing time for each image:

$ py .\FinalCode\app.py --feature 0

Note: The output is written to two files: `results.txt` contains the predicted classes, and `time.txt` contains the time taken to process each image, measured from just after reading it until just after the prediction. Each line in these two files corresponds to one image.
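The logging behavior described in the note can be sketched as follows. This is not the code in `app.py`: the `run_and_log` function and the `predict` callable (standing in for preprocessing, HOG extraction, and SVM prediction) are hypothetical, and writing the time in seconds with three decimals is an assumption about the file format.

```python
import time
from pathlib import Path


def run_and_log(images, predict, out_dir="."):
    """Predict each already-loaded image and log one line per image.

    results.txt receives the predicted label; time.txt receives the elapsed
    time in seconds, measured from just after reading to just after prediction.
    """
    out = Path(out_dir)
    with open(out / "results.txt", "w") as results, open(out / "time.txt", "w") as times:
        for image in images:
            start = time.perf_counter()  # the image has already been read
            label = predict(image)
            elapsed = time.perf_counter() - start  # stop just after prediction
            results.write(f"{label}\n")
            times.write(f"{elapsed:.3f}\n")
```

Starting the timer after the image is loaded keeps disk I/O out of the measurement, so `time.txt` reflects only the processing and prediction cost.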

Although we obtained an accuracy of 83% on our dataset, we believe our work could be improved in the following ways:

- Larger dataset.

- Using Deep Neural Networks.

- Better imaging conditions.