Scenario - Credit risk analysis and loan-grant decisions are two of the most important operations for financial institutions. By leveraging the large volume of historical loan-performance data, we aim to train a model that accurately predicts future outcomes.

Objective - Using large volumes of historical data, apply supervised machine learning with model optimization to analyze the features of potential borrowers and better predict their risk level, i.e., whether a loan will be paid back in full or will default and/or be written off. Because the available data is large and feature-rich, the model should provide more accurate and efficient predictions than approaches that rely only on traditional credit reports and a limited set of features.

Product -

- Our product is a cloud-based lending-as-a-service (LaaS) solution that can be offered to financial institutions as an API.

- The product uses Python libraries, including pandas and scikit-learn, to clean and process the data and to fit models based on the desired risk parameters, in order to identify which customers are suitable for lending opportunities.

- The product is deployed on Amazon Web Services (AWS), specifically Amazon SageMaker, so that clients can run the model in the cloud.

- Excluding the target ('labels') column, the raw data provided 150 features. After preprocessing, data cleanup, and preparation, the feature count was reduced to 110.

- Applying StandardScaler followed by Principal Component Analysis (PCA) reduced the feature count to 61 components while retaining 95% of the explained variance.
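As a rough sketch of the scaling and PCA step described above (not the project's notebook code), the snippet below runs StandardScaler and PCA on synthetic data from `make_classification`, used here as a stand-in for the 110 cleaned loan features. Passing a float such as `0.95` as `n_components` asks scikit-learn's PCA to keep the smallest number of components that explains at least 95% of the variance. The component count it prints describes the synthetic data only, not the 61 components reported for the loan data.

```python
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for the 110 cleaned loan features (assumption, not real data):
# 20 informative columns, 60 linear combinations of them, and 30 noise columns.
X, y = make_classification(n_samples=2_000, n_features=110, n_informative=20,
                           n_redundant=60, random_state=0)

# Standardize first so that no feature dominates the variance because of its units,
# then keep the fewest components reaching 95% cumulative explained variance.
pipe = make_pipeline(StandardScaler(), PCA(n_components=0.95, svd_solver="full"))
X_reduced = pipe.fit_transform(X)

pca = pipe.named_steps["pca"]
print("components kept:", pca.n_components_)
print("variance retained:", round(pca.explained_variance_ratio_.sum(), 3))
```

Putting both steps in one pipeline means the scaler and PCA are fitted together and can later be applied unchanged to new borrowers' data with `pipe.transform`.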

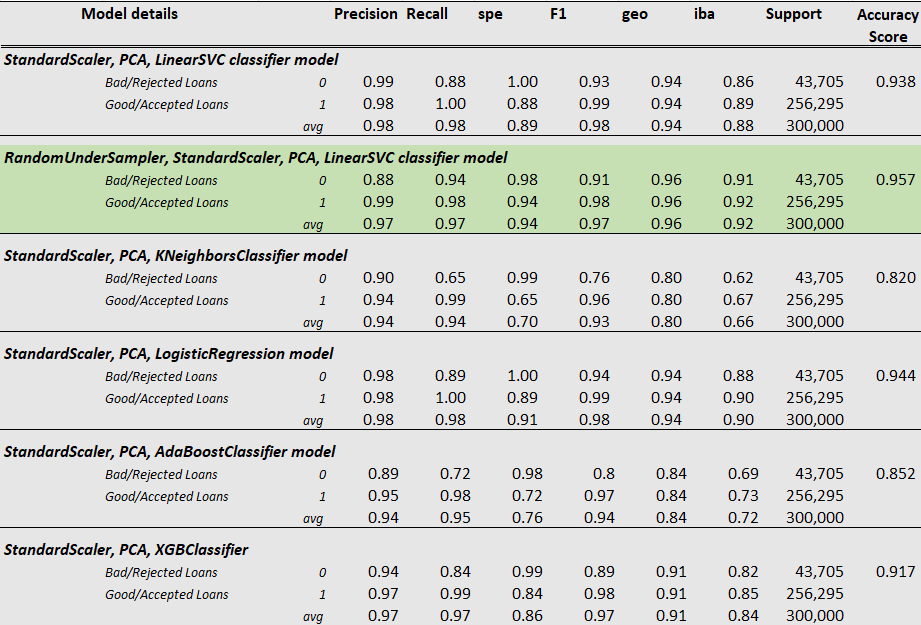

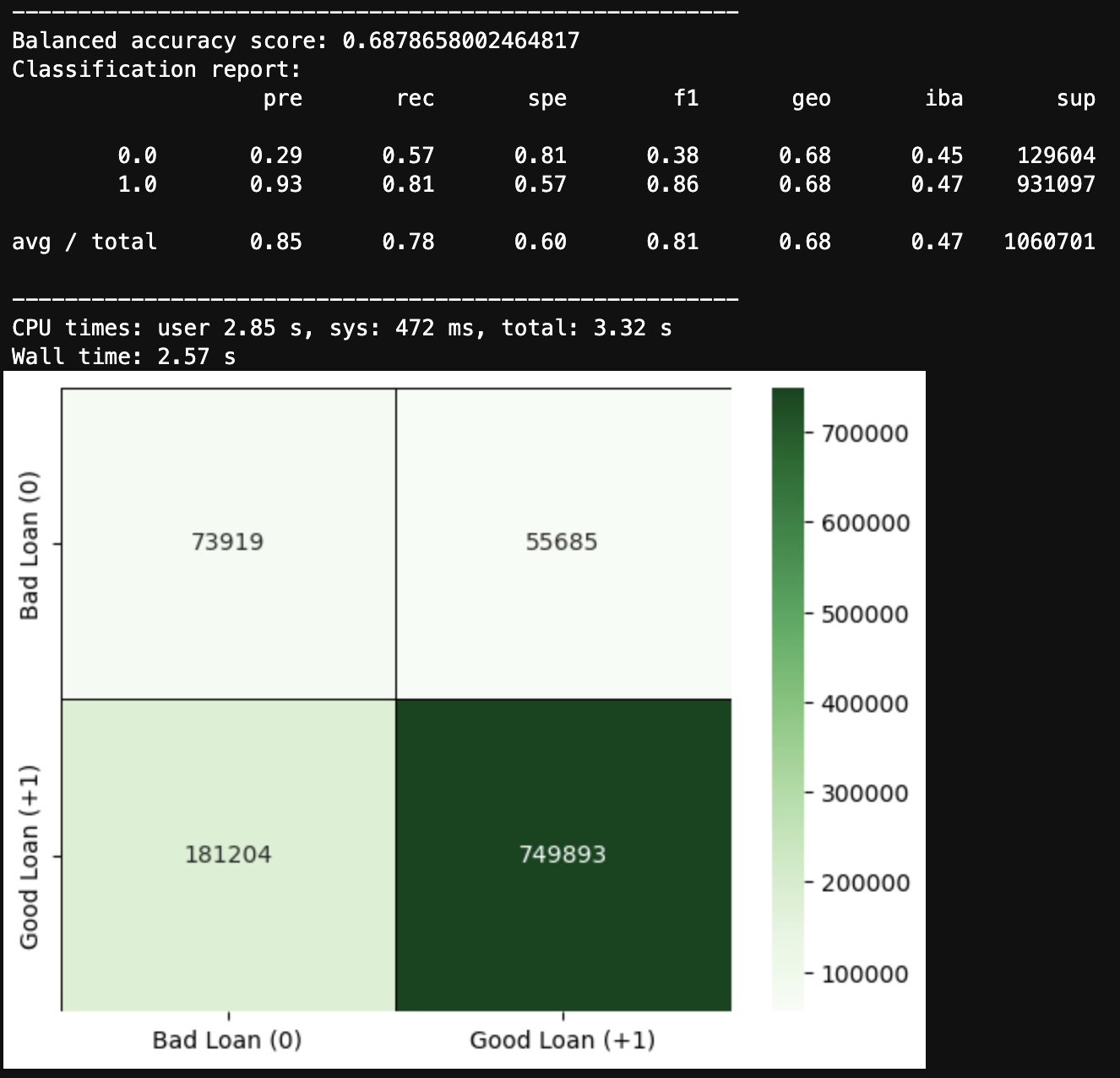

Following is the summary of results:


RandomUnderSampler, StandardScaler, PCA, LinearSVC classifier model:

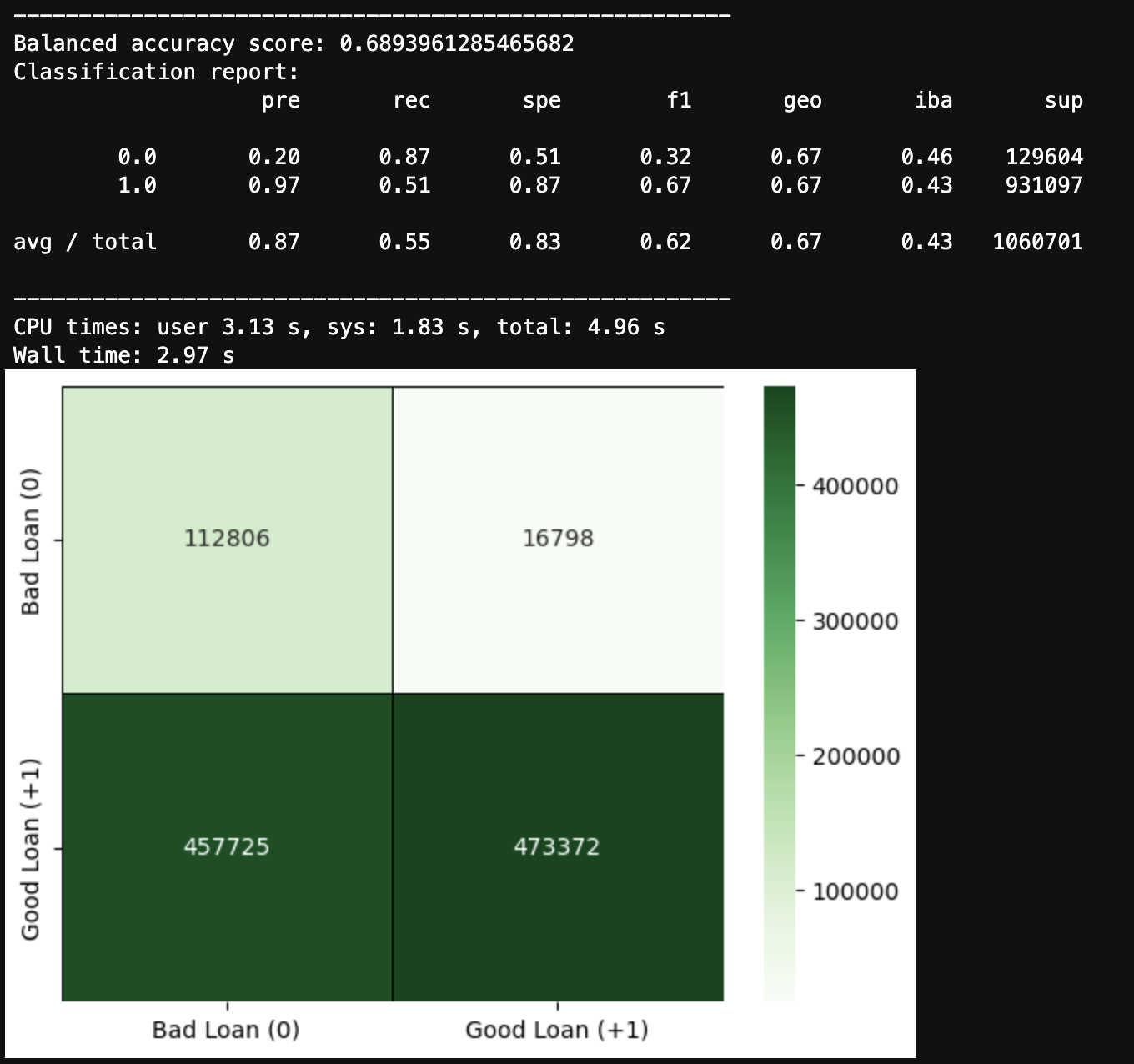
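RandomUnderSampler comes from the imbalanced-learn (imblearn) package. To show what it does without that dependency, here is a minimal NumPy-only sketch, assuming the default behaviour of shrinking every class to the size of the smallest one by sampling without replacement. `random_undersample` is a hypothetical helper, not an imblearn function, and the 0 = paid / 1 = default labels are illustrative.

```python
import numpy as np


def random_undersample(X, y, random_state=None):
    """Randomly drop rows of the larger classes until every class has as many
    rows as the smallest one (sampling without replacement)."""
    rng = np.random.default_rng(random_state)
    classes, counts = np.unique(y, return_counts=True)
    n_min = counts.min()
    # For each class, pick n_min of its row indices at random.
    keep = np.concatenate([
        rng.choice(np.flatnonzero(y == c), size=n_min, replace=False)
        for c in classes
    ])
    keep.sort()  # keep the surviving rows in their original order
    return X[keep], y[keep]


# Toy imbalanced labels: 90 "paid" (0) vs 10 "default" (1).
X = np.arange(200).reshape(100, 2)
y = np.array([0] * 90 + [1] * 10)
X_res, y_res = random_undersample(X, y, random_state=42)
print(np.bincount(y_res))  # [10 10]
```

In a real pipeline, imblearn's own `RandomUnderSampler` can be placed in an `imblearn.pipeline.Pipeline` (or `make_pipeline`) ahead of StandardScaler, PCA, and LinearSVC; imblearn pipelines apply samplers only during `fit`, so the test set keeps its original class balance.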

StandardScaler, PCA, LinearSVC classifier model:

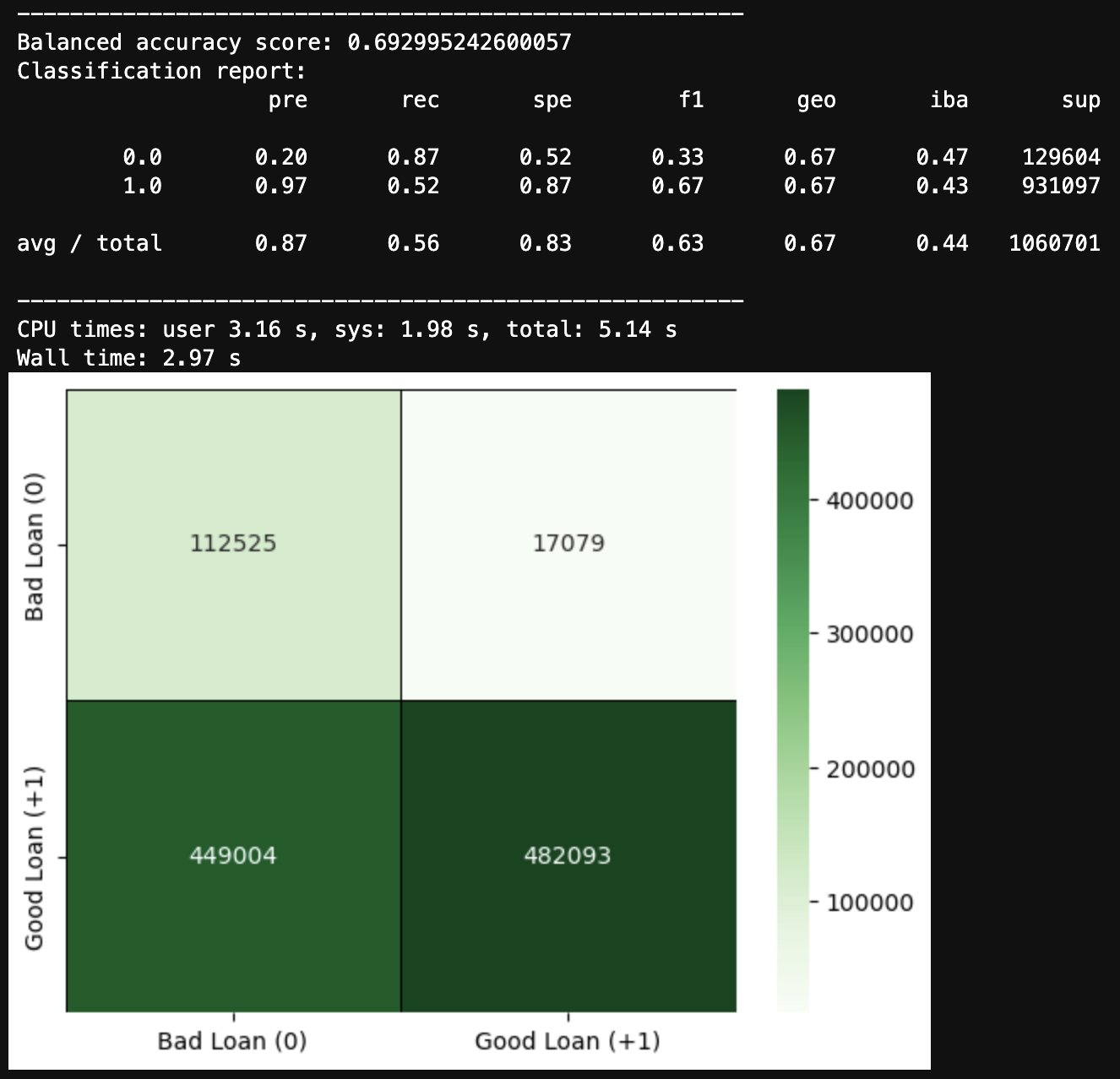

StandardScaler, PCA, LogisticRegression model:

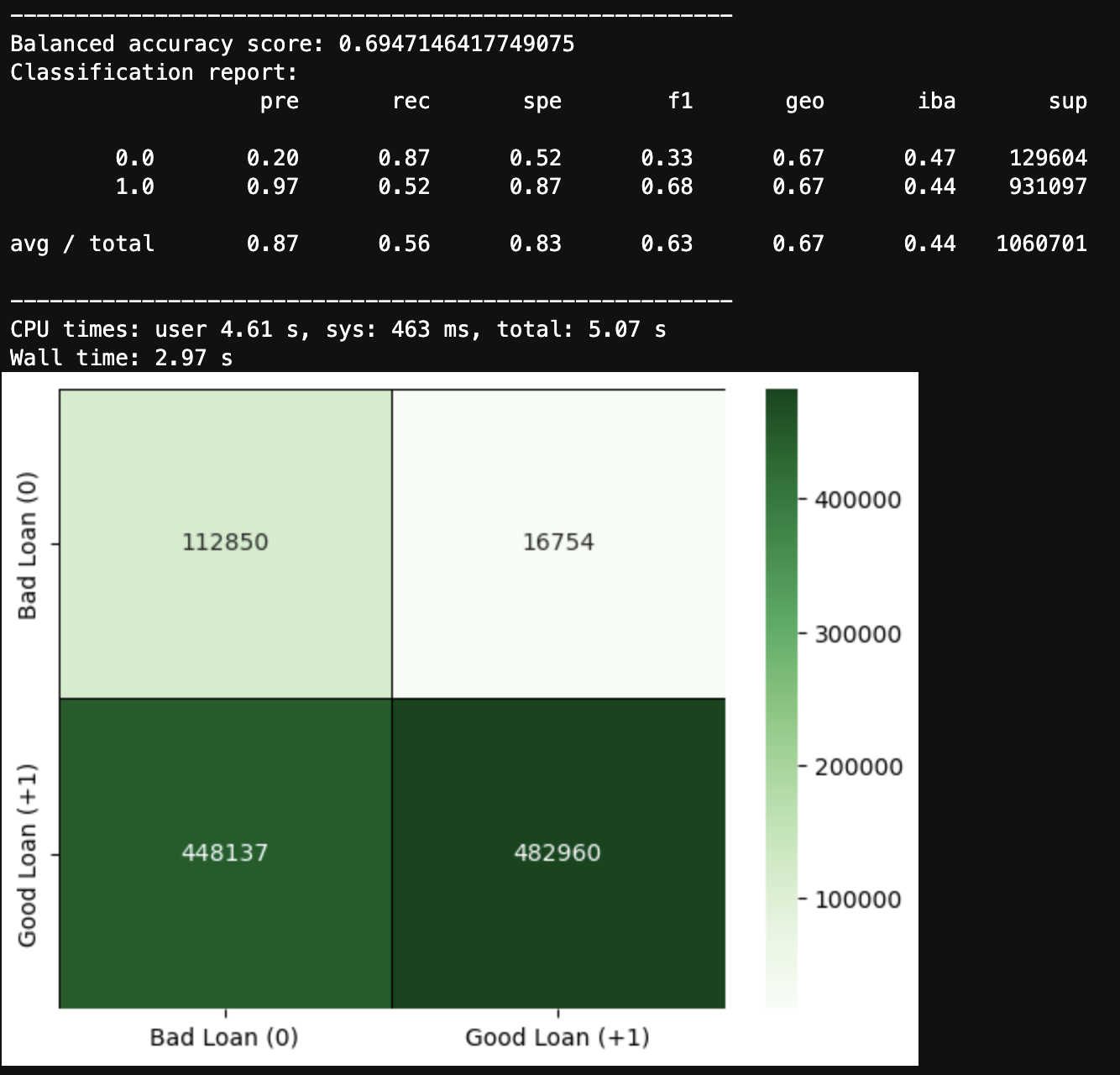
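A hedged sketch of how scaler/PCA/classifier combinations like the ones above can be compared side by side: each classifier is wrapped in a scikit-learn pipeline with StandardScaler and PCA (95% variance) and scored with balanced accuracy on a held-out split. The data is synthetic and imbalanced (about 90% class 0), and the `max_iter` values are assumptions chosen to reduce convergence warnings, so the scores say nothing about the project's results.

```python
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import balanced_accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import LinearSVC

# Synthetic, imbalanced stand-in for the loan data (roughly 90% class 0).
X, y = make_classification(n_samples=3_000, n_features=40, n_informative=10,
                           weights=[0.9], random_state=1)
# stratify=y keeps the same class ratio in the train and test splits.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, stratify=y, random_state=1)

models = {
    "LinearSVC": LinearSVC(max_iter=5_000, random_state=1),
    "LogisticRegression": LogisticRegression(max_iter=1_000),
}

scores = {}
for name, clf in models.items():
    # The scaler and PCA are fitted on the training split only, inside the pipeline.
    pipe = make_pipeline(StandardScaler(),
                         PCA(n_components=0.95, svd_solver="full"),
                         clf)
    pipe.fit(X_train, y_train)
    scores[name] = balanced_accuracy_score(y_test, pipe.predict(X_test))
    print(f"{name}: balanced accuracy = {scores[name]:.3f}")
```

Balanced accuracy is used here instead of plain accuracy because, with a 90/10 split, a model that always predicts "paid" would already reach about 90% accuracy.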

StandardScaler, PCA, hyperparameter-tuned XGBClassifier model:
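The tuning details for the XGBClassifier are not shown here, so the following is only a sketch of grid-search tuning inside a StandardScaler/PCA pipeline. To keep it runnable without the xgboost package, it tunes scikit-learn's GradientBoostingClassifier as a stand-in, and the grid values are assumptions. Because `XGBClassifier` implements the scikit-learn estimator interface, it can replace the `clf` step; `n_estimators`, `max_depth`, and `learning_rate` are also XGBClassifier parameters.

```python
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Small synthetic, imbalanced dataset so the search finishes quickly.
X, y = make_classification(n_samples=600, n_features=30, n_informative=8,
                           weights=[0.85], random_state=7)

pipe = Pipeline([
    ("scale", StandardScaler()),
    ("pca", PCA(n_components=0.95, svd_solver="full")),
    # Stand-in booster; xgboost.XGBClassifier could be swapped in here.
    ("clf", GradientBoostingClassifier(random_state=7)),
])

# Step-prefixed names ("clf__...") route each value to the classifier step.
param_grid = {
    "clf__n_estimators": [50, 100],
    "clf__max_depth": [2, 3],
    "clf__learning_rate": [0.05, 0.1],
}

# 2 x 2 x 2 = 8 combinations, each evaluated with 3-fold cross-validation.
search = GridSearchCV(pipe, param_grid, scoring="balanced_accuracy", cv=3)
search.fit(X, y)
print(search.best_params_)
print(f"best CV balanced accuracy: {search.best_score_:.3f}")
```

Per-combination results are stored in `search.cv_results_`; older tutorials that read `grid_scores_` fail with an AttributeError because scikit-learn removed that attribute in favour of `cv_results_`.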

This project uses JupyterLab v3.4.4 and Python 3.9.13 (packaged by conda-forge | main, May 27 2022, 17:01:00) with the following packages:

- sys - module that provides access to some variables used or maintained by the interpreter and to functions that interact strongly with the interpreter.

- os - module that provides a portable way of using operating-system-dependent functionality.

- opendatasets - Python library for downloading datasets from online sources such as Kaggle and Google Drive with a single Python command.

- NumPy - open-source Python library for working with arrays; it provides multidimensional array and matrix data structures along with functions for linear algebra, Fourier transforms, and matrices.

- pandas - software library written for the Python programming language for data manipulation and analysis.

- scikit-learn - open-source machine learning library that supports supervised and unsupervised learning; it provides tools for model fitting, data preprocessing, model selection, model evaluation, and many other utilities.

- Path - from pathlib, object-oriented filesystem paths; Path instantiates a concrete path for the platform the code is running on.

- DateOffset - from pandas, the standard kind of date increment used for a date range.

- confusion_matrix - from sklearn.metrics, computes a confusion matrix to evaluate the accuracy of a classification; the confusion matrix C is such that `C[i, j]` equals the number of observations known to be in group i and predicted to be in group j.

- balanced_accuracy_score - from sklearn.metrics, computes the balanced accuracy in binary and multiclass classification problems to deal with imbalanced datasets; it is defined as the average of the recall obtained on each class.

- f1_score - from sklearn.metrics, computes the F1 score, also known as balanced F-score or F-measure; it can be interpreted as the harmonic mean of precision and recall, reaching its best value at 1 and its worst at 0.

- classification_report_imbalanced - from imblearn.metrics, compiles the metrics precision/recall/specificity, geometric mean, and index balanced accuracy of the geometric mean.

- SVMs - from scikit-learn, support vector machines (SVMs) are a set of supervised learning methods used for classification, regression, and outlier detection.

- LogisticRegression - from sklearn.linear_model, a logistic regression (aka logit, MaxEnt) classifier; it implements regularized logistic regression using the ‘liblinear’ library and the ‘newton-cg’, ‘sag’, ‘saga’, and ‘lbfgs’ solvers. Regularization is applied by default.

- AdaBoostClassifier - from sklearn.ensemble, a meta-estimator that begins by fitting a classifier on the original dataset and then fits additional copies of the classifier on the same dataset, adjusting the weights of incorrectly classified instances so that subsequent classifiers focus more on difficult cases.

- KNeighborsClassifier - from sklearn.neighbors, a classifier implementing the k-nearest neighbors vote.

- StandardScaler - from sklearn.preprocessing, standardizes features by removing the mean and scaling to unit variance.

- hvplot - high-level plotting API built on HoloViews that provides a general and consistent API for plotting data in the numerous formats listed in its documentation.

- matplotlib.pyplot - state-based interface to matplotlib that provides an implicit, MATLAB-like way of plotting; it also opens figures on your screen and acts as the figure GUI manager.

- Seaborn - library for making statistical graphics in Python; it builds on top of matplotlib and integrates closely with pandas data structures.

- pickle - Python object serialization; the module implements binary protocols for serializing and de-serializing a Python object structure. “Pickling” is the process whereby a Python object hierarchy is converted into a byte stream, and “unpickling” is the inverse operation.

- joblib.dump - persists an arbitrary Python object into one file.
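To make the metric definitions above concrete, here is a tiny hand-checkable example (toy labels, not project data) using `confusion_matrix`, `balanced_accuracy_score`, and `f1_score`. With 6 true class-0 and 4 true class-1 observations, the per-class recalls are 5/6 and 2/4, so balanced accuracy is their mean, 2/3. For class 1, precision is 2/3 and recall is 1/2, so F1 = 2PR/(P + R) = 4/7.

```python
from sklearn.metrics import balanced_accuracy_score, confusion_matrix, f1_score

# Toy labels: 0 = loan repaid, 1 = default (chosen for illustration only).
y_true = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
y_pred = [0, 0, 0, 0, 0, 1, 1, 1, 0, 0]

cm = confusion_matrix(y_true, y_pred)
print(cm)
# [[5 1]
#  [2 2]]   rows = true class, columns = predicted class

# Balanced accuracy = mean per-class recall = (5/6 + 2/4) / 2
print(round(balanced_accuracy_score(y_true, y_pred), 4))  # 0.6667

# F1 for the positive class 1: precision 2/3, recall 1/2, so F1 = 4/7
print(round(f1_score(y_true, y_pred), 4))  # 0.5714
```

Plain accuracy on the same labels is 7/10 = 0.7, higher than the balanced accuracy, because the majority class dominates the count.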

- MacBook Pro (16-inch, 2021)
- Chip: Apple M1 Max
- macOS Ventura version 13.0.1
- Homebrew 3.6.11
- Homebrew/homebrew-core (git revision 01c7234a8be; last commit 2022-11-15)
- Homebrew/homebrew-cask (git revision b177dd4992; last commit 2022-11-15)
- Python Platform: macOS-13.0.1-arm64-arm-64bit
- Python version 3.9.15 packaged by conda-forge | (main, Nov 22 2022, 08:52:10)
- scikit-learn 1.1.3
- pandas 1.5.1
- NumPy 1.21.5
- pip 22.3 from /opt/anaconda3/lib/python3.9/site-packages/pip (python 3.9)
- git version 2.37.2

In the terminal, navigate to the directory where you want to install this application from the repository and enter the following command:

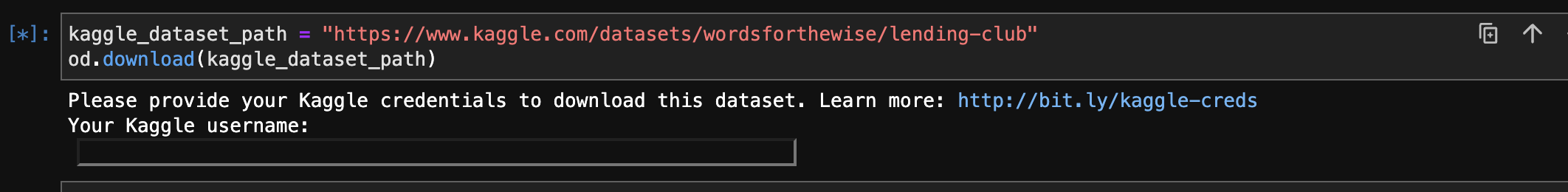

`git clone git@github.com:prpercy/LendingGenie.git`

You will require Kaggle API credentials to run the JupyterLab notebook.

How to Use Kaggle - Public API Kaggle

Kaggle - opendatasets Kaggle

`pip install opendatasets --upgrade`

`pip install kaggle`

`git lfs install`

To use Kaggle's public API, you must first authenticate using an API token. From the site header, click on your user profile picture, then on “My Account” from the dropdown menu. This will take you to your Kaggle account settings. Scroll down to the section of the page labelled API:

To create a new token, click on the “Create New API Token” button. This will download a fresh authentication token onto your machine.

Once you have obtained your Kaggle credentials and downloaded the associated 'kaggle.json' file, proceed to the usage instructions below.

From the terminal, run the installed application in JupyterLab, the web-based interactive development environment (IDE), by typing at the prompt:

`jupyter lab`

The file you will run is:

`lending_genie.ipynb`

Once it starts to run, you will be asked to enter your credentials.

If running the code generates the following error:

`FileExistsError: [Errno 17] File exists: 'models_trained'`

You will need to delete the 'models_trained' directory before running the notebook again.
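This error is what Python raises when code tries to create a directory that already exists, for example with `os.mkdir`; we assume the notebook creates 'models_trained' that way. As an alternative to deleting the folder by hand, a sketch like the following creates it idempotently (optionally clearing it first) and then saves and reloads a model with joblib. `prepare_model_dir` and the model file name are hypothetical, and the demo writes to a temporary directory rather than the project folder.

```python
import shutil
import tempfile
from pathlib import Path

import joblib
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression


def prepare_model_dir(path="models_trained", clear=False):
    """Create the model folder without raising FileExistsError on reruns.

    With clear=True, any previously saved files are removed first, which is
    equivalent to deleting the directory by hand.
    """
    path = Path(path)
    if clear and path.exists():
        shutil.rmtree(path)
    path.mkdir(parents=True, exist_ok=True)  # no error if it already exists
    return path


with tempfile.TemporaryDirectory() as tmp:
    model_dir = prepare_model_dir(Path(tmp) / "models_trained")
    prepare_model_dir(Path(tmp) / "models_trained")  # rerun: no FileExistsError

    X, y = make_classification(n_samples=200, n_features=5, random_state=0)
    model = LogisticRegression().fit(X, y)

    # Persist and reload the fitted model (pickle.dump/pickle.load also work).
    model_path = model_dir / "logistic_regression.joblib"
    joblib.dump(model, model_path)
    restored = joblib.load(model_path)
    print(bool((restored.predict(X) == model.predict(X)).all()))  # True
```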

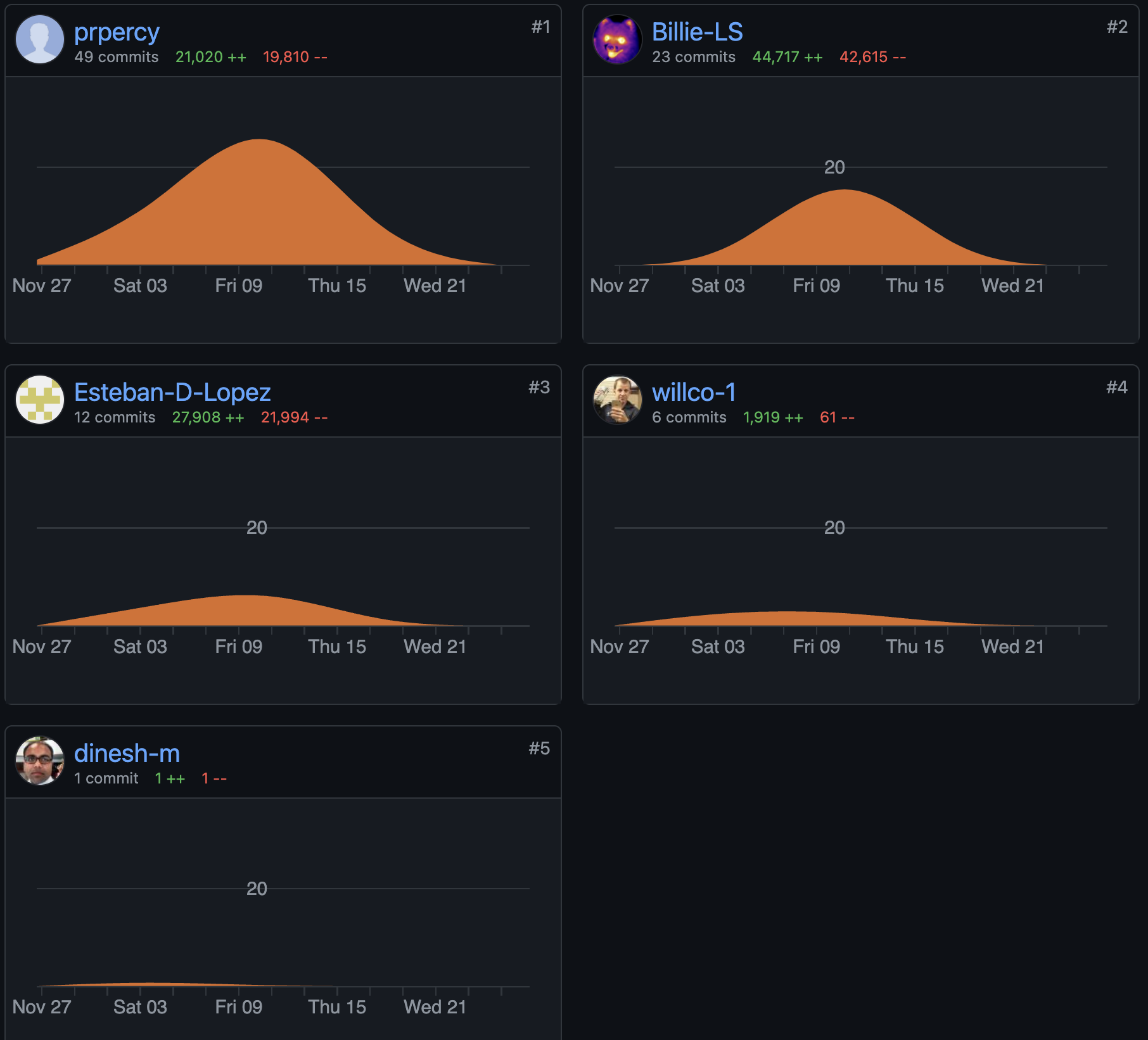

Version control can be reviewed at:

https://github.com/prpercy/LendingGenie

Lopez, Esteban LinkedIn @GitHub

Mandal, Dinesh LinkedIn @GitHub

Patil, Pravin LinkedIn @GitHub

Loki 'billie' Skylizard LinkedIn @GitHub

Vinicio De Sola LinkedIn @GitHub

Santiago Pedemonte LinkedIn @GitHub

EDA — Exploratory Data Analysis Medium

splitting data MungingData

dealing with compression = gzip Stack Overflow

numeric only corr() Stack Overflow

find NaN Stack Overflow

color palette seaborn

horizontal bar graph seaborn

dataframe columns list geeksforgeeks

dataframe drop duplicates geeksforgeeks

PCA — how to choose the number of components mikulskibartosz

LinearSVC classifier DataTechNotes

In Depth: Parameter tuning for SVC All things AI

ConvergenceWarning: Liblinear failed to converge Stack Overflow

Measure runtime of a Jupyter Notebook code cell Stack Overflow

Building a Machine Learning Model in Python Frank Andrade

KNeighborsClassifier() scikit-learn

Linear Models scikit-learn

LogisticRegression() scikit-learn

Classifier comparison scikit-learn

XGB Classifier AnalyticsVidhya

Install XGBoost dmlc_XGBoost

XGBoost dmlc_XGBoost

XGBoost towardsdatascience

Using XGBoost in Python Tutorial datacamp

Kaggle - XGBoost classifier and hyperparameter tuning 85% Kaggle

How to Best Tune Multithreading Support for XGBoost in Python machinelearningmastery

AttributeError: 'GridSearchCV' object has no attribute 'grid_scores_' csdn.net

How to check models AUC score projectpro

Git Large File Storage git_lfs

git lfs is not a command Mac OS Stack Overflow

Histograms and Skewed Data Winder

Creating Histograms using Pandas Mode

Credit Risk Modelling [EDA & Classification] RStudio

Save and Load Machine Learning Models in Python with scikit-learn machinelearningmastery

Quick Hacks To Save Machine Learning Model using Pickle and Joblib AnalyticsVidhya

Kaggle - Lending Club data Kaggle

Kaggle - Lending Club defaulters predictions Kaggle

Kaggle - Lending Club categorical features analysis Stack Overflow

MIT License

Copyright (c) [2022] [Will Conyea, Esteban Lopez, Dinesh Mandal, Pravin Patil, Loki 'billie' Skylizard]

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.