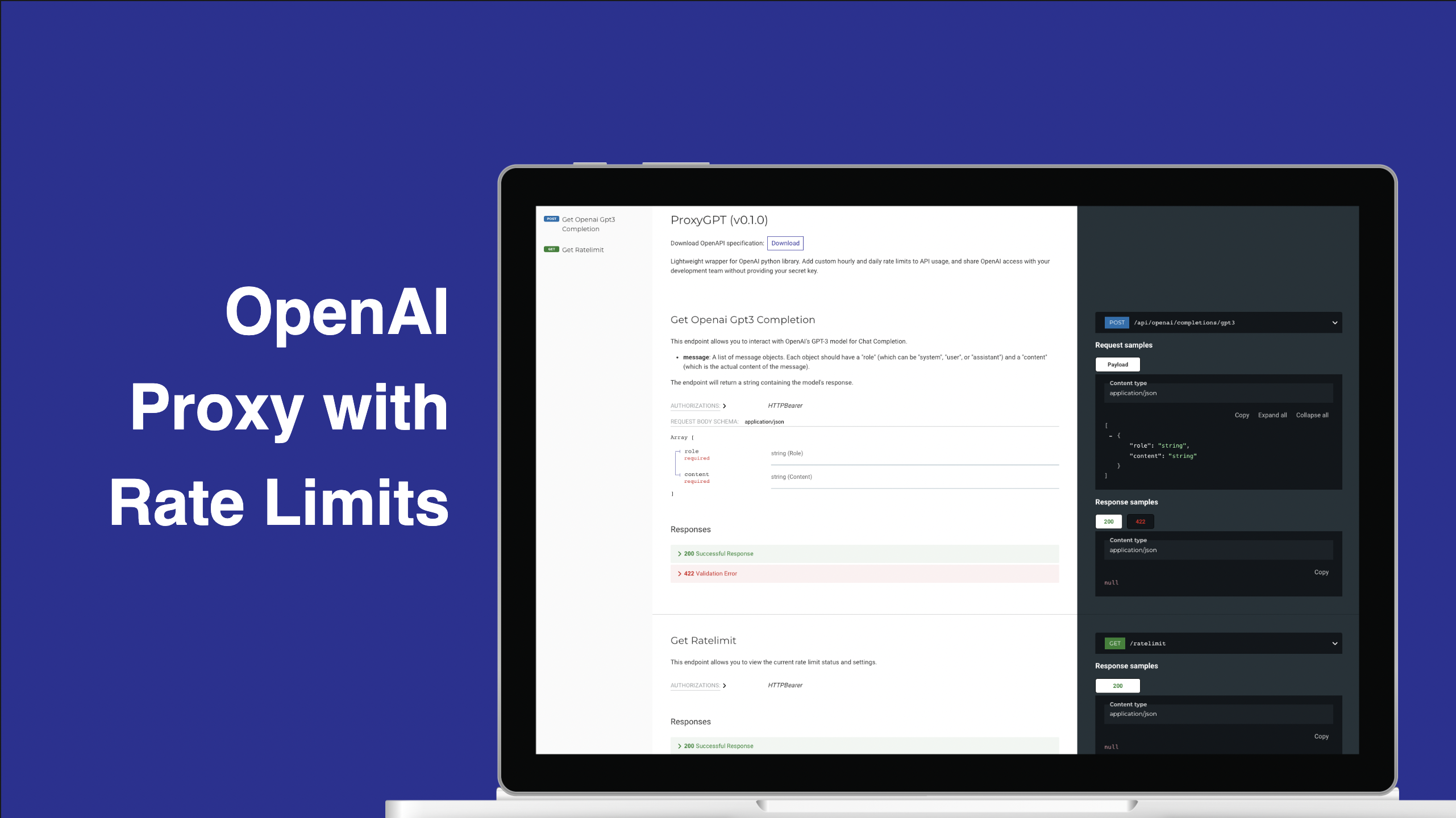

ProxyGPT is a lightweight, dockerized OpenAI API wrapper built with FastAPI. It lets you share OpenAI access with your team without handing out your secret key, and lets you confine that usage with custom hourly and daily rate limits while exposing only specific endpoints of the OpenAI API. Optional modules log and graph the content and results of API calls. Because your team authenticates with a ProxyGPT key rather than the OpenAI key, you can reset or revoke access for only the services or people using a specific ProxyGPT instance. You do not need to reset the original OpenAI API key, which could affect other projects if several services share the same key (a practice that is not recommended in production).

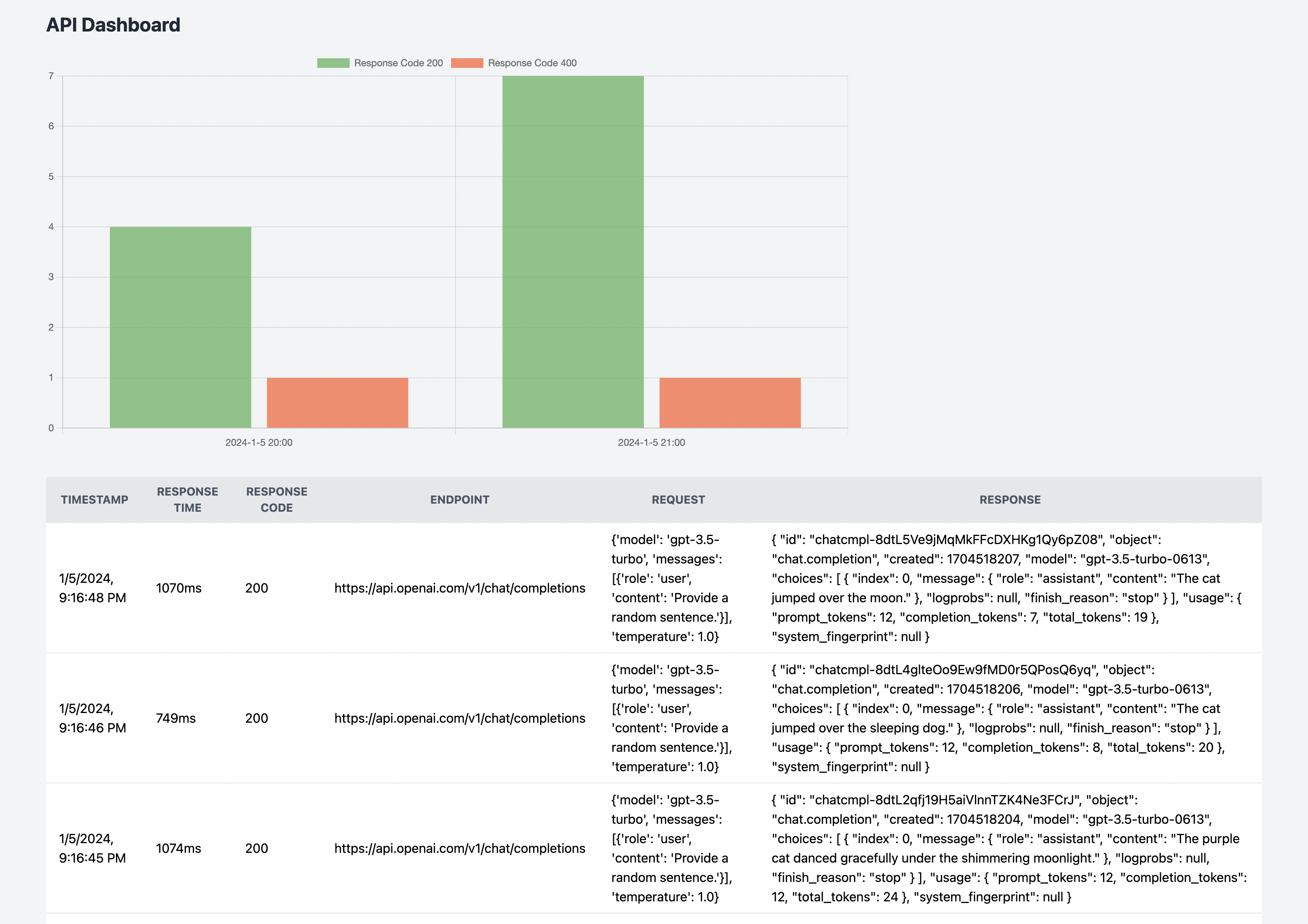

ProxyGPT comes with several modules, which add features beyond a simple proxy. These include logging and graphics: logging records the full request and response of each API call, and graphics produces a dashboard for analyzing the results of those calls.

See Installation to get started.

- First, download the repository.

- Next, configure the settings in settings.py:

  - USE_HOURLY_RATE_LIMIT (bool): enforce the hourly rate limit
  - USE_DAILY_RATE_LIMIT (bool): enforce the daily rate limit
  - INSECURE_DEBUG (bool): pass error details directly to the API client

  Both rate limits can be active and enforced simultaneously. There is also an INSTALLED_MODULES array, which you can edit to remove the modules that power features you do not need.

- Set the environment variables in .env. See Environment Variables.

- Run with or without Docker. See Running with Docker and Running without Docker.
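For the settings step above, a settings.py that enforces both rate limits with debug output disabled might look like the sketch below. The values are only examples, and the INSTALLED_MODULES entries are hypothetical identifiers for the logging and graphics modules; check the repository's settings.py for the real names.

```python
# settings.py (illustrative values only)

# Enforce a rolling one-hour limit; PROXYGPT_HOURLY_RATE_LIMIT must then be set in .env
USE_HOURLY_RATE_LIMIT = True

# Enforce a rolling one-day limit; PROXYGPT_DAILY_RATE_LIMIT must then be set in .env
USE_DAILY_RATE_LIMIT = True

# When True, error details are returned to the API client; keep False in production
INSECURE_DEBUG = False

# Hypothetical module identifiers; remove an entry to disable that feature
INSTALLED_MODULES = ["logging", "graphics"]
```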

If you wish to use ProxyGPT out of the box for only the gpt-3.5-turbo chat completion model, you can skip this section.

To customize ProxyGPT with new endpoints, add them in main.py, modeled on the implementation of get_openai_gpt3_completion. Make sure each new endpoint handles errors and logs API usage the same way get_openai_gpt3_completion does, so that its calls are counted toward the rate limits.
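The pattern a new endpoint should follow (log the call, forward it, translate failures into an error response) can be sketched without any framework. This is not the code in main.py: proxied_endpoint, the in-memory usage_log list, and the 502 status are hypothetical choices for illustration. In main.py these steps would live inside a FastAPI route function.

```python
from typing import Any, Callable, Dict, List, Tuple

Payload = Dict[str, Any]


def proxied_endpoint(
    upstream: Callable[[Payload], Payload],
    endpoint_name: str,
    usage_log: List[Dict[str, Any]],
) -> Callable[[Payload], Tuple[int, Payload]]:
    """Wrap a hypothetical upstream OpenAI call with usage logging and error handling."""

    def handler(payload: Payload) -> Tuple[int, Payload]:
        # Log before contacting OpenAI, so every attempt counts toward the
        # rate limit (the behavior this README describes).
        usage_log.append({"endpoint": endpoint_name, "payload": payload})
        try:
            return 200, upstream(payload)
        except Exception as exc:
            # 502 is this sketch's choice for "upstream call failed".
            return 502, {"detail": f"{endpoint_name} failed: {exc}"}

    return handler
```

For example, proxied_endpoint(call_embeddings, "embeddings", log) would return a handler for a new embeddings route, where call_embeddings is whatever function performs the actual OpenAI request.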

This project was developed with the goal of creating a simple and lightweight OpenAI wrapper with basic yet powerful logging, rate limiting, and graphing tools. Strong documentation, easy customizability, and comprehensive initialization checks are integrated throughout the codebase. As part of this simple design, the service uses a local SQLite database rather than long-term storage such as Docker volumes, so when running in Docker the stored data does not survive removal of the container. You can change this to suit your needs.

Note that the rate limits currently apply to every call to OpenAI: each call increases the rate count, whether or not it succeeded. To count only successful calls, move the log function so that it runs after the response from the OpenAI API has been validated.
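The difference between the two log placements can be sketched as follows. call_with_logging and the count_failed_calls flag are hypothetical, and treating a response containing an "error" key as invalid is an assumption made only for this example.

```python
from typing import Any, Callable, Dict, List


def call_with_logging(
    upstream: Callable[[], Dict[str, Any]],
    usage_log: List[str],
    count_failed_calls: bool = True,
) -> Dict[str, Any]:
    """Call a hypothetical upstream function and record the call for rate limiting.

    count_failed_calls=True logs before the request, so failures still count
    (the current behavior). False logs only after the response is validated.
    """
    if count_failed_calls:
        usage_log.append("call")  # logged up front: every attempt counts

    response = upstream()  # may raise on network or API errors
    if "error" in response:  # assumed validation rule for this sketch
        raise ValueError(f"OpenAI returned an error: {response['error']}")

    if not count_failed_calls:
        usage_log.append("call")  # logged only after successful validation
    return response
```

With count_failed_calls=False, a failed request leaves the usage log untouched, so it no longer consumes the caller's hourly or daily allowance.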

Hourly and daily rate limits are not tied to calendar hours or days. Instead, they use rolling windows: the last 3600 seconds for the hourly limit and the last 86400 seconds for the daily limit. Usage counts therefore do not reset at the start of a new hour or day; each call simply stops counting toward a limit once it is more than one hour (or one day) old.
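A rolling window amounts to counting the calls whose timestamps fall within the last N seconds. The sketch below does this with Python's built-in sqlite3 module; the api_calls table and its schema are hypothetical and are not ProxyGPT's actual database layout.

```python
import sqlite3
import time
from typing import Optional

HOUR_SECONDS = 3600
DAY_SECONDS = 86400


def create_usage_table(conn: sqlite3.Connection) -> None:
    # Hypothetical schema: one row per call, stamped with Unix time in seconds.
    conn.execute("CREATE TABLE IF NOT EXISTS api_calls (called_at REAL NOT NULL)")


def log_call(conn: sqlite3.Connection, now: Optional[float] = None) -> None:
    conn.execute(
        "INSERT INTO api_calls (called_at) VALUES (?)",
        (time.time() if now is None else now,),
    )


def calls_in_window(
    conn: sqlite3.Connection, window_seconds: int, now: Optional[float] = None
) -> int:
    """Count calls made within the last `window_seconds` (a rolling window)."""
    now = time.time() if now is None else now
    (count,) = conn.execute(
        "SELECT COUNT(*) FROM api_calls WHERE called_at > ?",
        (now - window_seconds,),
    ).fetchone()
    return count
```

With a call logged at t=0, calls_in_window(conn, HOUR_SECONDS, now=3599) still counts it, while the same query at now=3601 does not; the daily window keeps counting it until 86400 seconds have passed.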

You can use both limits together, only one of them, or neither. They are separate checks: if either is active and usage exceeds it, ProxyGPT responds with status code 429 (Too Many Requests).
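The two independent checks can be expressed as a small function. rate_limit_status is a hypothetical helper, not ProxyGPT's code; it assumes the counts passed in exclude the incoming request, and it represents a disabled limit as None.

```python
from http import HTTPStatus
from typing import Optional


def rate_limit_status(
    hourly_count: int,
    daily_count: int,
    hourly_limit: Optional[int],
    daily_limit: Optional[int],
) -> int:
    """Return 429 if any enabled limit is already reached, otherwise 200.

    A limit of None means that limit is disabled (its USE_*_RATE_LIMIT
    setting is False). Counts are calls already made in each rolling window.
    """
    if hourly_limit is not None and hourly_count >= hourly_limit:
        return HTTPStatus.TOO_MANY_REQUESTS
    if daily_limit is not None and daily_count >= daily_limit:
        return HTTPStatus.TOO_MANY_REQUESTS
    return HTTPStatus.OK
```

Because the checks are separate, a request can be rejected by the hourly limit even when plenty of daily allowance remains, and vice versa.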

You can view the enabled rate limits and current usage at the /ratelimit endpoint. Use /docs or /redoc to explore all endpoints exposed by ProxyGPT.

Finally, note that errors raised in the code may be passed directly to the API client for easy debugging. However, this increases the risk of leaking secret keys stored on the server. You can turn this off by setting INSECURE_DEBUG to False in settings.py.
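One way to picture the INSECURE_DEBUG switch is a function that decides what an error response contains. error_body and the {"detail": ...} response shape are assumptions for this sketch, not ProxyGPT's actual handler.

```python
import traceback
from typing import Dict

INSECURE_DEBUG = False  # mirrors the setting in settings.py


def error_body(exc: Exception, insecure_debug: bool = INSECURE_DEBUG) -> Dict[str, str]:
    """Build the JSON body returned to the client when a request fails."""
    if insecure_debug:
        # Full details aid debugging but may expose server-side secrets.
        return {
            "detail": str(exc),
            "traceback": "".join(
                traceback.format_exception(type(exc), exc, exc.__traceback__)
            ),
        }
    # Generic message: nothing from the exception reaches the client.
    return {"detail": "Internal server error"}
```

With INSECURE_DEBUG set to False, an exception whose message happens to contain a key never leaves the server; the details can still be written to the server's own logs.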

Required:

- OPENAI_API_KEY (str): your secret OpenAI API key
- PROXYGPT_API_KEY (str): your strong custom API key for the proxy

Optional:

- PROXYGPT_HOURLY_RATE_LIMIT (int), needed if USE_HOURLY_RATE_LIMIT is enabled in settings.py: the maximum number of calls to OpenAI allowed through the proxy within a rolling one-hour window
- PROXYGPT_DAILY_RATE_LIMIT (int), needed if USE_DAILY_RATE_LIMIT is enabled in settings.py: the maximum number of calls to OpenAI allowed through the proxy within a rolling one-day window
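The requirements above lend themselves to a startup check. load_env is a hypothetical helper, not part of ProxyGPT; it keeps PROXYGPT_API_KEY as a raw string because this sketch does not assume the format used for multiple keys.

```python
from typing import Any, Dict, Mapping


def load_env(env: Mapping[str, str], use_hourly: bool, use_daily: bool) -> Dict[str, Any]:
    """Check ProxyGPT's environment variables and return the parsed values.

    use_hourly / use_daily mirror USE_HOURLY_RATE_LIMIT / USE_DAILY_RATE_LIMIT.
    """
    missing = [k for k in ("OPENAI_API_KEY", "PROXYGPT_API_KEY") if not env.get(k)]
    if missing:
        raise RuntimeError("Missing required environment variables: " + ", ".join(missing))

    def parse_limit(name: str) -> int:
        raw = env.get(name)
        if raw is None:
            raise RuntimeError(f"{name} must be set when its rate limit is enabled")
        try:
            return int(raw)
        except ValueError:
            raise RuntimeError(f"{name} must be an integer, got {raw!r}") from None

    return {
        "openai_api_key": env["OPENAI_API_KEY"],
        # Kept as a raw string: the multiple-key format is not assumed here.
        "proxygpt_api_key": env["PROXYGPT_API_KEY"],
        # None means the corresponding limit is disabled in settings.py.
        "hourly_limit": parse_limit("PROXYGPT_HOURLY_RATE_LIMIT") if use_hourly else None,
        "daily_limit": parse_limit("PROXYGPT_DAILY_RATE_LIMIT") if use_daily else None,
    }
```

Called at startup as load_env(os.environ, USE_HOURLY_RATE_LIMIT, USE_DAILY_RATE_LIMIT), a check like this makes a misconfigured .env fail immediately rather than on the first request.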

Set the environment variables in .env first, then build and run the image:

```bash
docker build -f Dockerfile -t proxygpt:latest .
docker run --env-file .env -p 8000:8000 proxygpt:latest
```

Add -d to the docker run command to run the container in detached mode.

ProxyGPT is now online, and can be accessed at http://127.0.0.1:8000. Visit http://127.0.0.1:8000/docs to explore the auto-generated documentation.

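```bash
python -m venv venv
source venv/bin/activate
pip install -r requirements.txt
touch .env
```

Add the variables to the .env file (see example.env and Environment Variables), then export them into your shell:

```bash
export $(cat .env | xargs)
```

Start the server with uvicorn for development (reloads on code changes):

```bash
uvicorn main:app --reload
```

or with gunicorn and uvicorn workers for production:

```bash
gunicorn -w 4 -k uvicorn.workers.UvicornWorker main:app -b 0.0.0.0:8000
```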

ProxyGPT is now online, and can be accessed at http://127.0.0.1:8000. Visit http://127.0.0.1:8000/docs to explore the auto-generated documentation.

v0.1.0-beta:

- Initialized Project for Release

v0.1.0:

- Application Tested in Beta, README.md updated

v0.1.1:

- Allow passing in multiple API keys for PROXYGPT_API_KEY

v0.2.0-beta:

- Create modules, starting with logging and graphics

Planned improvements:

- Add unit tests to the entire codebase
- Allow multiple API keys with different rate limits in PROXYGPT_API_KEY
- Allow use of a production database
- Add a CORS origins restriction option through middleware
- Convert endpoints to async so logging and returning data can be executed concurrently
- Add Docker volume integration for long-term database storage
- Add further abstraction for increased customizability (such as adding the database name to settings.py)