This research project explores the application of generative modeling techniques to power analyses and statistical testing.

In a scientific study, one typically aims for a statistical power of 80%, implying that a true effect in the population is detected with an 80% chance. Power calculations allow researchers to determine the minimal number of subjects needed to obtain the targeted statistical power. As such, power calculations avoid spending time and money on studies that are futile, and also prevent wasting time and money on extra subjects when sufficient power is already available.

Mounting evidence over the last few years suggests that published neuroscience research suffers from low power, especially published fMRI experiments. Not only does low power decrease the chance of detecting a true effect, it also reduces the chance that a statistically significant result indicates a true effect (Ioannidis, 2005). Put another way, findings with the least power will be the least reproducible, and thus a (prospective) power analysis is a critical component of any paper.

Source: Power and sample size calculations for fMRI studies based on the prevalence of active peaks

Our current scan rate is $563 per hour.

Source: University of Michigan Functional Magnetic Resonance Imaging Laboratory

Synthetic functional magnetic resonance images generated with state-of-the-art generative modeling techniques could serve as a low-cost replacement for pilot data used in power analyses.

WANTED: the smallest sample size needed for a study with 80% power

NOT WANTED:

- Wasted time/money on futile experiments

- Wasted resources on extra subjects

- Underpowered studies

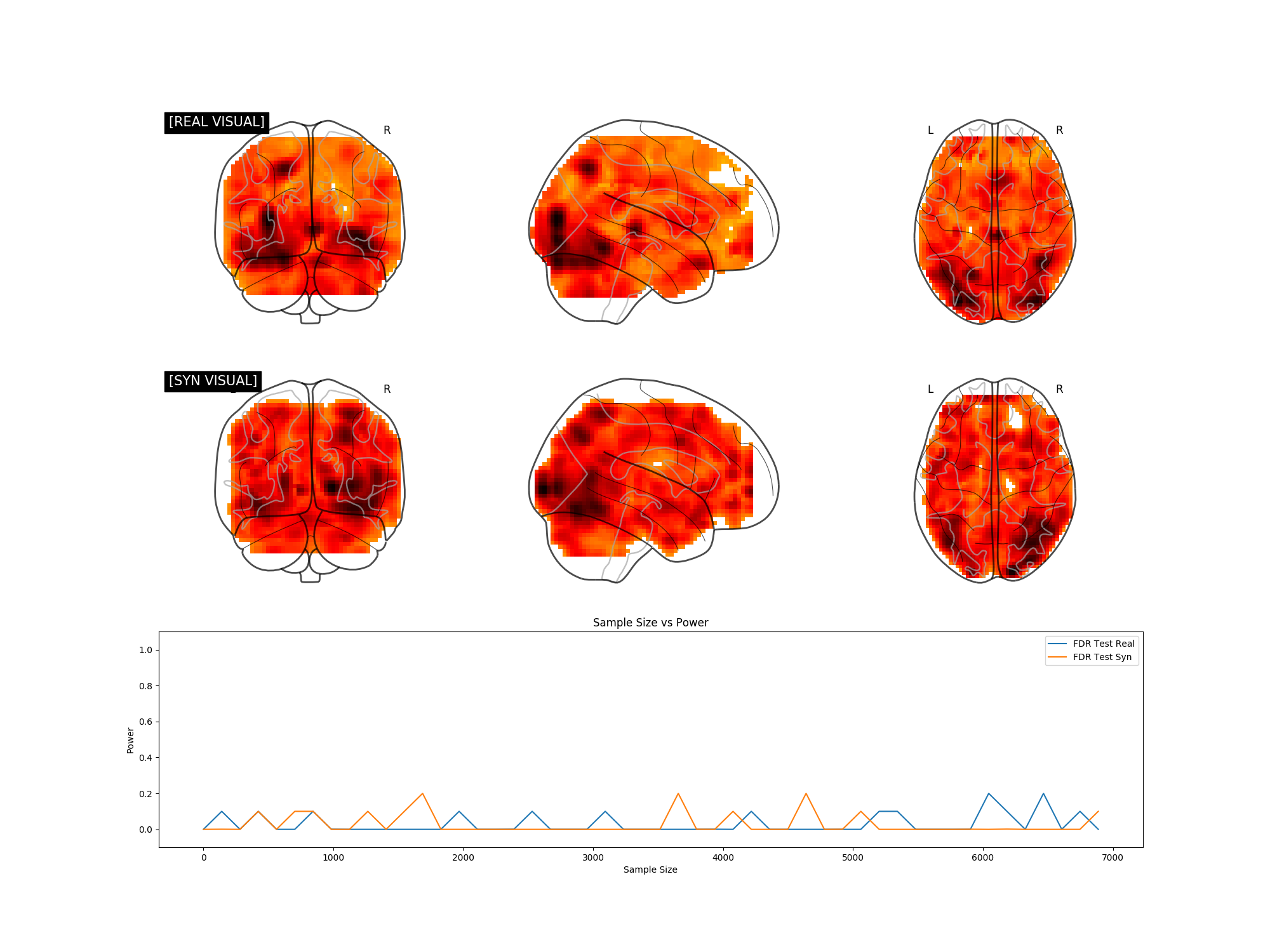

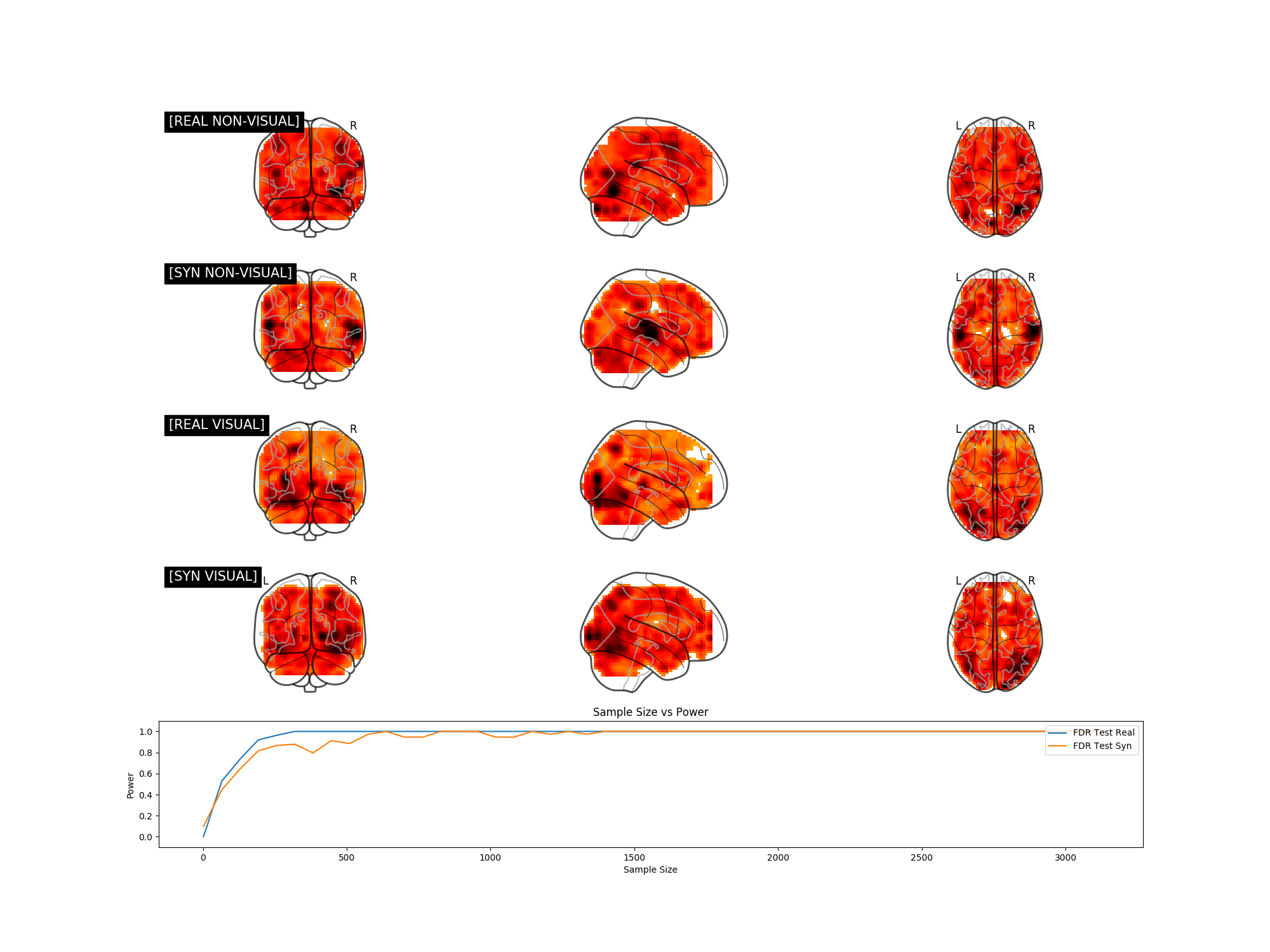

We explore the potential of synthetic power analyses through univariate, multivariate, and neuroimaging experiments. In the figures below, if the synthetic and real power curves have the same shape, then using synthetic data in our power analyses will yield a sample size estimate similar to the one we would have obtained with real data.

If we can train a generative model to synthesize realistic new data, doesn't that mean our training set contains more than enough data for a simple power analysis? Our fMRI generative modeling techniques can synthesize cognitive process label combinations that do not exist in the original dataset. Thus we can formulate power analyses with data that has never been collected before.

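- Use bootstrap resampling to approximate the distribution of the t-test statistic at a given sample size.

- Compute the p-value for every bootstrapped test statistic.

- Power is approximately mean[1(p_i < alpha)], i.e. the fraction of bootstrap replicates whose p-value falls below the significance level alpha.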
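The bootstrap procedure above can be sketched in Python with NumPy and SciPy. This is a minimal illustration under stated assumptions, not the project's implementation: the function name `bootstrap_power`, the use of Student's two-sample t-test (`scipy.stats.ttest_ind`), and the default of 2,000 replicates are all assumptions. Each replicate resamples `n` subjects per group, with replacement, from pilot (or synthetic) data.

```python
import numpy as np
from scipy import stats


def bootstrap_power(x, y, n, alpha=0.05, n_boot=2000, seed=0):
    """Hypothetical helper: bootstrap estimate of two-sample t-test power.

    x, y: 1-D arrays of pilot (or synthetic) measurements for the two groups.
    n:    candidate number of subjects per group.
    """
    rng = np.random.default_rng(seed)
    # Draw every replicate at once: arrays of shape (n_boot, n) per group.
    xb = rng.choice(np.asarray(x, dtype=float), size=(n_boot, n), replace=True)
    yb = rng.choice(np.asarray(y, dtype=float), size=(n_boot, n), replace=True)
    # One t-test per row (replicate); pvalue has shape (n_boot,).
    p = stats.ttest_ind(xb, yb, axis=1).pvalue
    # Power ~ mean[1(p_i < alpha)]
    return float(np.mean(p < alpha))


# Illustrative pilot data only (simulated here, not real measurements).
rng = np.random.default_rng(1)
pilot_a = rng.normal(0.0, 1.0, size=40)
pilot_b = rng.normal(0.8, 1.0, size=40)
for n in (10, 20, 30, 40):
    print(n, bootstrap_power(pilot_a, pilot_b, n))
```

Scanning `n` this way traces out an empirical power curve; the smallest `n` whose estimate reaches 0.8 is the sample size sought.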

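Note: If the data are known to be Gaussian, the distribution of the t-test statistic is available in closed form (a noncentral t distribution under the alternative hypothesis), so power can be computed directly without bootstrap techniques.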
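Under that Gaussian assumption (equal variances, `n` subjects per group, standardized effect size `d`), the power of a two-sided two-sample t-test follows from the noncentral t distribution with `2n - 2` degrees of freedom and noncentrality `d * sqrt(n / 2)`. The sketch below uses `scipy.stats.t` and `scipy.stats.nct`; the helper names `t_test_power` and `smallest_n` are hypothetical, and the linear search over `n` is chosen for clarity rather than speed.

```python
from scipy import stats


def t_test_power(d, n, alpha=0.05):
    """Power of a two-sided, two-sample Student t-test (Gaussian data assumed)."""
    df = 2 * n - 2
    ncp = d * (n / 2) ** 0.5  # noncentrality parameter for n per group
    t_crit = stats.t.ppf(1 - alpha / 2, df)
    # Probability that the statistic lands in either rejection region under H1.
    return stats.nct.sf(t_crit, df, ncp) + stats.nct.cdf(-t_crit, df, ncp)


def smallest_n(d, target=0.8, alpha=0.05, n_max=10_000):
    """Smallest per-group sample size whose power reaches the target."""
    for n in range(2, n_max + 1):
        if t_test_power(d, n, alpha) >= target:
            return n
    raise ValueError("target power not reached within n_max")


print(smallest_n(0.5))  # medium effect size, alpha = 0.05, 80% power
```

For a medium effect (d = 0.5) at alpha = 0.05, the search returns 64 subjects per group.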

[Discussion to come]

Test whether two distributions P and Q are different based on samples drawn from each of them.

- Sample from distribution P.

- Sample from distribution Q.

- Run a classical two-sample t-test.
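For reference, the classical recipe takes only a few lines with `scipy.stats.ttest_ind`. Here P and Q are hypothetical stand-ins (two Gaussians with different means), simulated only to make the example runnable.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(0)
# Hypothetical stand-ins for P and Q: two Gaussians with different means.
samples_p = rng.normal(loc=0.0, scale=1.0, size=100)
samples_q = rng.normal(loc=1.0, scale=1.0, size=100)

# Student's two-sample t-test (equal variances assumed by default).
result = stats.ttest_ind(samples_p, samples_q)
print(result.statistic, result.pvalue)
```

Passing `equal_var=False` switches to Welch's t-test, which drops the equal-variance assumption but still assumes approximately Gaussian sample means.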

But why assume the data is Gaussian?

- Sample from distribution P.

- Sample from distribution Q.

- Run a non-parametric test such as the kernel two-sample test based on the maximum mean discrepancy (MMD).

- Given samples from P, train a generative model to obtain an estimate P̂ of the distribution P.

- Given samples from Q, train a generative model to obtain an estimate Q̂ of the distribution Q.

- Drawing as many synthetic samples as desired from the learned distributions P̂ and Q̂, run a (parametric or non-parametric) test to distinguish between the two.
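The non-parametric option in the list above can be illustrated with a kernel two-sample test based on the maximum mean discrepancy (MMD). This is a minimal NumPy sketch, not the project's code: it assumes a Gaussian kernel with the median-distance heuristic for the bandwidth, the unbiased MMD² estimator, and a permutation test for the p-value; `mmd_test`, `mmd2_unbiased`, and their defaults are hypothetical names and choices. The example distributions share a mean but differ in spread, a difference that a t-test on means is not designed to detect.

```python
import numpy as np


def mmd2_unbiased(k, m):
    """Unbiased MMD^2 from a joint kernel matrix; rows/cols [:m] are sample X."""
    n = k.shape[0] - m
    kxx, kyy, kxy = k[:m, :m], k[m:, m:], k[:m, m:]
    # Drop the diagonal (each point compared with itself) for unbiasedness.
    term_x = (kxx.sum() - np.trace(kxx)) / (m * (m - 1))
    term_y = (kyy.sum() - np.trace(kyy)) / (n * (n - 1))
    return term_x + term_y - 2.0 * kxy.mean()


def mmd_test(x, y, n_perm=500, seed=0):
    """Kernel two-sample test (Gaussian-kernel MMD) with a permutation p-value."""
    x = np.asarray(x, dtype=float).reshape(len(x), -1)
    y = np.asarray(y, dtype=float).reshape(len(y), -1)
    z = np.vstack([x, y])
    m = len(x)
    # Pairwise squared Euclidean distances over the pooled sample.
    norms = np.sum(z**2, axis=1)
    sq = np.maximum(norms[:, None] + norms[None, :] - 2.0 * z @ z.T, 0.0)
    # Median heuristic: bandwidth = median pairwise distance.
    iu = np.triu_indices(len(z), k=1)
    bandwidth = np.median(np.sqrt(sq[iu]))
    k = np.exp(-sq / (2.0 * bandwidth**2))

    observed = mmd2_unbiased(k, m)
    rng = np.random.default_rng(seed)
    exceed = 0
    for _ in range(n_perm):
        # Under H0 (P = Q) the group labels are exchangeable, so shuffle them.
        idx = rng.permutation(len(z))
        if mmd2_unbiased(k[np.ix_(idx, idx)], m) >= observed:
            exceed += 1
    return observed, (exceed + 1) / (n_perm + 1)


rng = np.random.default_rng(0)
x = rng.normal(0.0, 1.0, size=100)  # same mean ...
y = rng.normal(0.0, 3.0, size=100)  # ... different spread
stat, p = mmd_test(x, y)
print(stat, p)
```

The permutation p-value avoids relying on the statistic's asymptotic null distribution, at the cost of recomputing MMD² for every shuffle.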

The goal of this project is to show that sampling synthetic data from a learned estimate of the underlying data distribution can enable powerful tests that make fewer assumptions about that distribution.
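The generative route can be sketched end to end with a deliberately simple generative model. As an assumption for illustration only, a one-dimensional Gaussian kernel density estimate (KDE) with the common 1.06·σ·n^(-1/5) rule-of-thumb bandwidth stands in for the project's fMRI generative models; sampling from it amounts to resampling an observed point and adding Gaussian noise. The helper `fit_kde_sampler` and the number of synthetic draws `m` are hypothetical choices, and any two-sample test could replace the t-test at the end.

```python
import numpy as np
from scipy import stats


def fit_kde_sampler(samples, seed=0):
    """Hypothetical stand-in for a generative model: a 1-D Gaussian KDE.

    Returns a function that draws m synthetic samples from the estimate.
    """
    samples = np.asarray(samples, dtype=float)
    # Rule-of-thumb bandwidth: 1.06 * std * n^(-1/5).
    h = 1.06 * samples.std(ddof=1) * len(samples) ** (-0.2)
    rng = np.random.default_rng(seed)

    def sample(m):
        # Sampling a Gaussian KDE: pick an observed point, add N(0, h^2) noise.
        centers = rng.choice(samples, size=m, replace=True)
        return centers + rng.normal(0.0, h, size=m)

    return sample


rng = np.random.default_rng(0)
# Small observed samples from P and Q (simulated here for illustration only).
obs_p = rng.normal(0.0, 1.0, size=30)
obs_q = rng.normal(1.0, 1.0, size=30)

# Fit P-hat and Q-hat, then draw many synthetic samples from each.
sample_p_hat = fit_kde_sampler(obs_p, seed=1)
sample_q_hat = fit_kde_sampler(obs_q, seed=2)
m = 5000  # synthetic draws per distribution (a free parameter)
synth_p, synth_q = sample_p_hat(m), sample_q_hat(m)

# Run a two-sample test on the synthetic data; a t-test is used here.
result = stats.ttest_ind(synth_p, synth_q)
print(result.pvalue)
```

Because `m` can be made arbitrarily large, the resulting p-value speaks to P̂ versus Q̂; how well it reflects P versus Q depends on how faithfully each generative model fits its training samples.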