Variational AutoEncoder

- This is a TensorFlow implementation of the paper Auto-Encoding Variational Bayes. The base version comes from hwalsuklee/tensorflow-mnist-CVAE.

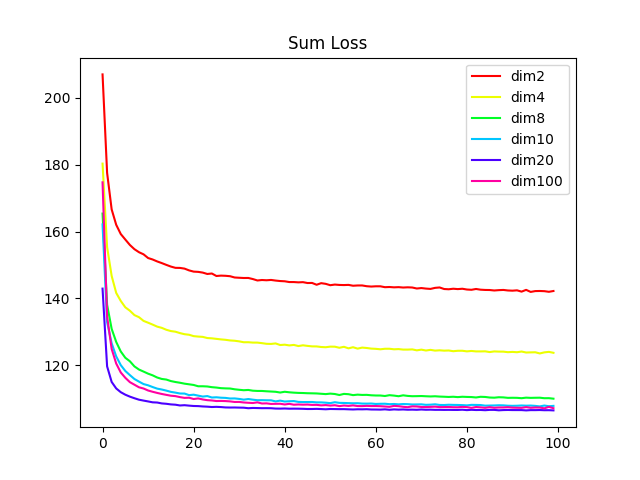

- Experiments with different dimensions of z: 2, 4, 8, 10, 20, 100

- Highlighted results:

  - Sampling manifold and mappings of train and test data (MNIST, 2-dimensional z)

  - Reconstruction results across all dimensions (that is, the mapping from x to x_hat)

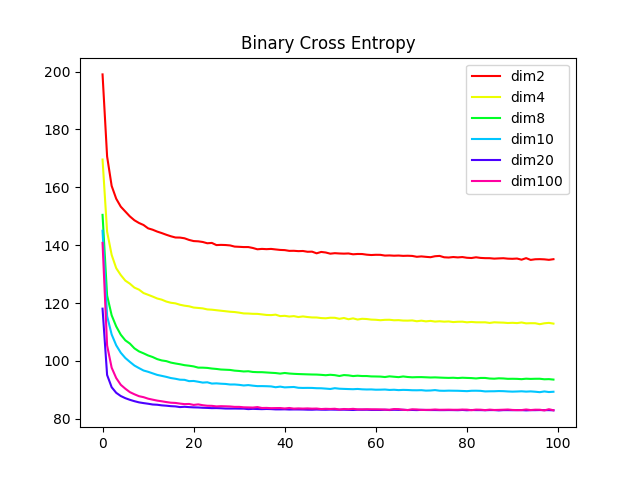

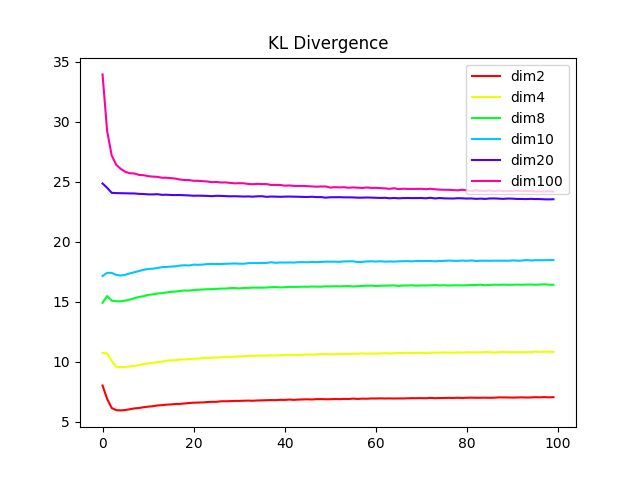

- Something interesting: plots of the sum loss, the binary cross-entropy loss (BCE), and the KL divergence (KLD). To my surprise, although the BCE and KLD results behave as expected, dim 20 reaches the lowest sum loss, not dim 100.

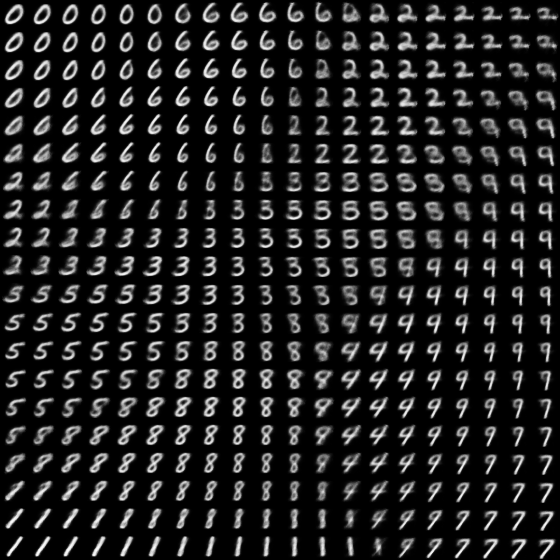
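To make the three plotted quantities concrete, here is a minimal NumPy sketch of how a sum loss, BCE, and KLD are commonly computed for a VAE with Bernoulli outputs and a diagonal Gaussian posterior. The function name `vae_losses` and the `log_var` parameterization are assumptions for illustration; the repository's own TensorFlow code may parameterize the posterior differently.

```python
import numpy as np

def vae_losses(x, x_hat, mu, log_var, eps=1e-8):
    """Return (sum_loss, bce, kld), each averaged over the batch.

    x, x_hat: (batch, pixels) arrays with values in [0, 1].
    mu, log_var: (batch, dim_z) posterior mean and log-variance (assumed form).
    """
    # Keep probabilities away from 0 and 1 so the logs stay finite.
    x_hat = np.clip(x_hat, eps, 1.0 - eps)
    # Reconstruction term: binary cross entropy summed over pixels.
    bce = -np.sum(x * np.log(x_hat) + (1.0 - x) * np.log(1.0 - x_hat), axis=1)
    # KL( N(mu, sigma^2) || N(0, I) ) in closed form, summed over latent dims.
    kld = 0.5 * np.sum(np.exp(log_var) + mu ** 2 - 1.0 - log_var, axis=1)
    return float(np.mean(bce + kld)), float(np.mean(bce)), float(np.mean(kld))
```

Because the sum loss adds the two terms, a larger latent space can lower BCE while raising KLD, so the smallest sum does not have to occur at the largest dimension.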

Visualizations of the data manifold. Note: in manifold.png the range of z is [-2, 2].

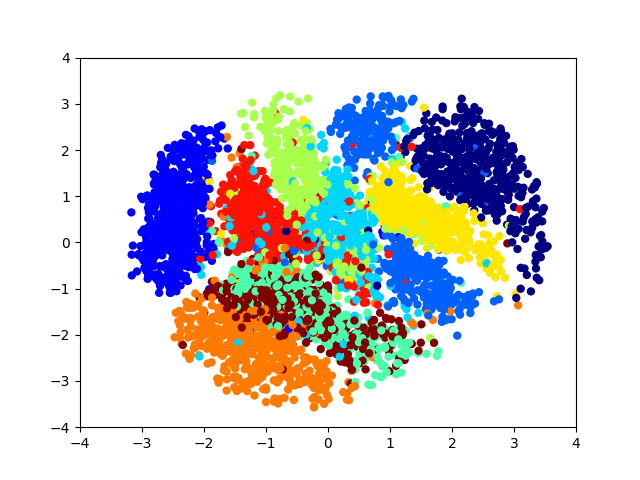

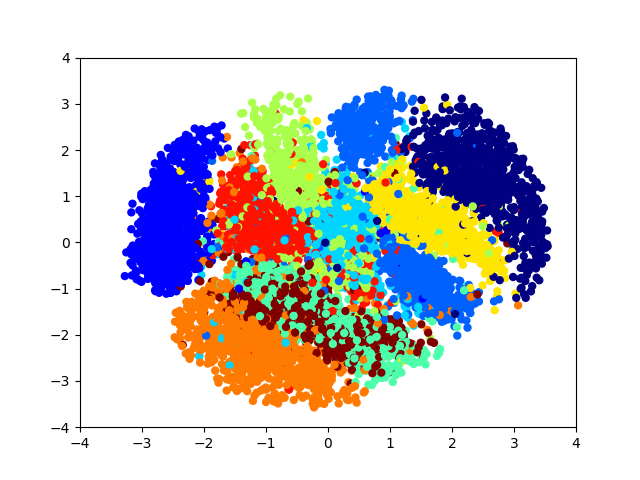
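A manifold image of this kind is usually produced by decoding a regular grid of 2-D latent points and tiling the decoded digits. The sketch below shows only the grid and tiling steps in NumPy; the helper names `latent_grid` and `tile_images`, the grid size, and the 28x28 image shape are assumptions, and the decoder call itself is left out.

```python
import numpy as np

def latent_grid(n_per_axis=20, z_range=2.0):
    """Return an (n_per_axis**2, 2) array of z points covering [-z_range, z_range]^2."""
    axis = np.linspace(-z_range, z_range, n_per_axis)
    zx, zy = np.meshgrid(axis, axis)
    return np.stack([zx.ravel(), zy.ravel()], axis=1)

def tile_images(images, n_per_axis, height=28, width=28):
    """Arrange n_per_axis**2 flattened images into one 2-D canvas, row by row."""
    grid = images.reshape(n_per_axis, n_per_axis, height, width)
    # Reorder to (grid_row, pixel_row, grid_col, pixel_col) before flattening.
    return grid.transpose(0, 2, 1, 3).reshape(n_per_axis * height, n_per_axis * width)
```

In use, each row of `latent_grid()` would be passed through the trained decoder, and the resulting batch of flattened images handed to `tile_images` to form the canvas.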

The distribution of labeled data (including train and test).

Panels: train mappings, test mappings.

Loss plots: Sum Loss, BCE, KLD.

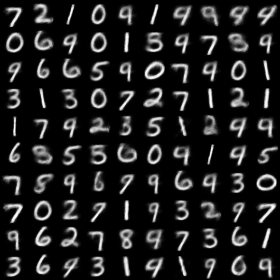

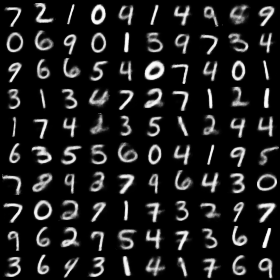

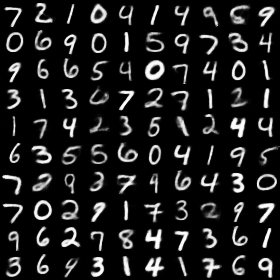

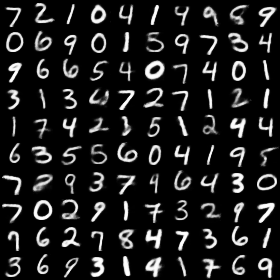

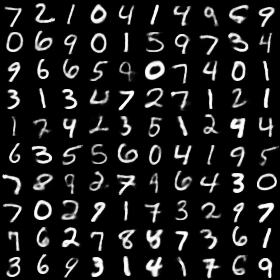

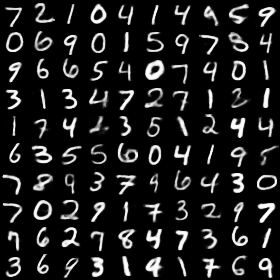

Reconstruction of images. The latent dimension is a very important factor for reconstruction quality.

Panels: 2-Dim, 4-Dim, 8-Dim, 10-Dim, 20-Dim, and 100-Dim latent space.
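The mapping from x to x_hat compared above passes through a sampled latent code z whose size is the dimension being varied. The sketch below illustrates that path with the reparameterization trick, using single untrained linear layers as stand-ins for the encoder and decoder. The names `reconstruct`, `enc_w`, and `dec_w` and the layer shapes are hypothetical and not taken from the repository; it only shows how the shapes depend on the latent dimension, not actual reconstruction quality.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def reconstruct(x, enc_w, dec_w, rng):
    """Map x to x_hat through a sampled latent z (toy linear layers, no biases)."""
    dim_z = dec_w.shape[0]
    h = x @ enc_w                              # (batch, 2 * dim_z)
    mu, log_var = h[:, :dim_z], h[:, dim_z:]   # split into mean and log-variance
    # Reparameterization trick: z = mu + sigma * eps, with eps ~ N(0, I).
    eps = rng.standard_normal(mu.shape)
    z = mu + np.exp(0.5 * log_var) * eps
    return sigmoid(z @ dec_w)                  # pixel probabilities in (0, 1)

rng = np.random.default_rng(0)
x = rng.random((5, 784))                       # stand-in for a batch of MNIST images
shapes = {}
for dim_z in (2, 4, 8, 10, 20, 100):
    # Random, untrained weights (assumption): for shape illustration only.
    enc_w = rng.normal(scale=0.01, size=(784, 2 * dim_z))
    dec_w = rng.normal(scale=0.01, size=(dim_z, 784))
    shapes[dim_z] = reconstruct(x, enc_w, dec_w, rng).shape
```

Whatever the latent dimension, x_hat has the same shape as x; only the width of the bottleneck that the image must pass through changes.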

[1] hwalsuklee/tensorflow-mnist-VAE

[2] kvfrans/variational-autoencoder