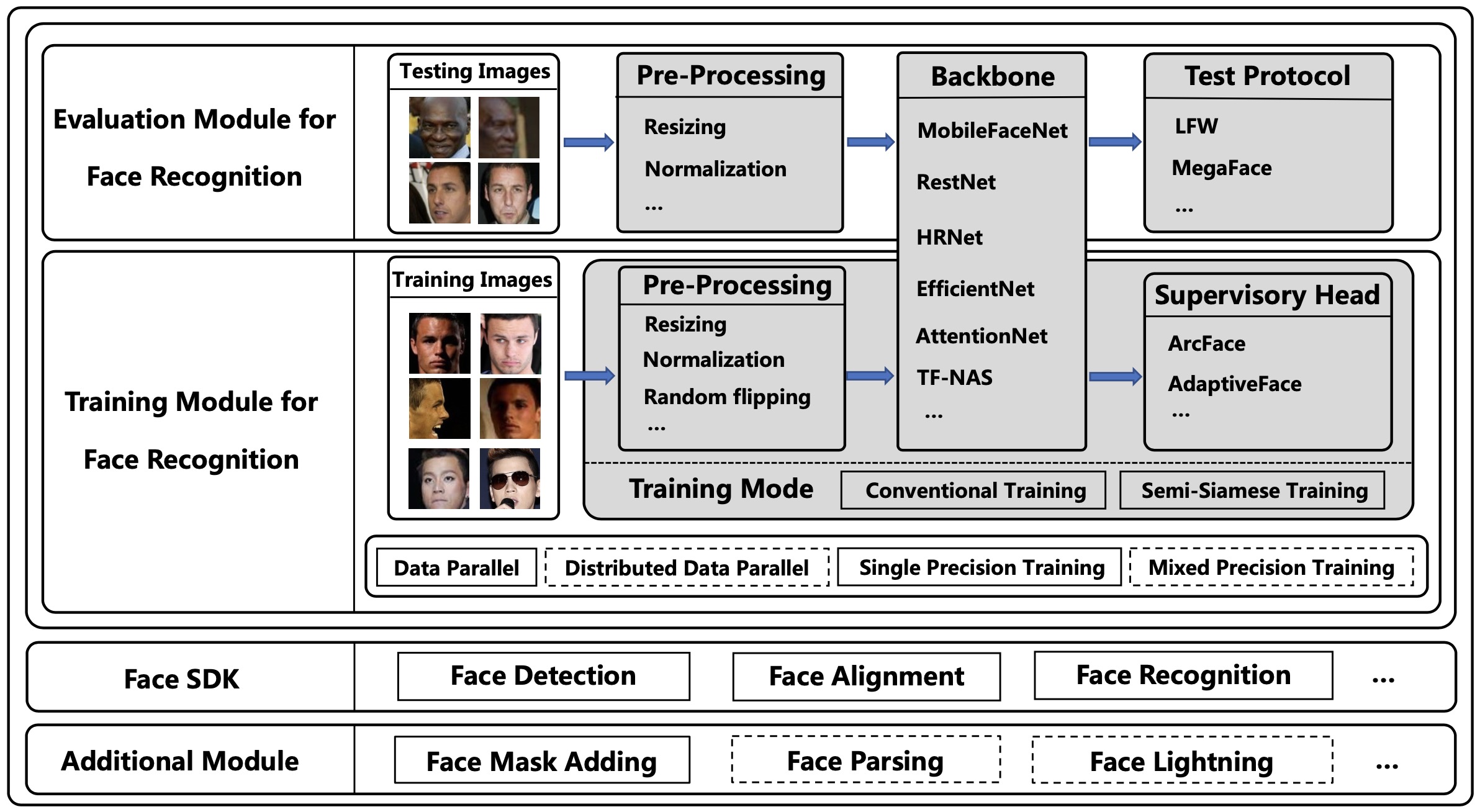

FaceX-Zoo is a PyTorch toolbox for face recognition. It provides a training module with a variety of supervisory heads and backbones for state-of-the-art face recognition, as well as a standardized evaluation module that lets you evaluate models on most of the popular benchmarks just by editing a simple configuration. A simple yet fully functional face SDK is also provided for validation and basic application of the trained models. Rather than including as many prior techniques as possible, we design FaceX-Zoo so that it can be easily upgraded and extended as face-related domains develop. Please refer to the technical report for more detailed information about this project.

About the name:

- "Face" - this repo is mainly for face recognition.

- "X" - we also aim to provide something beyond face recognition, e.g. face parsing, face lightning.

- "Zoo" - this repo includes many algorithms and models.

- [Mar. 2021] ResNeSt and ReXNet have been added to the backbones, MagFace has been added to the heads.

- [Feb. 2021] Mixed precision training with apex is supported. Please check train_amp.py.

- [Jan. 2021] We committed the initial version of FaceX-Zoo.

- python >= 3.7.1

- pytorch >= 1.1.0

- torchvision >= 0.3.0
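As a quick sanity check, the minimum versions listed above can be verified before training. The snippet below is a minimal sketch and not part of FaceX-Zoo: the helper names `parse_version` and `check_requirements` are hypothetical, and the version parsing is deliberately simple (it reads only the first three numeric components and ignores local suffixes such as `+cu111`).

```python
import importlib
import sys

# Minimum versions taken from the requirements list above.
MIN_VERSIONS = {"python": (3, 7, 1), "torch": (1, 1, 0), "torchvision": (0, 3, 0)}


def parse_version(text):
    """Turn a version string such as '1.8.1+cu111' into a tuple of three ints."""
    parts = []
    for piece in text.split("+")[0].split(".")[:3]:
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        parts.append(int(digits) if digits else 0)
    while len(parts) < 3:
        parts.append(0)  # pad short versions such as '1.10' to (1, 10, 0)
    return tuple(parts)


def check_requirements():
    """Map each requirement to True if satisfied, False if missing or too old."""
    results = {"python": tuple(sys.version_info[:3]) >= MIN_VERSIONS["python"]}
    for name in ("torch", "torchvision"):
        try:
            module = importlib.import_module(name)
        except Exception:
            # Any import failure counts as "requirement not satisfied".
            results[name] = False
            continue
        version = getattr(module, "__version__", "0")
        results[name] = parse_version(version) >= MIN_VERSIONS[name]
    return results


if __name__ == "__main__":
    for name, ok in check_requirements().items():
        print(f"{name}: {'OK' if ok else 'missing or too old'}")
```

Running the script prints one line per requirement. A package that is not installed is reported the same way as one that is too old.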

See README.md in training_mode. Conventional training and semi-siamese training are currently supported.

See README.md in test_protocol. LFW, CPLFW, CALFW, RFW, AgeDB30, MegaFace and MegaFace-mask are currently supported.

See README.md in face_sdk. Face detection, face alignment and face recognition are currently supported.

FaceX-Zoo is released under the Apache License, Version 2.0.

This repo is mainly inspired by InsightFace, InsightFace_Pytorch, and face.evoLVe. We thank the authors for their valuable efforts.

Please consider citing our paper in your publications if the project helps your research. The BibTeX reference is as follows.

@article{wang2021facex,

title={FaceX-Zoo: A PyTorch Toolbox for Face Recognition},

author={Wang, Jun and Liu, Yinglu and Hu, Yibo and Shi, Hailin and Mei, Tao},

journal={arXiv preprint arXiv:2101.04407},

year={2021}

}

If you have any questions, please contact Jun Wang (wangjun492@jd.com), Yinglu Liu (liuyinglu1@jd.com), Yibo Hu (huyibo6@jd.com) or Hailin Shi (shihailin@jd.com).