A LibTorch inference implementation of the yolov5 object detection algorithm. Both GPU and CPU are supported.

- Ubuntu 16.04

- CUDA 10.2

- OpenCV 3.4.12

- LibTorch 1.6.0

Please refer to the official document here: ultralytics/yolov5#251

Mandatory update: the following line in the original export.py from yolov5 must be modified:

# line 29

model.model[-1].export = False

Add GPU support: the current export script in yolov5 uses the CPU by default, so export.py needs to be modified as follows to support GPU:

# line 28

img = torch.zeros((opt.batch_size, 3, *opt.img_size)).to(device='cuda')

# line 31

model = attempt_load(opt.weights, map_location=torch.device('cuda'))

Export a trained yolov5 model:

cd yolov5

export PYTHONPATH="$PWD" # add path

python models/export.py --weights yolov5s.pt --img 640 --batch 1 # export

$ cd /path/to/libtorch-yolo5

$ wget https://download.pytorch.org/libtorch/cu102/libtorch-cxx11-abi-shared-with-deps-1.6.0.zip

$ unzip libtorch-cxx11-abi-shared-with-deps-1.6.0.zip

$ mkdir build && cd build

$ cmake .. && make

To run inference on examples in the ./images folder:

# CPU

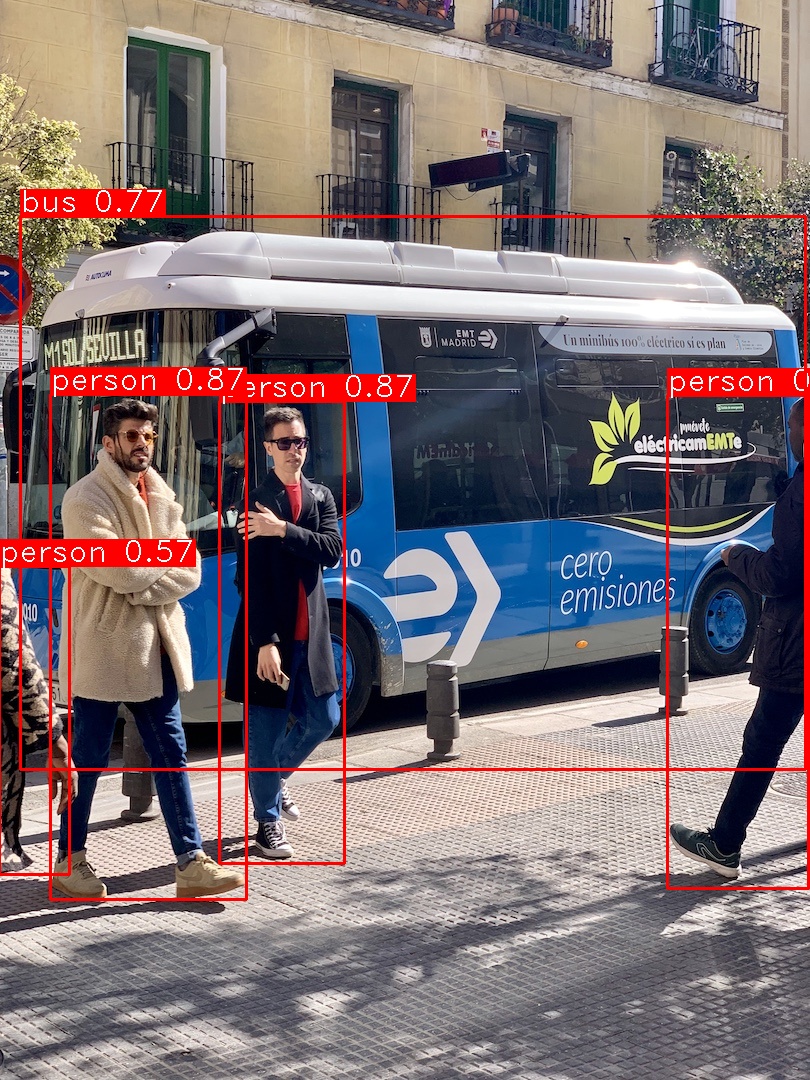

$ ./libtorch-yolov5 --source ../images/bus.jpg --weights ../weights/yolov5s.torchscript.pt --view-img

# GPU

$ ./libtorch-yolov5 --source ../images/bus.jpg --weights ../weights/yolov5s.torchscript.pt --gpu --view-img

# Profiling

$ CUDA_LAUNCH_BLOCKING=1 ./libtorch-yolov5 --source ../images/bus.jpg --weights ../weights/yolov5s.torchscript.pt --gpu --view-img

- terminate called after throwing an instance of 'c10::Error' what(): isTuple() INTERNAL ASSERT FAILED

  - Make sure "model.model[-1].export = False" is set when running the export script.

- Why does the first "inference takes" entry in the log show such a long time?

  - The first inference is slower because of the initial optimization that the JIT (just-in-time) compiler performs on the code. This is similar to "warm up" in other JIT compilers. Typically, production services warm up a model using representative inputs before marking it as available.

  - The first cycle may take longer. The Python version of yolov5 runs inference once on an empty image before the actual detection pipeline. To get a valid processing time, modify the code to process the same image multiple times, or process a video.
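The warm-up advice above can be sketched as a small timing helper. This is a minimal illustration in Python, not code from this repository: `time_inference`, `run_once`, and `fake_forward` are hypothetical names, and the stand-in workload only mimics a forward pass. The same pattern (untimed warm-up calls, then several timed repeats, with a synchronization point before the clock is read on GPU) should carry over to the C++ inference loop.

```python
import time
from statistics import mean
from typing import Callable, List, Optional


def time_inference(
    run_once: Callable[[], object],
    warmup: int = 1,
    repeats: int = 10,
    synchronize: Optional[Callable[[], None]] = None,
) -> List[float]:
    """Return per-call latencies in milliseconds, measured after warm-up.

    run_once    -- hypothetical callable that performs one forward pass
    synchronize -- optional hook that blocks until queued GPU work is done
                   (with PyTorch on CUDA this could be torch.cuda.synchronize)
    """
    # Warm-up calls absorb one-time costs (JIT optimization, CUDA context
    # creation, memory allocation) and are deliberately not timed.
    for _ in range(warmup):
        run_once()
    if synchronize is not None:
        synchronize()

    latencies_ms: List[float] = []
    for _ in range(repeats):
        start = time.perf_counter()
        run_once()
        if synchronize is not None:
            # CUDA kernels launch asynchronously; wait before reading the clock.
            synchronize()
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
    return latencies_ms


if __name__ == "__main__":
    # Stand-in workload (assumption); replace with a call into the model.
    def fake_forward() -> int:
        return sum(i * i for i in range(100_000))

    times = time_inference(fake_forward, warmup=2, repeats=5)
    print(f"mean latency after warm-up: {mean(times):.2f} ms")
```

Reporting the mean (or median) of the timed repeats, rather than the first call, gives a figure closer to steady-state throughput.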

- https://github.com/ultralytics/yolov5

- Question about the code in non_max_suppression

- https://github.com/walktree/libtorch-yolov3

- https://pytorch.org/cppdocs/index.html

- https://github.com/pytorch/vision

- PyTorch.org - CUDA SEMANTICS

- PyTorch.org - add synchronization points

- PyTorch - why first inference is slower