This repository implements NeuralWalker in PyTorch Geometric, as described in the following paper:

Dexiong Chen, Till Schulz, and Karsten Borgwardt. Learning Long Range Dependencies on Graphs via Random Walks, Preprint 2024.

TL;DR: A novel random-walk based neural architecture for graph representation learning.

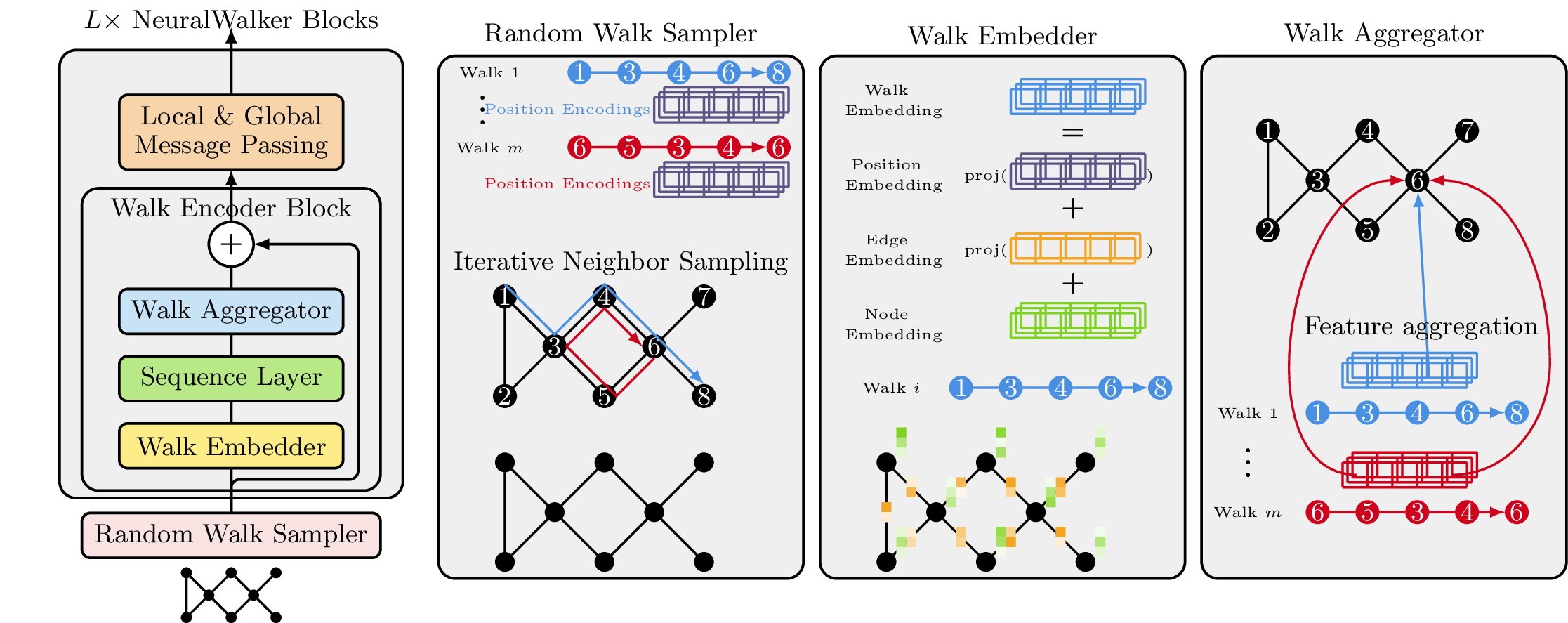

NeuralWalker samples random walks with a predefined sampling rate and length, then processes them with advanced sequence models. Local and global message passing can additionally be employed to capture complementary information. The main components of NeuralWalker are a random walk sampler and a stack of neural walker blocks, each consisting of a walk encoder block and a message passing block. Each walk encoder block has a walk embedder, a sequence layer, and a walk aggregator.

- Random walk sampler: samples m random walks independently without replacement.

- Walk embedder: computes walk embeddings given the node/edge embeddings at the current layer.

- Sequence layer: any sequence model, e.g. CNNs, RNNs, Transformers, or state-space models.

- Walk aggregator: aggregates walk features into node features by pooling, for each node, the features at every walk position that visits that node.
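The sampler and aggregator described above can be illustrated with a minimal pure-Python sketch. It is not the repository's implementation: the function names `sample_random_walks` and `aggregate_walks`, the adjacency-dict input format, the reading of "sampling rate" as the fraction of nodes used as start nodes, the reading of "without replacement" as distinct start nodes, and the choice of mean pooling are all assumptions made for illustration. The walk embedder and sequence layer are omitted, so the walk features passed to the aggregator stand in for their output.

```python
import random


def sample_random_walks(adj, sampling_rate, length, seed=0):
    """Sample uniform random walks from a subset of start nodes.

    adj: dict mapping node id -> list of neighbours (hypothetical format).
    sampling_rate: fraction of nodes used as start nodes (assumed meaning).
    length: number of nodes in each walk.
    """
    rng = random.Random(seed)
    nodes = sorted(adj)
    m = max(1, round(sampling_rate * len(nodes)))
    # Assumption: start nodes are drawn without replacement, so none repeats.
    starts = rng.sample(nodes, m)
    walks = []
    for start in starts:
        walk = [start]
        while len(walk) < length:
            neighbours = adj[walk[-1]]
            # An isolated node has nowhere to go, so the walk stays in place.
            walk.append(rng.choice(neighbours) if neighbours else walk[-1])
        walks.append(walk)
    return walks


def aggregate_walks(walks, walk_features, num_nodes, dim):
    """Mean-pool per-position walk features back onto the visited nodes."""
    sums = [[0.0] * dim for _ in range(num_nodes)]
    counts = [0] * num_nodes
    for walk, feats in zip(walks, walk_features):
        for node, feat in zip(walk, feats):
            counts[node] += 1
            for d in range(dim):
                sums[node][d] += feat[d]
    # Nodes that no walk visits keep a zero vector.
    return [
        [s / counts[n] if counts[n] else 0.0 for s in sums[n]]
        for n in range(num_nodes)
    ]


# Path graph 0 - 1 - 2 - 3; half of the nodes are used as start nodes.
adj = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
walks = sample_random_walks(adj, sampling_rate=0.5, length=4)
# Stand-in for the sequence layer output: one scalar feature per position.
features = [[[float(pos)] for pos in range(len(w))] for w in walks]
node_features = aggregate_walks(walks, features, num_nodes=4, dim=1)
```

In the actual model the pooled node features would feed the message passing block of the same neural walker block; here they are simply returned as nested lists.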

We recommend managing dependencies with miniconda or micromamba:

# Replace micromamba with conda if you use conda or miniconda

micromamba env create -f environment.yaml

micromamba activate neuralwalker

pip install -e .

Note

Our code is also compatible with more recent PyTorch versions. For development purposes, you can use micromamba env create -f environment_latest.yaml instead.

Tip

NeuralWalker relies on a sequence model, such as a CNN, Transformer, or state-space model, to process random walk sequences. If you encounter any issues when installing the state-space model Mamba, please consult its installation guide.

All experiment configurations are managed by Hydra and stored in ./config.

Below you can find the list of experiments conducted in the paper:

- Benchmarking GNNs: zinc, mnist, cifar10, pattern, cluster

- LRGB: pascalvoc, coco, peptides_func, peptides_struct, pcqm_contact

- OGB: ogbg_molpcba, ogbg_ppa, ogbg_code2

# You can replace zinc with any of the above datasets

python train.py experiment=zinc

# Running NeuralWalker with a different model architecture

python train.py experiment=zinc experiment/model=conv+vn_3L

Tip

You can replace conv+vn_3L with any model provided in config/experiment/model, or a customized model by creating a new one in that folder.
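To run several of the experiments listed above in sequence, a small launcher script can assemble the same `train.py` commands. The following is a minimal sketch, not part of the repository: the helper names `build_command` and `run_all` and the `EXPERIMENTS` dictionary are hypothetical, and only the `experiment=` and `experiment/model=` overrides shown above are used. It assumes the script is run from the repository root.

```python
import subprocess

# Dataset names copied from the experiment lists above.
EXPERIMENTS = {
    "Benchmarking GNNs": ["zinc", "mnist", "cifar10", "pattern", "cluster"],
    "LRGB": ["pascalvoc", "coco", "peptides_func", "peptides_struct", "pcqm_contact"],
    "OGB": ["ogbg_molpcba", "ogbg_ppa", "ogbg_code2"],
}


def build_command(dataset, model=None):
    """Build the train.py command for one dataset, optionally with a model override."""
    cmd = ["python", "train.py", f"experiment={dataset}"]
    if model is not None:
        cmd.append(f"experiment/model={model}")
    return cmd


def run_all(group, model=None, dry_run=True):
    """Run (or, with dry_run=True, only print) every experiment in a group."""
    commands = [build_command(dataset, model) for dataset in EXPERIMENTS[group]]
    for cmd in commands:
        if dry_run:
            print(" ".join(cmd))
        else:
            # check=True stops the sweep as soon as one run fails.
            subprocess.run(cmd, check=True)
    return commands


# Print the commands for the LRGB group without launching any training.
lrgb_commands = run_all("LRGB", dry_run=True)
```

Keeping `dry_run=True` as the default makes it easy to inspect the generated commands before committing compute to a full sweep.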

We integrate NeuralWalker with Polynormer, a state-of-the-art model for node classification. See node_classifcation for more details.

# Run training in debug mode

python train.py mode=debug