- A TensorFlow implementation of DeepMind's WaveNet paper

This is a TensorFlow implementation of the WaveNet generative neural network architecture for audio generation.


The WaveNet neural network architecture directly generates a raw audio waveform, showing excellent results in text-to-speech and general audio generation (see the DeepMind blog post and paper for details). The network models the conditional probability to generate the next sample in the audio waveform, given all previous samples and possibly additional parameters.

After an audio preprocessing step, the input waveform is quantized to a fixed integer range.

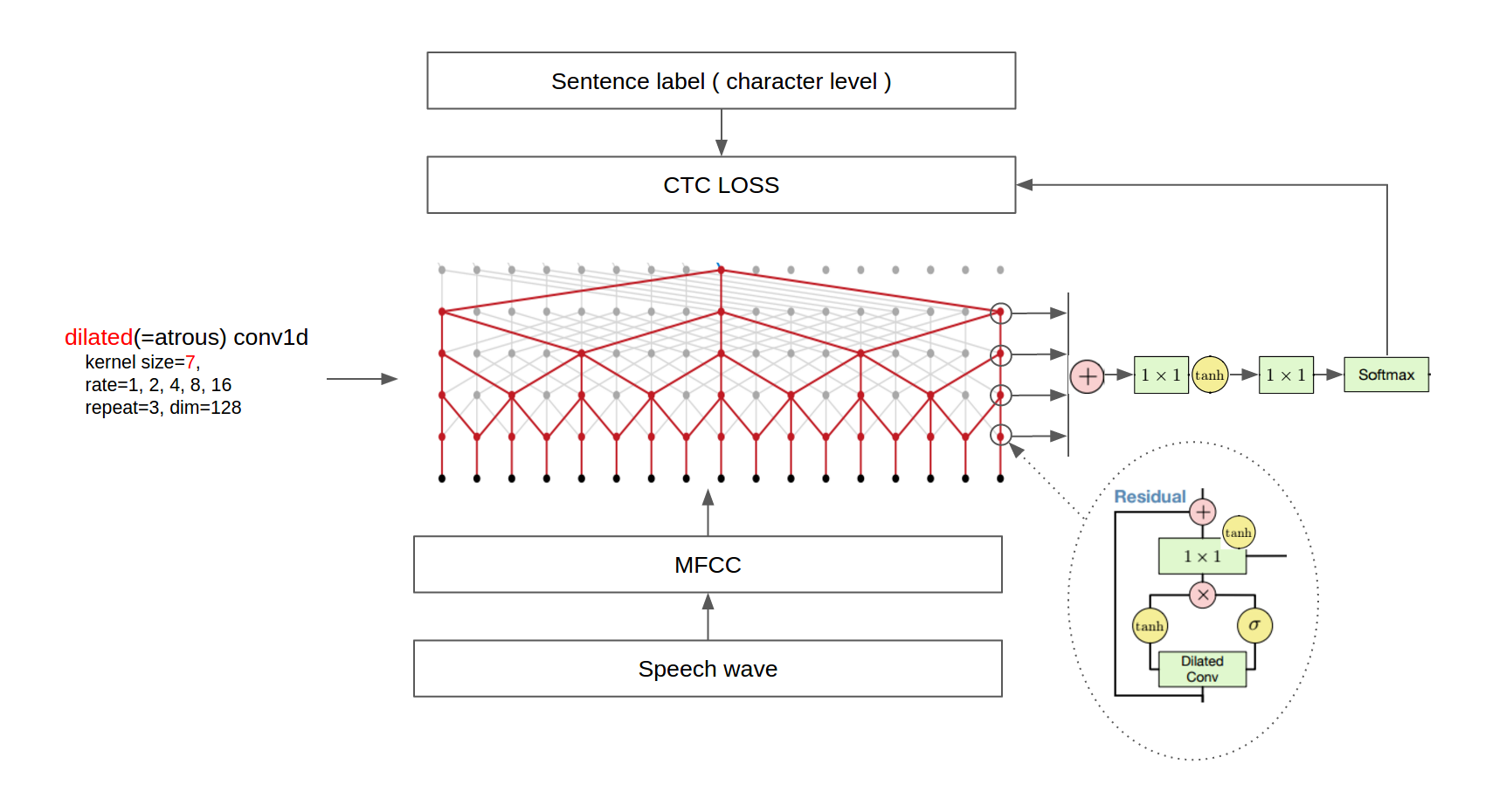

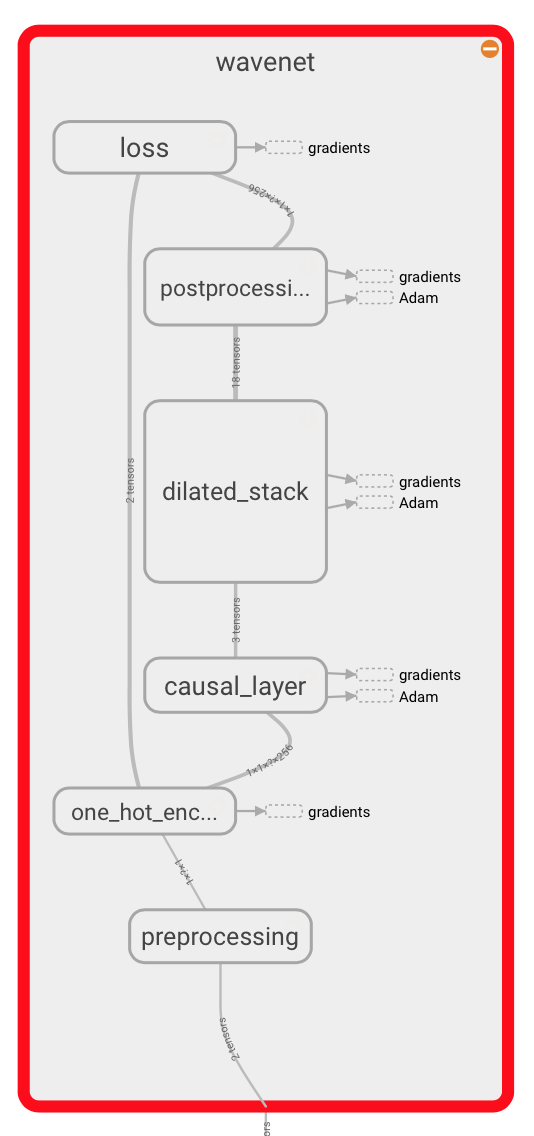
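The quantization step can be illustrated with mu-law companding, which the WaveNet paper describes for mapping audio to 256 discrete levels. The sketch below is a minimal illustration, not this repository's preprocessing code: the function names `mu_law_encode` and `mu_law_decode` and the default of 256 channels are assumptions made for the example.

```python
import math


def mu_law_encode(sample, quantization_channels=256):
    """Map a float sample in [-1, 1] to an integer bin in [0, channels - 1].

    Hypothetical helper for illustration; names and defaults are assumptions.
    """
    mu = quantization_channels - 1
    # Clip so out-of-range samples cannot land outside the valid bins.
    sample = max(-1.0, min(1.0, sample))
    # Compress the amplitude logarithmically (mu-law companding).
    magnitude = math.log1p(mu * abs(sample)) / math.log1p(mu)
    signal = math.copysign(magnitude, sample)
    # Shift from [-1, 1] to [0, mu] and round to the nearest bin.
    return int((signal + 1) / 2 * mu + 0.5)


def mu_law_decode(index, quantization_channels=256):
    """Approximately invert mu_law_encode, returning a float in [-1, 1]."""
    mu = quantization_channels - 1
    # Map the bin index back to [-1, 1].
    signal = 2 * (index / mu) - 1
    # Expand the compressed amplitude.
    magnitude = ((1 + mu) ** abs(signal) - 1) / mu
    return math.copysign(magnitude, signal)
```

Quiet samples get finer resolution than loud ones, so a round trip through `mu_law_encode` and `mu_law_decode` recovers the input only approximately.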

The integer amplitudes are then one-hot encoded to produce a tensor with one channel per quantization level. A convolutional layer that only accesses the current and previous inputs then reduces the channel dimension. The core of the network is constructed as a stack of causal dilated layers, each of which is a dilated convolution (convolution with holes) that only accesses the current and past audio samples. The outputs of all layers are combined and extended back to the original number of channels by a series of dense postprocessing layers, followed by a softmax function to transform the outputs into a categorical distribution. The loss function is the cross-entropy between the output for each timestep and the input at the next timestep. In this repository, the network implementation can be found in model.py.
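To make the causal dilated convolution and the next-step loss concrete, here is a small pure-Python sketch on a single-channel sequence. It is not the code in model.py: the function names, the single-channel simplification, and the filter width of 2 are assumptions chosen to keep the example short.

```python
import math


def causal_dilated_conv(x, weights, dilation):
    """Causal dilated 1-D convolution on a single-channel sequence.

    weights[-1] multiplies the current sample and weights[-2] the sample
    `dilation` steps earlier, so the output at t never sees samples after t.
    """
    taps = len(weights)
    out = []
    for t in range(len(x)):
        total = 0.0
        for k, w in enumerate(weights):
            idx = t - (taps - 1 - k) * dilation
            if idx >= 0:  # positions before the start act as zero padding
                total += w * x[idx]
        out.append(total)
    return out


def receptive_field(dilations, filter_width=2):
    """Number of input samples seen by a stack of dilated layers."""
    return (filter_width - 1) * sum(dilations) + 1


def next_step_cross_entropy(probs, targets):
    """Mean cross-entropy between the prediction at t and the sample at t + 1.

    probs[t] is the categorical distribution output at timestep t;
    targets[t] is the quantized input sample at timestep t.
    """
    losses = [-math.log(probs[t][targets[t + 1]])
              for t in range(len(targets) - 1)]
    return sum(losses) / len(losses)
```

For example, `receptive_field([1, 2, 4, 8])` evaluates to 16: doubling the dilation at each layer lets the receptive field grow exponentially with depth while the number of weights grows only linearly.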


TensorFlow needs to be installed before running the training script. The code is tested on TensorFlow version 2 for Python 3.10.

In addition, glog and librosa must be installed for reading and writing audio.

To install the required python packages, run

pip install -r requirements.txt

For GPU support, use

pip install -r requirements_gpu.txt

You can use any corpus containing .wav files.

- We've mainly used the VCTK corpus (around 10.4GB; an alternative host is also available) so far.

- LibriSpeech

- TEDLIUM release 2

The architecture is shown in the following figure.

(Some images are cropped from [WaveNet: A Generative Model for Raw Audio](https://arxiv.org/abs/1609.03499) and [Neural Machine Translation in Linear Time](https://arxiv.org/abs/1610.10099))

Create dataset

- Download and extract the dataset (only VCTK is supported for now; others are coming soon).

- Assuming the VCTK dataset is in f:/speech, run the following to create the records for training or testing:

python tools/create_tf_record.py -input_dir='f:/speech'

Run the following to train the model.

python train.py

Run the following to evaluate the model.

python test.py

Demo

1. Download the pretrained model (buriburisuri model) and extract it to the 'release' directory.

2. Run the following to transcribe a speech wave file into an English sentence. The result will be printed on the console.

python demo.py -input_path <wave_file path>

For example, try the following command.

python demo.py -input_path=data/demo.wav -ckpt_dir=release/buriburisuri

Citation

Kim and Park. Speech-to-Text-WaveNet. 2016. GitHub repository. https://github.com/buriburisuri/.

To train the network, execute

python train.py --data_dir=corpus

where corpus is a directory containing .wav files.

The script will recursively collect all .wav files in the directory.
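A recursive search of this kind can be sketched with the standard library as follows. This only illustrates the idea and is not necessarily how train.py does it; the helper name `find_wav_files` and its `pattern` parameter are hypothetical.

```python
import fnmatch
import os


def find_wav_files(directory, pattern="*.wav"):
    """Return a sorted list of all files under `directory` matching `pattern`.

    Hypothetical helper for illustration; os.walk visits every subdirectory,
    so nested speaker or chapter folders are included.
    """
    files = []
    for root, _dirs, filenames in os.walk(directory):
        for filename in fnmatch.filter(filenames, pattern):
            files.append(os.path.join(root, filename))
    return sorted(files)
```

Calling `find_wav_files("corpus")` would then list every .wav file below the corpus directory, whatever its nesting depth.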

You can see documentation on each of the training settings by running

python train.py --help