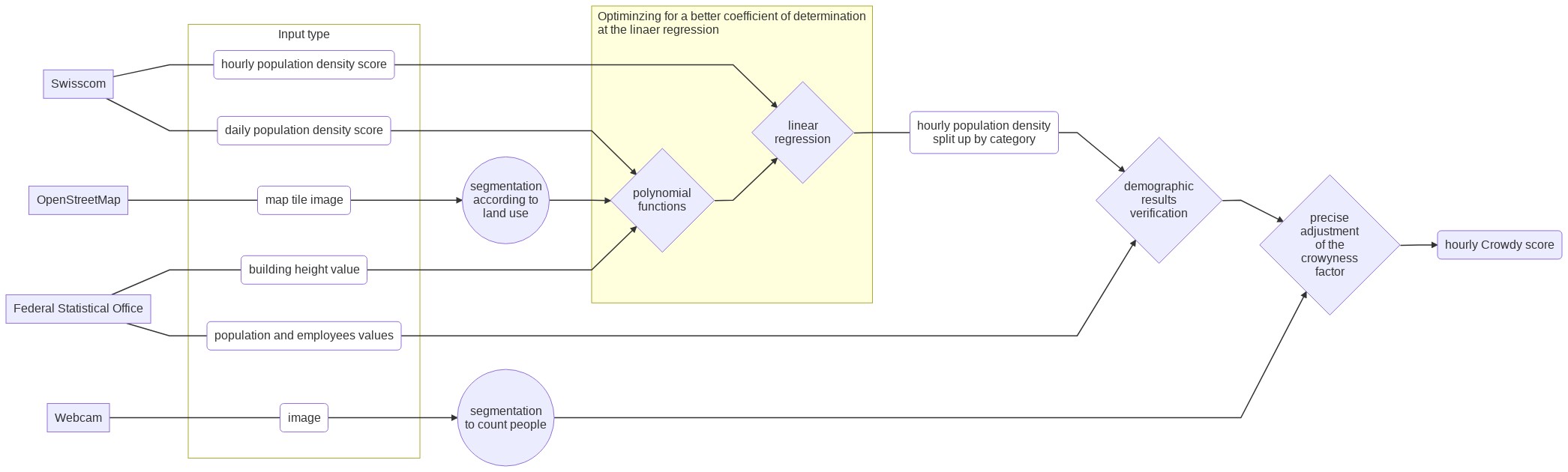

The goal of this challenge is to build a model that predicts crowdedness in Switzerland. The unsupervised algorithm is based on data from Swisscom, OpenStreetMap (OSM), and the Swiss Federal Statistical Office (BFS).

Crowdy began at the VersusVirus Hackathon in May 2020. The aim is to prevent gatherings of people, and the increased risk of infection that comes with them, before they occur.

We are thrilled that our group has already found some partners. In addition to MongoDB and emonitor, Swisscom is an essential partner. Our approach is to infer large gatherings of people in public spaces from the utilization of mobile radio cells.

This repository covers the data mining part. It includes downloading the raw data and all processing steps up to the final score of people in public spaces.

You can find more Crowdy open code in the following repos:

I developed the software in JupyterLab 2.2.6 with Python 3.8.5, managed by Conda 4.8.4. For production, the functions are moved into Python files. The visualization is still done in JupyterLab.

I will briefly name the important packages and their function in this project:

- `oauthlib.oauth2`, `requests_oauthlib` & `urllib.request`: For retrieving data from Swisscom's API with authorization.
- `OSMViz`: For loading tiles from OpenStreetMap. Please read the Tile Usage Policy and support the project.
- JSON & GeoJSON: To cache, store, and transmit data within the project pipeline.
- `matplotlib`: For creating graphs and plots of functions and numbers.
- `mpl_toolkits.basemap`: For showing geodata on a static map in JupyterLab.
- `folium`: For visualizing geodata on a dynamic map and storing it as a website.
- `pandas`: For analyzing and getting insights from datasets.
- `PyTorch`: As my personal favorite deep learning framework.
- `detectron2`: For object detection on webcam images.
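Caching intermediate results as GeoJSON could look like the minimal sketch below. It uses only the standard library `json` module rather than a dedicated GeoJSON package, and the helper names `to_feature_collection`, `cache` and `load` as well as the example record are hypothetical, not taken from the project code.

```python
import json
from pathlib import Path

# Hypothetical helpers illustrating how pipeline results could be cached as
# GeoJSON with the standard library only (not the project's actual code).


def to_feature_collection(records):
    """Wrap (lon, lat, properties) tuples in a GeoJSON FeatureCollection."""
    features = [
        {
            "type": "Feature",
            # GeoJSON (RFC 7946) orders positions as [longitude, latitude]
            "geometry": {"type": "Point", "coordinates": [lon, lat]},
            "properties": props,
        }
        for lon, lat, props in records
    ]
    return {"type": "FeatureCollection", "features": features}


def cache(collection, path):
    """Write a collection to disk so later pipeline steps can reuse it."""
    Path(path).write_text(json.dumps(collection), encoding="utf-8")


def load(path):
    """Read a cached collection back into Python dicts."""
    return json.loads(Path(path).read_text(encoding="utf-8"))
```

For example, `cache(to_feature_collection([(7.447, 46.948, {"score": 0.8})]), "scores.geojson")` stores one hypothetical score near Bern. Because the result is a plain dict, the same structure can also be handed to `folium.GeoJson` for the dynamic map.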

The first part parses data from Swisscom, OSM, the FSO (BFS), and the webcams.
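To fetch OpenStreetMap tiles for a location, its latitude and longitude have to be converted into slippy-map tile indices. The sketch below uses the standard Web Mercator tile formula; the helper names `latlon_to_tile` and `tile_url` are hypothetical, and the direct tile URL is shown only for illustration, since the project itself loads tiles through OSMViz.

```python
import math

# Hypothetical helpers: convert WGS84 coordinates to OSM slippy-map tile indices.


def latlon_to_tile(lat_deg, lon_deg, zoom):
    """Return the (x, y) index of the tile containing the given point."""
    n = 2 ** zoom  # number of tiles along each axis at this zoom level
    lat_rad = math.radians(lat_deg)
    x = int((lon_deg + 180.0) / 360.0 * n)
    y = int((1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * n)
    # clamp so that lon = 180 or extreme latitudes stay inside the tile grid
    return min(max(x, 0), n - 1), min(max(y, 0), n - 1)


def tile_url(x, y, zoom):
    """Standard OSM tile URL pattern; heavy use must follow the Tile Usage Policy."""
    return f"https://tile.openstreetmap.org/{zoom}/{x}/{y}.png"
```

For Bern (about 46.948 N, 7.447 E) at zoom 10 this yields tile column 533; the row lies in the northern half of the grid.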

The second part of the pipeline extracts the information contained in the images, since it is much easier to work with the classes in an image and their proportions than with raw pixels. For OpenStreetMap data, it is sufficient to count the pixels by color. Counting people in webcam images is more complicated, so I use state-of-the-art object detection algorithms for it.
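Counting OSM pixels by color could be sketched with NumPy as follows. The palette colors and the tolerance are hypothetical placeholders: the actual colors depend on the map style and would have to be checked against the downloaded tiles. Each pixel is assigned to the first matching class only, so no pixel is counted twice and the shares add up to one.

```python
import numpy as np

# Hypothetical palette: RGB colours assumed for a few map classes.
# Real OSM tile colours depend on the map style and must be verified.
PALETTE = {
    "building": (217, 208, 201),
    "park": (200, 250, 204),
    "water": (170, 211, 223),
}


def class_proportions(image, palette=PALETTE, tolerance=10):
    """Share of pixels per class; unmatched pixels are reported as 'other'."""
    rgb = np.asarray(image, dtype=np.int16)[..., :3]  # drop alpha channel if present
    total = rgb.shape[0] * rgb.shape[1]
    assigned = np.zeros(rgb.shape[:2], dtype=bool)
    shares = {}
    for name, colour in palette.items():
        # a pixel matches if every channel lies within the tolerance
        close = np.all(np.abs(rgb - np.array(colour)) <= tolerance, axis=-1)
        mask = close & ~assigned  # first matching class wins
        assigned |= mask
        shares[name] = float(mask.sum()) / total
    shares["other"] = float((~assigned).sum()) / total
    return shares
```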

The third part is the major consolidation of the data sources Swisscom, OpenStreetMap, and the Federal Statistical Office. Techniques such as linear regression, principal component analysis (PCA), and partial least squares (PLS) reveal correlations, which are then used to calculate the crowdedness.
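Two of the named techniques can be illustrated with a minimal NumPy sketch: PCA computed via the singular value decomposition of the standardized features, and ordinary least-squares linear regression. It assumes a feature matrix with one row per area and one column per source variable (for example radio cell utilization, OSM class shares, and BFS statistics); the function names are hypothetical and this is not the project's actual scoring. PLS is not shown here; `sklearn.cross_decomposition.PLSRegression` is one existing implementation.

```python
import numpy as np

# Hypothetical helpers; rows = areas, columns = features from the data sources.


def standardize(X):
    """Zero mean and unit variance per column, since sources use different units."""
    X = np.asarray(X, dtype=float)
    return (X - X.mean(axis=0)) / X.std(axis=0)


def pca(X, n_components=1):
    """PCA via SVD of the standardized data matrix."""
    Z = standardize(X)
    _, S, Vt = np.linalg.svd(Z, full_matrices=False)
    explained = S**2 / np.sum(S**2)        # explained variance ratio per component
    components = Vt[:n_components]         # principal axes (rows)
    scores = Z @ components.T              # projection of each area onto the axes
    return scores, components, explained


def fit_linear(X, y):
    """Ordinary least squares with an intercept column."""
    X = np.asarray(X, dtype=float)
    A = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(A, np.asarray(y, dtype=float), rcond=None)
    return coef  # [intercept, w_1, ..., w_p]
```

The first principal component score can serve as a single combined indicator when no ground truth is available, which fits the unsupervised setting; the regression becomes useful once some reference values, such as webcam counts, exist.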

As shown in the overview graphic, there is the option of verifying the calculated crowdedness and fine-tuning the data using the webcam output.
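Verifying the calculated crowdedness against the webcam output could start with a correlation and a linear calibration, as in the sketch below. It assumes that predicted scores and webcam people counts are already paired by location and time; the function name `compare_with_webcam` is hypothetical.

```python
import numpy as np

# Hypothetical check: paired predicted scores and webcam people counts.


def compare_with_webcam(predicted, webcam_counts):
    """Return Pearson r and a least-squares line counts ~ slope * predicted + intercept."""
    predicted = np.asarray(predicted, dtype=float)
    counts = np.asarray(webcam_counts, dtype=float)
    r = np.corrcoef(predicted, counts)[0, 1]
    # np.polyfit returns coefficients from highest degree down: [slope, intercept]
    slope, intercept = np.polyfit(predicted, counts, deg=1)
    return r, slope, intercept
```

A high correlation would support the score, while the fitted slope and intercept could be used to translate scores into approximate head counts at the webcam locations.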