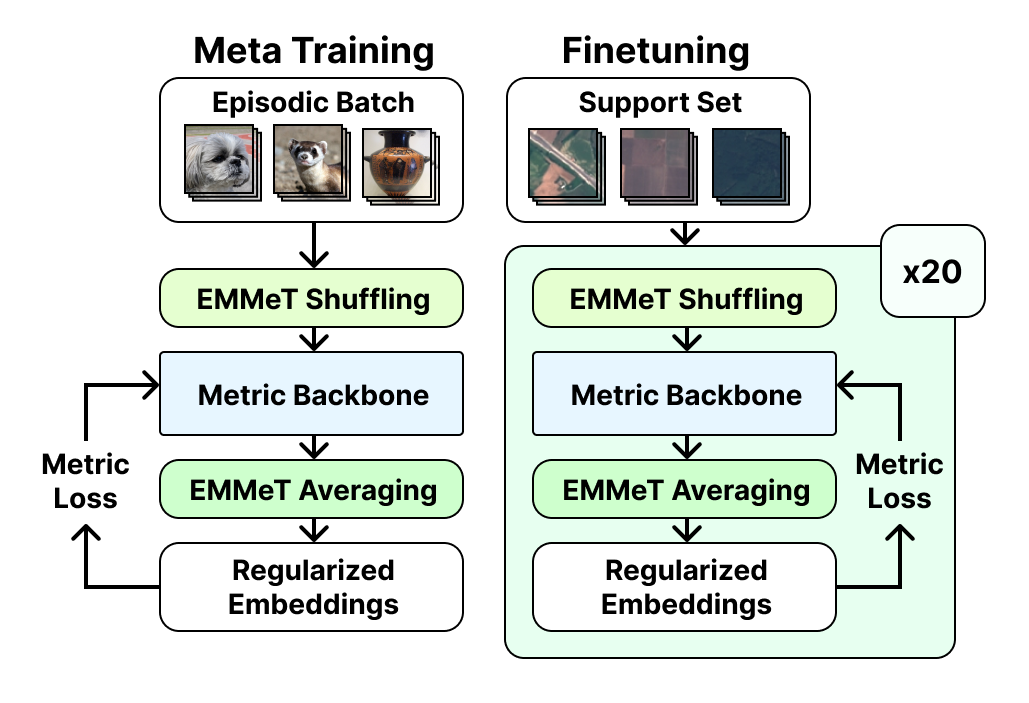

Embedding Mixup for Meta-Training (EMMeT) is a novel regularization technique that creates new episodic batches through an embedding shuffling and averaging regime.
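As a rough illustration of what "shuffling and averaging" embeddings could mean, the sketch below pairs each embedding in a batch with a randomly chosen partner and takes their element-wise mean. It is not the repository's implementation: the function name `mix_embeddings`, the equal 50/50 averaging weight, and the use of plain Python lists in place of tensors are all assumptions made for illustration.

```python
import random


def mix_embeddings(embeddings, rng=None):
    """Average each embedding with a randomly shuffled partner.

    Hypothetical helper for illustration only; not taken from the EMMeT code.
    `embeddings` is a list of equal-length lists of floats.
    """
    if rng is None:
        rng = random.Random()
    # Shuffle a list of indices so the input batch itself is left untouched.
    partners = list(range(len(embeddings)))
    rng.shuffle(partners)
    mixed = []
    for i, j in enumerate(partners):
        # Element-wise mean of embedding i and its shuffled partner j
        # (the 0.5 weight is an assumption, not a documented value).
        mixed.append([(a + b) / 2.0 for a, b in zip(embeddings[i], embeddings[j])])
    return mixed


batch = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
mixed_batch = mix_embeddings(batch, rng=random.Random(0))
```

The mixed batch has the same shape as the input, so in principle it could be fed to the same downstream loss as an additional episode.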

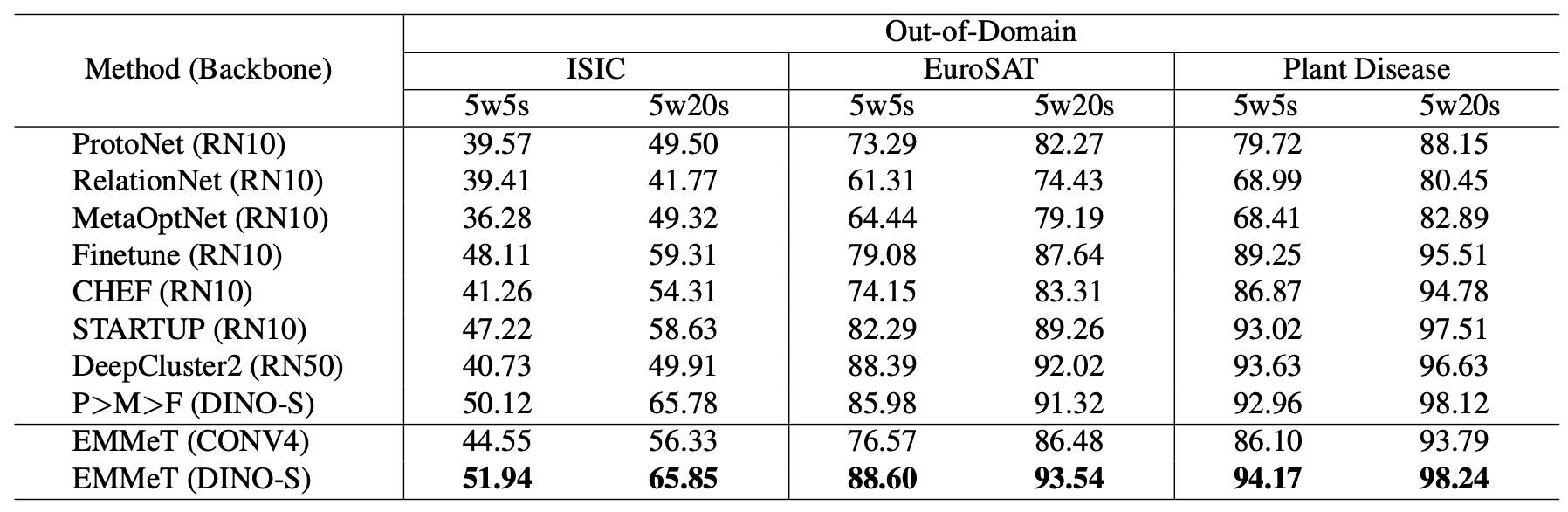

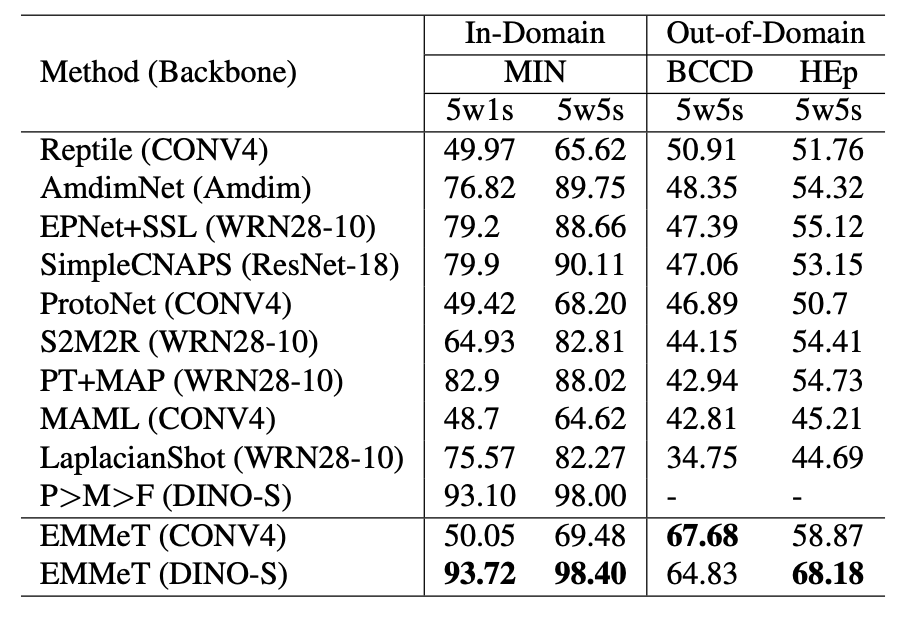

Test EMMeT on a range of out-of-domain and in-domain datasets.

Install the requirements necessary for using EMMeT. A virtual environment (or similar) is recommended.

    pip install -r requirements.txt

Datasets used in this work can be obtained at the following links:

After downloading all datasets, extract each dataset's folder into a single shared directory. This directory is the data root that you pass to the test script.

You can download EMMeT-applied pretrained weights from HuggingFace.

To use the pretrained weights, create a `models` folder at the root of this repo and place all pretrained weights in it.

Run `test_finetune.py` and specify the number of shots, the number of finetuning iterations, the image size, the model type, the path to the model weights, and the path to the root data directory (where you placed the downloaded datasets earlier). A 5-shot example run is shown below with a data root of `~/Data`:

    python3 test_finetune.py --root_data_path "~/Data" --test_iters 20 --shots 5 --img_size 84 --model_type CONV4_VANILLA --model_path "./models/conv4.pth"

To see the full list of available options, run the script with the help flag:

    python3 test_finetune.py --help
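
To repeat the evaluation across several shot settings, the example run can be assembled programmatically. The sketch below only builds argument lists from the flags shown in this README; the helper name `build_command`, its default values, and the example shot counts are assumptions. It expands `~` in Python because a quoted `"~/Data"` is not expanded by the shell, and whether `test_finetune.py` expands it internally is not stated here.

```python
import os


def build_command(shots, root_data_path="~/Data",
                  model_path="./models/conv4.pth",
                  test_iters=20, img_size=84, model_type="CONV4_VANILLA"):
    """Return the argument list for one test_finetune.py run.

    Hypothetical helper; the flags mirror the example command in this README.
    """
    return [
        "python3", "test_finetune.py",
        # Expand "~" here, since the shell will not do it inside quotes.
        "--root_data_path", os.path.expanduser(root_data_path),
        "--test_iters", str(test_iters),
        "--shots", str(shots),
        "--img_size", str(img_size),
        "--model_type", model_type,
        "--model_path", model_path,
    ]


# Example shot settings (assumed for illustration).
commands = [build_command(shots) for shots in (1, 5)]
for cmd in commands:
    # Print each command; pass `cmd` to subprocess.run(cmd, check=True) to execute it.
    print(" ".join(cmd))
```

Building the command as a list rather than a single string avoids quoting problems if the data root contains spaces.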