An Invariant Deep Learning RANS Closure Framework provides a unified way to interact with A curated dataset for data-driven turbulence modelling by McConkey et al. It covers data loading, preprocessing, model training and experimentation, inference, evaluation, and integration with OpenFOAM through exporting and post-processing OpenFOAM files.

The framework uses TensorFlow and Keras for the machine learning operations and scikit-learn for metrics generation. Plotting is done with Matplotlib.

The provided models leverage Galilean invariance when predicting the anisotropy tensor and an eddy viscosity. These predictions can then be injected into a converged RANS simulation using OpenFOAM v2006, which then converges towards the DNS velocity field.
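As a rough illustration of what Galilean invariance means for the model inputs, the sketch below checks that the strain-rate and rotation-rate tensors, which are built from velocity gradients, do not change when a constant velocity is added to the whole field. The velocity field, the helper names and the sample point are all hypothetical and are not part of ideal_rcf; this is plain Python so it runs without the framework installed.

```python
def velocity_gradient(u, v, x, y, h=1e-4):
    """Central-difference gradient [[du/dx, du/dy], [dv/dx, dv/dy]] at (x, y)."""
    return [
        [(u(x + h, y) - u(x - h, y)) / (2 * h), (u(x, y + h) - u(x, y - h)) / (2 * h)],
        [(v(x + h, y) - v(x - h, y)) / (2 * h), (v(x, y + h) - v(x, y - h)) / (2 * h)],
    ]


def strain_and_rotation(grad):
    """Split a 2x2 gradient into its symmetric (S) and antisymmetric (R) parts."""
    S = [[0.5 * (grad[i][j] + grad[j][i]) for j in range(2)] for i in range(2)]
    R = [[0.5 * (grad[i][j] - grad[j][i]) for j in range(2)] for i in range(2)]
    return S, R


# Hypothetical divergence-free velocity field, used only for illustration
def u(x, y):
    return x * y


def v(x, y):
    return -0.5 * y * y


# The same field with a constant velocity offset (a Galilean shift)
def u_shifted(x, y):
    return u(x, y) + 3.0


def v_shifted(x, y):
    return v(x, y) - 1.5


S, R = strain_and_rotation(velocity_gradient(u, v, 0.3, 0.7))
S_shifted, R_shifted = strain_and_rotation(
    velocity_gradient(u_shifted, v_shifted, 0.3, 0.7)
)

# Largest entry-wise change in S and R caused by the shift (rounding error only)
max_diff = max(
    abs(S[i][j] - S_shifted[i][j]) + abs(R[i][j] - R_shifted[i][j])
    for i in range(2)
    for j in range(2)
)
print(max_diff)
```

Because the offset cancels in every finite difference, any feature derived from S and R inherits the same invariance.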

The physics behind this framework can be found here. [TEMPORARY LINK]

Support for SSTBNNZ (a Semi-Supervised Zonal Approach) will be made available in the future.

conda create --name ML_Turb python=3.9

conda activate ML_Turb

pip install ideal-rcf

The original dataset can be downloaded directly from Kaggle:

kaggle datasets download -d ryleymcconkey/ml-turbulence-dataset

mkdir ml-turbulence-dataset

unzip ml-turbulence-dataset.zip -d ml-turbulence-dataset

The expanded dataset can be included with:

gdown "https://drive.google.com/uc?id=1rb2-7vJQtp_nLqxjmnGJI2aRQx8u9W6B"

unzip a_3_1_2_NL_S_DNS_eV.zip -d ml-turbulence-dataset/komegasst

The package is structured around three core objects: CaseSet, DataSet and FrameWork. A series of other modules provide extended functionality such as evaluation, visualization and integration with OpenFOAM, all of which interact with a CaseSet object.

Before starting, make sure that A curated dataset for data-driven turbulence modelling by McConkey et al. is present on your system. The version used in the present work was augmented using these tools and can be found here.

A CaseSet must be created via a SetConfig object, which contains the parameters to be loaded, such as features and labels.

from ideal_rcf.dataloader.config import SetConfig

from ideal_rcf.dataloader.caseset import CaseSet

set_config = SetConfig(...)

caseset_obj = CaseSet('PHLL_case_1p2', set_config=set_config)

View the creating_casesets.ipynb example for more.

A DataSet receives the same type of SetConfig as the CaseSet but handles additional parameters such as trainset, valset and testset, which are used to split the DataSet into the sets required for supervised training. The DataSet object fits and stores the scalers built from the trainset.

from ideal_rcf.dataloader.config import SetConfig

from ideal_rcf.dataloader.dataset import DataSet

set_config = SetConfig(...)

dataset_obj = DataSet(set_config=set_config)

train, val, test = dataset_obj.split_train_val_test()

View the creating_datasets.ipynb example for more.
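The idea of fitting scalers on the trainset only and reusing them for the other splits can be sketched in plain Python as follows. This is not the DataSet implementation: the TrainOnlyScaler class, the case labels and the feature values are hypothetical, and splitting by case name is an assumption made for the example.

```python
import statistics


class TrainOnlyScaler:
    """Hypothetical standard scaler for one feature column."""

    def fit(self, values):
        self.mean_ = statistics.fmean(values)
        # Fall back to 1.0 so a constant feature does not divide by zero
        self.std_ = statistics.pstdev(values) or 1.0
        return self

    def transform(self, values):
        return [(value - self.mean_) / self.std_ for value in values]


# Hypothetical feature values grouped by case name
cases = {
    "case_a": [1.0, 2.0],
    "case_b": [3.0, 4.0],
    "case_c": [2.5, 5.0],
    "case_d": [0.0, 6.0],
}
trainset, valset, testset = ["case_a", "case_b"], ["case_c"], ["case_d"]


def gather(names):
    """Concatenate the feature values of the named cases."""
    return [value for name in names for value in cases[name]]


train, val, test = gather(trainset), gather(valset), gather(testset)

# Statistics come from the training data only, so nothing leaks from val/test
scaler = TrainOnlyScaler().fit(train)
train_scaled = scaler.transform(train)
val_scaled = scaler.transform(val)
test_scaled = scaler.transform(test)
print(scaler.mean_, scaler.std_)
```

Storing the fitted scaler alongside the data means the same transformation can be reapplied at inference time without access to the training split.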

The FrameWork receives a ModelConfig obj which will determine the model to be used. Currently three models are supported:

- TBNN - Tensor Based Neural Networks - proposed originally by Ling et al. [paper] code

- eVTBNN - Effective Viscosity Tensor Based Neural Network - proposed by ... [paper][thesis][wiki]

- OeVNLTBNN - Optimal Eddy Viscosity + Non-Linear Tensor Based Neural Network

Each model builds on the previous one: an eVTBNN is a TBNN combined with an eVNN, while the OeVNLTBNN is an eVTBNN paired with an OeVNN.

View the creating_models.ipynb example for more.
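For readers new to tensor basis networks, the sketch below shows the combination step described by Ling et al.: scalar coefficients that depend only on invariants weight a set of basis tensors to form the anisotropy tensor. It is a simplified illustration rather than the ideal_rcf implementation. It uses only the first three of the ten basis tensors and two of the five invariants, and the toy_coefficients function is a hypothetical stand-in for the trained network.

```python
import math


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def transpose(a):
    return [list(row) for row in zip(*a)]


def trace(a):
    return a[0][0] + a[1][1] + a[2][2]


def tensor_basis(S, R):
    """First three basis tensors: S, SR - RS, S^2 - tr(S^2)/3 * I."""
    SR, RS, SS = matmul(S, R), matmul(R, S), matmul(S, S)
    third = trace(SS) / 3.0
    T2 = [[SR[i][j] - RS[i][j] for j in range(3)] for i in range(3)]
    T3 = [[SS[i][j] - (third if i == j else 0.0) for j in range(3)] for i in range(3)]
    return [S, T2, T3]


def invariants(S, R):
    """First two scalar invariants: tr(S^2) and tr(R^2)."""
    return [trace(matmul(S, S)), trace(matmul(R, R))]


def toy_coefficients(lam):
    # Hypothetical stand-in for the network that maps invariants to coefficients
    return [-0.09, 0.01 * lam[0], 0.02 * lam[1]]


def predict_b(S, R):
    """Anisotropy tensor as a coefficient-weighted sum of the basis tensors."""
    g = toy_coefficients(invariants(S, R))
    basis = tensor_basis(S, R)
    return [[sum(g[n] * basis[n][i][j] for n in range(3)) for j in range(3)] for i in range(3)]


def rotate(A, Q):
    """Express tensor A in a frame rotated by Q: Q A Q^T."""
    return matmul(matmul(Q, A), transpose(Q))


# Illustrative traceless strain-rate and antisymmetric rotation-rate tensors
S = [[0.5, 0.2, 0.0], [0.2, -0.3, 0.1], [0.0, 0.1, -0.2]]
R = [[0.0, 0.4, -0.1], [-0.4, 0.0, 0.3], [0.1, -0.3, 0.0]]

c, s = math.cos(0.4), math.sin(0.4)
Q = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]  # rotation about the z axis

b = predict_b(S, R)
b_from_rotated_inputs = predict_b(rotate(S, Q), rotate(R, Q))
b_rotated = rotate(b, Q)

# Predicting in the rotated frame equals rotating the prediction
max_err = max(abs(b_from_rotated_inputs[i][j] - b_rotated[i][j]) for i in range(3) for j in range(3))
print(max_err, trace(b))
```

Because the coefficients see only invariants and the basis tensors rotate with the frame, the prediction is consistent across rotated frames, and it stays symmetric and traceless by construction.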

from ideal_rcf.models.config import ModelConfig

from ideal_rcf.models.framework import FrameWork

TBNN_config = ModelConfig(

layers_tbnn=layers_tbnn,

units_tbnn=units_tbnn,

features_input_shape=features_input_shape,

tensor_features_input_shape=tensor_features_input_shape,

)

tbnn = FrameWork(TBNN_config)

tbnn.compile_models()

### access the compiled model (summary() prints by itself)

tbnn.models.tbnn.summary()

from ideal_rcf.models.config import ModelConfig

from ideal_rcf.models.framework import FrameWork

eVTBNN_config = ModelConfig(

layers_tbnn=layers_tbnn,

units_tbnn=units_tbnn,

features_input_shape=features_input_shape,

tensor_features_input_shape=tensor_features_input_shape,

layers_evnn=layers_evnn,

units_evnn=units_evnn,

tensor_features_linear_input_shape=tensor_features_linear_input_shape,

)

evtbnn = FrameWork(eVTBNN_config)

evtbnn.compile_models()

### access the compiled model (summary() prints by itself)

evtbnn.models.evtbnn.summary()

from ideal_rcf.models.config import ModelConfig

from ideal_rcf.models.framework import FrameWork

OeVNLTBNN_config = ModelConfig(

layers_tbnn=layers_tbnn,

units_tbnn=units_tbnn,

features_input_shape=features_input_shape,

tensor_features_input_shape=tensor_features_input_shape,

layers_evnn=layers_evnn,

units_evnn=units_evnn,

tensor_features_linear_input_shape=tensor_features_linear_input_shape,

layers_oevnn=layers_oevnn,

units_oevnn=units_oevnn,

tensor_features_linear_oev_input_shape=tensor_features_linear_oev_input_shape,

learning_rate=learning_rate,

learning_rate_oevnn=learning_rate_oevnn,

)

oevnltbnn = FrameWork(OeVNLTBNN_config)

oevnltbnn.compile_models()

### after training you can extract the oev model from the oevnn so that S_DNS is not required to run inference

### this is done automatically inside the train module

oevnltbnn.extract_oev()

### access the compiled models (summary() prints by itself)

oevnltbnn.models.oevnn.summary()

oevnltbnn.models.nltbnn.summary()

All models support the Mixer architecture, which is based on the concept introduced by Chen et al. in TSMixer: An All-MLP Architecture for Time Series Forecasting [code] and adapted to work with spatial features while preserving invariance. The architecture and its explanation are available in the [wiki].

from ideal_rcf.models.config import ModelConfig, MixerConfig

from ideal_rcf.models.framework import FrameWork

tbnn_mixer_config = MixerConfig(

features_mlp_layers=5,

features_mlp_units=150

)

TBNN_config = ModelConfig(

layers_tbnn=layers_tbnn,

units_tbnn=units_tbnn,

features_input_shape=features_input_shape,

tensor_features_input_shape=tensor_features_input_shape,

tbnn_mixer_config=tbnn_mixer_config

)

tbnn = FrameWork(TBNN_config)

tbnn.compile_models()

### access the compiled model (summary() prints by itself)

tbnn.models.tbnn.summary()

oevnltbnn.train(dataset_obj, train, val)

### the predictions are saved in the test object

oevnltbnn.inference(dataset_obj, test)

from ideal_rcf.infrastructure.evaluator import Evaluator

from sklearn.metrics import mean_squared_error, r2_score, mean_absolute_error

metrics_list = [mean_squared_error, r2_score, mean_absolute_error]

eval_instance = Evaluator(metrics_list)

eval_instance.calculate_metrics(test)

from ideal_rcf.foam.preprocess import FoamParser

### dump the predictions into OpenFOAM-compatible files

### PHLL_case_1p2 is expected to be a CaseSet object holding the predictions

foam = FoamParser(PHLL_case_1p2)

foam.dump_predictions(dir_path)

More use cases are covered in the examples directory:

- FrameWork Training

- Setting Up Cross Validation

- Train and Inference with Cross Validation

- Inference on a loaded DataSet and FrameWork, and exporting to OpenFOAM

- Post Processing resulting foam files

The solvers and configurations used for injecting the predictions are available here

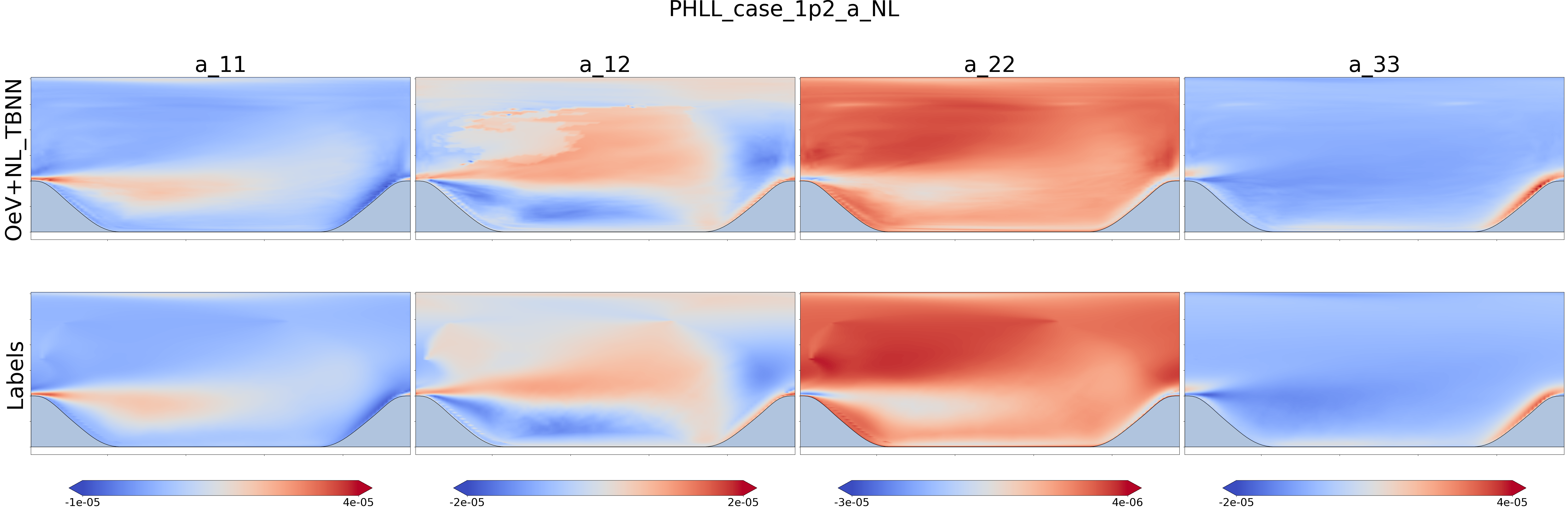

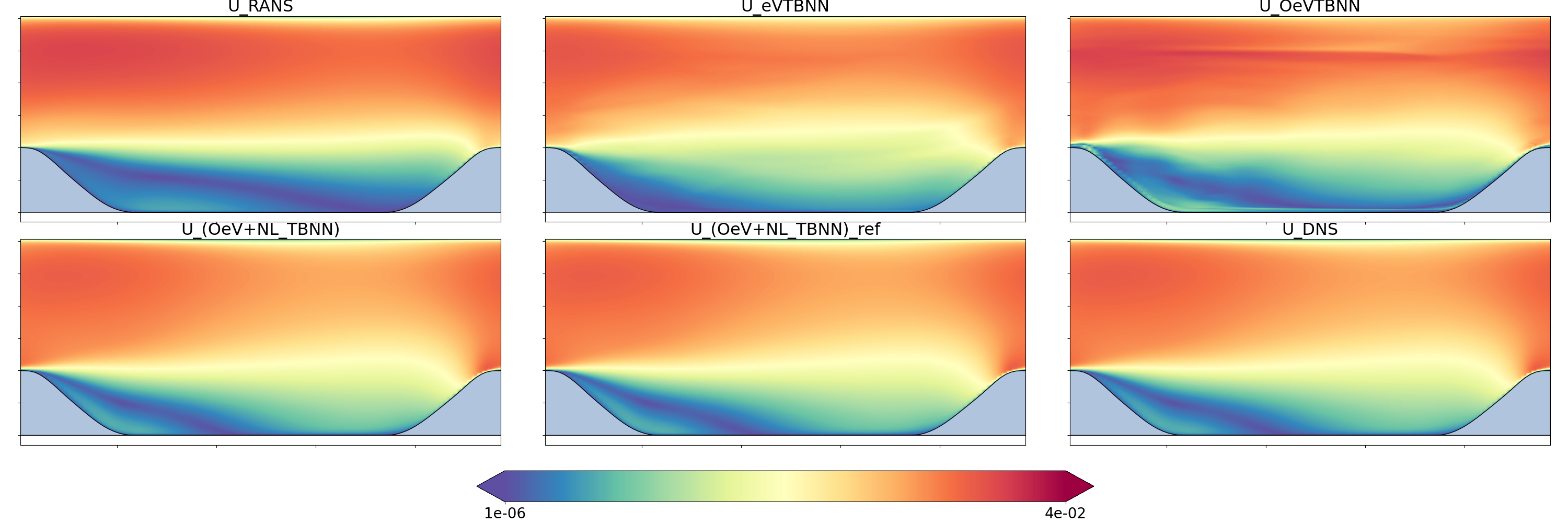

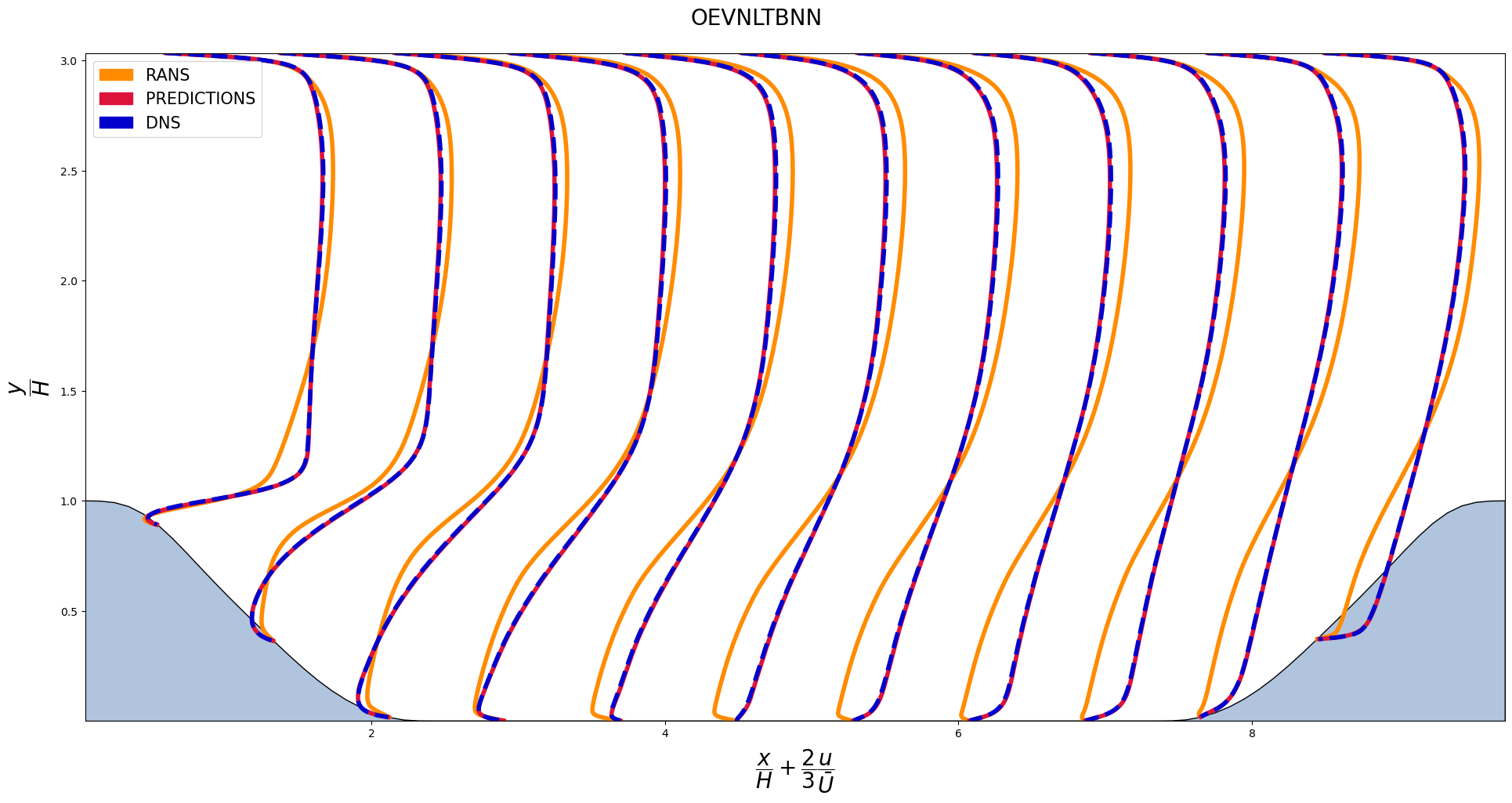

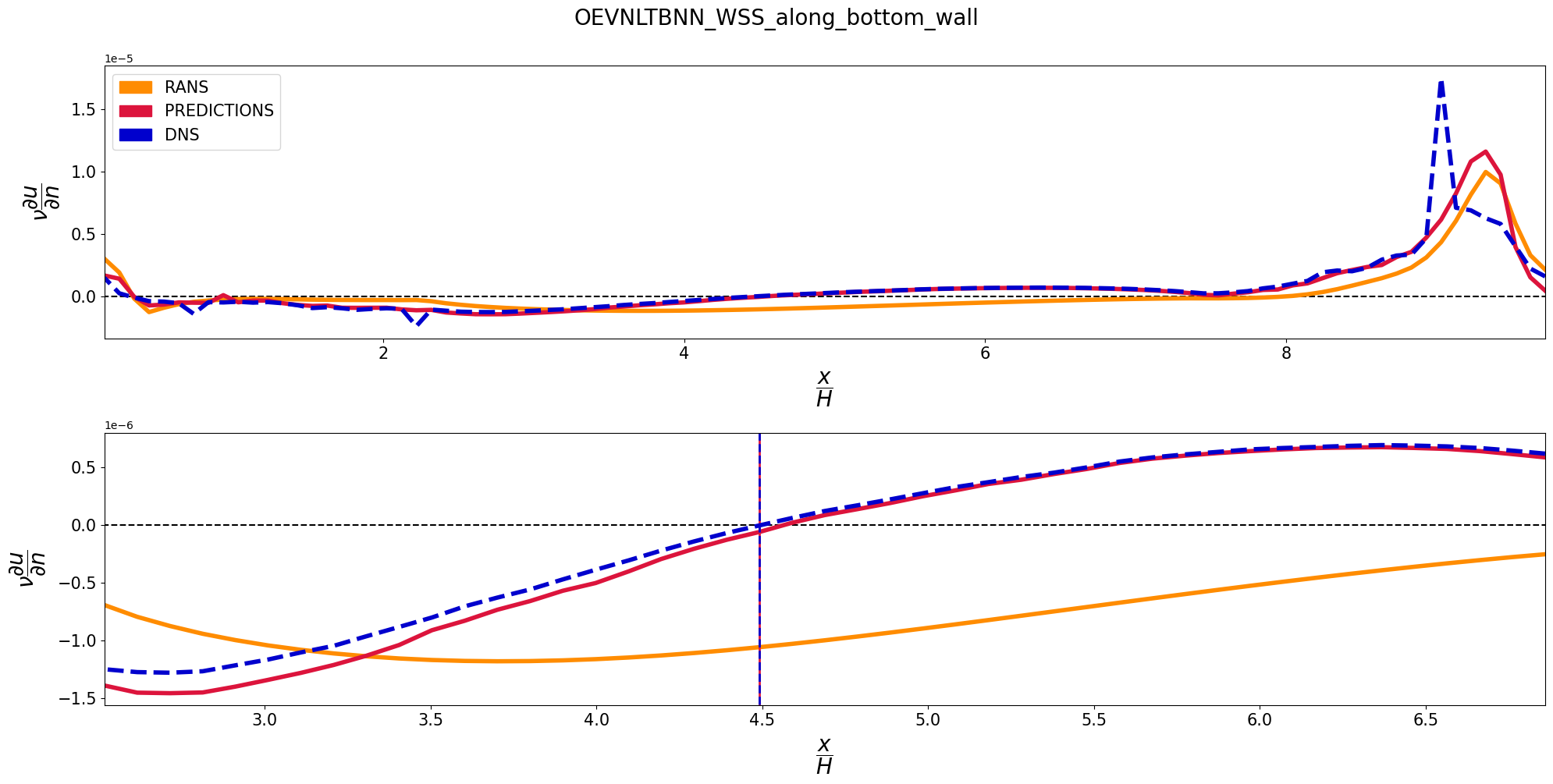

After injecting the predicted anisotropy fields into OpenFOAM, the converged velocity fields for the PHLL_case_1p2 test case perfectly match the averaged DNS field. The wall shear stress along the bottom wall and the reattachment length also match their DNS counterparts.
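One common way to estimate a reattachment point from wall shear stress data is to look for the location where the stress changes sign from negative (reversed flow) to positive. The sketch below does this with linear interpolation. The function name and the sample values are hypothetical, and it is not taken from the post-processing code in this repository.

```python
def reattachment_point(x, tau_w):
    """Return the first x where tau_w goes from negative to non-negative.

    Uses linear interpolation between neighbouring samples and returns
    None when no such sign change exists.
    """
    for i in range(len(x) - 1):
        if tau_w[i] < 0.0 <= tau_w[i + 1]:
            fraction = -tau_w[i] / (tau_w[i + 1] - tau_w[i])
            return x[i] + fraction * (x[i + 1] - x[i])
    return None


# Hypothetical wall shear stress samples along the bottom wall
x = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
tau_w = [0.002, -0.001, -0.003, -0.002, 0.002, 0.004]

x_reattach = reattachment_point(x, tau_w)
print(x_reattach)
```

Applying the same function to the predicted and DNS wall shear stress profiles gives two reattachment estimates that can be compared directly. Flows with secondary recirculation zones may need every sign change inspected rather than only the first.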