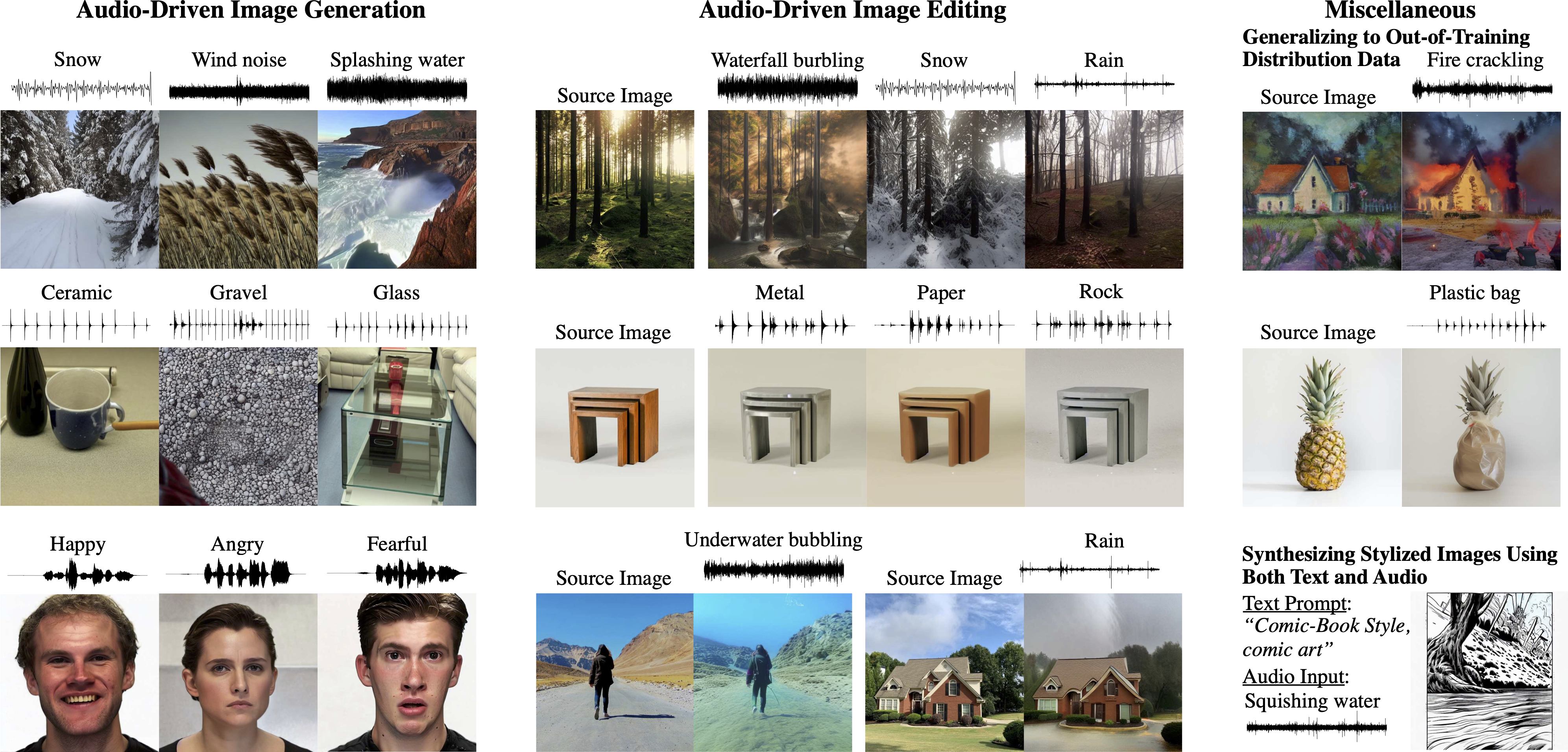

Official Implementation of SonicDiffusion: Audio-Driven Image Generation and Editing with Pretrained Diffusion Models.

03.05.2024: Our code and demo are released.

$ conda create -n "myenv" python=3.11

$ conda activate myenv

$ git clone git@github.com:BurakCanBiner/SonicDiffusion.git

$ cd SonicDiffusion

$ pip install -r requirements.txt

Pretrained models are available at the following link.

https://huggingface.co/spaces/burakcanbiner/SonicDiffusion/tree/main/ckpts

By default, we assume that all models are downloaded and saved to the directory ckpts.
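The download step can also be scripted. The following is a minimal sketch, not part of the official code: it assumes the `huggingface_hub` package is installed (it may not be listed in `requirements.txt`) and that the checkpoints sit under `ckpts/` in the Space linked above. The function names `download_checkpoints` and `list_checkpoints` are hypothetical helpers introduced only for illustration.

```python
from pathlib import Path


def download_checkpoints(target_root: str = ".") -> Path:
    """Fetch only the ckpts/ folder of the Space into <target_root>/ckpts."""
    # Imported lazily so list_checkpoints() works without huggingface_hub.
    from huggingface_hub import snapshot_download

    snapshot_download(
        repo_id="burakcanbiner/SonicDiffusion",  # taken from the link above
        repo_type="space",                       # the link points at a Space
        allow_patterns=["ckpts/*"],              # assumption: skip everything but checkpoints
        local_dir=target_root,
    )
    return Path(target_root) / "ckpts"


def list_checkpoints(ckpt_dir: str = "ckpts") -> list[str]:
    """Return the sorted file names in ckpt_dir, or [] if it does not exist."""
    path = Path(ckpt_dir)
    if not path.is_dir():
        return []
    return sorted(p.name for p in path.iterdir() if p.is_file())
```

Calling `download_checkpoints(".")` from the repository root should leave the files in `ckpts`, matching the default location assumed above, and `list_checkpoints()` gives a quick way to confirm that the directory is populated before running the demo.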

@misc{biner2024sonicdiffusion,
      title={SonicDiffusion: Audio-Driven Image Generation and Editing with Pretrained Diffusion Models},
      author={Burak Can Biner and Farrin Marouf Sofian and Umur Berkay Karakaş and Duygu Ceylan and Erkut Erdem and Aykut Erdem},
      year={2024},
      eprint={2405.00878},
      archivePrefix={arXiv},
      primaryClass={cs.CV}
}