Title: Mitigating Adversarial Vulnerability through Causal Parameter Estimation by Adversarial Double Machine Learning

Authors: Byung-Kwan Lee*, Junho Kim*, and Yong Man Ro (*: equal contribution)

Affiliation: School of Electrical Engineering, Korea Advanced Institute of Science and Technology (KAIST)

This is the official PyTorch implementation of Adversarial Double Machine Learning (ADML). ADML addresses class discrepancies and overfitting to non-vulnerable samples by building on double machine learning (DML), a state-of-the-art causal approach that relieves bias and overfitting in the model. The code is combined with the following state-of-the-art technologies to accelerate adversarial attacks and defenses for deep neural networks on the Volta GPU architecture:

- Distributed Data Parallel [link]

- Channel Last Memory Format [link]

- Mixed Precision Training [link]

- Mixed Precision + Adversarial Attack (based on torchattacks [link])

- Faster Adversarial Training for Large Dataset [link]

- Fast Forward Computer Vision (FFCV) [link]
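Channels-last format changes only how a 4-D image tensor is laid out in memory. The logical shape stays (N, C, H, W), but the data is stored as NHWC, so all channels of one pixel sit next to each other. In PyTorch this is selected with `x.to(memory_format=torch.channels_last)`, and `x.stride()` then reports strides like the ones computed below. The sketch uses only the standard library so it runs without PyTorch. It does not show how this repository applies the format.

```python
# Sketch: element strides of the same logical (N, C, H, W) tensor
# in the default contiguous (NCHW) layout vs. the channels-last (NHWC) layout.

def contiguous_strides(n, c, h, w):
    """Strides, in elements, of a row-major NCHW tensor."""
    return (c * h * w, h * w, w, 1)

def channels_last_strides(n, c, h, w):
    """Strides of the same logical tensor when it is stored as NHWC."""
    return (h * w * c, 1, w * c, c)

def offset(index, strides):
    """Flat memory offset of a logical (n, c, h, w) index."""
    return sum(i * s for i, s in zip(index, strides))

shape = (2, 3, 4, 5)
nchw = contiguous_strides(*shape)
nhwc = channels_last_strides(*shape)
print(nchw)  # (60, 20, 5, 1)
print(nhwc)  # (60, 1, 15, 3)

# Two channels of the same pixel are 20 elements apart in NCHW,
# but adjacent (1 element apart) in channels-last.
print(offset((0, 1, 2, 3), nchw) - offset((0, 0, 2, 3), nchw))  # 20
print(offset((0, 1, 2, 3), nhwc) - offset((0, 0, 2, 3), nhwc))  # 1
```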

Because of our computationally efficient settings, we have observed that altering or removing some components can make the performance fluctuate. These settings include distributed data parallel (DDP), mixed precision (both float32 and float16), conversion of the dataset to the FFCV format (.beton), and the computation procedures of the installed torch version. We are actively refining these components to address these engineering issues and to further optimize the computational environment.
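One plausible source of such fluctuations is that float16 has far less resolution than float32. The stdlib sketch below rounds values through IEEE 754 half precision using the `struct` module's `e` format. It only illustrates the arithmetic and does not reproduce the repository's mixed-precision training path. The 8/255 budget is a common L∞ choice assumed here for illustration.

```python
import struct

def to_float16(x):
    """Round a Python float to IEEE 754 half precision and back."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def to_float32(x):
    """Round a Python float to IEEE 754 single precision and back."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

# Near 1.0 the float16 spacing is 2**-10 (about 9.8e-4), so a 1e-4 update
# vanishes in float16 but survives in float32.
print(to_float16(1.0 + 1e-4))  # 1.0
print(to_float32(1.0 + 1e-4) == 1.0)  # False

# An L-inf budget of 8/255 is itself only approximately representable in float16.
eps = 8 / 255
print(abs(to_float16(eps) - eps) < 2 ** -16)  # True
```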

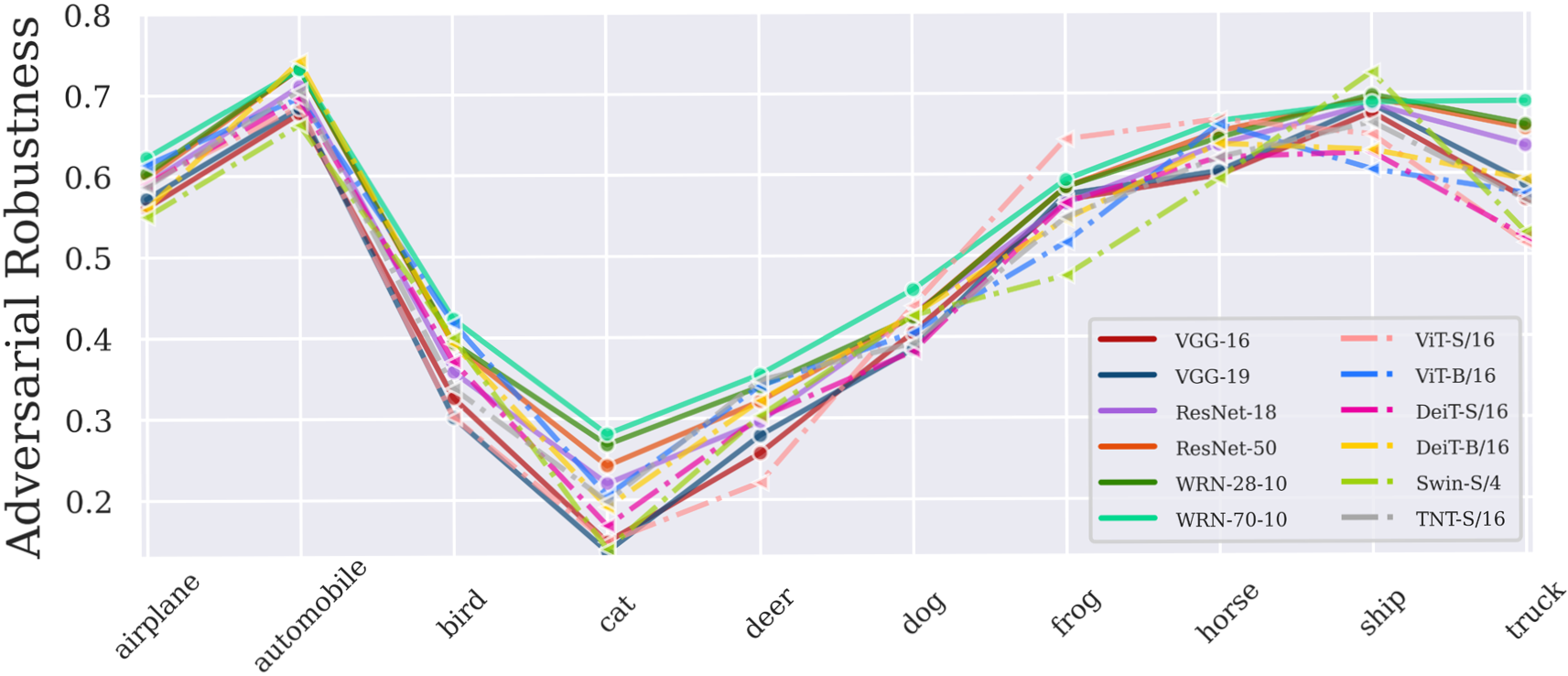

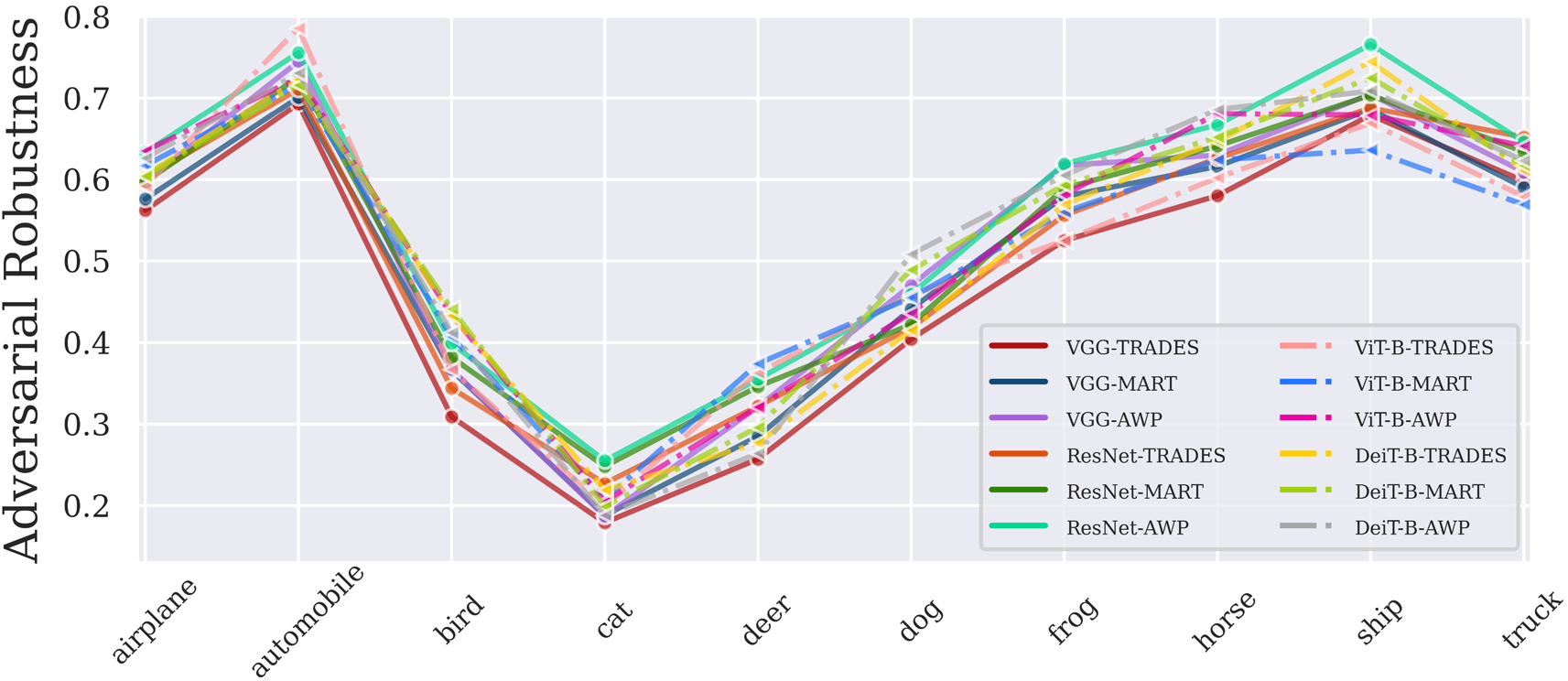

Adversarial examples derived from deliberately crafted perturbations on visual inputs can easily harm the decision process of deep neural networks. To prevent potential threats, various adversarial training-based defense methods have grown rapidly and become the de facto standard approach for robustness. Despite recent competitive achievements, we observe that adversarial vulnerability varies across targets and that certain vulnerabilities remain prevalent. Intriguingly, this phenomenon is not relieved even by deeper architectures or advanced defense methods, as shown in the following figures.

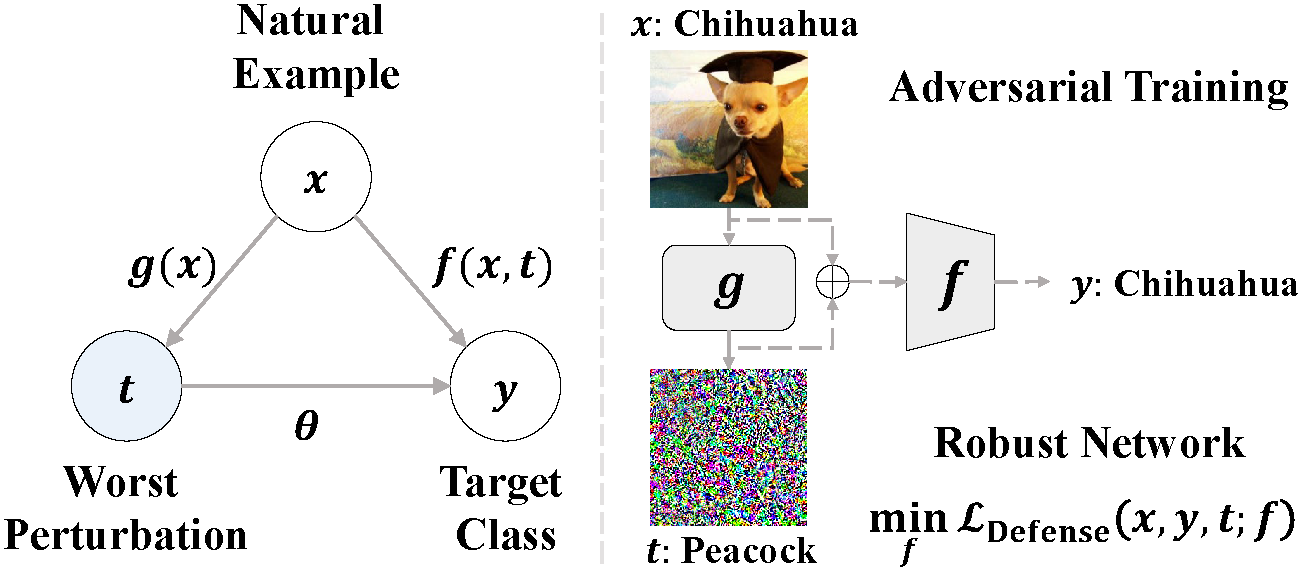
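One simple way to quantify how vulnerability varies across targets is per-class robust accuracy, meaning the fraction of adversarial examples of each true class that are still classified correctly. The helper name and the predictions below are hypothetical. The figures above may use a different measure.

```python
from collections import defaultdict

def per_class_robust_accuracy(labels, adv_preds):
    """Fraction of adversarial examples still classified correctly, per true class."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for y, p in zip(labels, adv_preds):
        total[y] += 1
        correct[y] += int(y == p)
    return {c: correct[c] / total[c] for c in sorted(total)}

# Hypothetical predictions on adversarial inputs for a 3-class problem.
labels = [0, 0, 0, 0, 1, 1, 1, 1, 2, 2, 2, 2]
adv_preds = [0, 0, 0, 1, 1, 2, 0, 2, 2, 2, 2, 2]

acc = per_class_robust_accuracy(labels, adv_preds)
print(acc)  # {0: 0.75, 1: 0.25, 2: 1.0}

# Vulnerability (1 - robust accuracy) differs sharply across classes.
print({c: 1 - a for c, a in acc.items()})
```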

To address this issue, we introduce a causal approach called Adversarial Double Machine Learning (ADML). ADML lets us quantify the degree of adversarial vulnerability for network predictions and capture the effect of treatments on the outcome of interest. This degree is denoted by theta in the figure below.

ADML directly estimates the causal parameter (theta) of the adversarial perturbations themselves and mitigates negative effects that can damage robustness. In doing so, it brings a causal perspective to adversarial vulnerability.

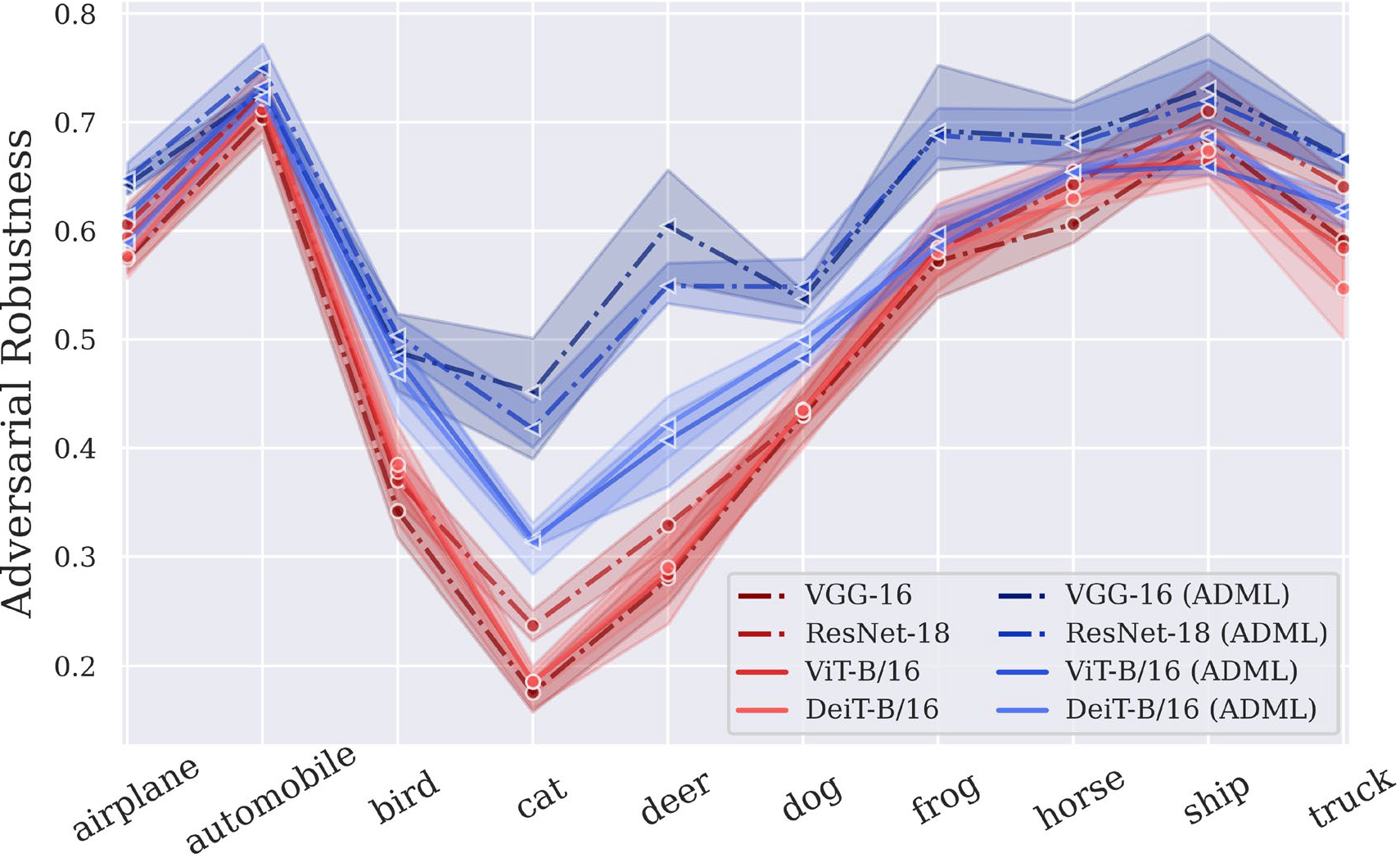
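To make the notion of a causal parameter concrete, consider standard double machine learning in its partially linear form: Y = theta·D + g(X) + noise, with D = m(X) + noise. Here theta is estimated in three steps:

1. Regress both D and Y on X, using cross-fitting.
2. Take the residuals of each regression.
3. Regress the outcome residuals on the treatment residuals.

The stdlib sketch below shows only this generic DML idea, using synthetic data and linear nuisance models. It is not ADML's estimator, in which the treatment is the adversarial perturbation and the nuisance models are networks. All names and data here are illustrative assumptions.

```python
import random

def fit_line(xs, ys):
    """Ordinary least squares for y ~ a + b*x; returns (a, b)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b

def dml_theta(X, D, Y, n_folds=2):
    """Cross-fitted partialling-out estimate of theta in Y = theta*D + g(X) + noise."""
    n = len(X)
    folds = [list(range(k, n, n_folds)) for k in range(n_folds)]
    res_d, res_y = [], []
    for k, test in enumerate(folds):
        train = [i for j, fold in enumerate(folds) if j != k for i in fold]
        # Nuisance regressions E[D|X] and E[Y|X], fit on the other folds only.
        a_m, b_m = fit_line([X[i] for i in train], [D[i] for i in train])
        a_l, b_l = fit_line([X[i] for i in train], [Y[i] for i in train])
        for i in test:
            res_d.append(D[i] - (a_m + b_m * X[i]))  # treatment residual
            res_y.append(Y[i] - (a_l + b_l * X[i]))  # outcome residual
    # Residual-on-residual regression (no intercept) gives theta.
    return sum(v * u for v, u in zip(res_d, res_y)) / sum(v * v for v in res_d)

def naive_theta(D, Y):
    """Slope of Y on D alone, ignoring the confounder X."""
    return fit_line(D, Y)[1]

# Synthetic data with true theta = 2.0; X confounds both D and Y.
rng = random.Random(0)
X = [rng.gauss(0, 1) for _ in range(5000)]
D = [0.8 * x + rng.gauss(0, 1) for x in X]
Y = [2.0 * d + 1.5 * x + rng.gauss(0, 1) for d, x in zip(D, X)]

print(round(naive_theta(D, Y), 2))  # biased upward (about 2.7 in expectation)
print(round(dml_theta(X, D, Y), 2))  # close to 2.0
```

Partialling X out of both D and Y removes the confounding bias that the naive slope keeps. Cross-fitting then prevents each nuisance fit from overfitting the very samples it residualizes.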

Through the figure above and extensive experiments on various CNN and Transformer architectures, we corroborate that ADML improves adversarial robustness by large margins and relieves the observed phenomenon. Many more empirical results can be found in our ICCV 2023 paper.

.
├── attack
│ ├── fastattack.py # Adversarial Attack Loader
│ └── libfastattack # Adversarial Attack Library
│
├── utils
│ ├── fast_data_utils.py # FFCV DataLoader
│ ├── fast_network_utils.py # Network Loader
│ ├── scheduler.py # Transformer Scheduler
│ └── utils.py # Numerous Utility Tools
│
├── models
│ ├── vgg.py # VGG
│ ├── resnet.py # ResNet
│ ├── wide.py # WideResNet
│ ├── vision_transformer.py # ViT
│ └── distill_transformer.py # DeiT
│
├── defense # AT-based Defense Methods
│ ├── fast_train_adv.py # Standard Adversarial Training (AT)
│ ├── fast_train_trades.py # TRADES
│ ├── fast_train_mart.py # MART
│ └── fast_train_awp.py # AWP
│
├── adml # Proposed Method
│ ├── fast_train_adml_adv.py # ADML-ADV
│ ├── fast_train_adml_trades.py # ADML-TRADES
│ ├── fast_train_adml_mart.py # ADML-MART
│ └── fast_train_adml_awp.py # ADML-AWP
│
├── fast_dataset_converter.py # Dataset Converter for .beton format
├── fast_train_standard.py # Standard Training
├── requirements.txt # Requirement Packages
└── README.md # This README File

Please check the settings below to run this code successfully. If any are missing, follow the steps one by one and fill in the checklist as you go.

- To use FFCV [link], install it in a conda virtual environment. I use Python 3.9, PyTorch, torchvision, and CUDA 11.3. For other versions, refer to the official PyTorch site [link].

conda create -y -n ffcv python=3.9 cupy pkg-config compilers libjpeg-turbo opencv pytorch torchvision cudatoolkit=11.3 numba cudnn -c pytorch -c conda-forge

- Activate the created conda environment.

conda activate ffcv

- Install FFCV with pip, together with torchattacks [link] for running adversarial attacks.

pip install ffcv torchattacks

- To ensure the code runs, also install the libraries listed in requirements.txt (matplotlib, tqdm).

pip install -r requirements.txt

- To address the biased GPU allocation problem, download ffcv version 1.0.0 or 0.4.0. Then copy all files in the ffcv folder of the download into the installed ffcv package (necessary!):

paste into this path: /home/$username/anaconda3/envs/$env_name/lib/$python_version/site-packages/ffcv (e.g., $env_name = ffcv)
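The checklist and the copy step above can also be done from Python instead of hard-coding the anaconda path. The sketch below makes three assumptions:

- the helper names are hypothetical;
- the installed package directory is located with `importlib.util.find_spec("ffcv")`, falling back to the environment's `platlib` site-packages;
- the downloaded source folder path is a placeholder you must fill in.

```python
import importlib.util
import os
import shutil
import sysconfig

def missing_packages(names):
    """Return the names that cannot be found in the current environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]

def installed_ffcv_dir():
    """Directory of the installed ffcv package, or where it would live in site-packages."""
    spec = importlib.util.find_spec("ffcv")
    if spec is not None and spec.submodule_search_locations:
        return list(spec.submodule_search_locations)[0]
    return os.path.join(sysconfig.get_paths()["platlib"], "ffcv")

def overwrite_ffcv(downloaded_ffcv_dir, target_dir=None):
    """Copy every file of a downloaded ffcv folder over the installed package (Python 3.8+)."""
    target = target_dir or installed_ffcv_dir()
    shutil.copytree(downloaded_ffcv_dir, target, dirs_exist_ok=True)
    return target

if __name__ == "__main__":
    print("missing:", missing_packages(["ffcv", "torchattacks", "matplotlib", "tqdm"]))
    print("ffcv package dir:", installed_ffcv_dir())
    # Hypothetical path to the ffcv folder inside the downloaded 1.0.0 or 0.4.0 release:
    # overwrite_ffcv("/path/to/downloaded/ffcv/ffcv")
```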

- First, run fast_dataset_converter.py to generate the dataset in the .beton format used by FFCV, instead of using the original dataset [FFCV]:

# Future import build
from __future__ import print_function

# Import built-in module
import os
import argparse

# fetch args
parser = argparse.ArgumentParser()

# parameter
parser.add_argument('--dataset', default='cifar10', type=str)
parser.add_argument('--gpu', default='0', type=str)
args = parser.parse_args()

# GPU configurations (set before the import below)
os.environ["CUDA_VISIBLE_DEVICES"] = args.gpu

# init fast dataloader
from utils.fast_data_utils import save_data_for_beton

save_data_for_beton(dataset=args.dataset)

- Second, run fast_train_standard.py (Standard Training) or fast_train_adv.py (Standard Adversarial Training).

- Third, run fast_test_robustness.py to evaluate adversarial robustness.

Refer to 'utils/fast_data_utils.py' and 'utils/fast_network_utils.py' for the supported datasets and networks. Available networks:

- VGG (models/vgg.py)
- ResNet (models/resnet.py)
- WideResNet (models/wide.py)
- ViT (models/vision_transformer.py)
- DeiT (models/distill_transformer.py)

Available adversarial attacks (implemented with mixed precision + torchattacks):

- FGSM (attack/libfastattack/FastFGSM.py)
- PGD (attack/libfastattack/FastPGD.py)
- CW (attack/libfastattack/FastCWLinf.py)
- AP (attack/libfastattack/APGD.py)
- DLR (attack/libfastattack/APGD.py)
- AA (attack/libfastattack/AutoAttack.py)
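FGSM and PGD share a signed-gradient update that the other L∞ attacks build on. The repository's versions run on PyTorch tensors with mixed precision and are not reproduced here. Instead, this framework-free sketch applies FGSM and L∞ PGD to a hypothetical linear classifier with logistic loss. The values eps = 8/255 and alpha = 2/255 are common CIFAR-10 choices assumed for illustration, not values taken from this repository.

```python
import math
import random

def margin(w, x, y):
    """Signed margin y * <w, x> for a label y in {-1, +1}."""
    return y * sum(wi * xi for wi, xi in zip(w, x))

def loss(w, x, y):
    """Logistic loss log(1 + exp(-margin))."""
    return math.log1p(math.exp(-margin(w, x, y)))

def grad_x(w, x, y):
    """Gradient of the logistic loss with respect to the input x."""
    s = 1.0 / (1.0 + math.exp(margin(w, x, y)))  # sigmoid(-margin)
    return [-y * wi * s for wi in w]

def sign(v):
    return (v > 0) - (v < 0)

def clip(v, lo, hi):
    return max(lo, min(hi, v))

def fgsm(w, x, y, eps):
    """One signed-gradient step of size eps, kept in the [0, 1] pixel range."""
    g = grad_x(w, x, y)
    return [clip(xi + eps * sign(gi), 0.0, 1.0) for xi, gi in zip(x, g)]

def pgd(w, x, y, eps, alpha, steps, rng):
    """L-inf PGD: random start, signed steps of size alpha, projection onto the eps-ball."""
    x_adv = [clip(xi + rng.uniform(-eps, eps), 0.0, 1.0) for xi in x]
    for _ in range(steps):
        g = grad_x(w, x_adv, y)
        x_adv = [xa + alpha * sign(gi) for xa, gi in zip(x_adv, g)]
        # Project back onto the eps-ball around x, then onto valid pixel values.
        x_adv = [clip(clip(xa, xi - eps, xi + eps), 0.0, 1.0) for xa, xi in zip(x_adv, x)]
    return x_adv

w, x, y = [1.0, -2.0, 0.5], [0.5, 0.4, 0.6], 1
eps, alpha = 8 / 255, 2 / 255
x_fgsm = fgsm(w, x, y, eps)
x_pgd = pgd(w, x, y, eps, alpha, steps=10, rng=random.Random(0))
print(loss(w, x, y), loss(w, x_fgsm, y), loss(w, x_pgd, y))
print(max(abs(a - b) for a, b in zip(x_pgd, x)) <= eps + 1e-12)  # True
```

For a linear model the worst case inside the box is a corner, so PGD ends where FGSM lands. On real networks the loss is non-linear, and the iterated, projected steps of PGD typically find stronger perturbations than a single FGSM step.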

To run a script in the 'defense' folder, first move it out of the folder into the repository root and run it there; otherwise it fails with a system path error.

- AT (fast_train_adv.py)
- TRADES (fast_train_trades.py)
- MART (fast_train_mart.py)
- AWP (fast_train_awp.py)

To run a script in the 'adml' folder, first move it out of the folder into the repository root and run it there; otherwise it fails with a system path error.

- ADML-ADV (adml/fast_train_adml_adv.py)
- ADML-TRADES (adml/fast_train_adml_trades.py)
- ADML-MART (adml/fast_train_adml_mart.py)
- ADML-AWP (adml/fast_train_adml_awp.py)
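Assume the system path error is an ImportError for top-level packages such as utils or models. That would happen because running `python defense/x.py` puts the script's own folder, not the repository root, at the front of sys.path. Under that assumption, an alternative to moving files is to prepend the repository root at the top of the script. The helper names below are hypothetical.

Running `python -m defense.fast_train_adv` from the repository root should also put the root on sys.path. However, whether the scripts' other relative paths then resolve has not been checked.

```python
import os
import sys

def repo_root_from(script_path):
    """Parent of the folder that contains the script, e.g. <repo>/defense/x.py -> <repo>."""
    return os.path.dirname(os.path.dirname(os.path.abspath(script_path)))

def ensure_repo_root_on_path(script_path):
    """Put the repository root first on sys.path so `from utils... import ...` can resolve."""
    root = repo_root_from(script_path)
    if root not in sys.path:
        sys.path.insert(0, root)
    return root

# Hypothetical use at the very top of, e.g., defense/fast_train_adv.py,
# before any `from utils...` or `from models...` import:
#     ensure_repo_root_on_path(__file__)

print(repo_root_from("/repo/defense/fast_train_adv.py"))  # /repo  (POSIX paths)
```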

To use other datasets, first download them and convert them to the FFCV format. You can then run our code in the same way as described above.