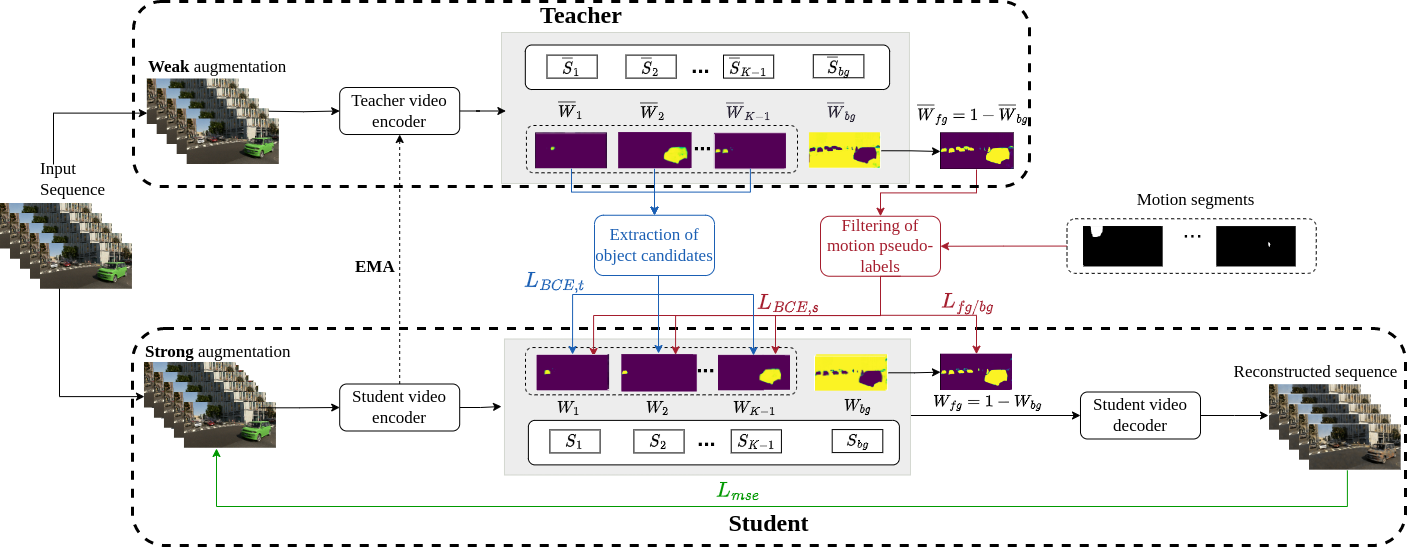

This repository is the official implementation of DIOD: Self-Distillation Meets Object Discovery, published at CVPR 2024.

Use the following command to create the environment from the DIOD_env.yml file:

    conda env create -f DIOD_env.yml

To train or evaluate DIOD, please download the required datasets along with the pseudo-labels shared by DOM:

- TRI-PD

- KITTI-train and KITTI-test

On TRI-PD:

- For the burn-in phase, run:

      # Set start_teacher > num_epochs so that distillation does not run, for example:
      python trainPD_ts.py --num_epochs 500 \
          --start_teacher 501

- For teacher-student training, run:

      python trainPD_ts.py --start_teacher 0 \
          --burn_in_exp 'your_burn_in_experiment_directory' \
          --burn_in_ckpt 'ckpt_name'

- The configuration we used is set as the default values.

- We provide here our model checkpoint at the end of burn-in. It can be used to run distillation directly. Example:

      python trainPD_ts.py --start_teacher 0 \
          --burn_in_exp 'checkpoints' \
          --burn_in_ckpt 'DIODPD_burn_in_400.ckpt'
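The two TRI-PD phases above can also be scripted. The following is a minimal sketch and not part of the repository: the helper names `burn_in_command`, `distillation_command`, and `skips_distillation` are hypothetical, and only the script name and flags shown above are used. It builds the argument lists without launching any training.

```python
import shlex


def skips_distillation(start_teacher: int, num_epochs: int) -> bool:
    """Rule stated above: start_teacher > num_epochs means no distillation."""
    return start_teacher > num_epochs


def burn_in_command(num_epochs: int = 500, script: str = "trainPD_ts.py") -> list:
    """Build a burn-in command; start_teacher is set just past num_epochs."""
    start_teacher = num_epochs + 1  # assumption: any value > num_epochs works
    return ["python", script,
            "--num_epochs", str(num_epochs),
            "--start_teacher", str(start_teacher)]


def distillation_command(burn_in_exp: str, burn_in_ckpt: str,
                         script: str = "trainPD_ts.py") -> list:
    """Build a teacher-student command that starts from a burn-in checkpoint."""
    return ["python", script,
            "--start_teacher", "0",
            "--burn_in_exp", burn_in_exp,
            "--burn_in_ckpt", burn_in_ckpt]


if __name__ == "__main__":
    # Print the two commands in shell form instead of running them.
    print(shlex.join(burn_in_command(500)))
    print(shlex.join(distillation_command("checkpoints", "DIODPD_burn_in_400.ckpt")))
```

To launch a phase for real, a list returned by these helpers could be passed to `subprocess.run(cmd, check=True)` from the repository root.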

On KITTI:

Models trained on KITTI are initialized from a TRI-PD experiment, so for KITTI, directly run:

    python trainKITTI_ts.py --start_teacher 0 \
        --burn_in_exp 'TRI-PD_experiment_ckpt_directory' \
        --burn_in_ckpt 'ckpt_name'

- The configuration we used is set as the default values.

- Example using our checkpoint DIODPD_500.ckpt provided here:

      python trainKITTI_ts.py --start_teacher 0 \
          --burn_in_exp 'checkpoints' \
          --burn_in_ckpt 'DIODPD_500.ckpt'

- We provide here the checkpoints for our trained models.

For fg-ARI and all-ARI, run:

    python evalPD_ARI.py     # for TRI-PD
    python evalKITTI_ARI.py  # for KITTI

For F1 score, run:

    python evalPD_F1_score.py     # for TRI-PD
    python evalKITTI_F1_score.py  # for KITTI

DIOD_DINOv2 is the version of DIOD that uses a ViT-S14 backbone pre-trained with DINOv2. Inside this directory, you can use the same commands provided above to run this version.
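For readers unfamiliar with the fg-ARI and all-ARI metrics reported by the evaluation scripts above, here is a minimal sketch of the standard Adjusted Rand Index on flattened per-pixel labels. It is not taken from `evalPD_ARI.py` or `evalKITTI_ARI.py`, whose exact implementation may differ. The function names and the `background_id=0` convention are assumptions made for illustration. fg-ARI is computed here in the usual way, by keeping only pixels whose ground-truth label is not background.

```python
from collections import Counter
from math import comb


def adjusted_rand_index(true_labels, pred_labels):
    """Standard ARI between two labelings of the same items (e.g. pixels)."""
    if len(true_labels) != len(pred_labels):
        raise ValueError("labelings must have the same length")
    total_pairs = comb(len(true_labels), 2)
    if total_pairs == 0:
        return 1.0  # fewer than two items: treated as trivially perfect
    # Pair counts from the contingency table and from its marginals.
    sum_cells = sum(comb(c, 2) for c in Counter(zip(true_labels, pred_labels)).values())
    sum_true = sum(comb(c, 2) for c in Counter(true_labels).values())
    sum_pred = sum(comb(c, 2) for c in Counter(pred_labels).values())
    expected = sum_true * sum_pred / total_pairs
    max_index = 0.5 * (sum_true + sum_pred)
    if max_index == expected:
        return 1.0  # degenerate case, e.g. a single cluster on both sides
    return (sum_cells - expected) / (max_index - expected)


def fg_ari(true_labels, pred_labels, background_id=0):
    """ARI restricted to ground-truth foreground pixels (assumed convention)."""
    keep = [i for i, t in enumerate(true_labels) if t != background_id]
    return adjusted_rand_index([true_labels[i] for i in keep],
                               [pred_labels[i] for i in keep])


if __name__ == "__main__":
    gt = [0, 0, 1, 1, 2, 2]    # 0 is background, 1 and 2 are objects
    pred = [3, 4, 7, 7, 9, 9]  # objects recovered, background over-segmented
    print("all-ARI:", adjusted_rand_index(gt, pred))
    print("fg-ARI:", fg_ari(gt, pred))
```

In this toy example the objects are segmented perfectly while the background is split in two, so fg-ARI is higher than all-ARI. This is why the two scores are usually reported together.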

This code is built upon the DOM codebase, and uses pre-trained models from DINOv2. We thank the authors for their great work and for sharing their code/pre-trained models.

    @inproceedings{kara2024diod,
      title={DIOD: Self-Distillation Meets Object Discovery},
      author={Kara, Sandra and Ammar, Hejer and Denize, Julien and Chabot, Florian and Pham, Quoc-Cuong},
      booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
      pages={3975--3985},
      year={2024}
    }

This project is under the CeCILL license 2.1.