[arXiv 2024] From Parts to Whole: A Unified Reference Framework for Controllable Human Image Generation

Preparing the stable model... Stay tuned!

🏠 Project Page | Paper

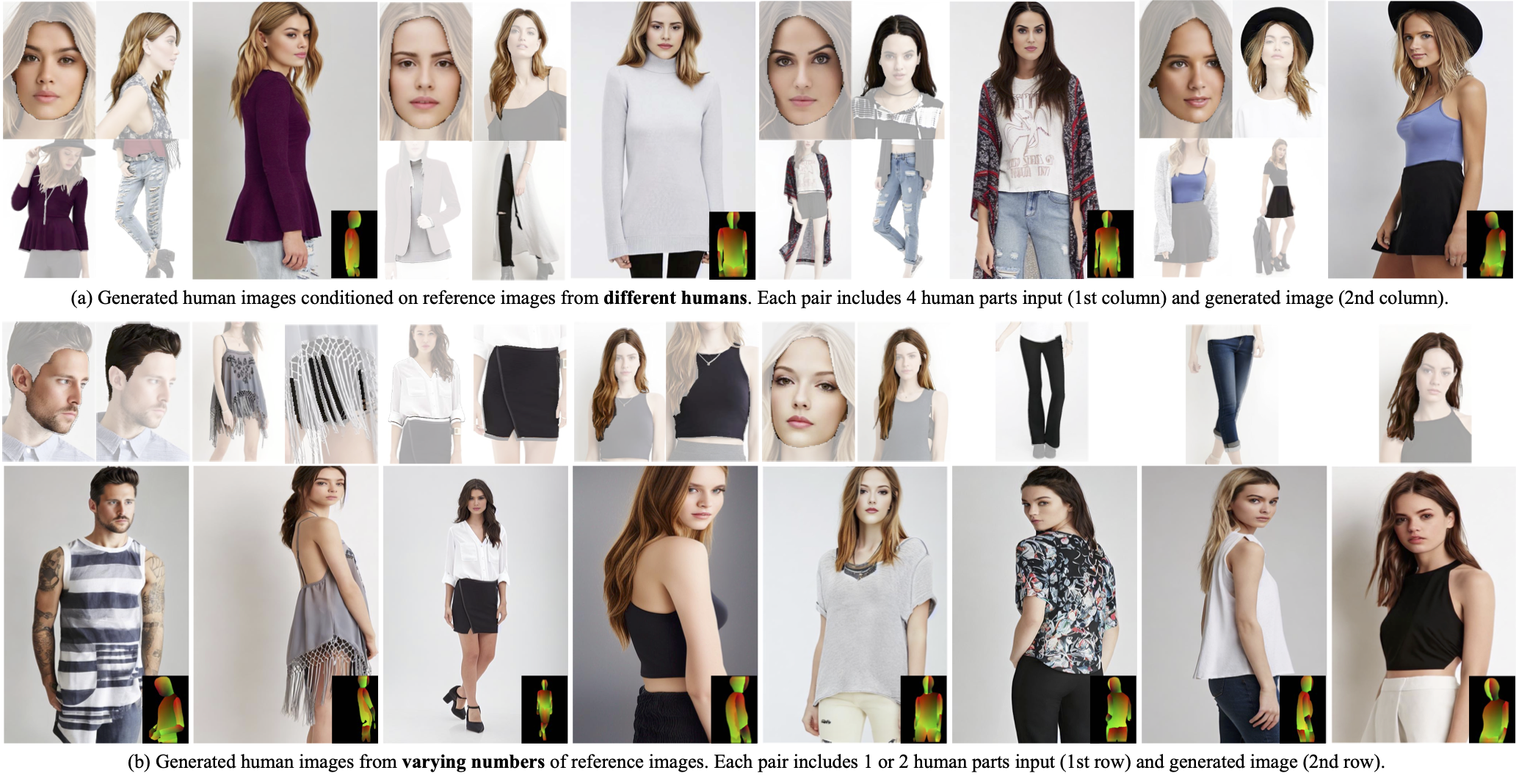

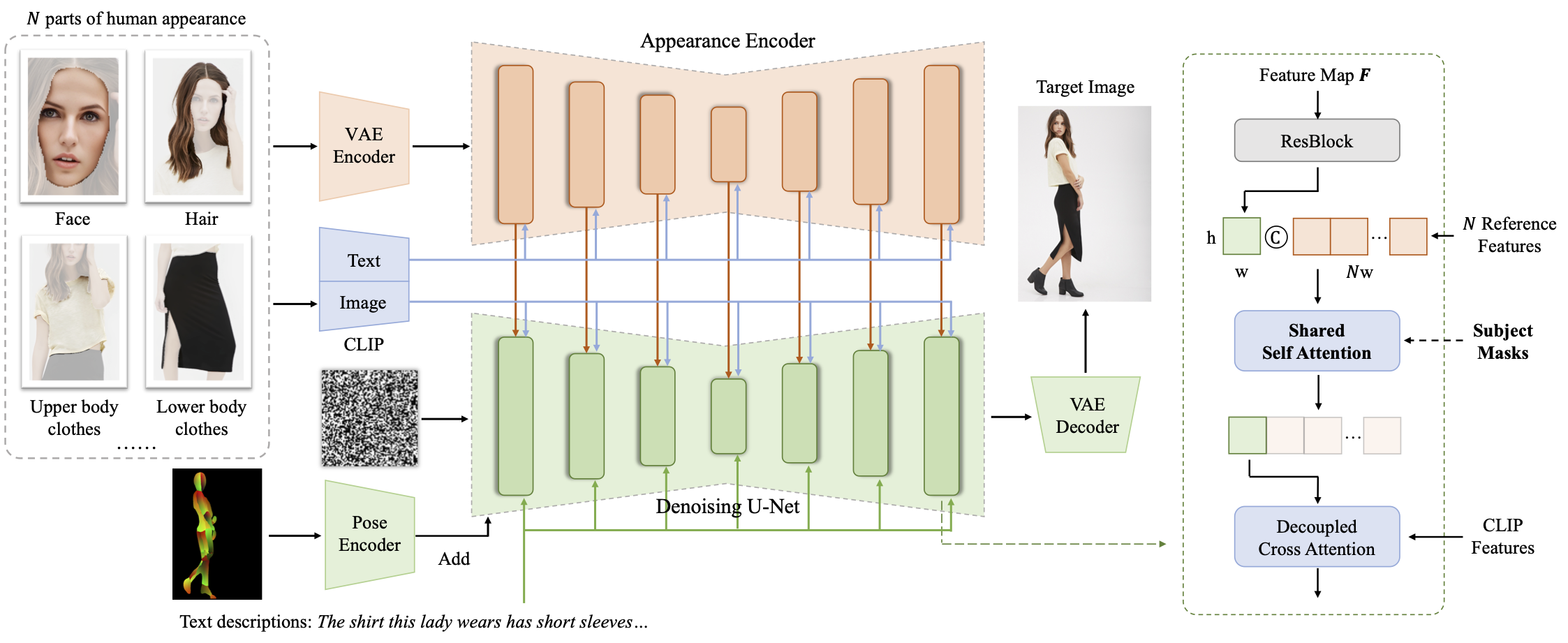

Abstract: We propose Parts2Whole, a novel framework for generating customized portraits from multiple reference images, including pose images and various aspects of human appearance. First, we develop a semantic-aware appearance encoder that retains the details of different human parts: it encodes each image, conditioned on its textual label, into a series of multi-scale feature maps rather than a single image token, preserving the image's spatial dimensions. Second, our framework supports multi-image conditioned generation through a shared self-attention mechanism that operates across reference and target features during the diffusion process. We enhance the vanilla attention mechanism by incorporating mask information from the reference human images, allowing precise selection of any part.
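To make the shared, mask-aware attention idea more concrete, here is a minimal pure-Python sketch built on our own assumptions. It is not the authors' implementation. It uses a single head with identity query/key/value projections, whereas real diffusion models use learned projections and batched multi-head tensors inside the UNet. The name `shared_masked_attention` is hypothetical. Each target token attends jointly over the target tokens and the reference tokens. Reference tokens outside the selected part mask receive a logit of negative infinity, so they get zero attention weight.

```python
import math
from typing import List, Sequence

Vector = List[float]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def shared_masked_attention(
    target: List[Vector],
    reference: List[Vector],
    ref_mask: List[bool],
) -> List[Vector]:
    """Hypothetical single-head shared self-attention (identity Q/K/V projections).

    Queries come from the target tokens only; keys/values are the target tokens
    concatenated with the reference tokens. Reference tokens whose mask entry is
    False are excluded by giving them a -inf logit before the softmax.
    """
    if not target:
        raise ValueError("need at least one target token")
    if len(reference) != len(ref_mask):
        raise ValueError("ref_mask must have one entry per reference token")

    keys = target + reference  # shared key/value sequence
    allowed = [True] * len(target) + list(ref_mask)
    dim = len(target[0])
    scale = 1.0 / math.sqrt(dim)

    out: List[Vector] = []
    for q in target:
        logits = [dot(q, k) * scale if ok else -math.inf for k, ok in zip(keys, allowed)]
        m = max(logits)  # finite, because target tokens are always allowed
        weights = [math.exp(z - m) for z in logits]  # exp(-inf) == 0.0
        total = sum(weights)
        weights = [w / total for w in weights]
        # Output is the attention-weighted sum of the (identity) value vectors.
        out.append([sum(w * v[d] for w, v in zip(weights, keys)) for d in range(dim)])
    return out


if __name__ == "__main__":
    target = [[1.0, 0.0], [0.0, 1.0]]
    reference = [[5.0, 5.0], [-3.0, 2.0]]
    mask = [True, False]  # e.g. only the first reference token lies inside the chosen part
    print(shared_masked_attention(target, reference, mask))
```

Because masked-out reference tokens receive exactly zero weight, changing their values leaves the output unchanged. Masking every reference token reduces the layer to ordinary self-attention over the target tokens.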

We thank the authors of the following open-source projects:

- diffusers
- magic-animate
- Moore-AnimateAnyone
- DeepFashion-MultiModal
- Real-ESRGAN

If you find this repository useful, please consider citing:

@article{huang2024parts2whole,
  title={From Parts to Whole: A Unified Reference Framework for Controllable Human Image Generation},
  author={Huang, Zehuan and Fan, Hongxing and Wang, Lipeng and Sheng, Lu},
  journal={arXiv preprint arXiv:2404.15267},
  year={2024}
}