In this Code Pattern, we will use German Credit data to train, create, and deploy a machine learning model using Watson Machine Learning on Cloud Pak for Data (CP4D). We will create a data mart for this model with Watson OpenScale and configure OpenScale to monitor that deployment, then inject seven days' worth of historical records and measurements for viewing in the OpenScale Insights dashboard.

When the reader has completed this Code Pattern, they will understand how to:

- Create and deploy a machine learning model using the Watson Machine Learning service on IBM Cloud Pak for Data (ICP4D).

- Set up the Watson OpenScale Data Mart

- Bind Watson Machine Learning to the Watson OpenScale Data Mart

- Add subscriptions to the Data Mart

- Enable payload logging and the performance monitor for subscribed assets

- Enable Quality (Accuracy) monitor

- Enable Fairness monitor

- Score the German credit model using Watson Machine Learning

- Insert historic payloads, fairness metrics, and quality metrics into the Data Mart

- Use the Data Mart to access table data via subscription

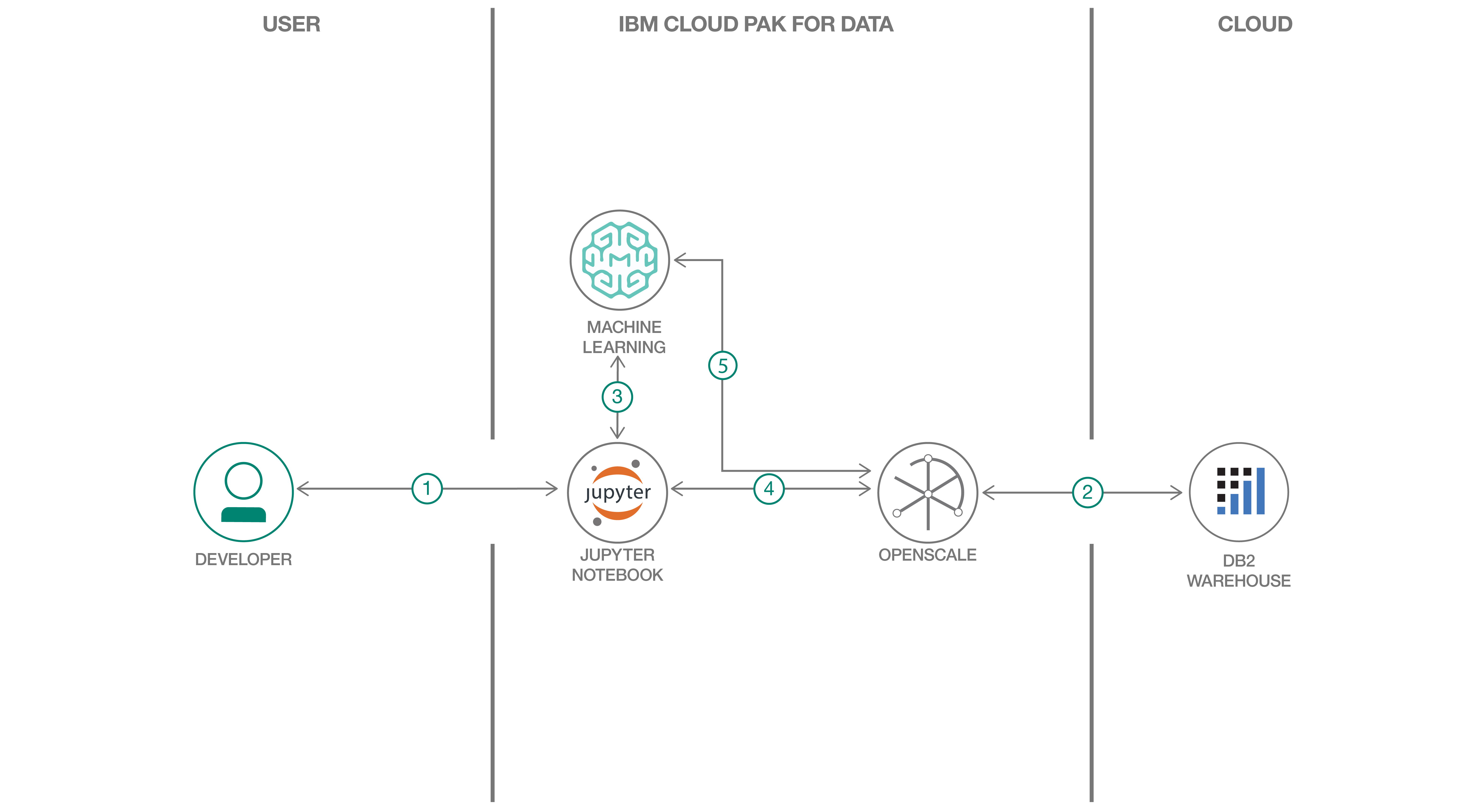

- Create a new project on ICP4D

- The developer creates a Jupyter Notebook within this project.

- OpenScale on ICP4D is connected to a DB2 database, which is used to store Watson OpenScale data.

- The notebook is connected to Watson Machine Learning and a model is trained and deployed.

- Watson OpenScale is used by the notebook to log payload and monitor performance, quality, and fairness.

- OpenScale will monitor the Watson Machine Learning model for performance, fairness, quality, and explainability.

- IBM Cloud Pak for Data

- Watson OpenScale Add-on installed for ICP4D

- Watson OpenScale configured for ICP4D

- Watson Machine Learning Add On for Cloud Pak for Data

- Create an IBM Cloud instance of DB2 Warehouse

- Clone the repository

- Create a new project and deployment space

- Configure OpenScale in a Jupyter Notebook

- Utilize the dashboard for OpenScale

In a terminal window, execute the following commands:

git clone https://github.com/IBM/monitor-ibm-cloud-pak-with-watson-openscale

cd monitor-ibm-cloud-pak-with-watson-openscale

In Cloud Pak for Data, we use the concept of a project to collect and organize the resources used to achieve a particular goal (resources to build a solution to a problem). Your project resources can include data, collaborators, and analytic assets like notebooks and models.

-

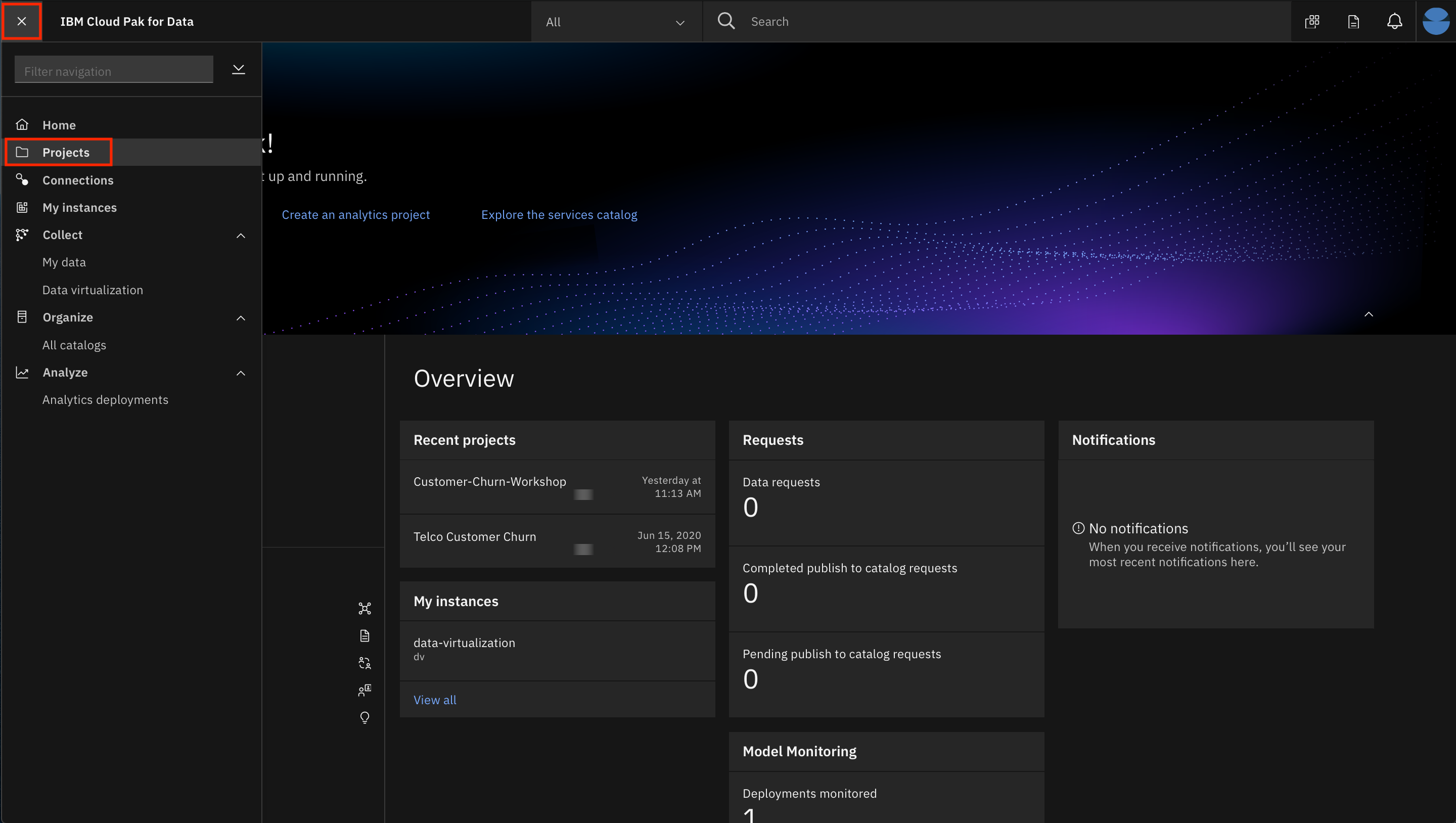

Launch a browser and navigate to your Cloud Pak for Data deployment.

-

Go the (☰) menu and click Projects:

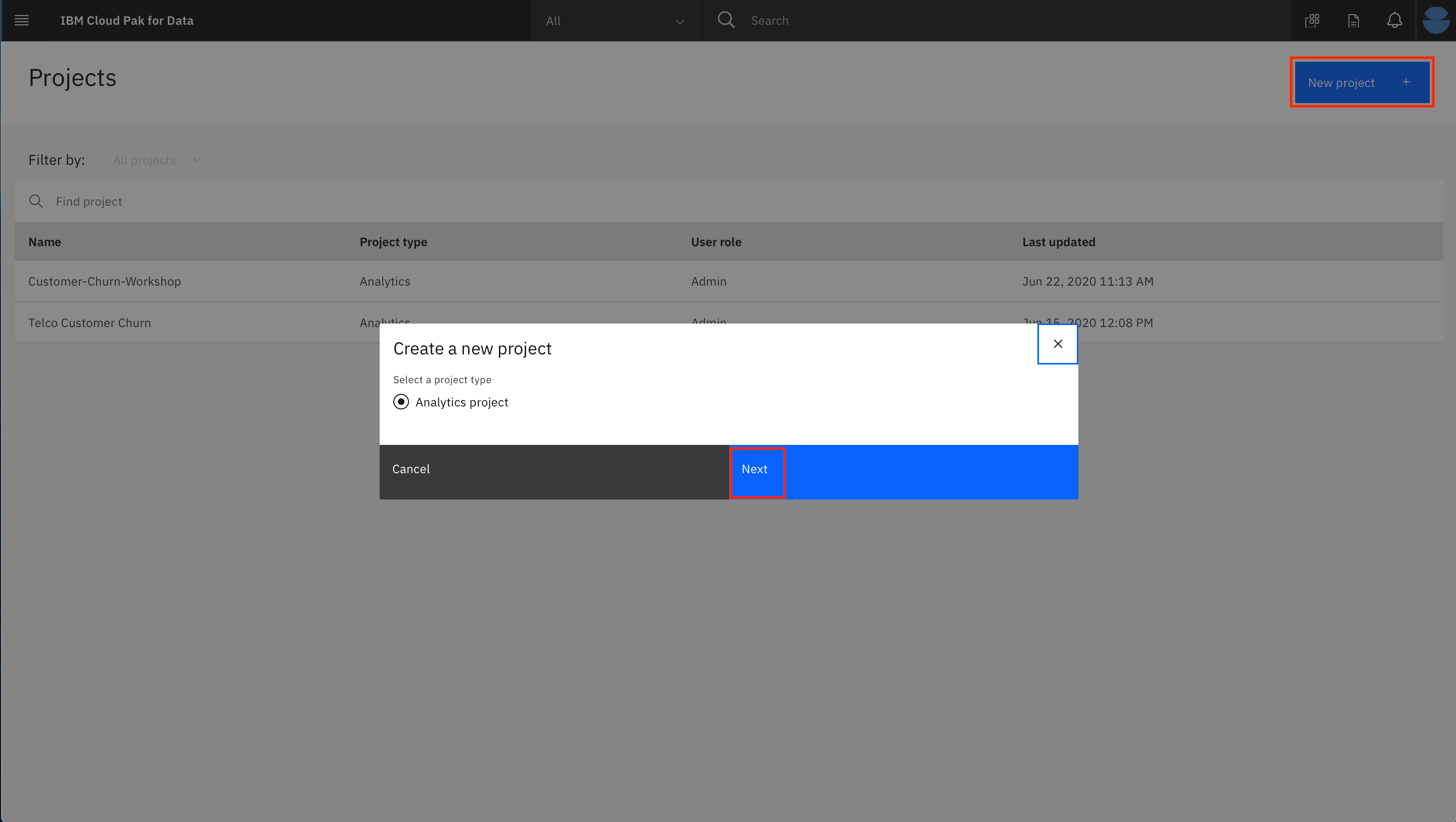

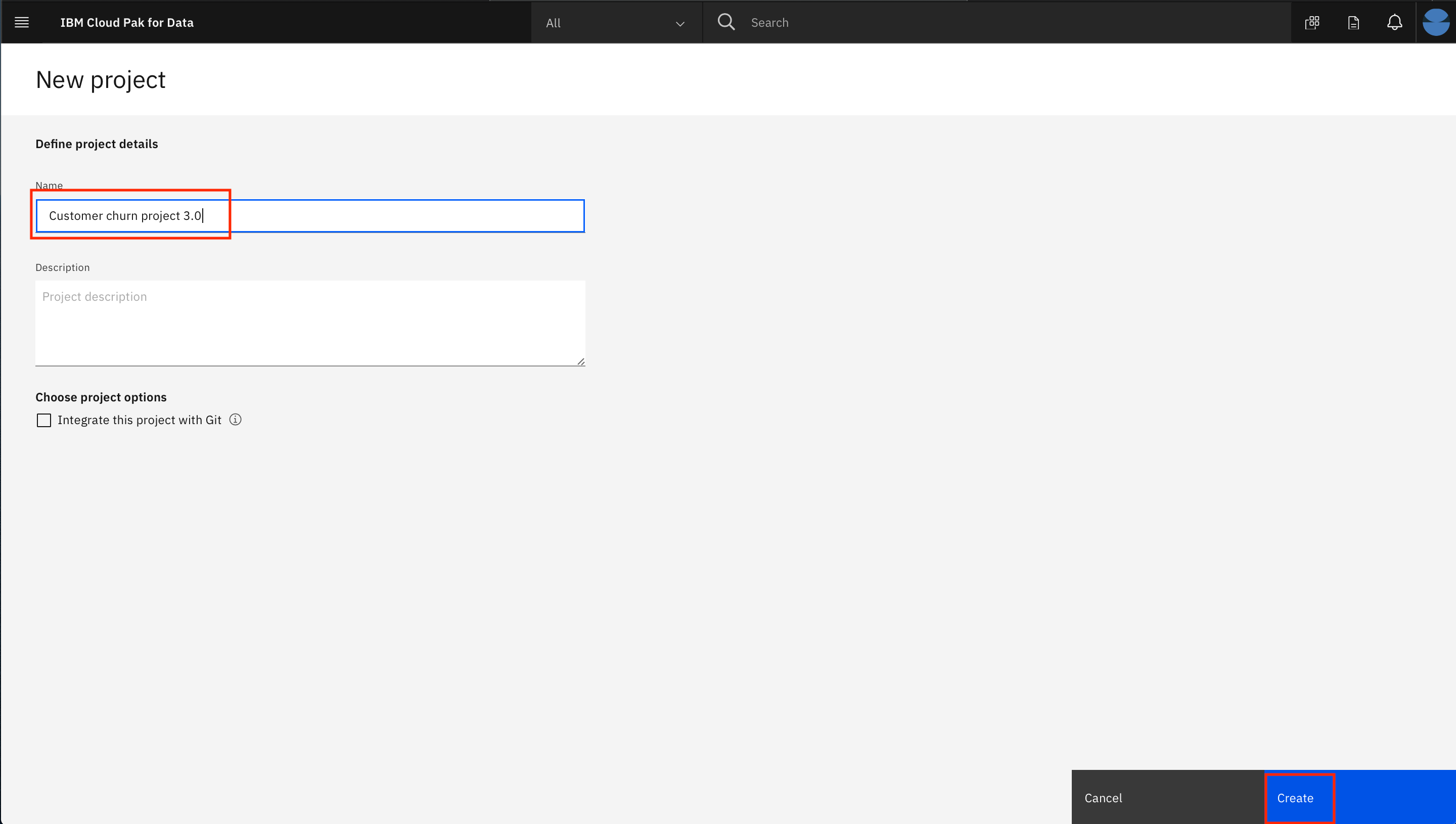

- Click on New project. In the dialog that pops up, select the project type as Analytics project and click Next:

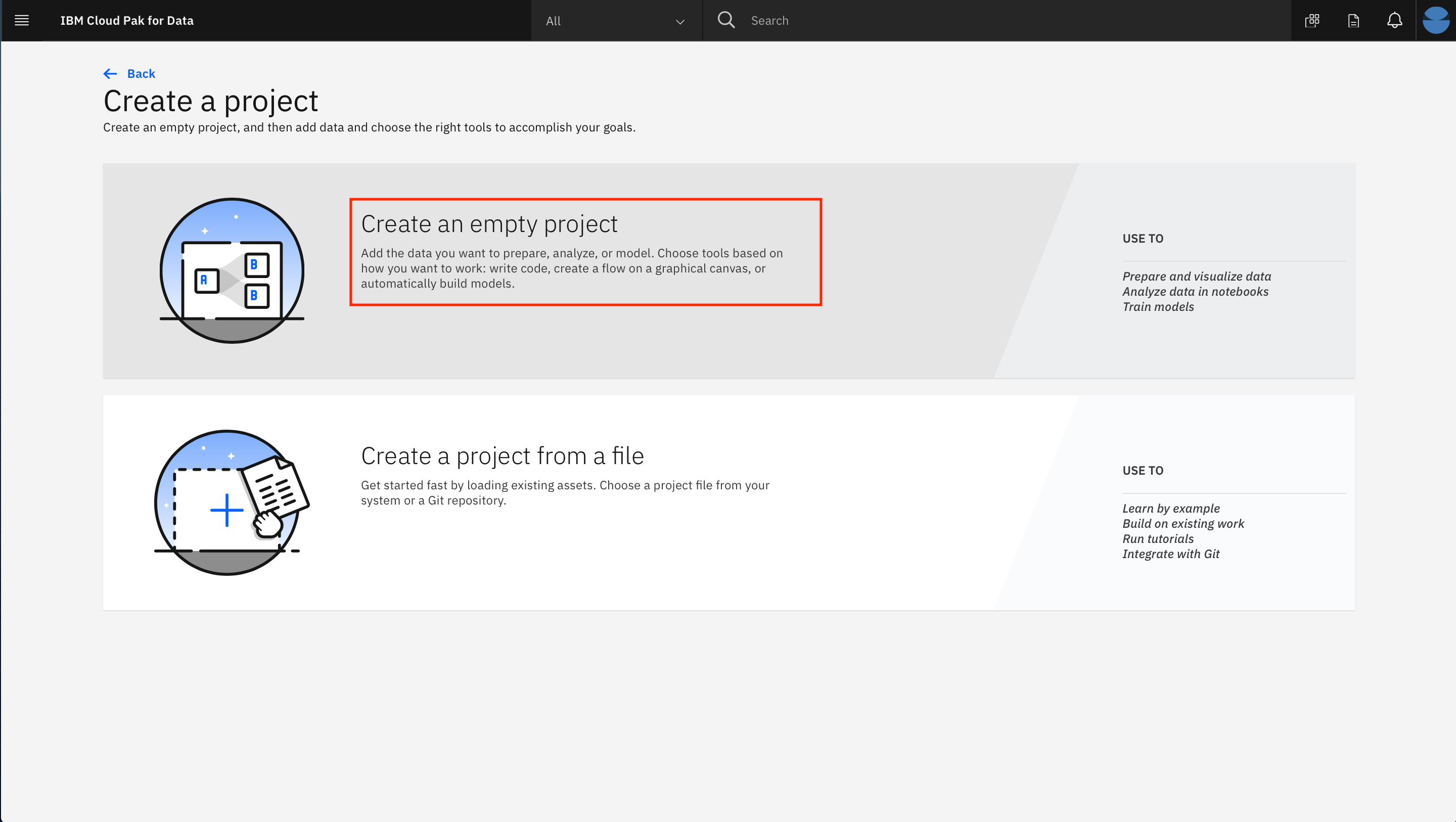

- Click on the top tile for Create an empty project:

- Give the project a unique name and an optional description, then click Create.

Cloud Pak for Data uses the concept of Deployment Spaces to configure and manage the deployment of a set of related deployable assets. These assets can be data files, machine learning models, etc.

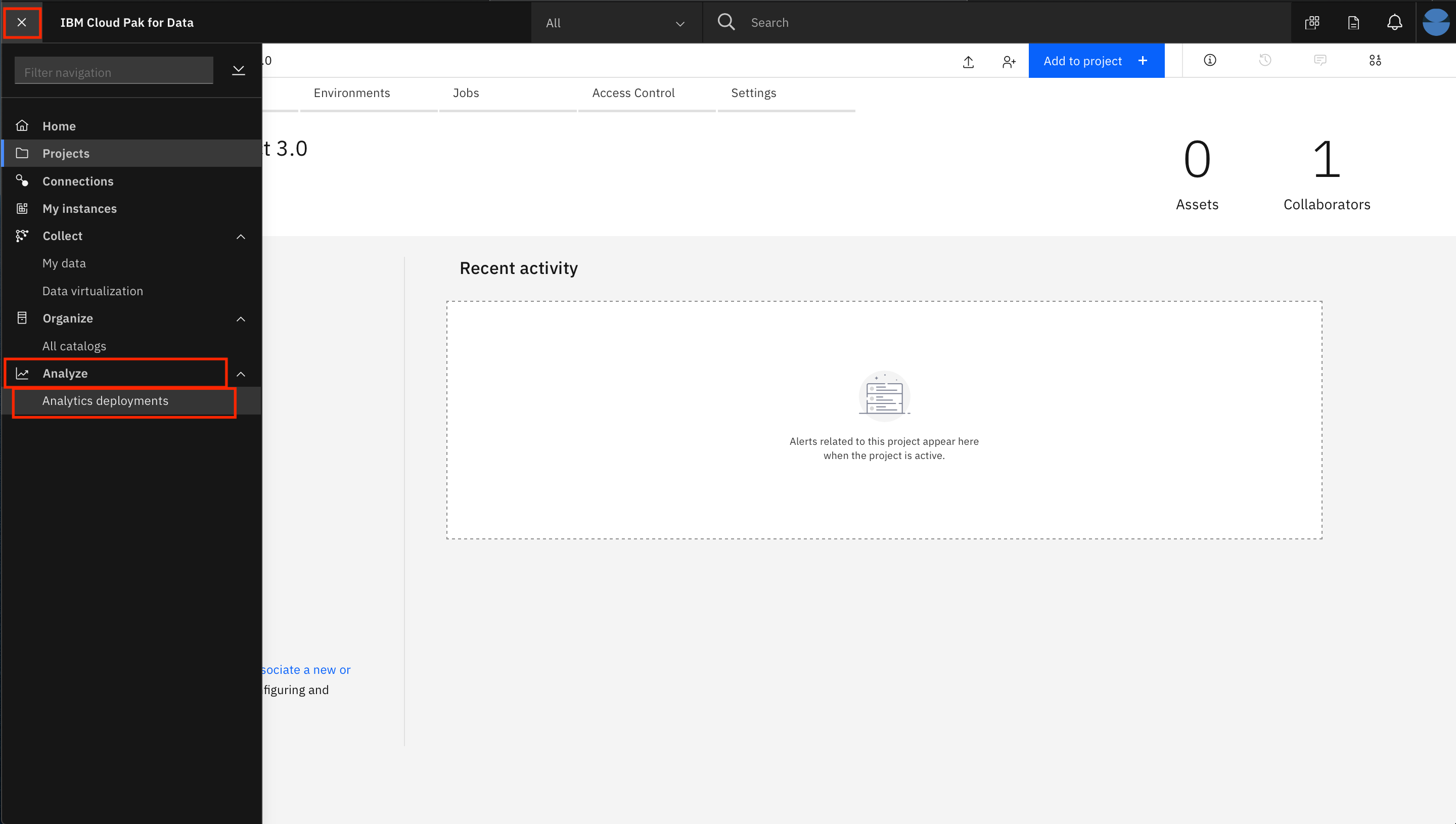

- Go to the (☰) menu and click Analyze -> Analytics deployments:

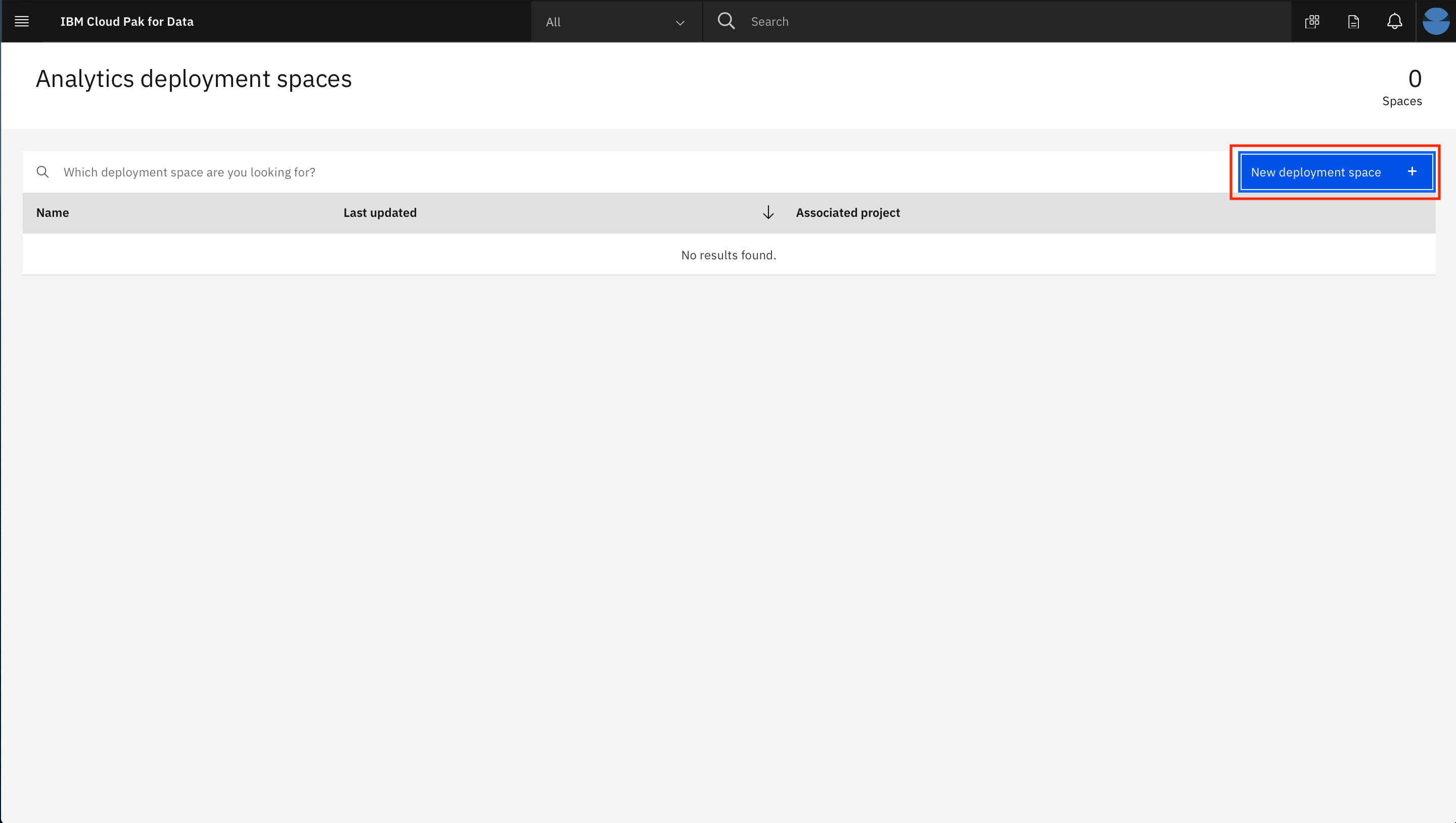

- Click on

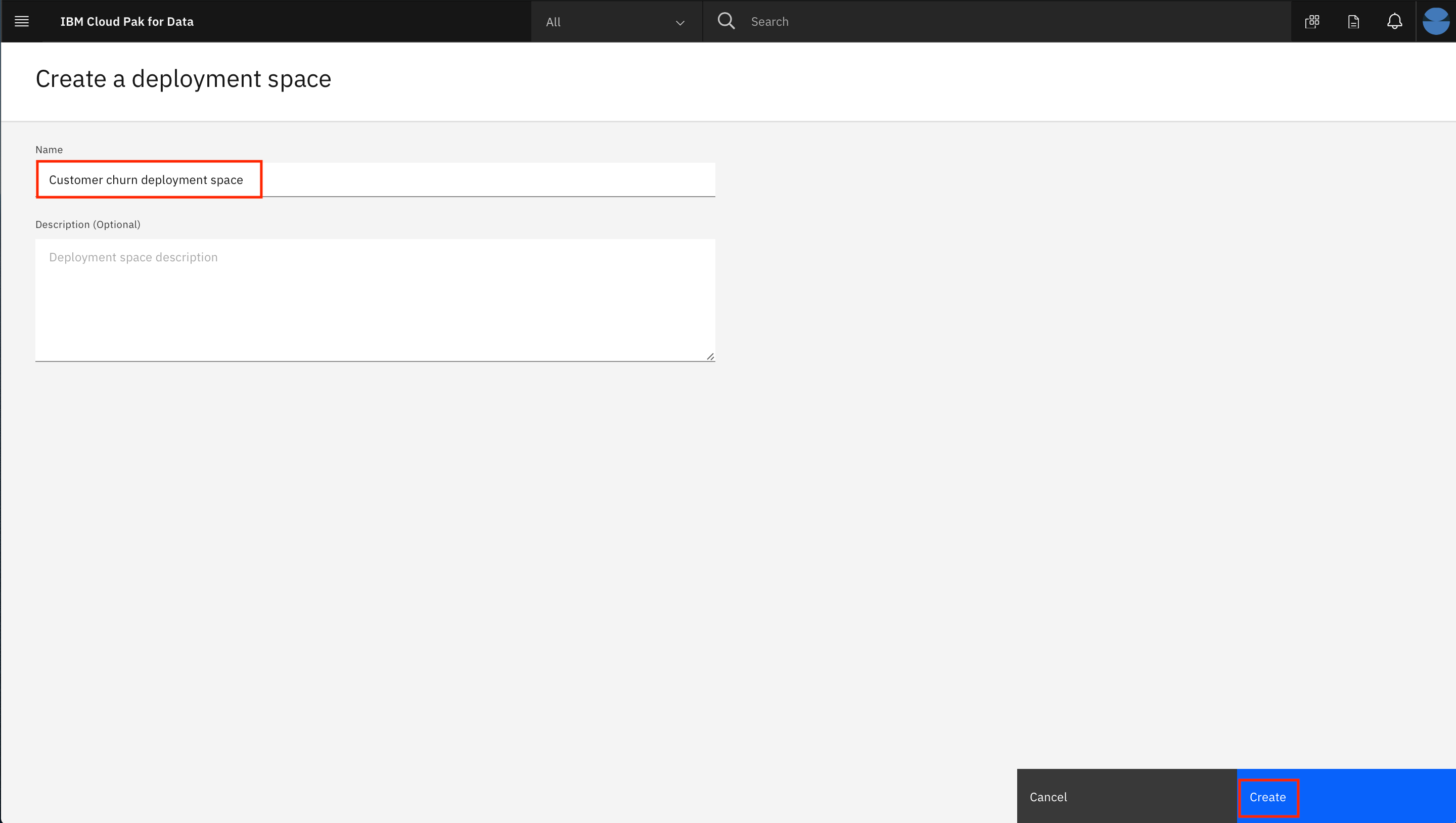

New deployment space +:

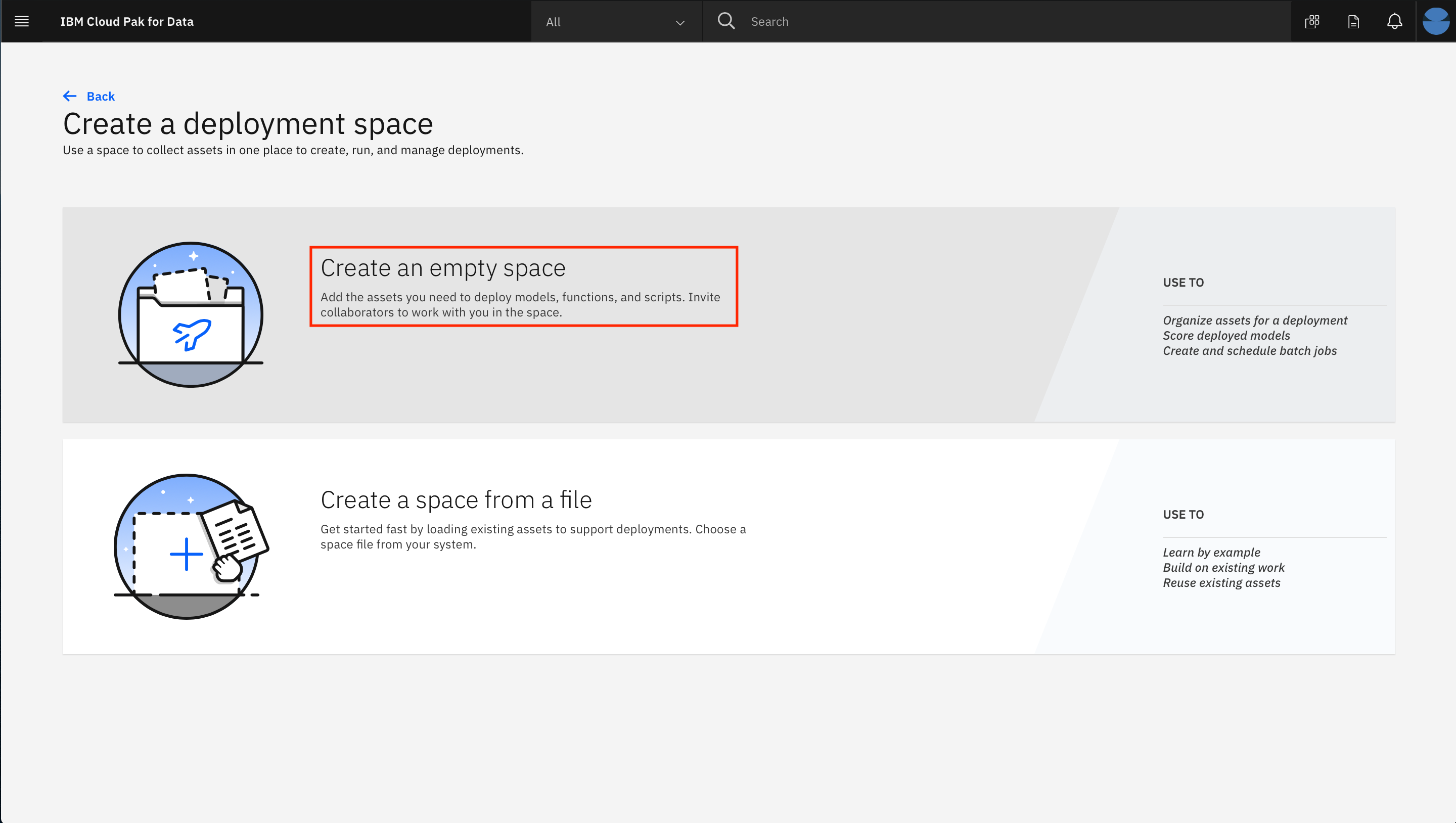

- Click on the top tile for 'Create an empty space':

- Give your deployment space a unique name and an optional description, then click Create.

You will use this space later when you deploy a machine learning model.

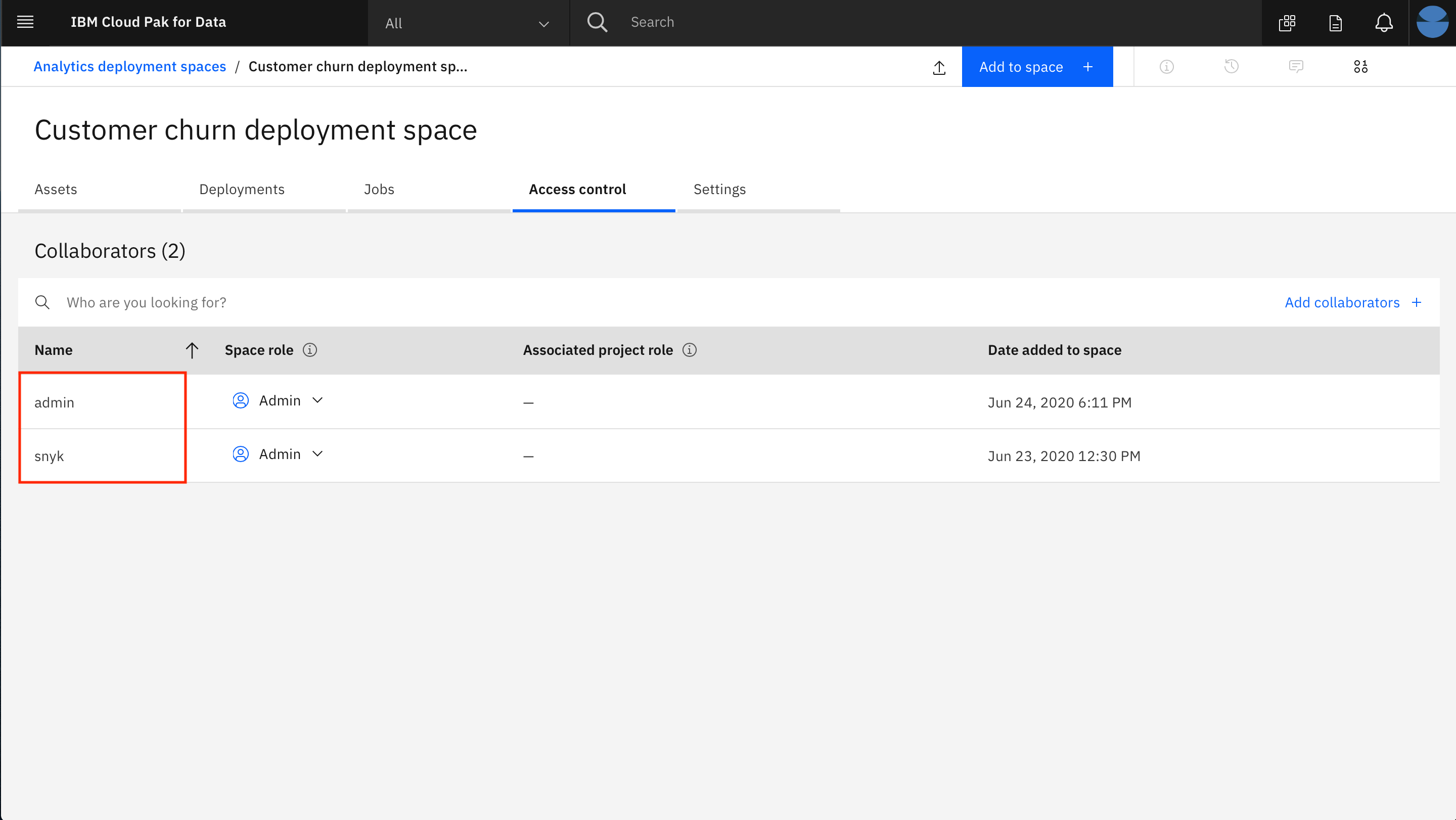

Next, we will add a collaborator to the new deployment space, so that assets we deploy can be monitored in step 4.

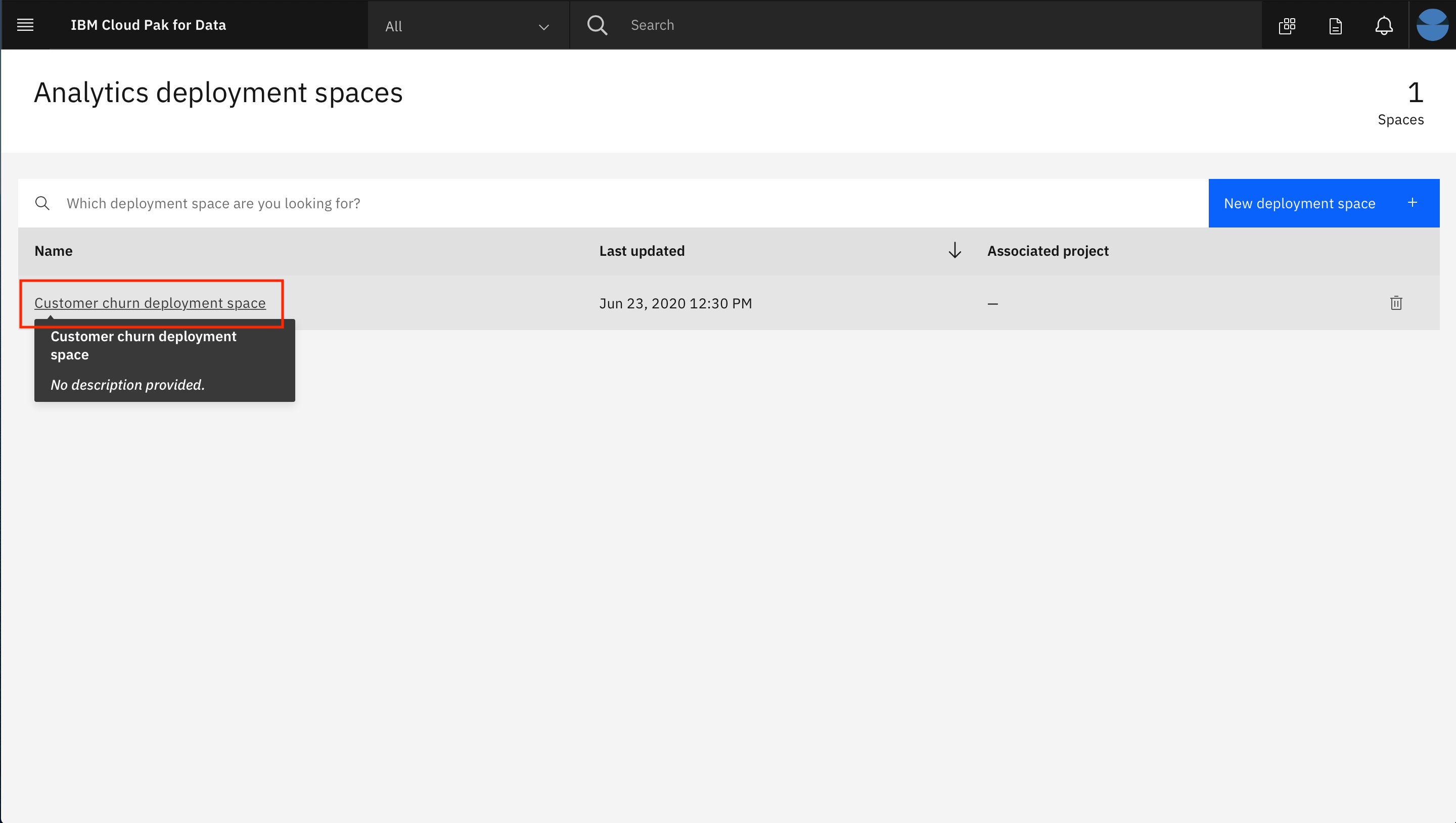

- Click on your new deployment space.

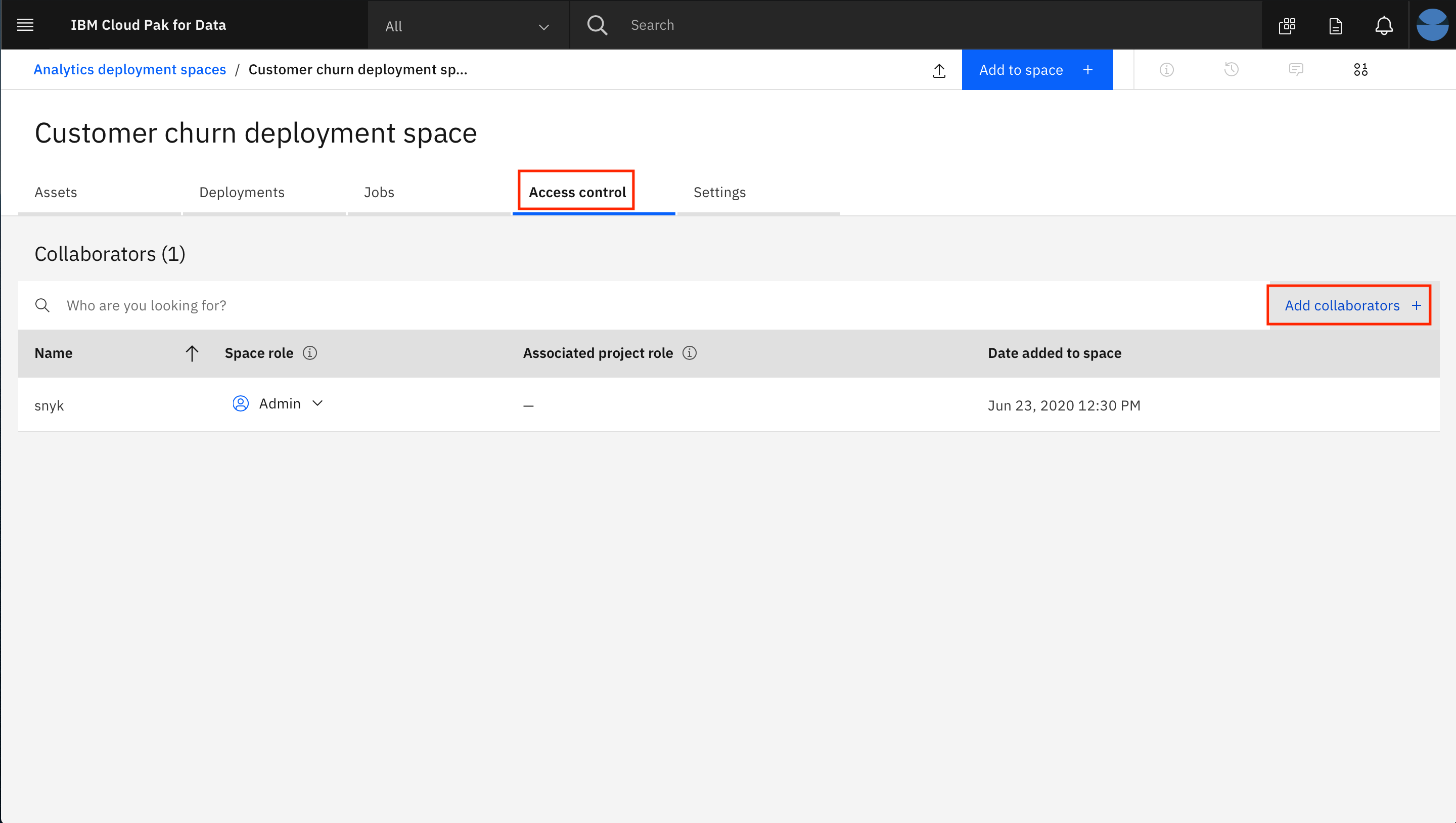

- Click on the

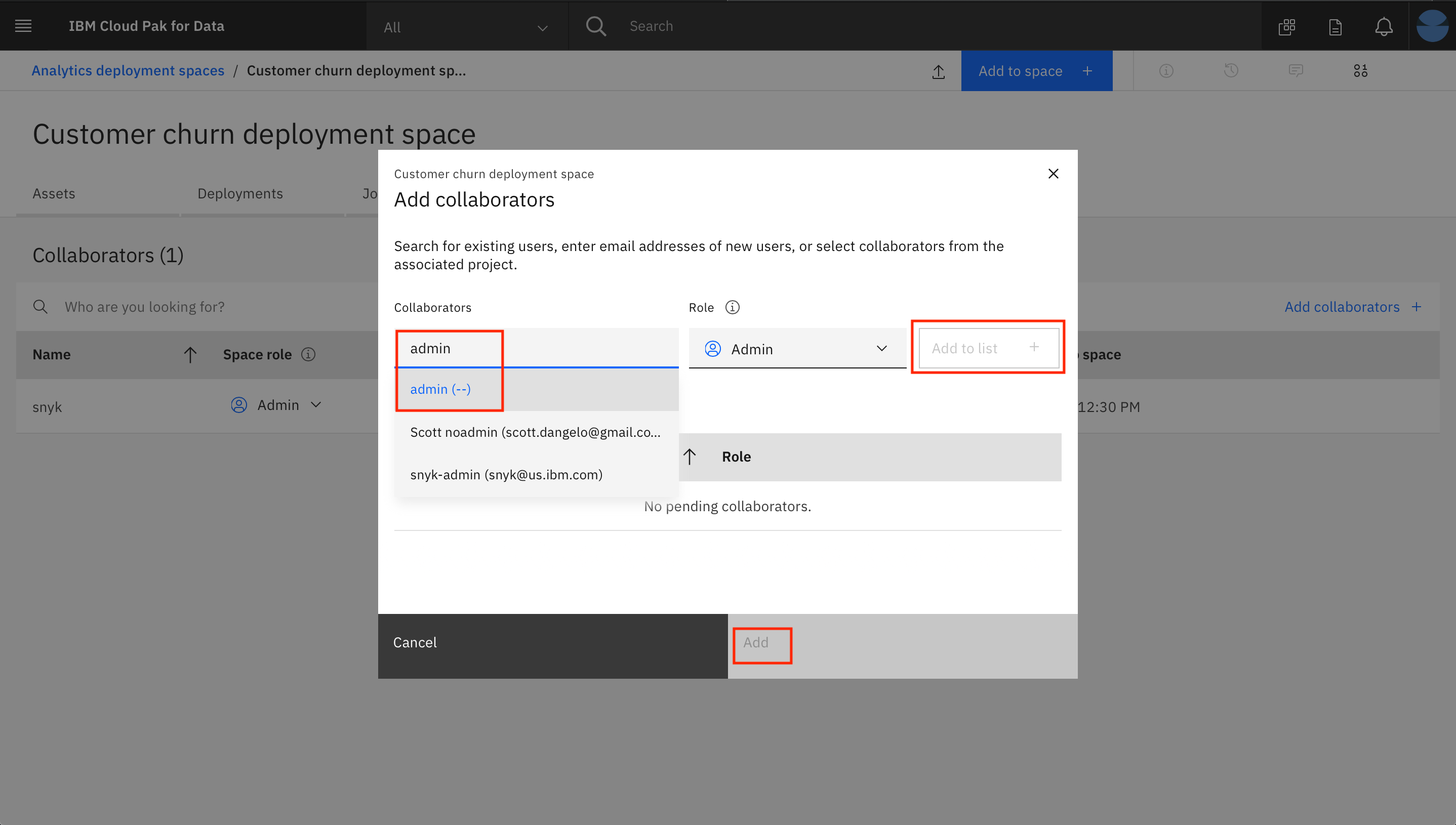

Access controltab and then click onAdd collaborators +on the right.

- Enter "admin" as a Collaborator and select the user from the drop-down list. Then click on the Add to list + button, and click the Add button to finish adding the collaborator.

NOTE: We are adding the user that configured the machine learning instance for OpenScale monitoring. In this case, the user is the admin user. If the user is someone other than "admin", then that user should be added as a collaborator here.

You should be brought back to the deployment space page and see your user ID along with the admin user as collaborators for this space.

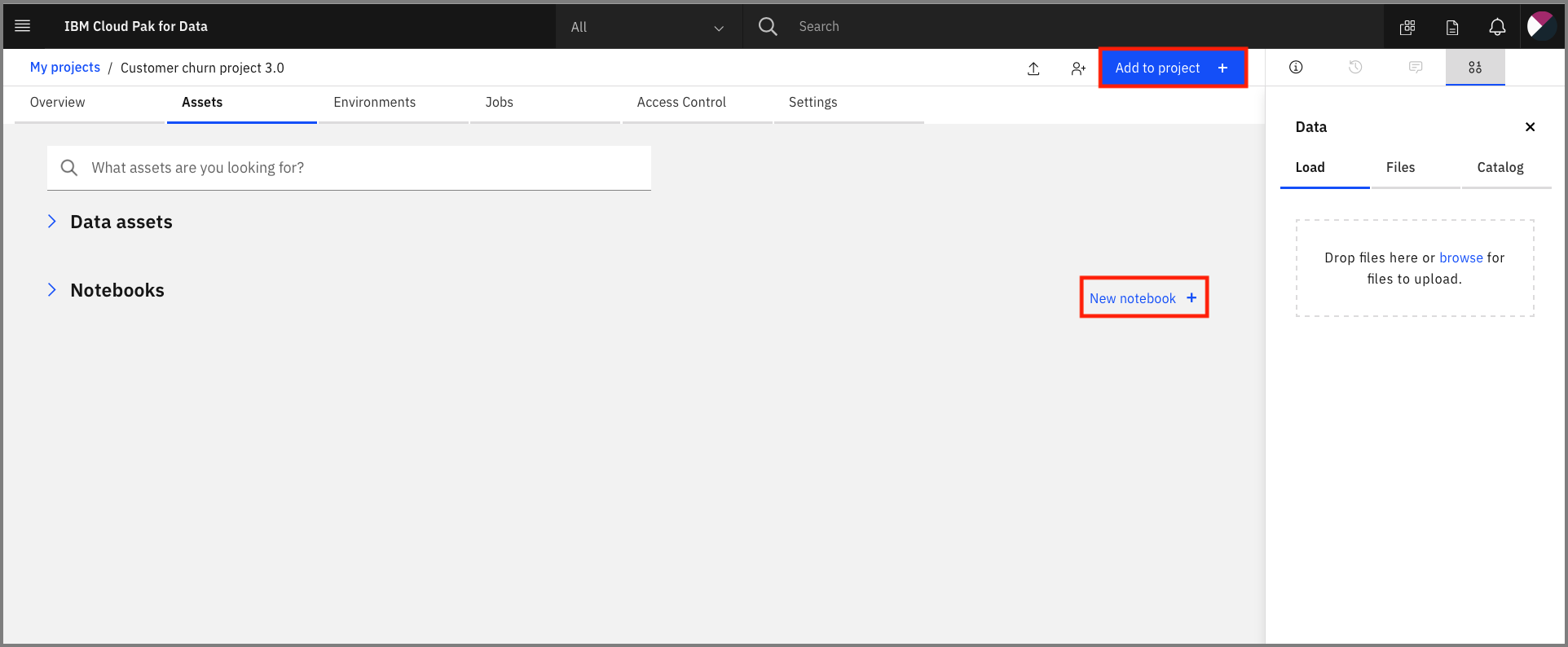

For this part of the pattern we're going to configure our Watson OpenScale service by running a Jupyter Notebook.

At the project overview click the Add to project + button, and choose Notebook or click the New notebook + option next to the Notebooks section.

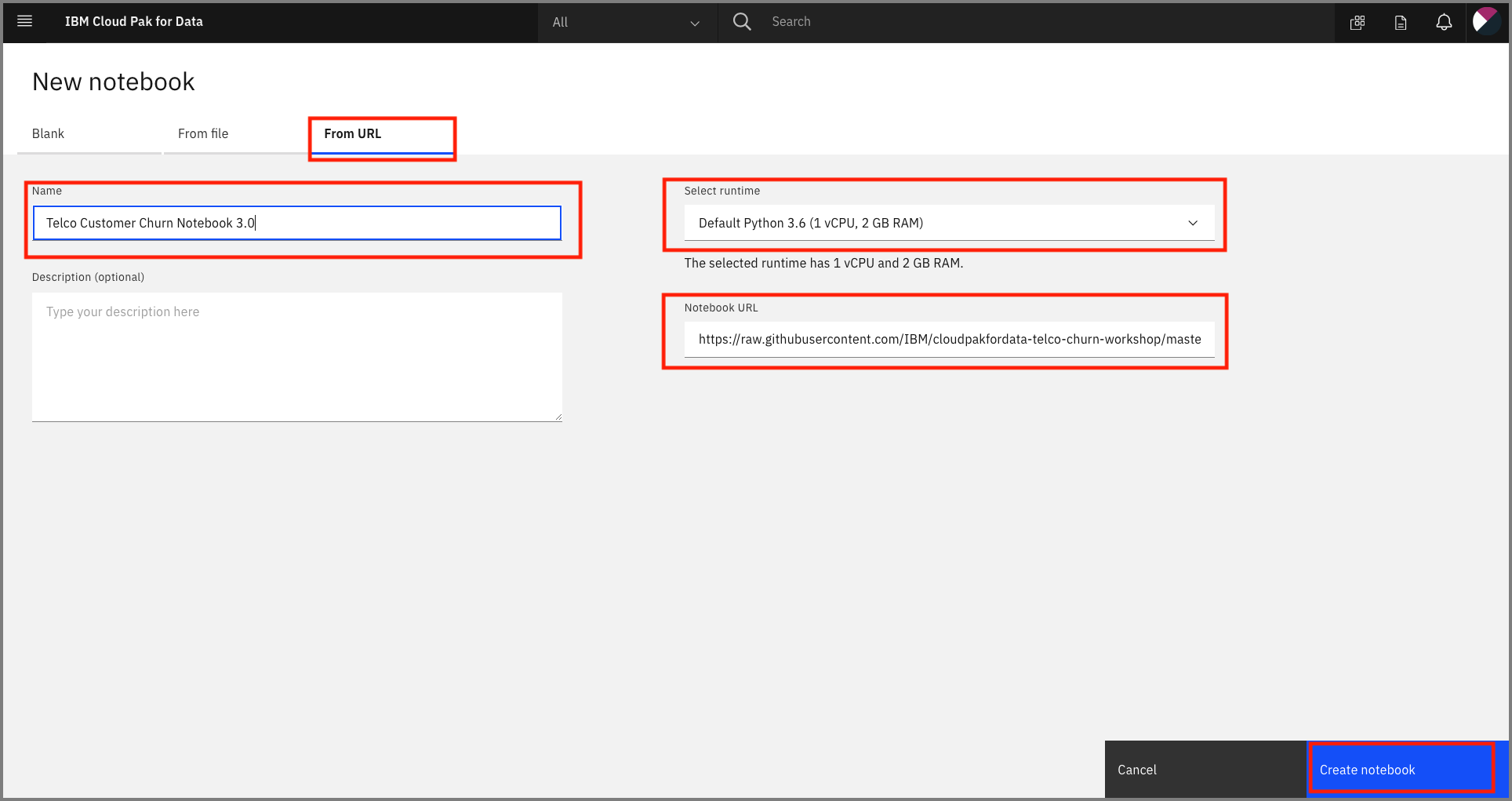

On the next panel, select the From URL tab, give your notebook a name, choose the Python 3.6 environment, and provide the following notebook URL:

https://raw.githubusercontent.com/IBM/monitor-ibm-cloud-pak-with-watson-openscale/master/notebooks/ConfigureOpenScale.ipynb

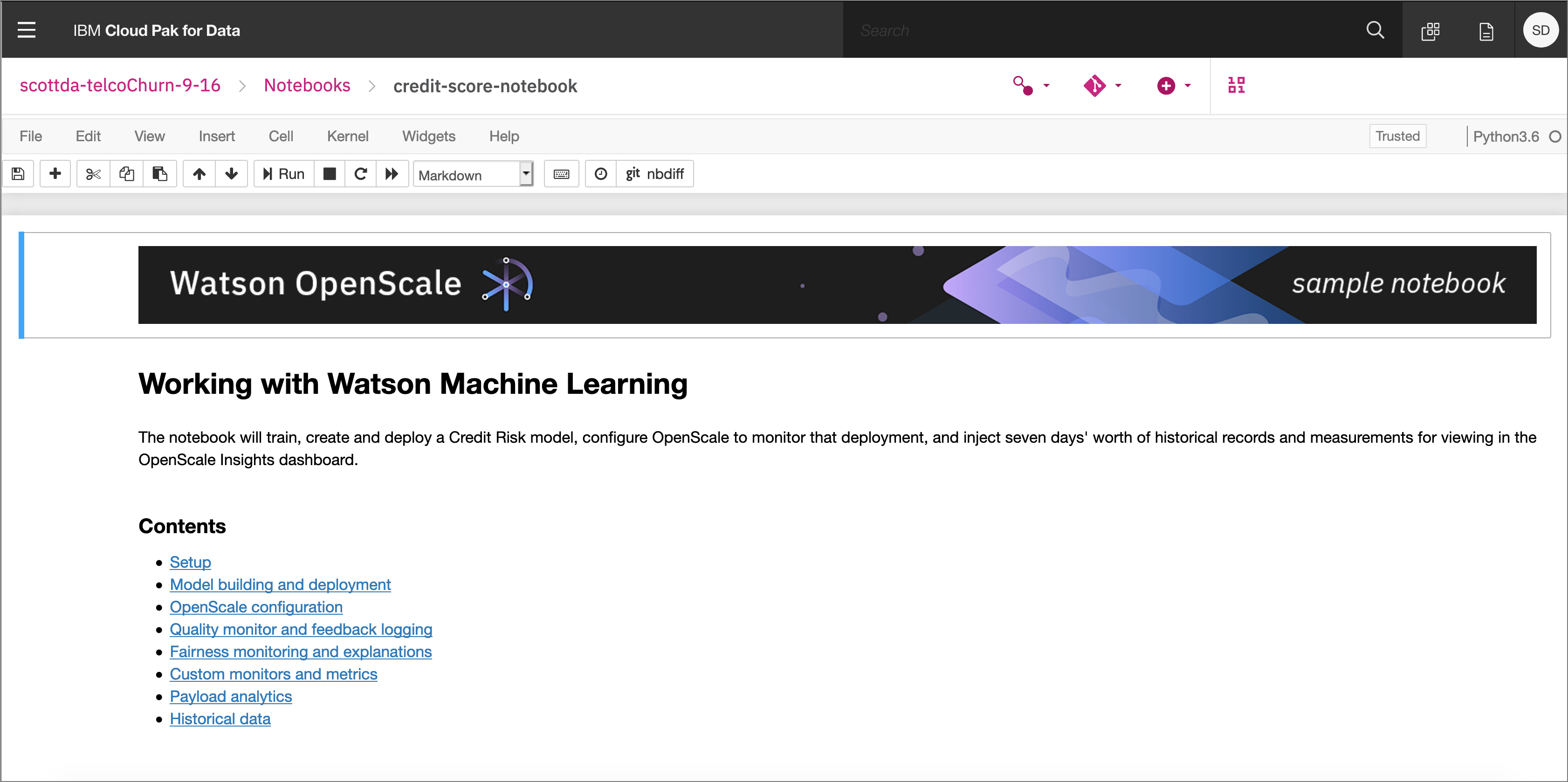

When the Jupyter notebook is loaded and the kernel is ready, we can start executing cells.

- In the notebook section 1.2 you will add your ICP platform credentials for the WOS_CREDENTIALS.
  - For the url field, change https://w.x.y.z to the address of your ICP cluster, for example: "url": "https://zen-cpd-zen.omid-cp4d-v5-2bef1f4b4097001da9502000c44fc2b2-0001.us-south.containers.appdomain.cloud"
  - For the username, use your login username.
  - For the password, use your login password.
- For the DATABASE_CREDENTIALS and SCHEMA_NAME values, follow the instructions from the prerequisites to Create an IBM Cloud instance of DB2 Warehouse.
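As a rough sketch of what the edited cell might look like, the snippet below fills in a `WOS_CREDENTIALS` dictionary and checks that no placeholder was left behind. The `url`, `username`, and `password` keys come from the steps above; the `check_credentials` helper, the example hostname, and the example login values are assumptions made for illustration, and the notebook's actual cell may differ.

```python
from urllib.parse import urlparse

# Placeholder URL that ships in the notebook, per the step above.
PLACEHOLDER_URL = "https://w.x.y.z"


def check_credentials(creds):
    """Return a list of problems found in a WOS_CREDENTIALS-style dict.

    Hypothetical helper: it is not part of the notebook or any IBM library.
    """
    problems = []
    for key in ("url", "username", "password"):
        if not creds.get(key):
            problems.append(f"missing value for '{key}'")
    url = creds.get("url", "")
    if url:
        if url.rstrip("/") == PLACEHOLDER_URL:
            problems.append("url still contains the w.x.y.z placeholder")
        parsed = urlparse(url)
        if parsed.scheme != "https" or not parsed.netloc:
            problems.append("url should look like https://<cluster-host>")
    return problems


# Example values only; replace them with your own cluster address and login.
WOS_CREDENTIALS = {
    "url": "https://my-cpd-cluster.example.com",
    "username": "my-user",
    "password": "my-password",
}

# An empty list means no obvious problems were found.
print(check_credentials(WOS_CREDENTIALS))
```

Running a check like this before the rest of the notebook can catch a forgotten placeholder early, before any call is made to the cluster.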

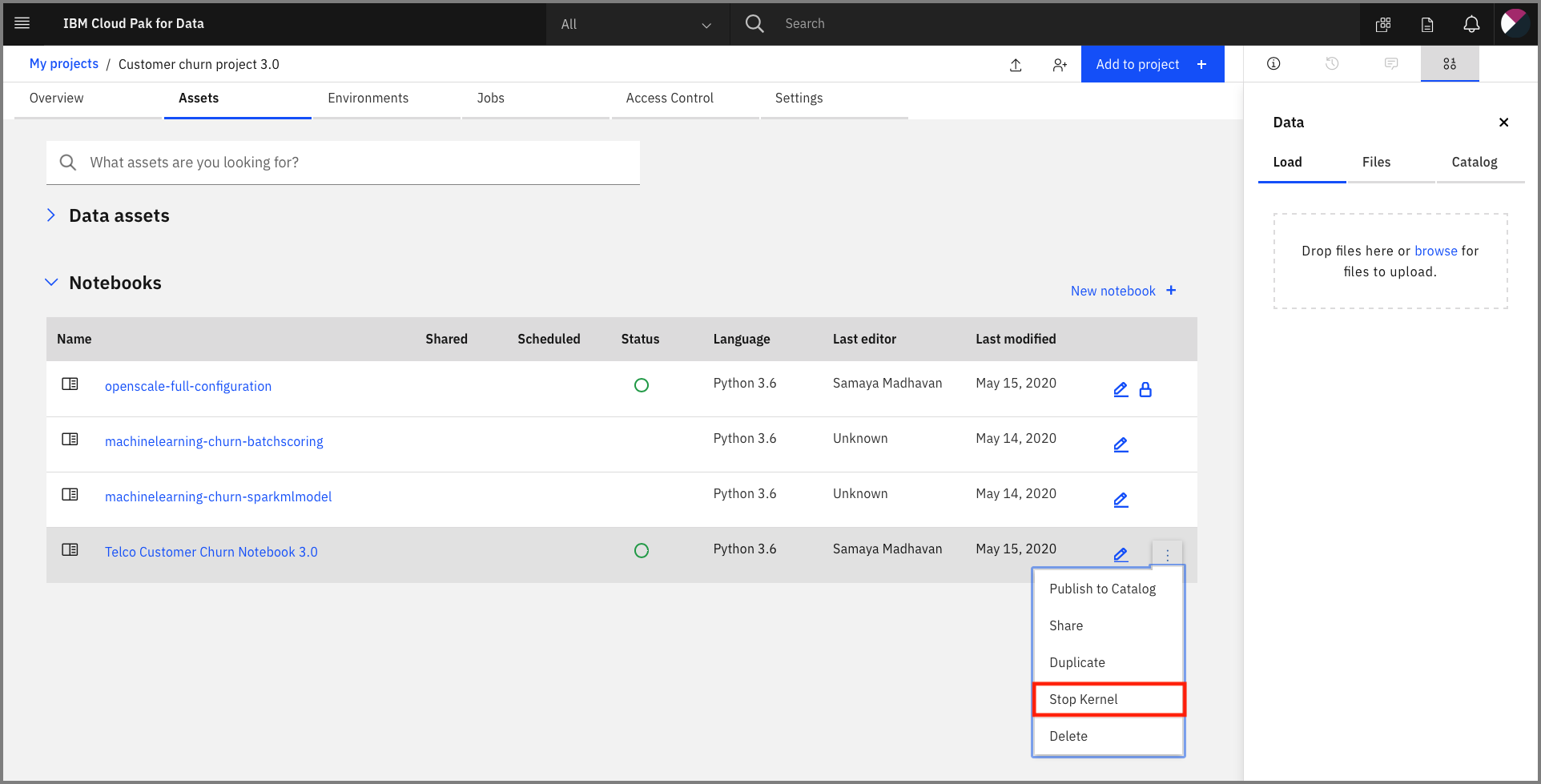

Important: Make sure that you stop the kernel of your notebook(s) when you are done, in order to prevent leaking of memory resources!

Spend a minute looking through the sections of the notebook to get an overview. You will run cells individually by highlighting each cell, then clicking the Run button at the top of the notebook. While the cell is running, an asterisk ([*]) will show up to the left of the cell. When that cell has finished executing, a sequential number will show up (for example, [17]).

Run the cell under 8.9 Identify transactions for Explainability. It will produce a series of UIDs for individual ML scoring transactions. Copy one or more of them to examine in the next section.

Now that you have created a machine learning model and configured OpenScale, you can utilize the OpenScale dashboard to gather insights.

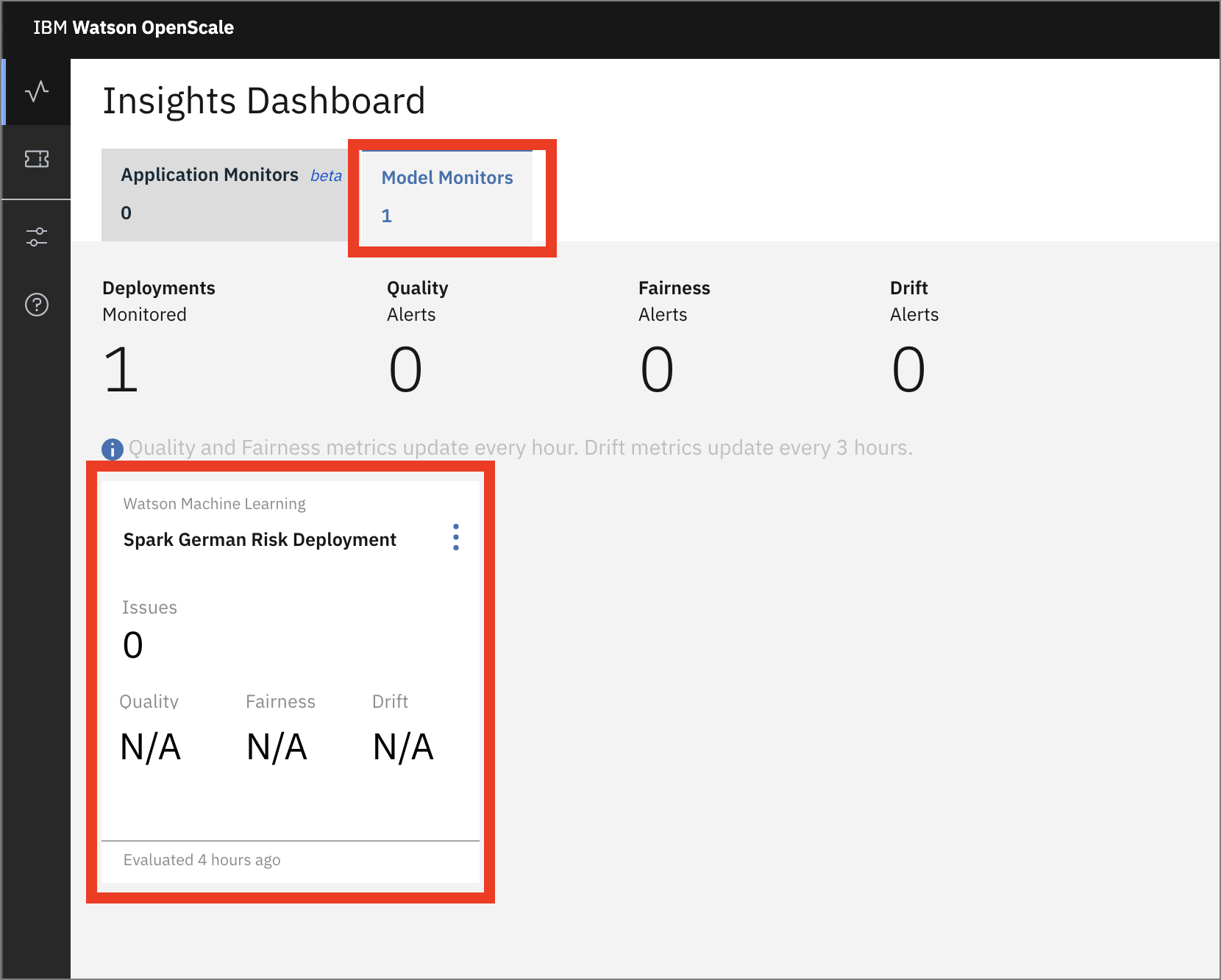

The Insights Dashboard provides an overview of the models that OpenScale is monitoring.

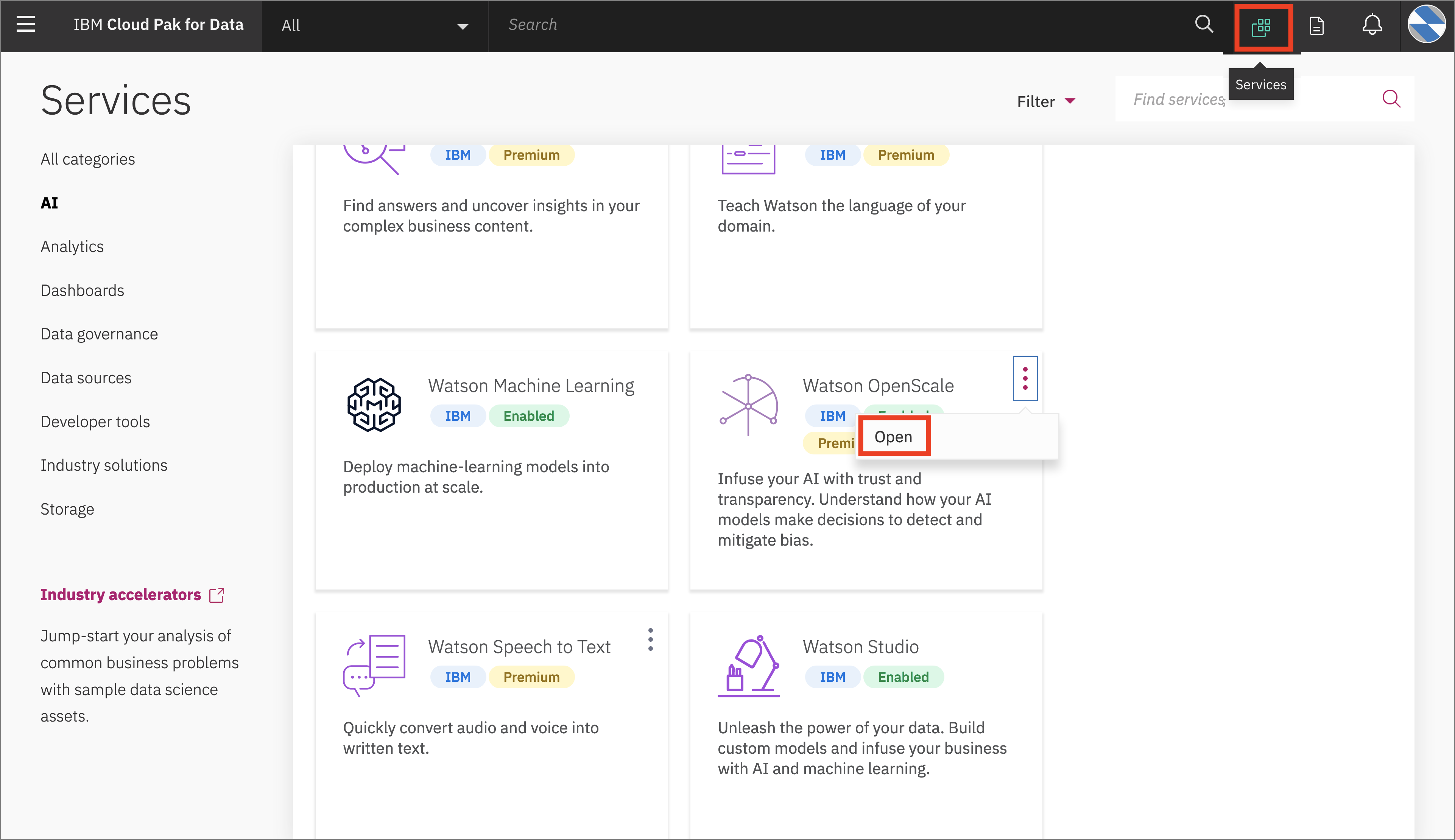

- Open the Services tab by clicking the icon in the upper right. Go to the OpenScale tile under the AI category and click Open:

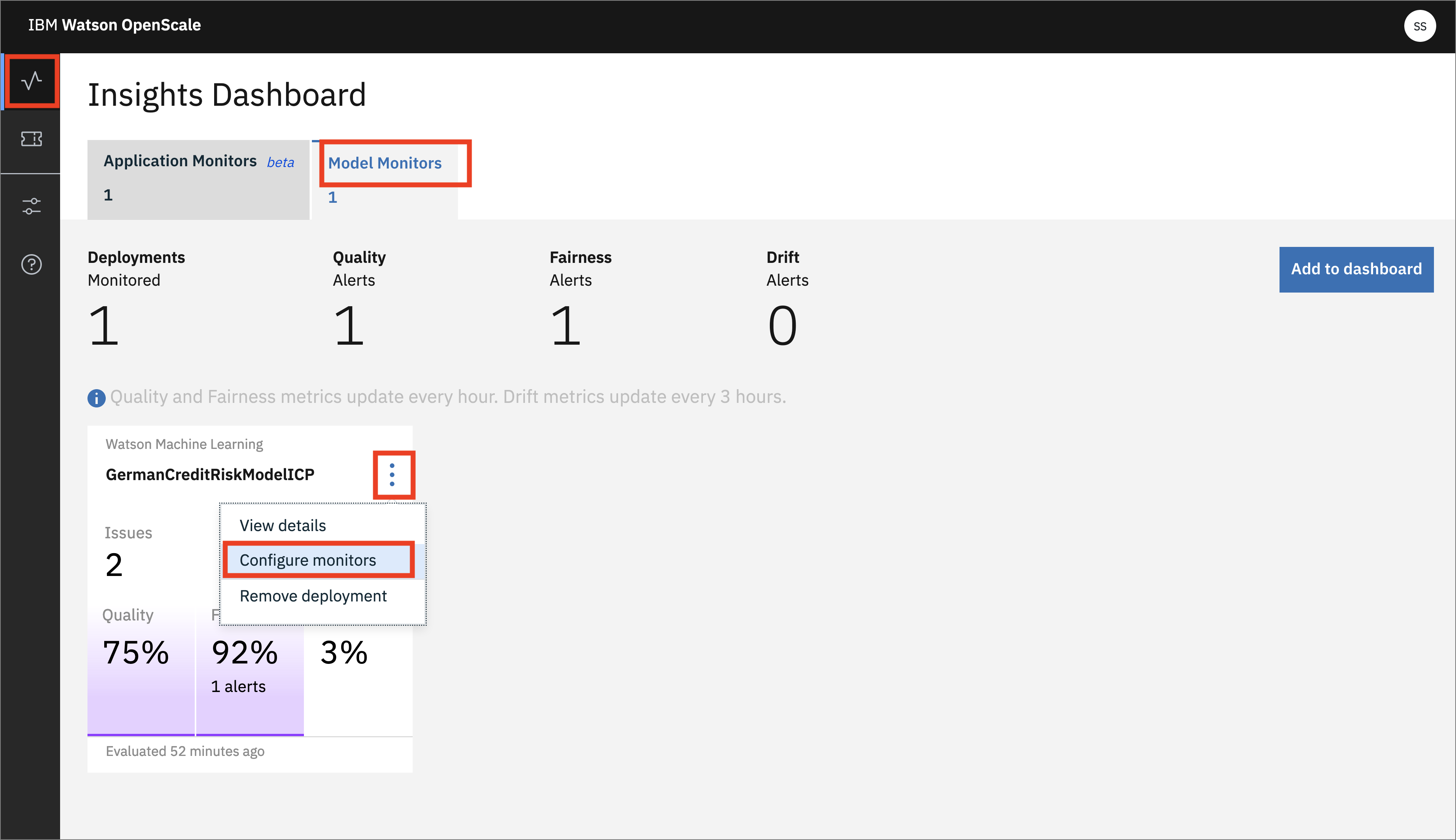

- When the dashboard loads, click on the 'Model Monitors' tab and you will see the one deployment you configured in the previous section.

Do not worry if the name you see does not exactly match the screenshot. The deployment name you see will correspond to the variable used in the Jupyter notebook.

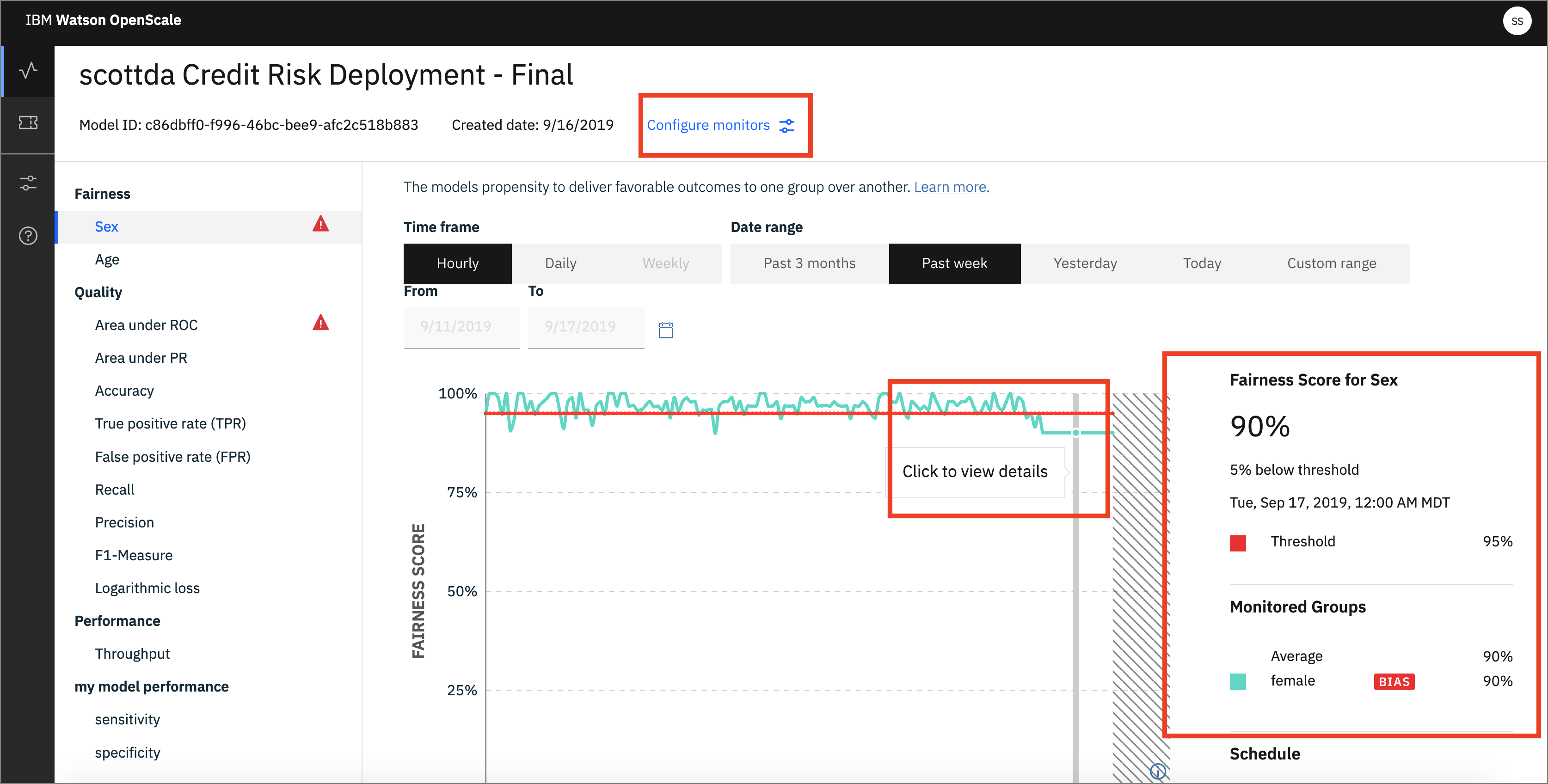

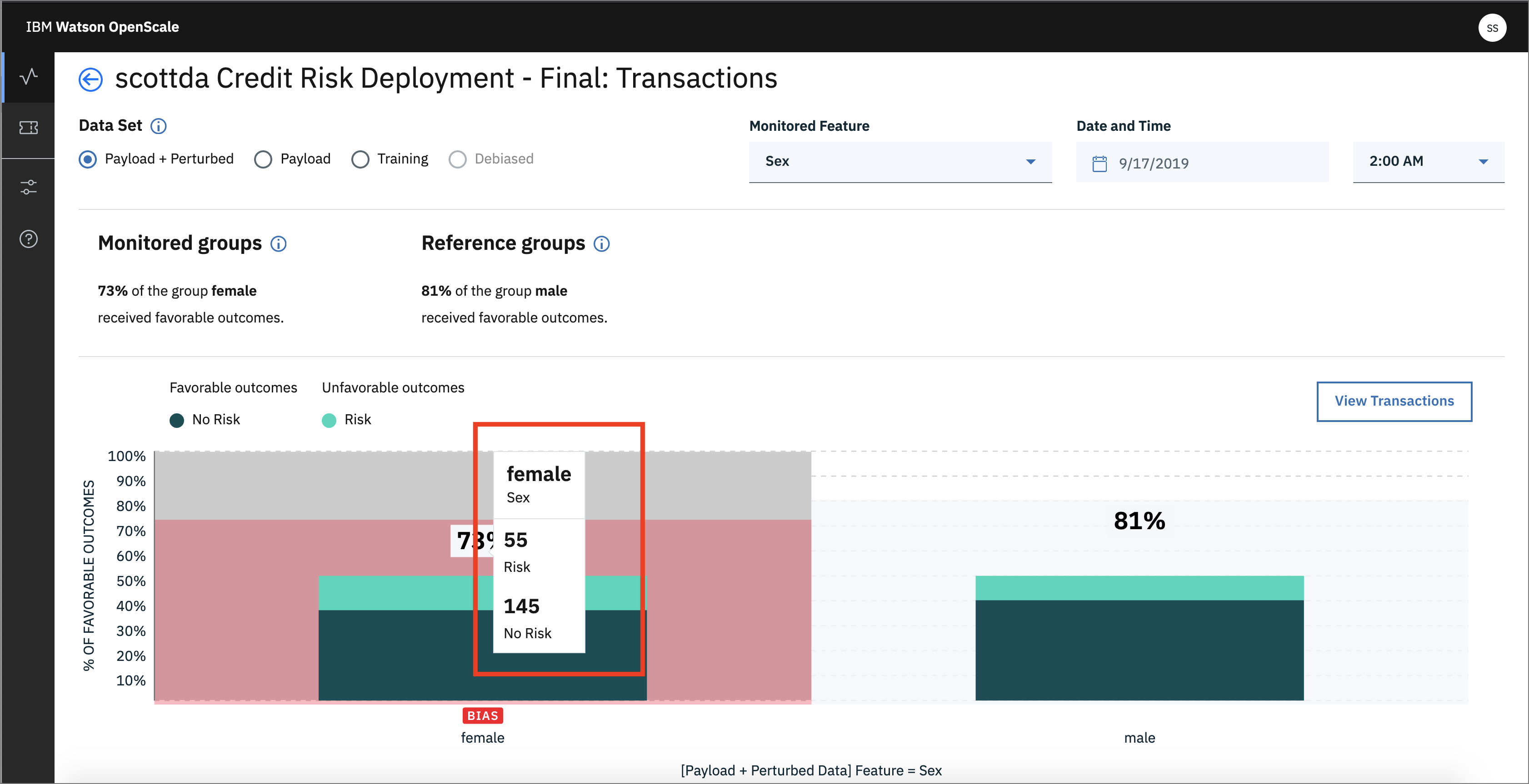

You will see the triangle with ! under Fairness -> Sex. This indicates that there has been an alert for the Fairness monitor. Alerts are configurable, based on thresholds for fairness outcomes which can be set and altered as desired.
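To make the idea of a threshold-based fairness alert concrete, here is a minimal sketch. It assumes fairness is scored as the ratio of favorable-outcome rates between a monitored group and a reference group, and that an alert fires when the ratio falls below a threshold. The function names, the sample counts, and the 0.95 threshold are illustrative assumptions, not values taken from OpenScale or the notebook.

```python
def favorable_rate(favorable, total):
    """Fraction of transactions in a group that received the favorable outcome."""
    if total <= 0:
        raise ValueError("total must be positive")
    return favorable / total


def fairness_ratio(monitored, reference):
    """Ratio of favorable-outcome rates: monitored group over reference group.

    Each argument is a (favorable_count, total_count) tuple.
    """
    reference_rate = favorable_rate(*reference)
    if reference_rate == 0:
        raise ValueError("reference group has no favorable outcomes")
    return favorable_rate(*monitored) / reference_rate


def fairness_alert(monitored, reference, threshold=0.95):
    """True when the ratio drops below the (assumed) alert threshold."""
    return fairness_ratio(monitored, reference) < threshold


# Made-up counts: 60 of 100 favorable for the monitored group,
# 80 of 100 favorable for the reference group.
ratio = fairness_ratio((60, 100), (80, 100))
print(round(ratio, 2), fairness_alert((60, 100), (80, 100)))
```

Raising or lowering the threshold in a sketch like this changes how easily an alert is triggered, which mirrors the configurable thresholds described above.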

- By moving your mouse pointer over the graph, you can see how the values change and which points contain bias. Click one spot to view the details. Later, we'll click Configure Monitors to get a Fairness endpoint:

- Once you open the details page, you can see more information. Note that you can choose the radio buttons for your choice of data (Payload + Perturbed, Payload, Training, Debiased):

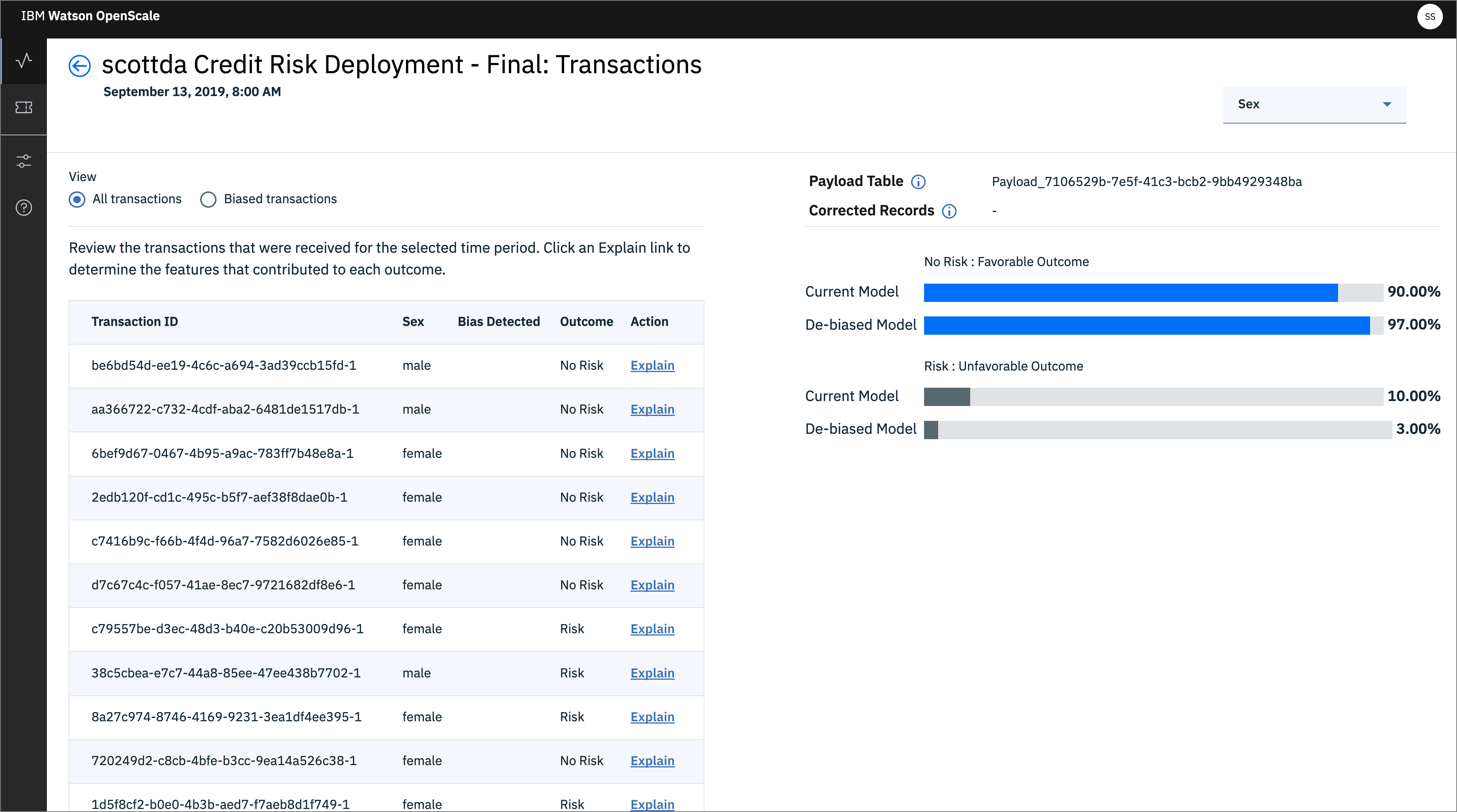

- Click on View Transactions to drill deeper. Here you have radio buttons for All transactions and Biased transactions. Each of the individual transactions can be examined to see them in detail. Doing so will cache that transaction, as we will see later.

- Now, go back to the Insights Dashboard page by clicking on the left-hand menu icon for Insights, make sure that you are on the Model monitors tab, and click the 3-dot menu on the tile and then Configure monitors:

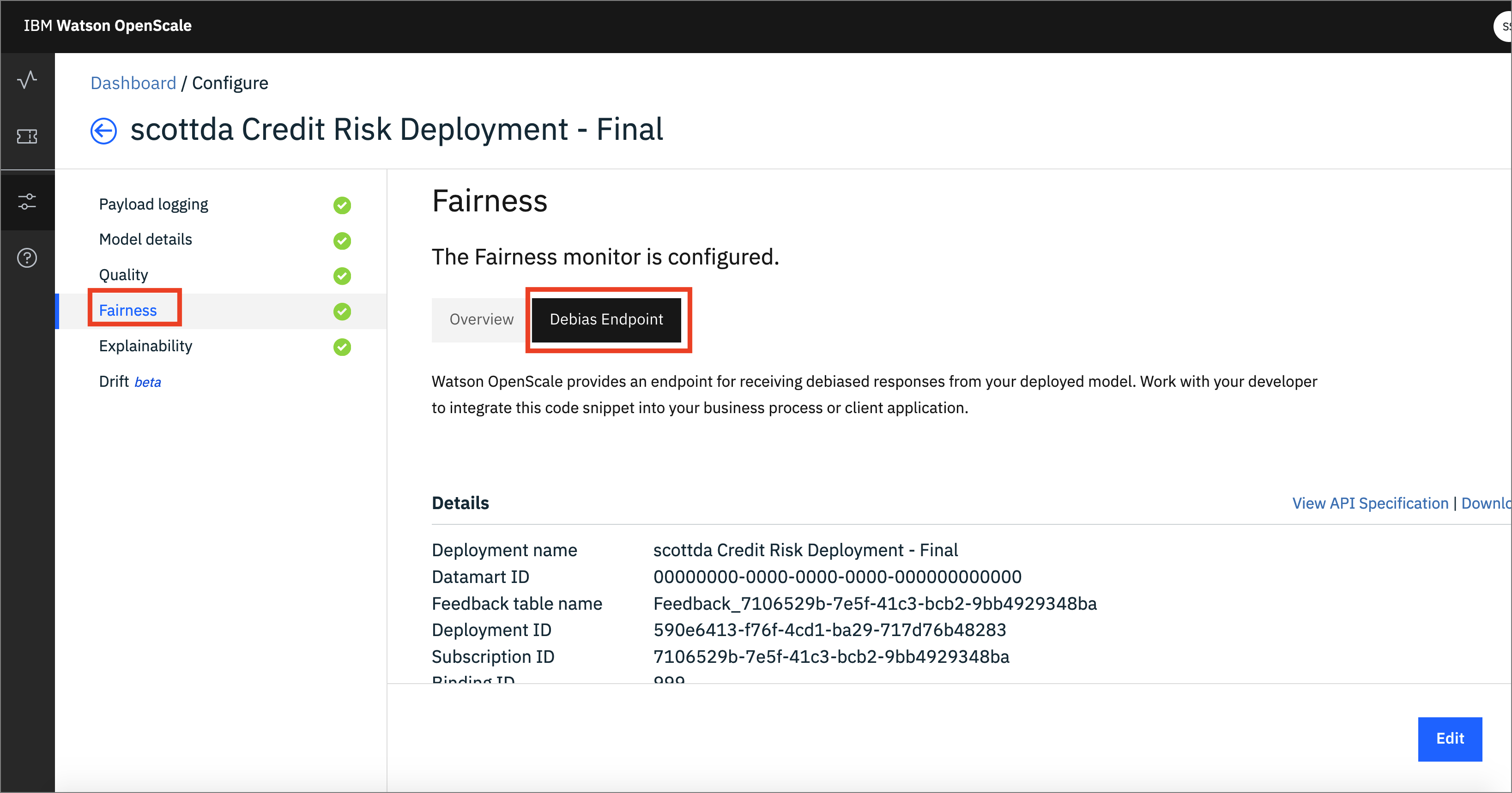

- Click the

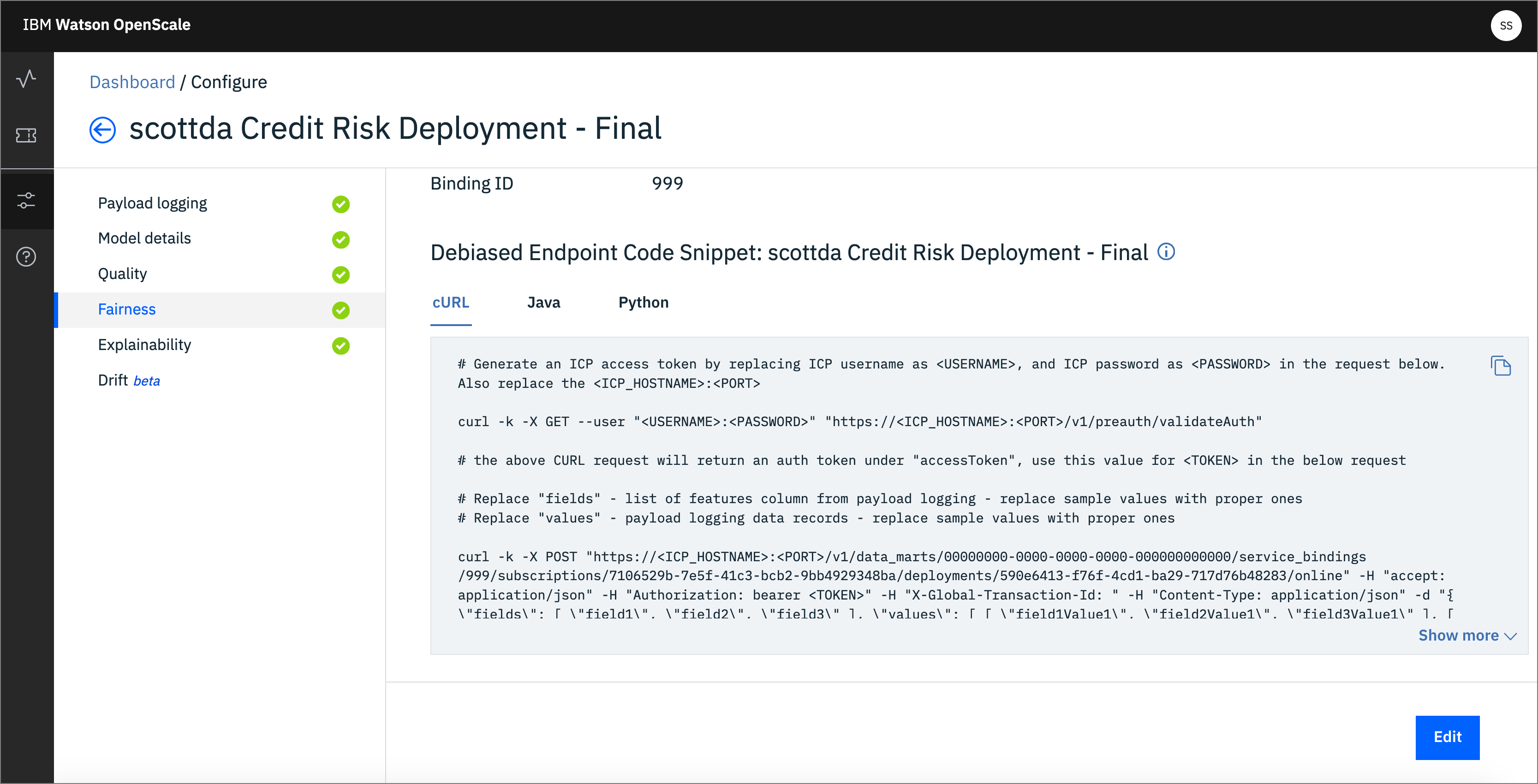

Fairnessmenu, then theDebias Endpointtab. This is the REST endpoint that offers a debiased version of the credit risk ML model, based on the features that were configured (i.e. Sex and Age). It will present an inference, or score, that attempts to remove the bias that has been detected:

- Then scroll down for code examples on how to use the Fairness Debiased endpoint. You can see code snippets using cURL, Java, and Python, which can be used in your scripts or applications to access this debiased endpoint:

- Similarly, you can choose the Quality menu and choose the Feedback tab to get code for Feedback Logging. This provides an endpoint for sending fresh test data for ongoing quality evaluation. You can upload feedback data here or work with your developer to integrate the code snippet provided to publish feedback data to your Watson OpenScale database.
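The cURL, Java, and Python snippets shown on the Debias Endpoint tab are the authoritative reference for calling the debiased endpoint. As a rough sketch of the general shape of such a call, the code below builds (but does not send) an HTTPS POST request with a JSON body. The endpoint URL, the bearer token, the header choices, and the `fields`/`values` payload layout are all placeholders assumed for illustration, and `build_scoring_request` is a hypothetical helper; copy the real values from the dashboard.

```python
import json
import urllib.request


def build_scoring_request(endpoint_url, token, fields, rows):
    """Build a POST request carrying a JSON scoring payload.

    Hypothetical helper; the payload layout is an assumption and should be
    replaced with the one shown in the dashboard snippet.
    """
    if any(len(row) != len(fields) for row in rows):
        raise ValueError("every row must have one value per field")
    body = json.dumps({"fields": fields, "values": rows}).encode("utf-8")
    return urllib.request.Request(
        endpoint_url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",
        },
    )


# Placeholder values: substitute the URL and token for your own deployment.
# Only two features are shown; a real request needs every model feature.
request = build_scoring_request(
    "https://my-cpd-cluster.example.com/placeholder/debiased/endpoint",
    "PLACEHOLDER_TOKEN",
    ["Sex", "Age"],
    [["female", 23]],
)
print(request.get_method(), request.full_url)

# Sending is left commented out because it needs a live cluster:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response))
```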

- Click on the left-hand menu icon for

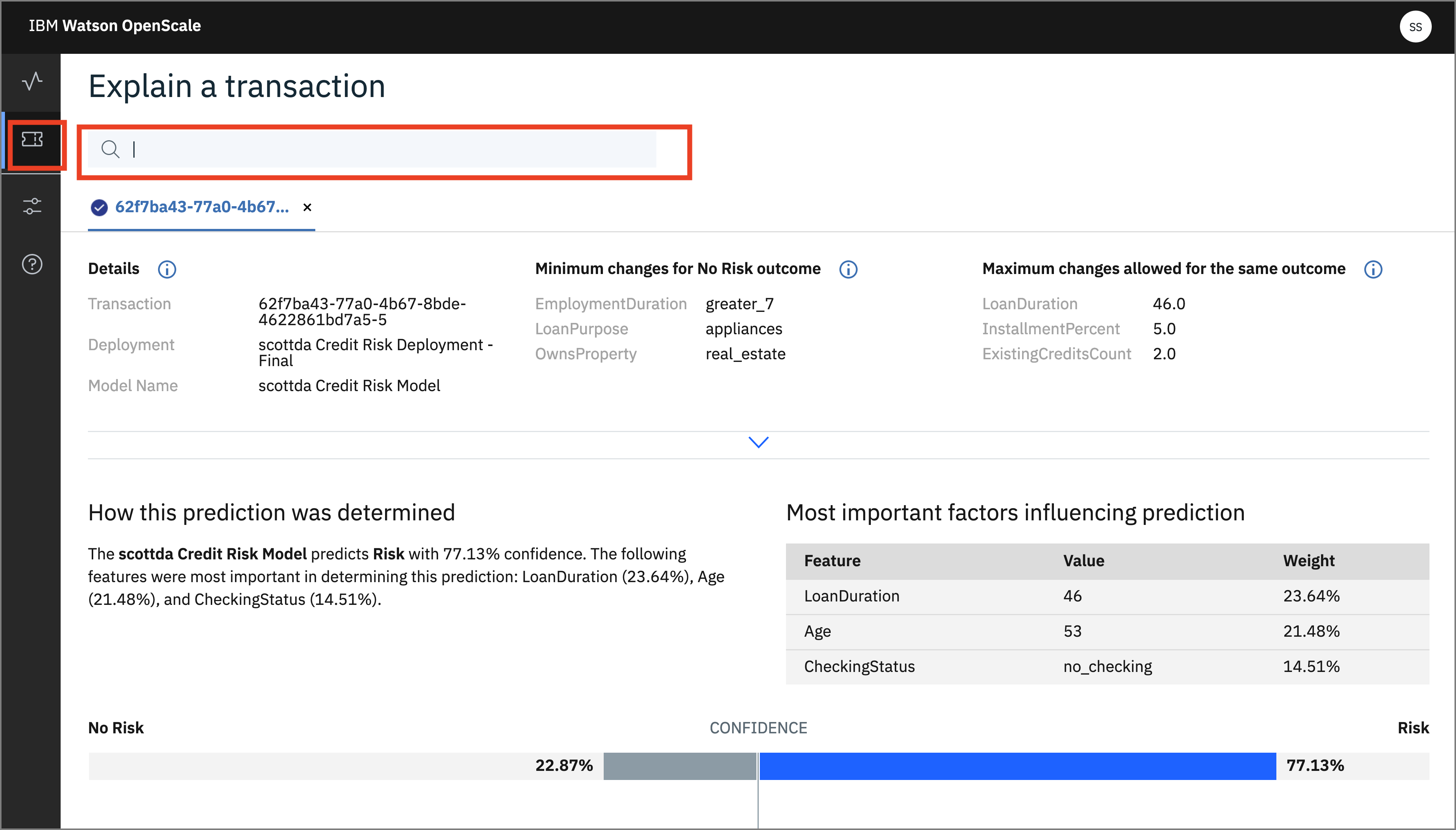

Explain a transactionand click one of the transactions that have been run. These are the transactions that have been cached. Alternately, enter the transaction UID you copied after running the notebook from step 3.

NOTE: Each time you create the Explainability data, the perturbation algorithm sends thousands of requests to the deployed Machine Learning REST endpoint, so the first time this is done can take a few seconds.

- From the info icon next to Details:

Explanations show the most significant factors when determining an outcome. Classification models also include advanced explanations. Advanced explanations are not available for regression, image, and unstructured text models.

- Click on the info icon next to Minimum changes for No Risk outcome and look at the feature values:

Pertinent Negative: If the feature values were set to these values, the prediction would change. This is the minimum set of changes in feature values to generate a different prediction. Each feature value is changed so that it moves towards its median value in the training data.

- Click on the info icon next to Maximum changes allowed for the same outcome and look at the feature values:

Pertinent Positive: The prediction will not change even if the feature values are set to these values. This is the maximum change allowed while maintaining the existing prediction. Each feature value is changed so that it moves towards its median value in the training data.
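The idea of moving feature values toward their training-data median can be illustrated with a toy example. The sketch below is not OpenScale's algorithm: it uses a made-up scoring rule, a made-up median, and a simple one-feature search, all assumed purely for illustration. It looks for the first step toward the median that flips the prediction (in the spirit of a pertinent negative) and the last step that leaves it unchanged (in the spirit of a pertinent positive).

```python
def predict(features):
    """Toy rule standing in for a model: large, long loans are labeled Risk."""
    score = features["amount"] / 1000 + features["duration"] / 12
    return "Risk" if score > 6 else "No Risk"


def move_toward(value, median, fraction):
    """Move value a given fraction of the way toward median (0 = no change)."""
    return value + (median - value) * fraction


def scan_feature(features, name, median, steps=10):
    """Step one feature toward its median and record where the label flips.

    Returns (last value that kept the label, first value that changed it);
    the second item is None if the label never changes.
    """
    original = predict(features)
    last_same = features[name]
    for i in range(1, steps + 1):
        candidate = dict(features)
        candidate[name] = move_toward(features[name], median, i / steps)
        if predict(candidate) != original:
            return last_same, candidate[name]
        last_same = candidate[name]
    return last_same, None


# Made-up transaction and median, for illustration only.
transaction = {"amount": 5000, "duration": 24}
keeps_label, flips_label = scan_feature(transaction, "amount", median=2000)
print(predict(transaction), keeps_label, flips_label)
```

In this toy setup only one feature is varied at a time; the explanations in the dashboard report values across several features at once.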

You can see under Most important factors influencing prediction the Feature, Value, and Weight of the most important factors for this score.

A full breakdown of the factors contributing to either "Risk" or "No Risk" is at the bottom.

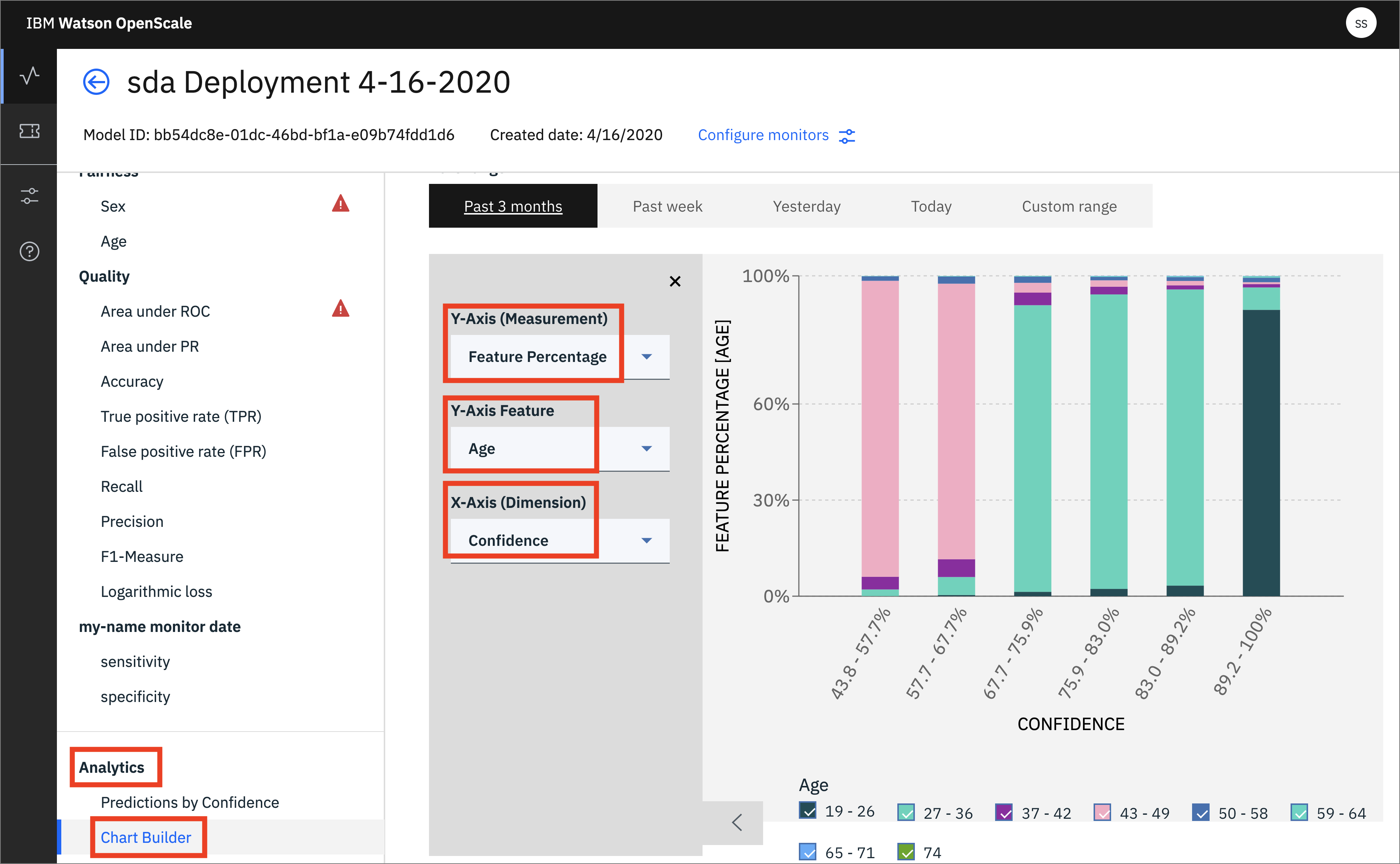

- From the model monitor view, click on Analytics -> Chart Builder. Here you can create charts using various Measurements, Features, and Dimensions of your machine learning model. Change them and examine the charts that are created:

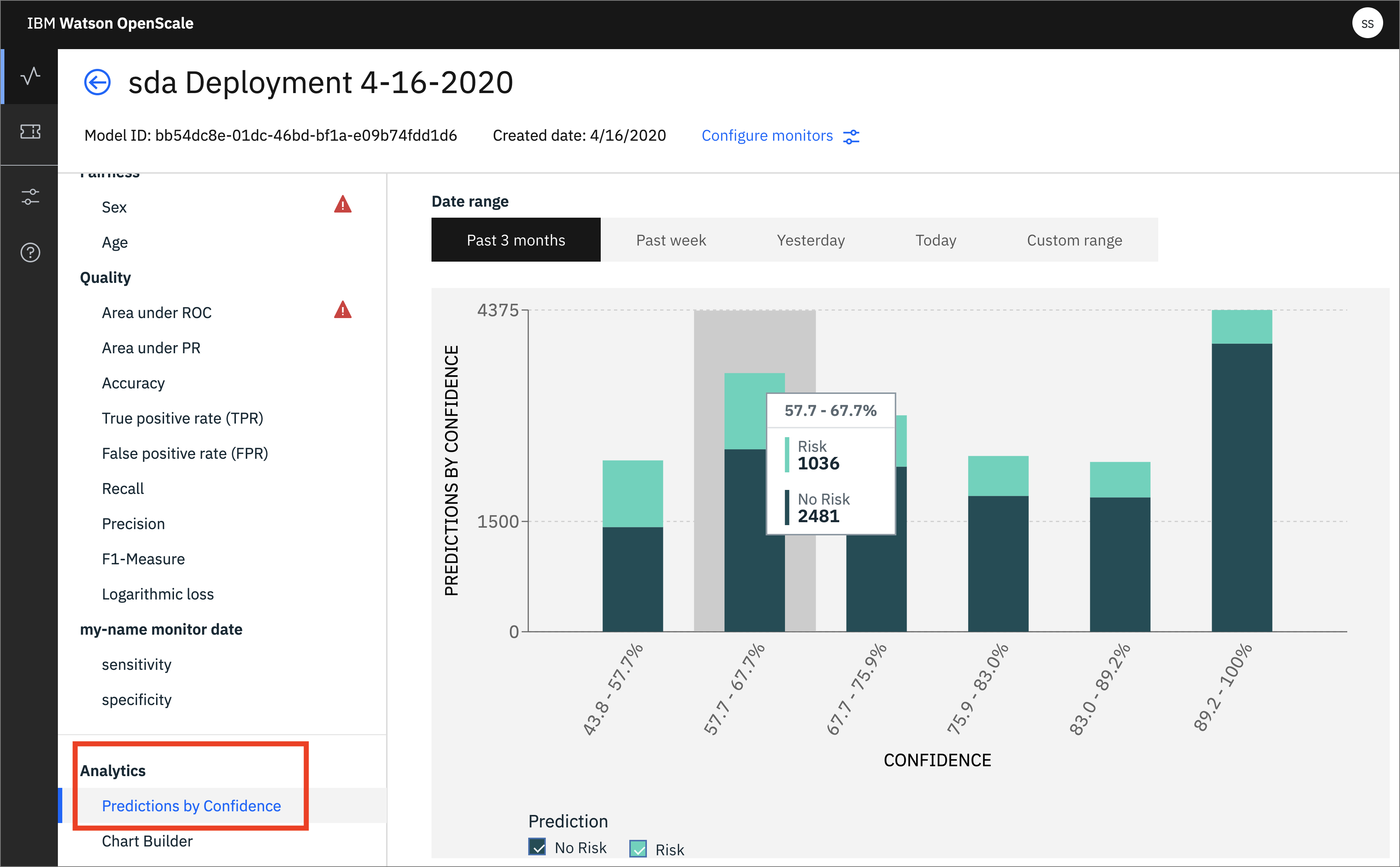

- You can also see a chart that breaks down Predictions by Confidence:

NOTE: Returning to the Cloud Pak for Data requires altering the URL in the browser, so that the cluster name is the only thing present. Remove anything in the browser URL containing /aiopenscale/*.
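As a small illustration of that URL edit, the sketch below keeps only the scheme and host of an address, dropping `/aiopenscale/` and everything after it. The example hostname and path are placeholders, and `cluster_root` is a hypothetical helper name.

```python
from urllib.parse import urlsplit, urlunsplit


def cluster_root(url):
    """Return only the scheme and host of a URL, dropping path, query, and fragment."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme, parts.netloc, "", "", ""))


# Placeholder address for illustration.
print(cluster_root("https://my-cpd-cluster.example.com/aiopenscale/insights?tab=monitors"))
```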