Superpolyak.py

Superpolyak.py is a pytorch implementation of the SuperPolyak subgradient method [1]. SuperPolyak is a first-order method for solving (possibly) nonsmooth equations/optimization problems of the form:

where

SuperPolyak is an unusual first-order method in that when certain minimal assumptions are met, it locally converges superlinearly (i.e., "double exponentially").

Installation

Currently, the only way to install SuperPolyak is to git clone this repository and install it (via pip or conda). We will make it available via PyPI soon.

Example installation using pip

$ git clone https://github.com/COR-OPT/SuperPolyak.py.git

$ python -m pip install -e .Quick demo: SuperPolyakDemo.ipynb.

Outline:

- Two Quick Examples

- What is SuperPolyak doing?

- How to use

- References

Two quick examples

Example 1: Fitting a 1-hidden layer neural network with max-pooling

Let's try to fit a simple neural network with max-pooling to data

The network has

We reformulate this as a root finding problem:

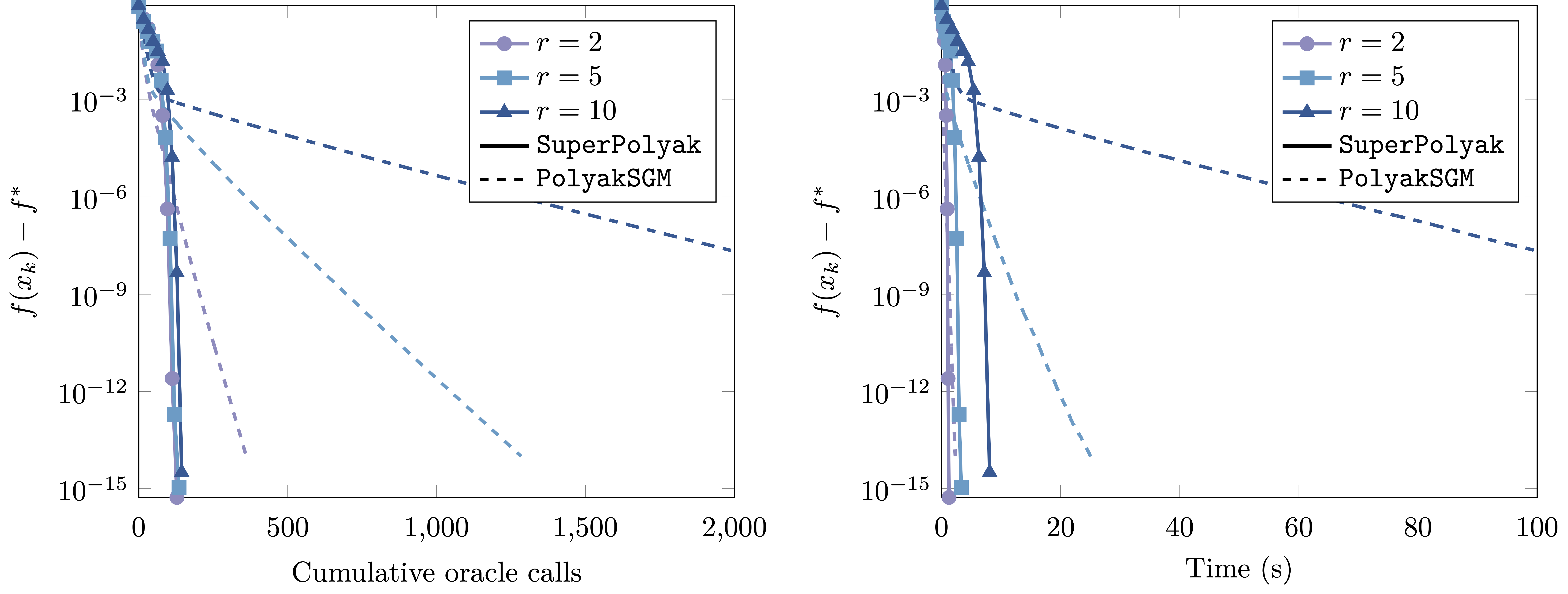

Now let's do a quick experiment:

-

Setup:

- We use Gaussian data.

- We set

$d = 500$ , vary$r$ , and set$m = 3dr$ . - We run SuperPolyak and a standard first-order method (Polyak).

-

Conclusion:

- Superpolyak outperforms Polyak both in terms of time and oracle calls (evals of

$f$ and its gradient).

- Superpolyak outperforms Polyak both in terms of time and oracle calls (evals of

Example 2: Solving a smooth and strongly convex optimization problem

Let's try to solve the following optimization problem:

where

The gradient

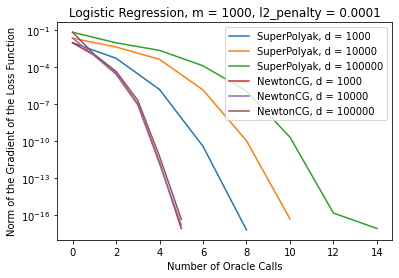

Now let's do a quick experiment:

-

Setup:

- We fit a logistic regression model with l2 regularization to Gaussian data.

- We vary the dimension

$d$ and fix the number of parameters$m$ . - We run SuperPolyak and Newton's method, where the linear system is solved with a conjugate gradient method.

-

Conclusion:

- Superpolyak offers comparable performance to NewtonCG:

- SuperPolyak used slightly more oracle calls;

- SuperPolyak took slightly less time.

- Superpolyak offers comparable performance to NewtonCG:

d SuperPolyak time (s) NewtonCG time (s)

-------------------------------------------------

1000 0.023495912551879883 0.05375099182128906

10000 0.38303589820861816 0.6963608264923096

100000 3.3524928092956543 4.931229829788208

Note: we tried to use existing implementations of NewtonCG, but for the scale of problems considered here, we could not decrease the norm of the gradient to machine tolerance. Thus, we implemented our own version of NewtonCG; it performs on par with existing implementations.

What is SuperPolyak doing?

SuperPolyak is inspired by Newton's method, which is taught in first-year calculus.

Inspiration: Newton's method

Newton's method attempts to find the root of a single-variable function by repeatedly applying the following two steps:

- Construct the tangent approximation of

$f$ at the current iterate - Declare the next iterate to be the root of the tangent approximation.

The following gif shows newton's method in action.

If we start close enough to the root

This super fast. To put this in perspective, around 10 steps gives ~300 digits of accuracy.

k 2^{-2^{k}}

-------------------------------------------------

1 2.50e-01

2 6.25e-02

3 3.91e-03

4 1.53e-05

5 2.33e-10

6 5.42e-20

7 2.94e-39

8 8.64e-78

9 7.46e-155

10 5.56e-309

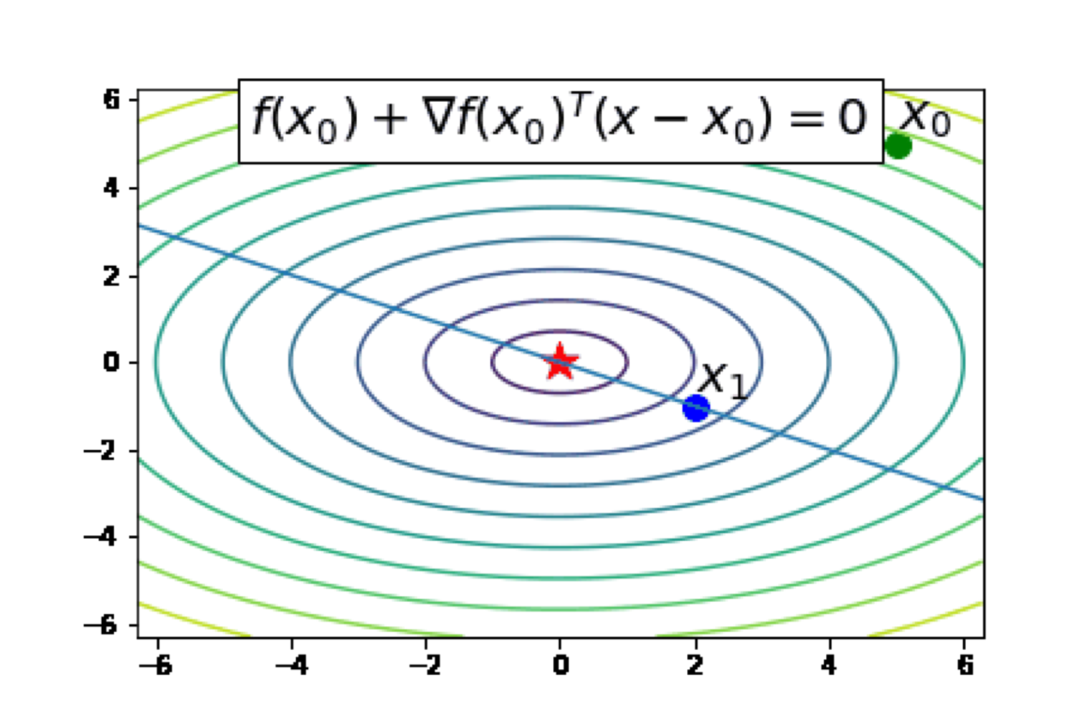

Slow-down of Newton's method in higher dimensions

Now let's consider an example where the dimension

- Construct the tangent approximation of

$f$ at the current iterate - Declare the next iterate to be the nearest root of the tangent approximation.

Here, a qualifier such as nearest root is necessary since there are infinitely many roots of the tangent approximation, as shown in the following contour plot, corresponding to the function

How well does the above Newton's method work? The following animation suggests that it works quite well:

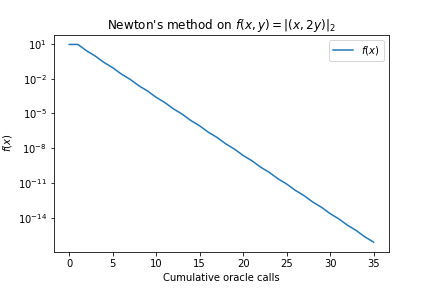

While fast, the following plot shows the method converges only linearly, not superlinearly.

Is it possible to recover the superlinear rate?

The SuperPolyak step: a method for repairing Newton's method in higher dimensions

The issue with Newton's method in higher dimensions is that there are infinitely many roots to the tangent approximation: why should we expect that the nearest root is very close to the solution?

In the interest of fixing this, we ask the following simple question:

What if we choose the next iterate to be a root of several distinct tangent approximations?

For example, suppose we could find

There are infinitely many ways to choose the "several distinct tangent approximations." For example, we could choose the locations randomly or sampled from the past history of the algorithm.

Instead of random, we suggest the following iterative scheme (here

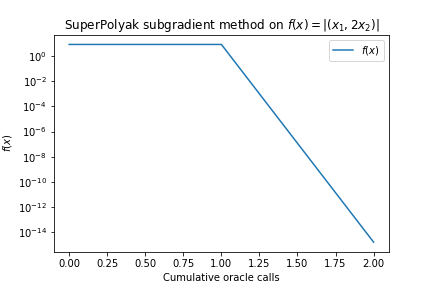

Let's take a look at the algorithm in action:

From the above animation, we see the approach works well, essentially finding the solution in two evaluations of

When does SuperPolyak work?

Two broad families of examples where SuperPolyak locally converges superlinearly:

-

Piecewise linear functions, e.g., for

$$f(\beta_1, \ldots, \beta_r) = \frac{1}{m} \sum_{i=1}^m |y_i - \max_{j \in [r]} \langle a_i, \beta_j\rangle|.$$ -

Generic semialgebraic mappings, e.g., for

$$f_v = |F(x) - v|,$$ where$F$ is a "semialgebraic mapping" and$v$ is a "generic right-hand-side" vector.

More formally, SuperPolyak works under minimal assumptions known as "sharpness" and "semismoothness;" see [1] for a formal discussion.

Practical improvements: early termination of the SuperPolyak step

In [1], we show that SuperPolyak converges superlinearly. However, its naïve implementation could be prohibitively expensive,

since it requires

- Fix a maximum per-step budget, called

max_elt. Then declare the next iterate to be the best among the firstmax_eltpoints$y_i$ . - Fix a "superlinear improvement" exponent

eta_estand exist as soon as one finds a point$y_i$ such that$f(y_i) \leq f(y_0)^{1+ \eta}$ .

Comparison to semismooth Newton

Semismooth Newton's method is the direct generalization of Newton's method to systems of nonsmooth systems of equations:

where

where

Semismooth newton is known to converge superlinearly in several circumstances outlined in [1, 2].

However, for the problems we consider in [1], it converges at most linearly, as we saw for the function

Potential benefits of SuperPolyak over semismooth Newton

If one is presented with a nonsmooth system of equations

There are two reasons to try this:

First, SuperPolyak is known to work under less restrictive assumptions than semismooth Newton.2

Second, with SuperPolyak, one can sometimes get away with solving significantly smaller linear systems than with semismooth Newton. Indeed, let us compare the linear algebra cost of each iteration of SuperPolyak and semismooth Newton. Both methods must solve a linear system at each step. Semismooth Newton solves a system of size

For instance, consider the formulation of Example 1 to which one could apply the semismooth Newton method:

Thus, each step of semismooth Newton would require solving a

How to use

SuperPolyak can be run in two ways:

- Method 1: standalone pytorch optimizer;

- Method 2: coupled with another pytorch optimizer.

Standalone optimizer class

SuperPolyak inherits from the pytorch optimizer class. It implements a single step of the algorithm. It has several additional inputs:

-

max_elts: The maximal size of the linear system to solve at each step. -

eta_est: Exit early if some$y_i$ satisfies$f(y_i) \leq f(y_0)^{1+\eta}$ , with$\eta$ equal toeta_est. -

linsys_solver: how to solve the linear system at each step.-

BundleLinearSystemSolver.LSMR: a solver based on warm-started conjugate gradient. -

BundleLinearSystemSolver.QR:an exact solver based on a compact QR decomposition; see [1] for details.

-

In our experiments, we found both linsys_solvers to have comparable performance.

Coupling with a fallback algorithm (e.g. SGD)

In several applications, it may be desirable to use SuperPolyak to accelerate an

existing optimizer such as SGD. For that purpose, we provide a utility function

that couples SuperPolyak with an arbitrary "fallback" method, implemented as a

class that inherits from torch.optim.Optimizer. Its signature is as follows:

def superpolyak_coupled_with_fallback(

superpolyak_closure: Callable,

fallback_closure: Callable,

superpolyak_optimizer: SuperPolyak,

fallback_optimizer: Optimizer,

max_inner_iter: int,

max_outer_iter: int,

mult_factor: float = 0.5,

tol: float = 1e-16,

verbose: bool = False,

metric_to_print: Optional[Callable] = None,

) -> Tuple[Sequence[int], Sequence[float]]This function runs for max_outer_iter steps. At each step, it attempts a SuperPolyak

step equivalent to

>>> superpolyak_optimizer.step(superpolyak_closure)If this step reduces the loss by at least mult_factor, the function proceeds to the

next step. Otherwise, it calls

>>> fallback_optimizer.step(fallback_closure)for at most max_inner_iter steps before attempting a new SuperPolyak step.

The function terminates if max_outer_iter steps of the above have elapsed,

or if the loss (as measured by the output of superpolyak_closure()) drops

below tol at any given iteration. Upon termination, the function returns

a list containing the cumulative number of calls to the autodifferentiation

oracles as well as list containing the objective function value at each step.

An example of using superpolyak_coupled_with_fallback is given in SuperPolyakDemo.ipynb.

References

[1]: V. Charisopoulos, D. Davis. A superlinearly convergent subgradient method for sharp semismooth problems, Mathematics of Operations Research, to appear. arXiv: https://arxiv.org/abs/2201.04611.

[2]: L. Qi, J. Sun. "A nonsmooth version of Newton's method." Mathematical programming 58.1 (1993): 353-367.

Footnotes

-

This story is somewhat subtle. One could of course reformulate the problem to finding a root of the smooth mapping $F(x,y) = (x,2y)$ and apply the standard Newton method, which would converge superlinearly. However, our goal is to treat the loss function $f(x) = |(x, 2y)|$ as a blackbox, accessible only through gradient and function evaluations. Under this setting, Newton's method only converges linearly. ↩

-

To the best of our knowledge, without assuming further smoothness properties, existing results on superlinear convergence of semismooth Newton methods require the Jacobian to be injective. In contrast, SuperPolyak requires the mapping to be metrically subregular, a weaker property in general. ↩