Network Architecture Search and Expert Designed CNNs for Multi-target Concrete Defect Detection

In this repository, you can find our work and the corresponding report for Project 2 of the Machine Learning course at EPFL. We focus on the concrete crack classification problem as described here, using the provided CODEBRIM dataset.

To see our best trained model, ZenNAS (75.6% multi-target accuracy), make predictions on a toy sample of concrete defect images, simply run run_sample.ipynb. The best weights (75.6%) for ZenNAS are included in this repo at model_scripts/hard_ZenNas_withPretrain.pth, and you can use them directly (the demo loads these weights by default).

Our project requires PyTorch >= 1.6 and TensorFlow == 1.4. Linux and macOS are preferred (you may encounter file-path problems on Windows).

For convenience, we have packaged the whole environment into a Docker image and uploaded it to Docker Hub; you can find it here.

- To search for the models (i.e., ENAS-1, ENAS-2, ENAS-3), please refer to NAS_designed_model/enas.ipynb.

- To train the ENAS-generated models, you need to manually extract the architecture description from the training log; please refer to it here.

- To train the EfficientNet models, please refer to the code in NAS_designed_model/train_crack_efficientNet.ipynb.

- To train the ZenNAS models, please refer to the code in NAS_designed_model/train_crack_zenNAS.ipynb.

- For the expert-designed models, please refer to the code in the folder expert_designed_model.

- For data augmentation and the hyper-parameter grid search, please refer to the code in the folder /data_augmentation and to NAS_designed_model/EfficientNet_grid_search.ipynb. Other analysis files can be found in the folder /tools.

- We evaluate and compare expert-designed and NAS-generated CNN architectures for the multi-target concrete defect classification task, and obtain highly competitive results using ZenNAS with far fewer parameters than the expert-designed models.

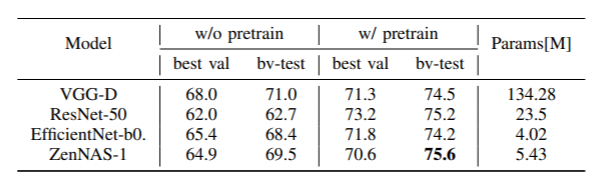

- Further, we show that cross-domain transfer learning can greatly boost model performance: with it, the ZenNAS model achieves the best multi-target accuracy, surpassing the best result of MetaQNN in the original CODEBRIM paper (from 72.2% to 75.6%).

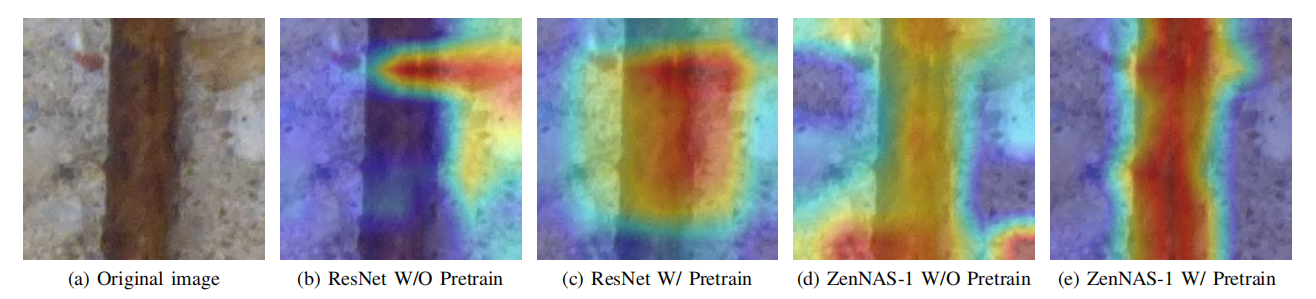

- We validate the performance gain using Grad-CAM to inspect the attention patterns of the last few convolutional layers, which show that the attention of our transferred models is better aligned with the defect areas.

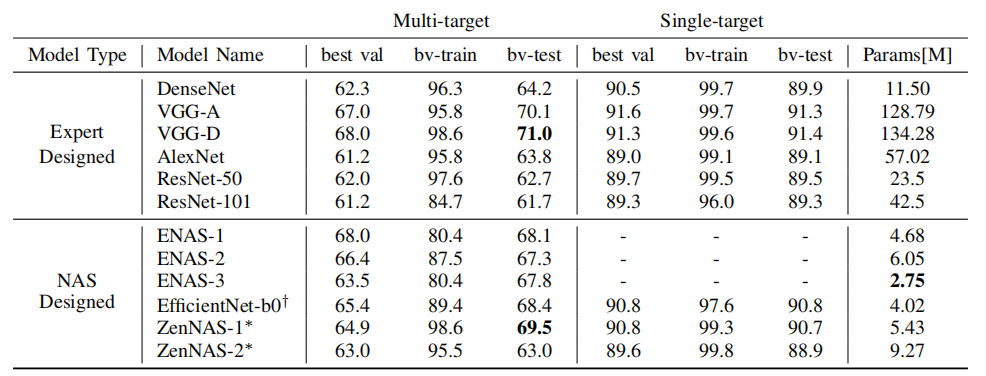

Model evaluation results for the multi-target and single-target scenarios. For each model, we report the best validation result, together with the corresponding training and test accuracy at the same epoch. Models marked with † were searched on the ImageNet dataset, while models marked with (*) were obtained from a data-free zero-shot search.
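To make the headline metric concrete, we assume here that multi-target accuracy follows the exact-match definition of the CODEBRIM paper: an image counts as correct only if every one of its defect labels is predicted correctly. The plain-Python sketch below assumes sigmoid outputs thresholded at 0.5 and six binary labels per image; the function name, threshold, and example values are illustrative and not taken from our training code.

```python
from typing import Sequence


def multi_target_accuracy(
    probs: Sequence[Sequence[float]],
    targets: Sequence[Sequence[int]],
    threshold: float = 0.5,
) -> float:
    """Exact-match accuracy: a sample counts only if all of its labels are right."""
    if len(probs) != len(targets) or not targets:
        raise ValueError("probs and targets must be non-empty and of equal length")
    correct = 0
    for p_row, t_row in zip(probs, targets):
        # Threshold each per-label probability into a 0/1 prediction (assumed 0.5).
        preds = [1 if p >= threshold else 0 for p in p_row]
        if preds == list(t_row):
            correct += 1
    return correct / len(targets)


# Three images with six binary labels each (hypothetical values).
probs = [
    [0.1, 0.9, 0.2, 0.1, 0.0, 0.0],
    [0.2, 0.8, 0.7, 0.1, 0.6, 0.4],  # last label missed, so the whole sample is wrong
    [0.9, 0.1, 0.1, 0.2, 0.1, 0.1],
]
targets = [
    [0, 1, 0, 0, 0, 0],
    [0, 1, 1, 0, 1, 1],
    [1, 0, 0, 0, 0, 0],
]
print(multi_target_accuracy(probs, targets))  # 0.6666666666666666
```

A single wrong label sinks the whole image, which is why multi-target accuracy is much stricter than averaging per-label accuracy.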


Grad-CAM attention patterns in the last convolutional layer. For more examples, please refer to the Appendix of our report.
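The visualizations rely on an adjusted copy of the pytorch_grad_cam package. As a rough illustration of what Grad-CAM computes once a layer's activations and gradients have been captured, here is a NumPy sketch of the standard weighting step: average the gradient of the class score over each feature map, take the weighted sum of the maps, and apply a ReLU. The function name and the (K, H, W) input layout are assumptions; upsampling the map to the input resolution and overlaying it on the image are omitted.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM heatmap for one convolutional layer.

    activations: feature maps A^k of shape (K, H, W).
    gradients:   d(class score)/dA^k, same shape.
    Returns an (H, W) map scaled to [0, 1].
    """
    if activations.shape != gradients.shape or activations.ndim != 3:
        raise ValueError("expected two arrays of identical shape (K, H, W)")
    # Channel weights: global-average-pool the gradients over the spatial dims.
    weights = gradients.mean(axis=(1, 2))  # shape (K,)
    # Weighted combination of the feature maps; ReLU keeps positive evidence only.
    cam = np.maximum(np.einsum("k,khw->hw", weights, activations), 0.0)
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Toy layer with two 2x2 feature maps (hypothetical numbers).
acts = np.array([[[1.0, 0.0], [0.0, 0.0]],
                 [[0.0, 0.0], [0.0, 1.0]]])
grads = np.stack([np.ones((2, 2)), -np.ones((2, 2))])
print(grad_cam(acts, grads))
```

Here the first map gets a positive weight and the second a negative one, so only the top-left cell, where the positively weighted map fires, survives the ReLU.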


Pretrained models are initialized with weights trained on the ImageNet dataset and then retrained on the CODEBRIM dataset.

.
├── NAS_designed_model
│ ├── EfficientNet_grid_search.ipynb
│ ├── enas.ipynb
│ ├── train_crack_efficientNet.ipynb
│ └── train_crack_zenNAS.ipynb
├── data_augmentation
│ ├── data_analysis.ipynb
│ ├── data_augmentation.ipynb
│ └── datasets.py
├── expert_designed_model
│ ├── train_crack_resnet.ipynb
│ └── train_crack_vgg_AlexNet_DenseNet.ipynb
├── model_scripts
│ ├── hard_ZenNas_withPretrain.pth
│ ├── hard_ZenNas_withoutPretrain.pth
├── sample
│ ├── defects
│ └── defects.xml
├── tools
│ ├── Image_banlance.pkl
│ ├── NAS_result_analysis.ipynb
│ ├── ZenNas_example.py
│ ├── copy_balance.ipynb
│ ├── datasets.py
│ ├── focal_loss.py
│ ├── model_acc_param.csv
│ ├── result_analysis.ipynb
├── README.md
├── P2_Report_Patricks.pdf
├── run_sample.ipynb
├── ref_codes
├── cam_test
└── train_log

run_sample.ipynb: A simple workflow that uses the ZenNAS-1 model as an example to give a quick overview of our project.

sample: Includes five sample pictures and their labels.

NAS_designed_model: Notebook scripts for training the ENAS, ZenNAS, and EfficientNet models. It also includes a script for a grid search over the hyper-parameters batch size, patch size, and weight decay using EfficientNet.
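A grid search evaluates every combination of the candidate values. The framework-agnostic sketch below shows that loop over batch size, patch size, and weight decay; train_and_evaluate is a hypothetical placeholder for a full EfficientNet training run that returns a validation accuracy, and the grid values are illustrative only, not the ones used in EfficientNet_grid_search.ipynb.

```python
import itertools
from typing import Callable, Dict, Iterable, Optional, Tuple

Config = Dict[str, float]


def grid_search(
    train_and_evaluate: Callable[[int, int, float], float],
    batch_sizes: Iterable[int],
    patch_sizes: Iterable[int],
    weight_decays: Iterable[float],
) -> Tuple[Optional[Config], float]:
    """Try every (batch size, patch size, weight decay) combination; keep the best."""
    best_cfg: Optional[Config] = None
    best_acc = float("-inf")
    for bs, ps, wd in itertools.product(batch_sizes, patch_sizes, weight_decays):
        acc = train_and_evaluate(bs, ps, wd)  # e.g. best validation accuracy of the run
        if acc > best_acc:
            best_cfg = {"batch_size": bs, "patch_size": ps, "weight_decay": wd}
            best_acc = acc
    return best_cfg, best_acc


# Hypothetical stand-in for a real training run, peaking at (32, 224, 1e-4).
def fake_train_and_evaluate(bs: int, ps: int, wd: float) -> float:
    return 0.75 - abs(bs - 32) / 1000 - abs(ps - 224) / 10000 - wd


best, acc = grid_search(fake_train_and_evaluate, [16, 32, 64], [192, 224, 256], [1e-4, 5e-4])
print(best)
```

The cost grows multiplicatively with each added hyper-parameter (here 3 × 3 × 2 = 18 runs), which is why such searches are usually kept to a handful of values per axis.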

expert_designed_model: Notebook scripts for training the ResNet, VGG, AlexNet, and DenseNet models.

data_augmentation:

- data_analysis.ipynb: Our code for analyzing the data distribution and image resolution.
- datasets.py: Defines the transforms used in the dataloader. It includes 9 methods: RandomHorizontalFlip, RandomVerticalFlip, RandomRotation, RandomResizedCrop, RandomPerspective, GaussianBlur, RandomAdjustSharpness, random_select, and normalize.
- data_augmentation.ipynb: The training framework, in which we get data from the dataloader that we modified in datasets.py.
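The random_select method is listed alongside the torchvision-style transforms. Its code is not reproduced here; the sketch below shows one plausible behavior under our own assumption, namely applying a single augmentation chosen uniformly at random per image (similar in spirit to torchvision's transforms.RandomChoice). It uses NumPy arrays of shape (H, W, C), and the helpers hflip, vflip, and rot90 are hypothetical stand-ins for the real transforms.

```python
import random
from typing import Callable, Optional, Sequence

import numpy as np

Transform = Callable[[np.ndarray], np.ndarray]


def hflip(img: np.ndarray) -> np.ndarray:
    return img[:, ::-1]  # mirror along the width axis


def vflip(img: np.ndarray) -> np.ndarray:
    return img[::-1, :]  # mirror along the height axis


def rot90(img: np.ndarray) -> np.ndarray:
    return np.rot90(img)  # rotate the (H, W) plane by 90 degrees


def random_select(transforms: Sequence[Transform],
                  rng: Optional[random.Random] = None) -> Transform:
    """Hypothetical: return a transform that applies ONE randomly chosen augmentation."""
    if not transforms:
        raise ValueError("need at least one transform")
    rng = rng or random.Random()

    def apply(img: np.ndarray) -> np.ndarray:
        return rng.choice(transforms)(img)

    return apply


augment = random_select([hflip, vflip, rot90], random.Random(0))
image = np.arange(12).reshape(2, 2, 3)  # toy 2x2 RGB image
out = augment(image)
print(out.shape)  # (2, 2, 3)
```

Applying one randomly chosen transform per image keeps augmentations from compounding, at the cost of less diversity than chaining several random transforms.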

model_scripts: Includes the model structure produced by the ZenNAS method and the parameter weight files.

tools:

- NAS_result_analysis.ipynb: Analyzes the NAS results, such as the agent's search reward.
- ZenNas_example.py: Functions for drawing and generating the results in run_sample.ipynb.
- copy_balance.ipynb: An over-sampling method to address class imbalance.
- focal_loss.py: Implements focal loss to address class imbalance.
- result_analysis.py: Summarizes the results and analyzes them comprehensively in terms of accuracy and the number of model parameters.
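Focal loss counters class imbalance by down-weighting easy, well-classified examples. For reference, here is a plain-Python sketch of the standard sigmoid (binary) focal loss of Lin et al., FL(p_t) = -α_t (1 - p_t)^γ log(p_t), applied independently to each label as suits a multi-label task. The defaults α = 0.25 and γ = 2 come from that paper and are assumptions here; the values and tensor-based implementation in focal_loss.py may differ.

```python
import math
from typing import Sequence


def binary_focal_loss(
    probs: Sequence[Sequence[float]],
    targets: Sequence[Sequence[int]],
    alpha: float = 0.25,
    gamma: float = 2.0,
    eps: float = 1e-7,
) -> float:
    """Mean sigmoid focal loss over every (sample, label) pair."""
    total, count = 0.0, 0
    for p_row, y_row in zip(probs, targets):
        for p, y in zip(p_row, y_row):
            p = min(max(p, eps), 1.0 - eps)  # keep log() finite
            p_t = p if y == 1 else 1.0 - p  # probability assigned to the true outcome
            alpha_t = alpha if y == 1 else 1.0 - alpha
            # (1 - p_t)^gamma shrinks the loss of well-classified labels.
            total += -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)
            count += 1
    if count == 0:
        raise ValueError("empty input")
    return total / count


easy = binary_focal_loss([[0.9]], [[1]])  # confident and correct
hard = binary_focal_loss([[0.1]], [[1]])  # confident and wrong
print(easy < hard)  # True
```

With γ = 0 and α = 0.5 the expression reduces to half the ordinary binary cross-entropy, which is a convenient sanity check.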

ref_codes: All the reference code from other repositories used by this project:

- pytorch_grad_cam: An adjusted Python package implementing Grad-CAM.
- ZenNAS: Code for the ZenNAS search methods, with the path to an ImageNet-pretrained sample model. Original paper.
- ENAS: Code for the ENAS search scripts. Original paper.

train_log: Includes almost all the training logs from our experiments, as well as the tools to generate accuracy and loss curves during training.