LiteLLM Config Generator

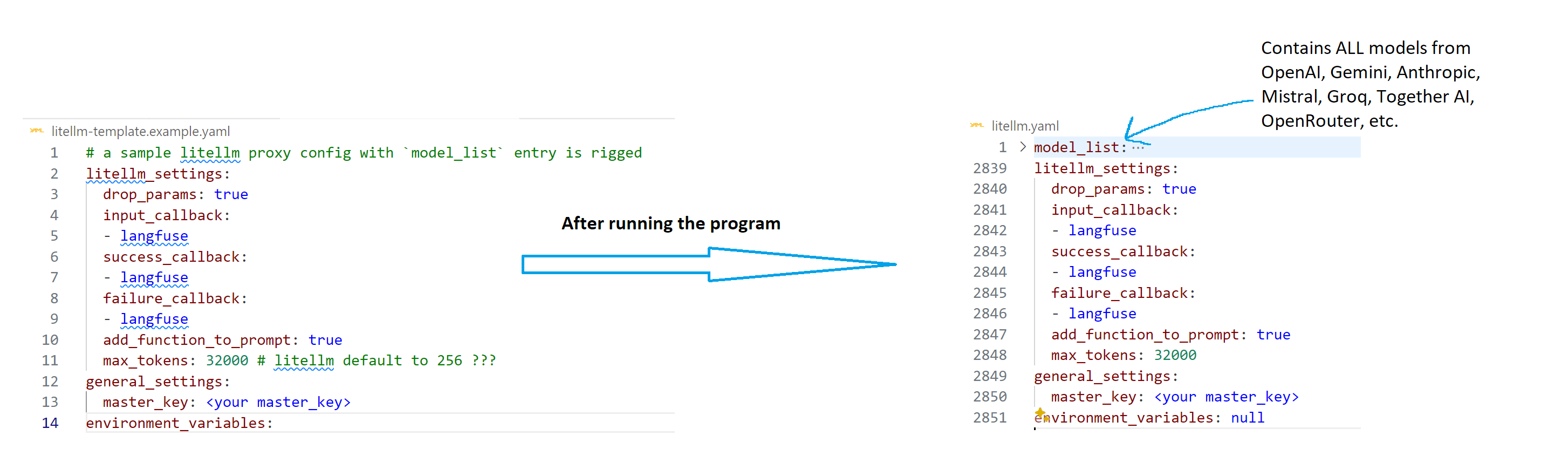

A helper Python program that generates a configuration file for the LiteLLM proxy.

Provide a LiteLLM Proxy configuration file in YAML with model_list removed, then run this program as described below to have model_list filled in from templates.

If you have both VSCode and Docker installed, you can use the .devcontainer directory to set up a dev container. It contains all the necessary Python-related plugins to help you modify this project. (It is also the environment I used to develop this project.)

Otherwise, install a recent Python 3.x on your system.

Follow the steps below to set up the environment:

- First, create a virtual environment:

      python3 -m venv .venv

- Activate the virtual environment.

  On Windows:

      .venv\Scripts\activate

  On Unix or macOS:

      source .venv/bin/activate

- Install the required packages:

      pip install -r requirements.txt

Copy the config.example.yaml file to config.yaml, and the litellm-template.example.yaml file to litellm-template.yaml.

Fill in the config.yaml file with your own configuration.

The litellm-template.yaml file is where you put your LiteLLM configuration with model_list removed.
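Conceptually, the generator takes the template and adds a generated model_list to it. The following is only a minimal sketch of that idea, not the project's actual code: `fill_template` is a hypothetical helper, the dictionaries stand in for parsed YAML, and the entry layout follows LiteLLM's documented `model_name` / `litellm_params` format.

```python
import copy


def fill_template(template: dict, model_list: list) -> dict:
    """Return a copy of the template config with model_list added."""
    if "model_list" in template:
        # Choice made for this sketch only: refuse to overwrite an existing list.
        raise ValueError("template should not already contain model_list")
    config = copy.deepcopy(template)
    config["model_list"] = list(model_list)
    return config


# Stand-in for the parsed contents of litellm-template.yaml (values are illustrative).
template = {"litellm_settings": {"drop_params": True}}

# Stand-in for the entries the generator would produce (model id is a placeholder).
generated = [
    {
        "model_name": "example-model",
        "litellm_params": {
            "model": "openai/example-model",
            "api_key": "os.environ/OPENAI_API_KEY",
        },
    }
]

config = fill_template(template, generated)
```

The template itself is left unmodified, so the same template can be reused for repeated runs.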

The config_generator/src/component/model_poper.py file contains the logic to generate the model_list. It delegates the generation to the different AbstractLLMPoper implementations defined in the config_generator/src/component/llm_poper directory.
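The delegation described above might look roughly like the sketch below. This README does not show the real interface, so `LLMPoperSketch`, `pop_models`, `StaticPoper`, and `build_model_list` are hypothetical names chosen for illustration, and the model id is a placeholder.

```python
from abc import ABC, abstractmethod


class LLMPoperSketch(ABC):
    """Hypothetical stand-in for AbstractLLMPoper; the real interface may differ."""

    @abstractmethod
    def pop_models(self) -> list:
        """Return the model_list entries for one provider."""


class StaticPoper(LLMPoperSketch):
    """Hypothetical provider with a fixed list of model ids (no API call)."""

    def __init__(self, provider: str, model_ids: list, api_key_env: str):
        self.provider = provider
        self.model_ids = model_ids
        self.api_key_env = api_key_env

    def pop_models(self) -> list:
        # One entry per model id, in LiteLLM's model_list layout.
        return [
            {
                "model_name": model_id,
                "litellm_params": {
                    "model": f"{self.provider}/{model_id}",
                    "api_key": f"os.environ/{self.api_key_env}",
                },
            }
            for model_id in self.model_ids
        ]


def build_model_list(popers: list) -> list:
    """Concatenate the entries produced by each provider-specific poper."""
    model_list = []
    for poper in popers:
        model_list.extend(poper.pop_models())
    return model_list


example = build_model_list(
    [StaticPoper("anthropic", ["example-model-a"], "ANTHROPIC_API_KEY")]
)
```

A provider that supports fetching its model list would replace the fixed `model_ids` with the result of a call to that provider's API.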

So far we have the following AbstractLLMPoper implementations:

| Provider | Implementation | Supports Fetching Model List |
|---|---|---|
| OpenAI | config_generator/src/component/llm_poper/openai.py | Yes |
| Google | config_generator/src/component/llm_poper/google.py | Yes |
| Anthropic | config_generator/src/component/llm_poper/anthropic.py | No |
| Mistral | config_generator/src/component/llm_poper/mistral.py | Yes |
| Groq | config_generator/src/component/llm_poper/groq.py | Yes |
| GitHub Copilot | config_generator/src/component/llm_poper/copilot.py | No |
| TogetherAI | config_generator/src/component/llm_poper/togetherai.py | Yes |
| OpenRouter | config_generator/src/component/llm_poper/openrouter.py | Yes |

You may be interested in modifying the template in each AbstractLLMPoper implementation to suit your needs.
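As an illustration of what such a template customization could involve, here is a small sketch that fills a `{model_id}` placeholder in a nested entry template. The placeholder syntax, `render_entry`, and `GROQ_TEMPLATE` are assumptions made for this example; check the actual implementation files for how their templates are defined.

```python
def render_entry(template, model_id: str):
    """Recursively replace "{model_id}" in every string of a nested template."""
    if isinstance(template, str):
        return template.replace("{model_id}", model_id)
    if isinstance(template, dict):
        return {key: render_entry(value, model_id) for key, value in template.items()}
    if isinstance(template, list):
        return [render_entry(item, model_id) for item in template]
    return template  # numbers, booleans, and None are left untouched


# Hypothetical per-provider template; editing it changes every generated entry.
GROQ_TEMPLATE = {
    "model_name": "groq-{model_id}",
    "litellm_params": {
        "model": "groq/{model_id}",
        "api_key": "os.environ/GROQ_API_KEY",
    },
}

entry = render_entry(GROQ_TEMPLATE, "example-model")
```

Changing the `model_name` pattern in the template, for instance, would rename every model exposed by the proxy for that provider without touching the generation logic.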

Run the program:

    ./run.sh

The output LiteLLM configuration file will be saved to io.output-file.